Best Practices in Jitter, Part 1

Jitter was first released in 2002. At the time, it provided some of the most comprehensive and intuitive ways of working with video and image processing in real time. In the years since, the computing landscape has changed dramatically. This series will focus on ways to maximize performance and efficiency in Jitter by laying out some current best practices. In this first article we're going to look at the following topics:

Why textures are important and how to use them

How to efficiently preview an OpenGL scene or video process in your patch

When to choose a matrix over a texture

How to minimize the impact of matrices on system resources

Matrices or Textures?

One of the most important changes to computers since Jitter's release is the general move away from faster and faster CPUs toward faster GPUs - the graphics processing unit cards in your system. Jitter has the tools to leverage your machine's power, but you'll need to learn some new techniques to access them. Much of what was once handled by the CPU in linear sequence in matrix form can now be done in parallel on a GPU in a fraction of the time using textures. For most users, switching to textures will yield significant (and immediate) performance gains. However, there are certain kinds of processing — computer vision, for example — that rely on analyzing images to detect features or objects. Those kinds of operations still require the use of matrix data, so it’s important to understand both approaches going forward.

Textures and Shaders

What is a texture and why is it important? For the purposes of this article, you can think of textures simply as images stored in buffers and processed by shaders on the GPU. To take advantage of textures you need to use specific Jitter objects. The following objects provide support for textures in Jitter:

The jit.gl.texture object is simply a storage space for a texture. It handles the uploading of matrix data to the texture’s buffer on the GPU, along with fast copying of textures sent to its input. You can think of the jit.gl.texture object as the GPU equivalent of the jit.matrix object.

The jit.gl.slab and jit.gl.pix objects provide the core of any GPU image processing patch. Both of these objects encapsulate a texture buffer and shader program — they can take a matrix or texture as input to fill the buffer and then use a specified shader to process it in a variety of ways.

Shaders are simply image filters that are processed on the GPU. The jit.gl.slab object loads and runs Jitter shader files, while the jit.gl.pix object uses Gen-based patching to do its work; otherwise the two objects are identical in terms of performing GPU-based processing. While it is possible (and rewarding) to write your own shaders or to do your own GPU-based processing using the jit.gl.pix object (as described in these introductory and advanced tutorials), many Jitter matrix processing objects have corresponding Jitter shaders or Gen patchers that you can use as drop-in replacements for the Jitter objects you know and love.
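To make the "image filter" idea concrete, here is a minimal conceptual sketch in Python (not Jitter code). It treats a filter as a function of a single pixel and applies it to every pixel independently, which is the property that lets a GPU run the work in parallel. The function names and the brightness/contrast formula are hypothetical illustrations; they are not the formula used by jit.brcosa or by any shipped Jitter shader.

```python
# Conceptual sketch only: a "shader" modelled as a pure per-pixel function.
# The formula below is a hypothetical brightness/contrast filter, not the
# implementation used by any Jitter object.

def brightness_contrast(pixel, brightness=1.0, contrast=1.0):
    """Filter one RGB pixel with channels in the range 0.0 to 1.0."""
    return tuple(
        min(1.0, max(0.0, ((c - 0.5) * contrast + 0.5) * brightness))
        for c in pixel
    )

def apply_filter(image, pixel_fn):
    """Apply pixel_fn to every pixel of a row-major image.

    Each output pixel depends only on its own input pixel, so the calls
    could run in any order, or all at once, as they would on a GPU.
    """
    return [[pixel_fn(p) for p in row] for row in image]

image = [
    [(0.25, 0.25, 0.25), (0.5, 0.5, 0.5)],
    [(0.75, 0.75, 0.75), (0.5, 0.5, 0.5)],
]
result = apply_filter(image, lambda p: brightness_contrast(p, contrast=2.0))
print(result)
```

On the CPU this loop visits pixels one after another; a shader expresses only the per-pixel function and leaves the iteration to the GPU.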

A list of object equivalents can be found here.

Using jit.playlist, jit.movie and jit.grab with textures

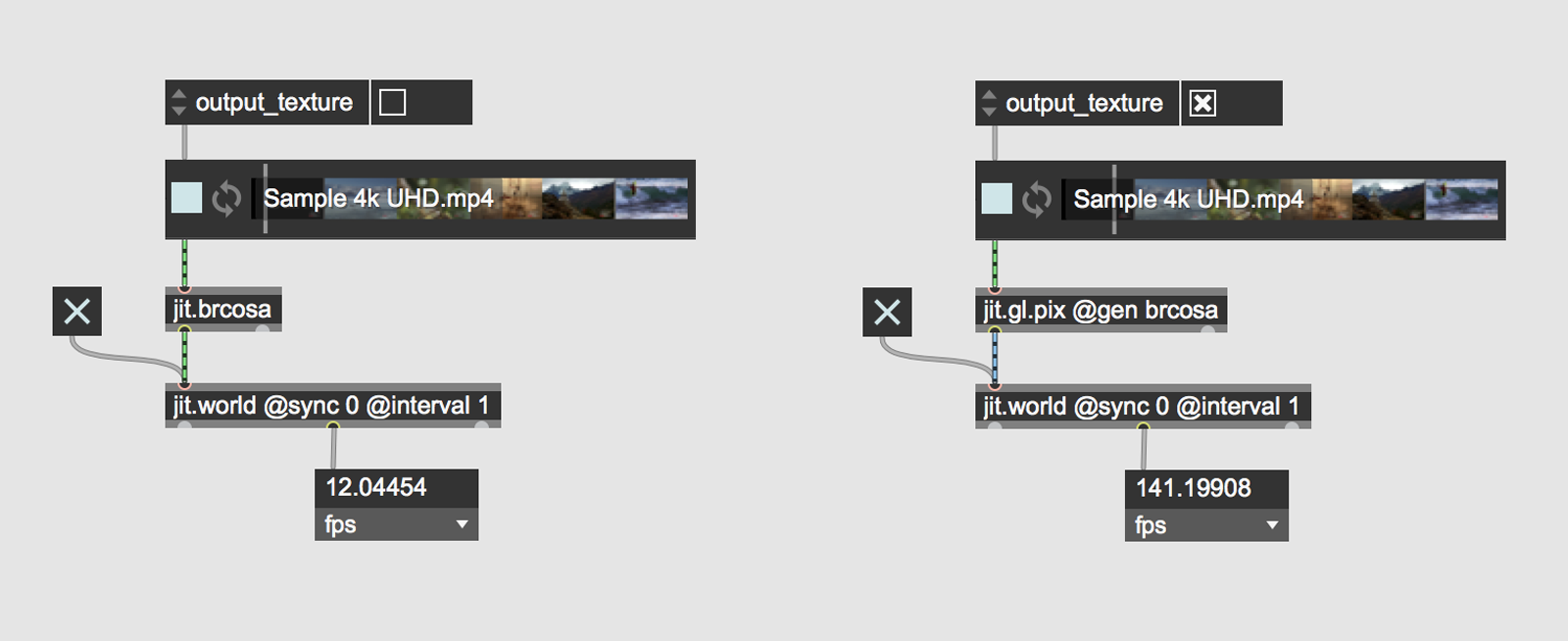

The jit.playlist, jit.movie and jit.grab objects that you commonly use as input sources for your Jitter patches can all be configured to output textures rather than matrices by setting the @output_texture attribute to 1. In many cases, the performance gains from switching to texture processing will be substantial. For example, on a mid-range laptop, playing back a 4K video through a simple jit.brcosa filter shows a startling performance difference between matrix and texture processing.

Vizzie modules and textures

As of Max 8, all Vizzie effect modules process and output textures automatically. Opening these up and looking at the contained shaders and Gen files can be a great way to deepen your understanding of texture processing.

Reworking Matrix-based Patches

Now that you know why you’d want to use textures instead of matrices, the next question is how. For most matrix-based patches, all that’s required is replacing the jit.window object with jit.world, using the @output_texture 1 attribute on any video objects, and then replacing matrix objects with their jit.gl.slab or jit.gl.pix equivalents.

Viewing your work

[ edit - With the release of Max 8.1.4 the following section is no longer relevant. Users are advised to use jit.pwindow for all previewing needs. ]

Preview windows, usually in the form of jit.pwindow, are among the most common elements in any Jitter patch. We use them for everything from checking the state of the image at a particular point in an effect chain to having a preview of the final output during a performance. And if you are like most of us, they are probably scattered across your patchers and subpatchers. The problem is that without the proper settings these preview windows are often inefficient and have a strong impact on overall performance.

We could walk you through how to add a second shared render context to efficiently view texture data, but there’s a much easier drop-in solution: simply replace all jit.pwindow objects in your patch with a Vizzie VIEWR module. In many cases, this is all you need to do to considerably speed up an existing patch. The VIEWR module provides high-performance texture preview functionality, whereas the jit.pwindow object requires a matrix readback to display textures. Another great thing about the Vizzie VIEWR module is that it’s simply a patcher abstraction, and therefore modifiable to suit your needs. To make a new customized preview window based on the VIEWR module, open the vz.viewr patcher (search the File Browser for vz.viewr.maxpat), edit it and resave it to your user Library folder, create a new bpatcher and load your customized patch, then save the bpatcher as a snippet. Here's an example

Matrices

Given all the benefits of using textures, you may wonder why the matrix objects are still included in Jitter. The reason is that certain techniques and operations are only possible using matrices. Things like computer vision (cv.jit family of objects, jit.findbounds) and analysis (jit.3m, jit.histogram) are only possible using matrix objects on the CPU. Matrices are also used extensively to store and process geometry data for jit.gl.mesh. There may also be cases where the matrix objects simply work better or match a desired look better than their texture equivalents. Therefore the trick is knowing how to use matrix objects effectively.

Minimize Uploads and Readbacks

There are two basic rules to follow to get the best performance out of your patch when working with textures and matrices:

Minimize data copies from CPU to GPU (uploads) and ensure they happen as early in your processing chain as possible. When possible, use the output_texture attribute.

Minimize data copies from GPU to CPU (readbacks) and ensure they happen at the smallest dimensions possible.
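A rough back-of-the-envelope calculation shows why the dimensions of a transfer matter so much. The sketch below is plain Python arithmetic, not Jitter code. It assumes an uncompressed 4-plane, 8-bit-per-plane image (the usual Jitter video matrix layout), a 3840 x 2160 source, a hypothetical 160 x 120 analysis size and a hypothetical 30 frames per second; real transfer costs also depend on the driver and hardware.

```python
# Raw size of one uncompressed frame, and what that means per second.
# Assumptions (illustrative only): 4 planes, 1 byte per plane, 30 fps,
# a 160 x 120 downsampled size chosen for the comparison.

def frame_bytes(width, height, planes=4, bytes_per_plane=1):
    """Return the raw size in bytes of one uncompressed frame."""
    return width * height * planes * bytes_per_plane

FPS = 30

full_4k = frame_bytes(3840, 2160)   # full-resolution 4K frame
small = frame_bytes(160, 120)       # downsampled frame for analysis

print("4K frame:    ", full_4k, "bytes,", full_4k * FPS, "bytes per second")
print("160x120 frame:", small, "bytes,", small * FPS, "bytes per second")
print("size ratio:   ", full_4k // small)
```

Under these assumptions a full-resolution readback moves roughly 33 MB per frame, while the downsampled one moves under 80 KB, a factor of 432 less data crossing between GPU and CPU every frame.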

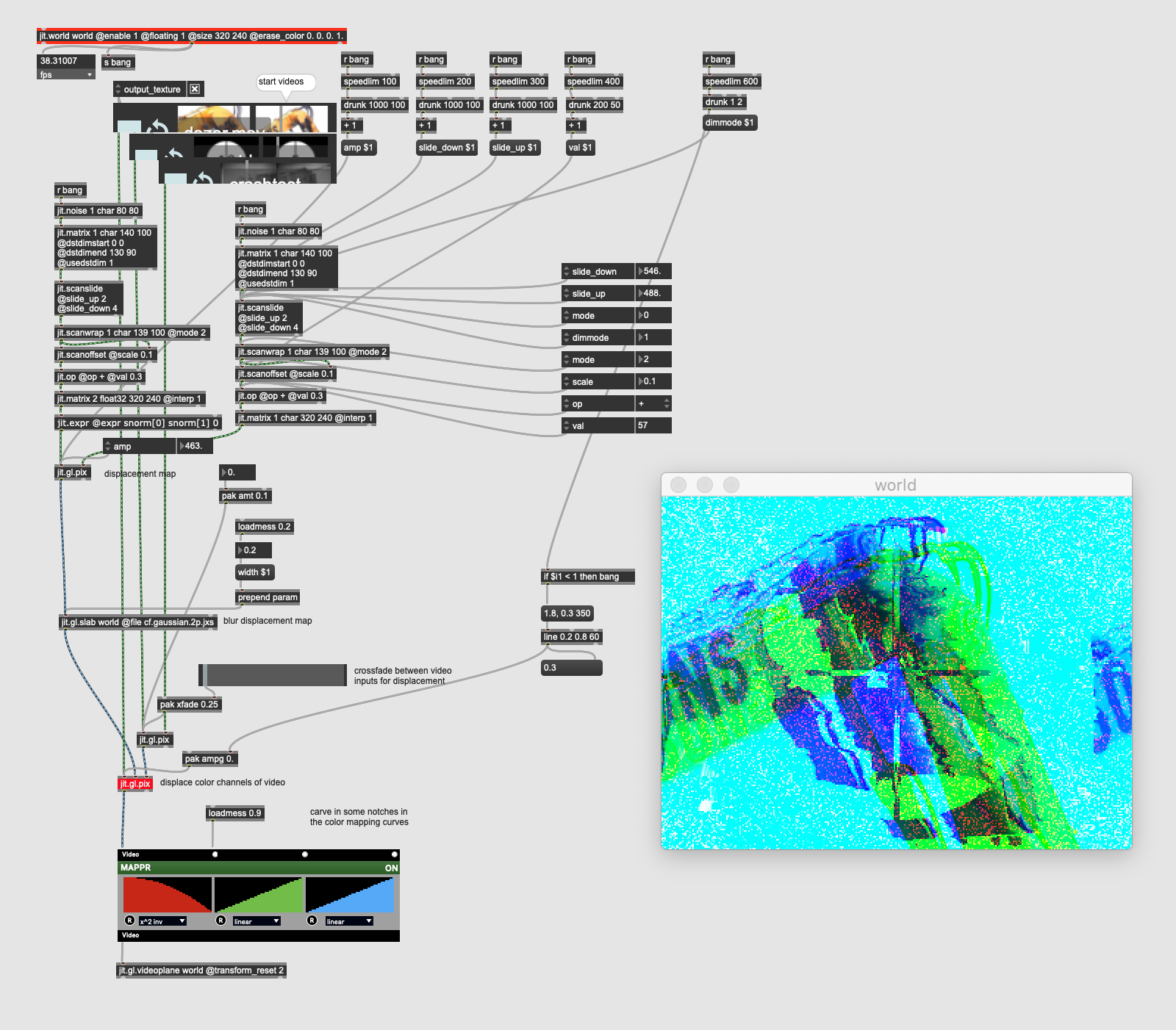

An example of a data upload is loading an image file with the importmovie message of jit.matrix, and sending that directly to a jit.gl.texture object. Once the image data is uploaded to the texture object, it should only be sent to texture processing objects (jit.gl.slab / jit.gl.pix) or sent to some geometry for display (jit.gl.videoplane). For a real-world example, the following patch, posted by user Martin Beck in this epic thread on glitch techniques on the Jitter forum, demonstrates the concept.

The matrix operations happen early in the chain, the results are uploaded to the GPU and from that point forward all processing is done using texture objects.

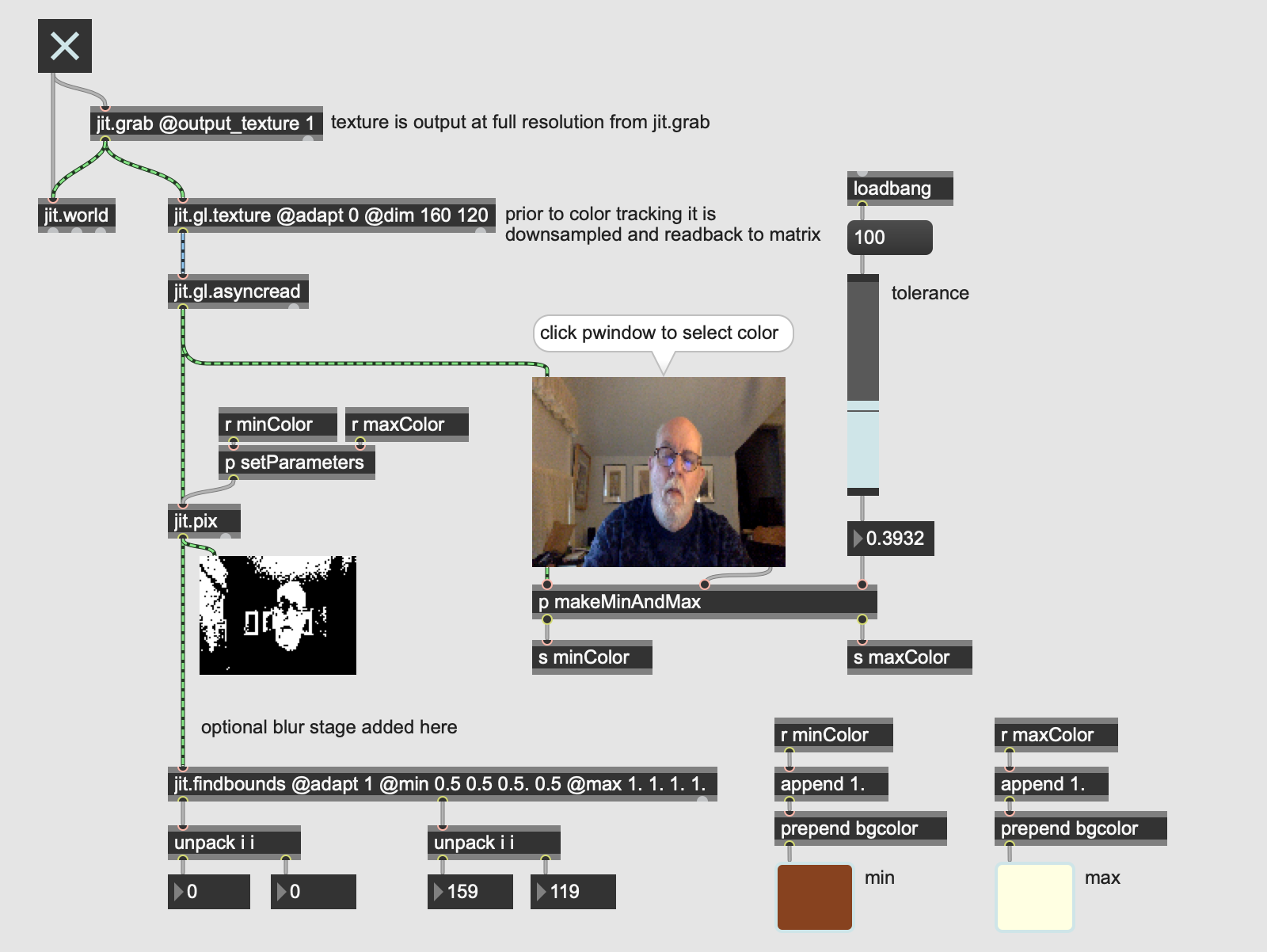

For an example of best practices with readbacks, check out the following example patch demonstrating color tracking with the jit.findbounds object. In the patch, jit.grab is set to output a texture at full resolution. In order to perform the color tracking operation using jit.findbounds, the texture must be read back to a matrix. The optimal object for this is jit.gl.asyncread (asyncread stands for asynchronous readback; the output matrix is delayed by a single frame relative to the input texture). We take the additional step of downsampling the texture on the GPU using a jit.gl.texture object with the adapt attribute disabled (@adapt 0) and the dimensions attribute set to the smallest size necessary for accurate detection.

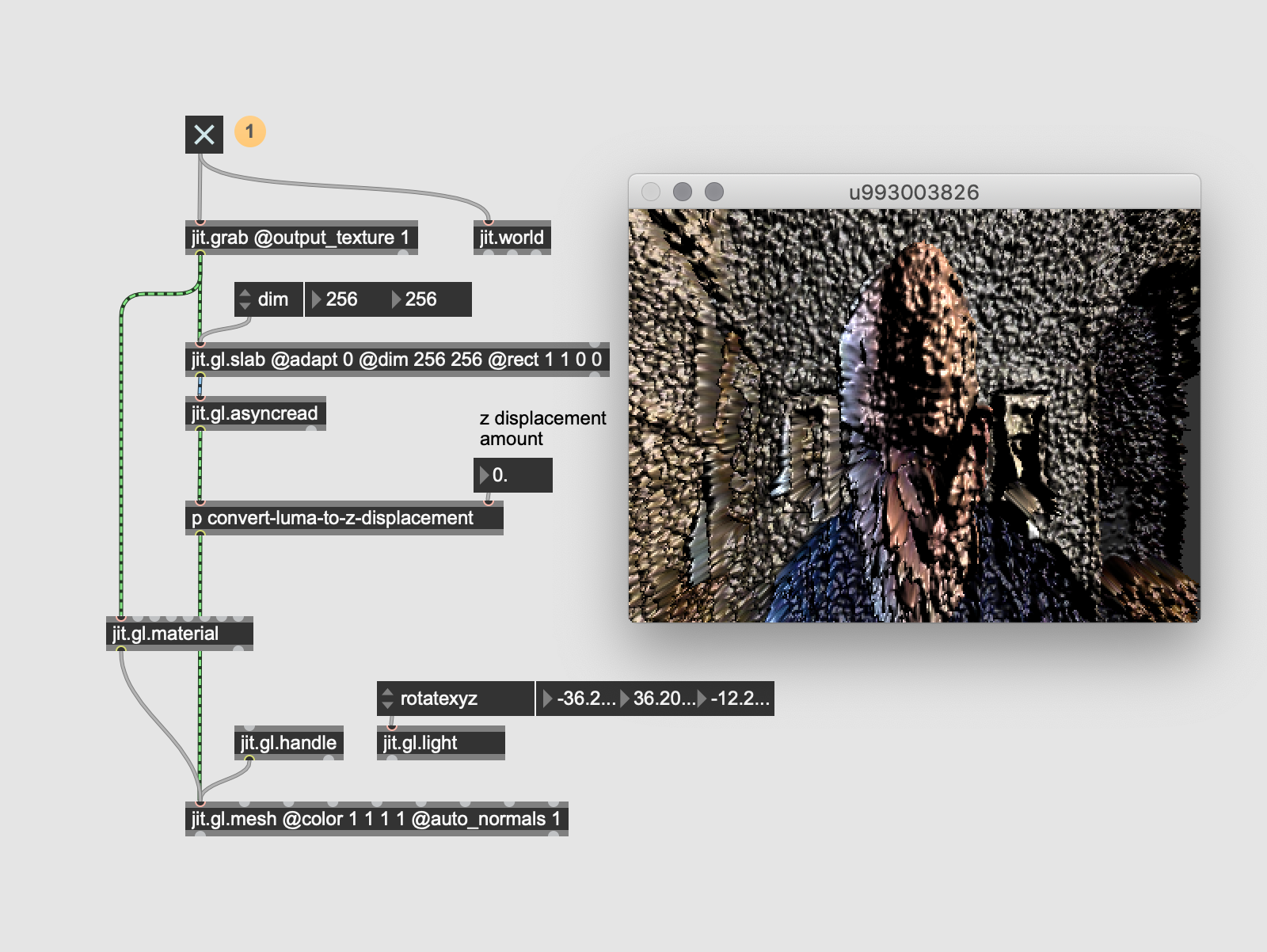
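The idea behind tracking on a downsampled image can be sketched in a few lines of Python. This is a conceptual illustration, not the jit.findbounds implementation: the function names, the per-channel range test and the return format are assumptions made for the example, and the real object's output conventions may differ. The sketch finds the bounding box of all pixels whose channels fall inside a target range, then scales the result back to full-resolution coordinates.

```python
# Conceptual sketch of color tracking on a small readback image.
# find_bounds and scale_bounds are hypothetical helper names.

def find_bounds(image, lo, hi):
    """Return (min_x, min_y, max_x, max_y) of matching pixels, or None.

    A pixel matches when every channel lies between the corresponding
    values of lo and hi (inclusive). image is a row-major list of rows.
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if all(l <= c <= h for c, l, h in zip(pixel, lo, hi)):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))

def scale_bounds(bounds, small_dim, full_dim):
    """Map bounds found on the small image back to full-resolution pixels."""
    sx = full_dim[0] / small_dim[0]
    sy = full_dim[1] / small_dim[1]
    min_x, min_y, max_x, max_y = bounds
    return (min_x * sx, min_y * sy, max_x * sx, max_y * sy)

BLACK, RED = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
small_image = [
    [BLACK, BLACK, BLACK, BLACK],
    [BLACK, RED,   RED,   BLACK],
    [BLACK, BLACK, BLACK, BLACK],
]
bounds = find_bounds(small_image, lo=(0.8, 0.0, 0.0), hi=(1.0, 0.2, 0.2))
print(bounds)
print(scale_bounds(bounds, small_dim=(4, 3), full_dim=(1280, 960)))
```

The search visits every pixel of the image it is given, so its cost grows with the pixel count. That is why the readback size should be the smallest that still detects the target reliably.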

An additional example of using readbacks efficiently is shown below. In this example of a basic luminance displacement effect, we use the GPU to flip and downsample the input texture as necessary for our geometry. We also feed the same input texture, unaltered and at full resolution, to a jit.gl.material object to use as the diffuse color texture.


by Rob Ramirez and Cory Metcalf on May 14, 2019