Your First Shader

When I talk to Jitter users about writing their own shader programs for use with jit.gl.slab, I usually get glazed-over eyes and a distant look of wonder. When I try to explain how easy it is, that look typically turns to one of annoyed disbelief. So, for a long time now I've been thinking about writing an article to demystify the process of writing your own GLSL shaders, and to help everyone avoid some common frustrations.

Please download the Max 4.6 legacy files and the updated Max 5 files used in this tutorial.

If you are one of those people who fear text-based programming like your worst enemy, have no fear. For most things you will want to do with GLSL, the programming is really pretty lightweight, and you don't have to deal with things like memory allocation or juggling multiple functions. A shader program can sometimes be as short as a couple of lines of code, depending on how complex your ideas are.

For this article, I am going to walk you through the first GLSL shader I wrote, which I call "lumagate". The purpose of the shader is to generate an alpha channel, or transparency map, for the incoming image by only showing a specific range of luminance. This was an effect I was using a great deal to produce arbitrarily shaped textures, and I figured I could save a lot of CPU overhead by doing the calculations on the GPU. For a Jitter patcher-based example of this effect, see the patch called "01Lumagate_Patch".

The File Format

Now, before we dive into the specific GLSL code I used to achieve this effect, we're going to have a look at the JXS file format. JXS is an XML-based file format that jit.gl.slab and jit.gl.shader use to load shader code and configuration data (initializing variables, metadata etc.). The JXS format allows you to specify all of the expected parameters and their default values in a familiar and easily readable way. Go ahead and open the file "tut.blank.jxs" in a text-editing application. This is a very simple shader program that just multiplies the input with a variable and passes it to the output.

shader program that does absolutely nothing!

At the very beginning of the file, you will see a tag that says jittershader name="blank". This line tells Jitter that the file is to be read as a shader file, and that its name is "blank". You can also describe the shader itself using the description tag.

The param tag is the most important one for you to understand. This is where you define any uniform parameters that you are using in your code. A uniform parameter is a value that can be passed into the shader from the patcher, but can't be altered by the shader program itself. These will typically be used for any value that you need an interactive interface for. Within the param tag you must specify the name of your variable, the data type (I'll get to that later), and the default value for the parameter. The default value is passed to the shader program automatically when it is loaded. Think of it like a [loadbang]. You might notice that the textures used by the [jit.gl.slab] object are also defined using the param tag. In the case of textures, the default value corresponds to the [jit.gl.slab] input, starting with "0".

Once you create the parameters of your shader program using the param tag, you then need to bind them to either the fragment or vertex program. For the sake of this article, we're only going to look at the fragment program, which is where all of the per-pixel processing is done in GLSL. The bind tag tells Jitter where to send the parameter info. If you try to bind a parameter to a program that doesn't have a variable with the same name, you will get a compiler warning when you load the shader.

The program tag allows you to specify a vertex and a fragment program for your shader. Using the "source" attribute, you can specify another file for a specific program. In this case we are using the "sh.passthrudim.vp.glsl" vertex program, which is one of several handy little utilities in the "jitter-shaders/shared" folder. This particular one just does some basic setup work for you and passes some useful variables to the fragment program.

I recommend keeping a copy of "tut.blank.jxs" on hand to use as a template for any shaders that you want to write in the future. Now that we've covered the file format, let's get into some real GLSL code!

Shader Variables

The first variable that any worthwhile texture-processing shader should have is the texture sampler. This is defined as

    uniform sampler2DRect tex0;

in our example code (for non-rectangular textures use sampler2D). This means that we are using a texture with the name "tex0" that will be sampled and processed by our program. To sample multiple pixels to be processed together (such as in a blur filter), you will need a separate texture lookup for each one. The way that fragment shaders work, you are essentially programming a process for a single output pixel of the framebuffer. This is then run for each pixel of the output texture in a massively parallel way. Because of the architecture of the graphics card, you only have access to the single framebuffer output pixel within your program.

Our next variable is a varying variable, which means that it includes data that is being sent from the vertex program. The "texcoord0" varying variable represents the current coordinates of the texture pixel that you are processing. Combined, these two variables give you the data needed to access your texture and process it within the shader program.

The other variable here is a uniform variable called nothing, which represents a floating-point number that is passed from the MaxMSP interface to your shader. The beginning of the shader program is represented by void main() {, which will be familiar to anyone who has written code for Java or C. For those who are unfamiliar with programming, this basically just tells the compiler that what follows is the main function of our program.
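To make the idea of reading several input pixels concrete, here is a minimal sketch (not one of the tutorial files) of a fragment program that averages each pixel with its left and right neighbors. It assumes the tex0 and texcoord0 declarations described above, and that texcoord0 arrives in pixel units, as is normal for rectangle textures, so an offset of 1.0 moves one texel.

```glsl
// Sketch only: a 3-tap horizontal average, not part of the tutorial files.
uniform sampler2DRect tex0;  // input texture (first jit.gl.slab input)
varying vec2 texcoord0;      // current texture coordinate, from the vertex program

void main()
{
    // One texture lookup for each input pixel we want to read.
    vec4 left   = texture2DRect(tex0, texcoord0 - vec2(1.0, 0.0));
    vec4 center = texture2DRect(tex0, texcoord0);
    vec4 right  = texture2DRect(tex0, texcoord0 + vec2(1.0, 0.0));

    // We can read several input pixels, but we only ever write one output pixel.
    gl_FragColor = (left + center + right) / 3.0;
}
```

Note that all three lookups use the same tex0 sampler; only the coordinate changes.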

Special Data Types for Shaders

In addition to familiar data types such as int and float, GLSL also uses a number of special data types that are optimized to work with video and OpenGL data. The most important of these are "vectors", which are small collections of numbers with 2, 3, or 4 members. These are declared as:

vec2 - a vector of 2 float values

vec3 - a vector of 3 float values

vec4 - a vector of 4 float values

ivec2 - a vector of 2 int values

ivec3 - a vector of 3 int values

ivec4 - a vector of 4 int values

bvec2 - a vector of 2 boolean values

bvec3 - a vector of 3 boolean values

bvec4 - a vector of 4 boolean values

Color in GLSL is almost always represented as a vec4 value (red,green,blue,alpha). To convert a single float value to a vec4 value, you can use the constructor vec4(floatvalue). You can also convert between different types of vectors using the constructor: vec4(ivec4variable). This is often necessary as GLSL will rarely allow you to mix and match data types within an equation or function.

Another really cool thing you can do with vectors in GLSL is to use the "swizzle" notation to reorder and create new vectors. For example, if you wanted a vec4 variable with the red and green values swapped, you can write it as

    vec4 swapped = foo.grba;

or as

    vec4 swapped = foo.yxzw;

Notice that you can use either Cartesian-coordinate or color-name swizzles, but you can't mix and match. This will not work:

    vec4 swapped = foo.gxbw;

If you would like to learn more about the GLSL data types and special ways to deal with them, I strongly encourage you to read the official GLSL documentation.
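As a quick illustration of the constructors and swizzles described above, here is a small sketch. The variable names and values are made up for the example; only the vec4/ivec4 constructors and the swizzle notation come from GLSL itself.

```glsl
// Sketch only: constructors and swizzles, using a made-up color value.
void main()
{
    vec4 foo = vec4(0.1, 0.2, 0.3, 1.0);   // hypothetical (r, g, b, a) color

    vec4 gray = vec4(0.5);                  // single float copied to all 4 members
    ivec4 counts = ivec4(1, 2, 3, 4);
    vec4 asFloats = vec4(counts);           // int vector converted to a float vector

    vec4 swapped = foo.grba;                // (0.2, 0.1, 0.3, 1.0)
    vec3 colorOnly = foo.rgb;               // a swizzle can also shorten a vector
    vec4 rebuilt = vec4(colorOnly, foo.a);  // and a constructor can put it back together

    gl_FragColor = swapped;
}
```

Writing foo.yxzw instead of foo.grba on the swapped line gives exactly the same result.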

Ridiculously Basic Program

What follows is a ridiculously simple GLSL program:

    void main()
    {
        gl_FragColor = texture2DRect(tex0, texcoord0)*vec4(nothing);
    }

Here we are outputting the result of multiplying the value of the current pixel by our nothing value. The variable gl_FragColor is a GLSL built-in variable that defines what color a specific pixel should be set to. Think of this as the output of your program. Usually, this will be the last part of the process.

In this statement, you will also notice the function texture2DRect(). This function requires two values - a specified texture to sample and the vec2(x,y) coordinates of the texture sample. All of our texture-displacement shaders do their work by playing with the texture coordinate values passed to the texture2DRect() function. The result of this function is a vec4 value - a collection of 4 floating-point numbers - that represents the color of that pixel as (red,green,blue,alpha).

You will notice that after the "*" (multiplier) there is the vec4(nothing) statement. This converts the float variable nothing into a vec4 variable for the purpose of multiplying with our texture color. You need to be very explicit in converting data to the proper format.
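To give a feel for what "playing with the texture coordinate values" means, here is a minimal sketch of a displacement shader that simply shifts the whole image. The uniform named offset is hypothetical (it is not in the tutorial files) and would need its own param and bind tags in the JXS file, just like nothing.

```glsl
// Sketch only: shift the image by a fixed amount.
uniform sampler2DRect tex0;  // input texture
varying vec2 texcoord0;      // current texture coordinate, from the vertex program
uniform vec2 offset;         // hypothetical parameter: shift in pixels (x, y)

void main()
{
    // Look up a different texel than the one directly under this output pixel.
    gl_FragColor = texture2DRect(tex0, texcoord0 + offset);
}
```

Nothing is done to the color at all here; the only thing that changes is where the color is fetched from.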

This concludes our detailed walk-through of a shader program. Are you still with us?

Lumagate

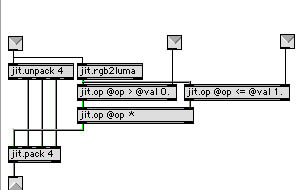

Now we are going to take this lame shader program and turn it into something really useful and awesome. Have a look at the file "01LumagatePatch" for a patcher-based description of the functionality that we are trying to create with this shader. In order to get this working in GLSL, it will help to break down the steps necessary for it to work:

Convert RGB value to luminance

Compare luminance to our high and low thresholds

Multiply our two comparisons together to get a combined range

Use the value of the combined comparison as the value of the Alpha, or transparency, channel

Now that we know what we need to do, all of this is pretty simple to implement in GLSL code.

First, we need to get the value of the pixel into the shader program. As you saw earlier, this is done using the texture2DRect() function. You will need to add the following line to the beginning of your main() function:

    vec4 a = texture2DRect(tex0, texcoord0);

This creates a new vec4 variable "a" and fills it with the color value of the current pixel.

Now that we have the color value, we can begin to do some calculations. To convert the RGB value to a luminance value, we will borrow a technique used in "co.lumakey.jxs", which is to do a dot-product of the RGB value with a set of luminance coefficients (0.299,0.587,0.114,0.). Rather than just writing these values directly in our program, it is generally far more readable to create a meaningful name for this set of numbers. To do this, we will define our luminance coefficients as a "const" (constant value) variable:

    const vec4 lumcoeff = vec4(0.299,0.587,0.114,0.);

We do this before the beginning of the main function because all of the varying, uniform, and const variables must be declared as global variables. Now we can add the following line after our texture lookup statement:

    float luminance = dot(a,lumcoeff);

This creates a new float variable "luminance" which represents the dot-product of "a" and "lumcoeff". A dot-product returns a float value which comes from multiplying each value in the first vector by the associated value in the second vector, and then adding all the members of the resulting vector together (this.r*that.r+this.g*that.g...). You may be thinking, "Why not just use a mathematical expression for this?" The answer is that whenever possible, it is best to use built-in GLSL vector functions as these are optimized for the best performance. In fact, whenever possible, using vec4 calculations is going to give you the best efficiency as GLSL is optimized for vec4 data types.
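If the dot-product still feels abstract, the following sketch writes the same calculation both ways and displays the result as a grayscale image. The variable named expanded is only there for comparison and is not part of the lumagate shader.

```glsl
// Sketch only: dot() versus the same arithmetic written out by hand.
uniform sampler2DRect tex0;
varying vec2 texcoord0;

const vec4 lumcoeff = vec4(0.299, 0.587, 0.114, 0.0);

void main()
{
    vec4 a = texture2DRect(tex0, texcoord0);

    // Built-in version, as used in this article.
    float luminance = dot(a, lumcoeff);

    // Hand-written equivalent. The alpha term contributes nothing
    // because its coefficient is 0.
    float expanded = a.r * lumcoeff.r + a.g * lumcoeff.g
                   + a.b * lumcoeff.b + a.a * lumcoeff.a;

    // Show the luminance as gray; using 'expanded' here gives the same picture.
    gl_FragColor = vec4(vec3(luminance), a.a);
}
```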

    void main()
    {
        vec4 a = texture2DRect(tex0, texcoord0);

        // calculate our luminance
        float luminance = dot(a,lumcoeff);

        // compare to the thresholds
        float clo = step(lum.x,luminance);
        float chi = step(luminance,lum.y);

        // (the remaining lines are added in the steps that follow)
    }

Now that we have the luminance value, we will look at the step() function to do our comparison operations. This GLSL built-in function returns "1." if the second value is greater than or equal to the first (edge) value, and "0." if it is less than the edge value. Knowing this, we can create a vec2 variable - a collection of 2 floats - that represents our low and high luminance thresholds. As shown previously, there is a little bit of setup involved in creating an interactive variable for a shader. First we use the param and bind tags to define the parameter and bind it to our fragment program. To see how we do this, take a peek at the file "tut.lumagate.jxs". I set the default values to (0.,1.) so that the visible range includes all possible values by default when the shader is loaded. We then declare uniform vec2 lum; in the section just before the beginning of the main() function.
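One way to get comfortable with step() is to look at its output directly. The sketch below is not part of the tutorial files and uses a made-up fixed edge of 0.5 instead of the lum parameter. It writes the "at or above" test into the red channel and the "at or below" test into the green channel.

```glsl
// Sketch only: visualizing step() with a hypothetical fixed edge.
uniform sampler2DRect tex0;
varying vec2 texcoord0;

const vec4 lumcoeff = vec4(0.299, 0.587, 0.114, 0.0);
const float edge = 0.5;  // made-up threshold for illustration

void main()
{
    vec4 a = texture2DRect(tex0, texcoord0);
    float luminance = dot(a, lumcoeff);

    // step(edge, x) is 0.0 when x < edge and 1.0 otherwise.
    float above = step(edge, luminance);  // 1.0 where luminance >= edge
    float below = step(luminance, edge);  // 1.0 where luminance <= edge

    // Red = bright pixels, green = dark pixels, yellow = exactly on the edge.
    gl_FragColor = vec4(above, below, 0.0, 1.0);
}
```

Swapping the two arguments is all it takes to turn a "greater than or equal" test into a "less than or equal" test, which is the trick the two threshold comparisons rely on.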

Now that we have a variable representing the luminance thresholds, we can perform some comparison operations. First we will compare with the low threshold to see if our luminance is greater than the minimum:

    float clo = step(lum.x,luminance);

Notice that I used lum.x to represent the first value of the vec2 variable lum. We do a similar thing to compare for the upper threshold, but we reverse the terms since we want to do a less-than comparison:

    float chi = step(luminance,lum.y);

Now all we need to do is combine the results of the two comparisons and load this into the Alpha-channel of the output color. To combine the two comparisons, we will just multiply them together:

    float amask = clo * chi;

Now we have a variable "amask" that will become the alpha channel of our pixel when we include the following line:

    gl_FragColor = vec4(a.rgb,amask);

Note that in order to accomplish this we used the vec4() constructor, using the first (rgb) values of the texture sample a.rgb and amask as the Alpha value. Tricky, right? Don't forget to "Save As..." and name your shader using the .JXS extension. Make sure that your text-editor doesn't try to add any extra extensions to the file.

    // global declarations, as described above
    uniform sampler2DRect tex0;
    varying vec2 texcoord0;
    uniform vec2 lum;
    const vec4 lumcoeff = vec4(0.299,0.587,0.114,0.);

    void main()
    {
        vec4 a = texture2DRect(tex0, texcoord0);

        // calculate our luminance
        float luminance = dot(a,lumcoeff);

        // compare to the thresholds
        float clo = step(lum.x,luminance);
        float chi = step(luminance,lum.y);

        // combine the comparisons
        float amask = clo * chi;

        // output texture with alpha-mask
        gl_FragColor = vec4(a.rgb,amask);
    }

This leaves us with a great shader program that will only show us a texture pixel (texel in official terms) if it falls within our defined range of luminance values. To test this shader, open up the file "03Shader_Test" in MaxMSP/Jitter and load a movie file. Try reading your saved shader into jit.gl.slab. Are you getting any compiler errors? How did you do?

This concludes your first lesson in mastering the new world of GLSL shaders. If you want to learn more, I highly recommend poking around the "jitter-shaders" folder and grabbing the official GLSL specification (PDF) or the GLSL Orange Book. Now that you have a basic understanding of how shaders are written, you should be able to work out how we are doing things in other shaders. If you come across a built-in function you don't recognize, just check the official GLSL documentation. This is really just a small taste of the cool things you can do with GPU-based video processing. When I get some time I will try and write up some more tips and tricks, but this should keep you busy for a little bit. If you have any questions, I hope that you post them to the Jitter forum.

by Andrew Benson on May 23, 2007