jweb mediapipe noisy output?

I'm using the jweb MediaPipe patch for eye tracking, and I'm using 12 floating-point numbers from its list.

Each float has 6 digits after the decimal point, and they change rapidly. How can I smooth this so that changes in the 4th, 5th and 6th decimal places don't pass through?

Where do you want to smooth/thin the data?

In the first place, it would make sense to do it when sending those floats.

You can round to 0.001 (or any other step) and insert a [change 0.].

Where do you want to smooth/thin the data?

I guess right after the point where I receive it.

You should reduce float precision at the very input, which is NOT visible in your screenshot.

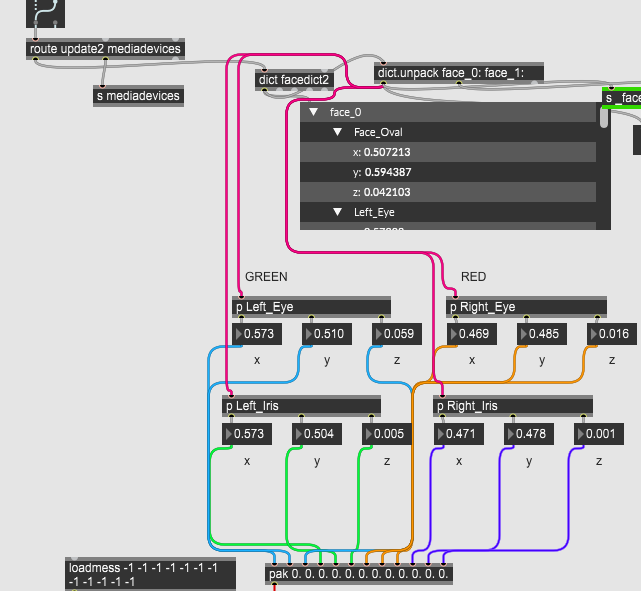

This is the basic patch I'm using. Where is the very input?

Can you strip float precision before feeding the dict?

That would be the best option, I guess.

But don't ask me how to do that.

I am not using face recognition.

In this case, I would say the very input is the MediaPipe API, which provides an already structured and populated dictionary that is then passed to Max.

Formatting the data before that would mean modifying the code behind the API, which seems like too much of a struggle for this task. And most likely an entire dictionary would be passed every frame anyway.

Alternatively, you could do the rounding math in the JS code before passing the data into Max, but I would say there isn't much difference between that and doing the math directly in the Max patch. Just do whatever is most comfortable for you given your skills.
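If you do round at the source, the idea is simply to walk the dictionary and round every float before it is sent. The sketch below shows that traversal in Python purely for illustration; the actual jweb page code is JavaScript, and both the function name `round_floats` and the frame layout in the example are hypothetical, not the real MediaPipe output format.

```python
from typing import Any


def round_floats(data: Any, ndigits: int = 3) -> Any:
    """Return a copy of `data` with every float rounded to `ndigits` decimals.

    Walks nested dicts and lists; ints, strings and other values pass through unchanged.
    """
    if isinstance(data, float):
        return round(data, ndigits)
    if isinstance(data, dict):
        return {key: round_floats(value, ndigits) for key, value in data.items()}
    if isinstance(data, list):
        return [round_floats(item, ndigits) for item in data]
    return data


# Hypothetical frame layout, for illustration only.
frame = {"Left_Eye": [0.512345, 0.487654, -0.031234], "id": 7}
print(round_floats(frame))  # {'Left_Eye': [0.512, 0.488, -0.031], 'id': 7}
```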

For rounding, you could simply use [round 0.001], and maybe add a [change 0.] after it to filter out repetitions.
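Outside of Max, the [round 0.001] → [change 0.] chain can be sketched like this in Python. `quantize` snaps a value to the nearest multiple of a step, and `ChangeFilter` only lets a value through when it differs from the previous one; both names are hypothetical, and this only mimics the idea of the two Max objects, not their exact implementation.

```python
from typing import Optional


def quantize(x: float, step: float = 0.001) -> float:
    """Snap x to the nearest multiple of `step` (the same idea as [round 0.001])."""
    # round() returns the integer step index, so values landing on the same step
    # always produce the exact same float, which keeps the comparison below reliable.
    return round(x / step) * step


class ChangeFilter:
    """Let a value through only if it differs from the last one (the idea behind [change 0.])."""

    def __init__(self) -> None:
        self._last: Optional[float] = None

    def __call__(self, value: float) -> Optional[float]:
        if value == self._last:
            return None  # repetition: filtered out
        self._last = value
        return value


change = ChangeFilter()
for raw in [0.4567891, 0.4568123, 0.4581, 0.4579]:
    out = change(quantize(raw))
    if out is not None:
        print(f"{out:.3f}")  # prints 0.457, then 0.458
```

Note that the jitter is only suppressed when it stays within one step; a value hovering right at a step boundary will still flip back and forth.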

But what you might not expect is that rounding doesn't just smooth the data; it also makes it lose resolution/precision. There are several ways to smooth data (basically, low-pass filtering quick changes): [slide], [zl.stream] + [zl.median], an abstraction called [smoov] that you can find in this post, and many other methods.
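To make the difference between two of those approaches concrete, here is a Python sketch. `Slide` applies the recurrence described in the [slide] reference, y(n) = y(n-1) + (x(n) - y(n-1)) / slide, with separate up and down factors; `MovingMedian` keeps a sliding window and outputs its median, which is roughly what feeding [zl.stream] into [zl.median] does. Both class names are hypothetical, and seeding the filter with the first input (instead of starting from 0) and emitting a median before the window is full are choices made for this sketch, not necessarily what the Max objects do.

```python
from collections import deque
from statistics import median
from typing import Deque, Optional


class Slide:
    """Logarithmic smoothing: y(n) = y(n-1) + (x(n) - y(n-1)) / slide."""

    def __init__(self, slide_up: float = 5.0, slide_down: float = 5.0) -> None:
        # Factors below 1 are clamped to 1 in this sketch, which means "no smoothing".
        self.slide_up = max(1.0, slide_up)
        self.slide_down = max(1.0, slide_down)
        self._y: Optional[float] = None

    def __call__(self, x: float) -> float:
        if self._y is None:
            self._y = x  # seed with the first input instead of ramping up from 0
        else:
            factor = self.slide_up if x > self._y else self.slide_down
            self._y += (x - self._y) / factor
        return self._y


class MovingMedian:
    """Median of the last `size` values; good at rejecting single-frame spikes."""

    def __init__(self, size: int = 5) -> None:
        self._window: Deque[float] = deque(maxlen=size)

    def __call__(self, x: float) -> float:
        self._window.append(x)
        return median(self._window)


slide = Slide(4, 4)
print([slide(x) for x in [0.0, 1.0, 1.0]])     # [0.0, 0.25, 0.4375]
med = MovingMedian(3)
print([med(x) for x in [0.5, 0.5, 5.0, 0.5]])  # [0.5, 0.5, 0.5, 0.5]
```

The slide filter reacts to every sample but lags behind fast movements, while the median ignores an isolated outlier completely; in either case you would use one filter instance per float (12 here).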

Thanks, I will look into the methods you mentioned.

I have another question regarding MediaPipe: how can I know the rate at which the data is coming in? Is it written anywhere?

I like to use [jit.fpsui] to see the rate of incoming messages. It is meant to work with matrices, but it also accepts bangs. You can then send it a getfps message to get the actual fps value.

Another solution is to use [timer].
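The [timer] approach boils down to measuring the time between consecutive messages. Here is a Python sketch of that idea which averages over the last few intervals so the reading is less jumpy; `RateMeter` is a hypothetical name, and the timestamps would come from whatever clock you have available (in Python, `time.monotonic()`).

```python
from collections import deque
from typing import Deque, Optional


class RateMeter:
    """Estimate messages per second from the timestamps of the most recent messages."""

    def __init__(self, window: int = 30) -> None:
        self._stamps: Deque[float] = deque(maxlen=window)

    def tick(self, t: float) -> Optional[float]:
        """Record a message arriving at time t (seconds); return the rate, or None if unknown yet."""
        self._stamps.append(t)
        if len(self._stamps) < 2:
            return None
        span = self._stamps[-1] - self._stamps[0]
        if span <= 0:
            return None
        # N timestamps delimit N - 1 intervals.
        return (len(self._stamps) - 1) / span


meter = RateMeter(window=10)
rate = None
for i in range(20):
    rate = meter.tick(i / 30)  # simulated messages arriving at 30 per second
print(round(rate, 3))  # 30.0
```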

Thanks for that.

I have another question regarding the MediaPipe jweb application:

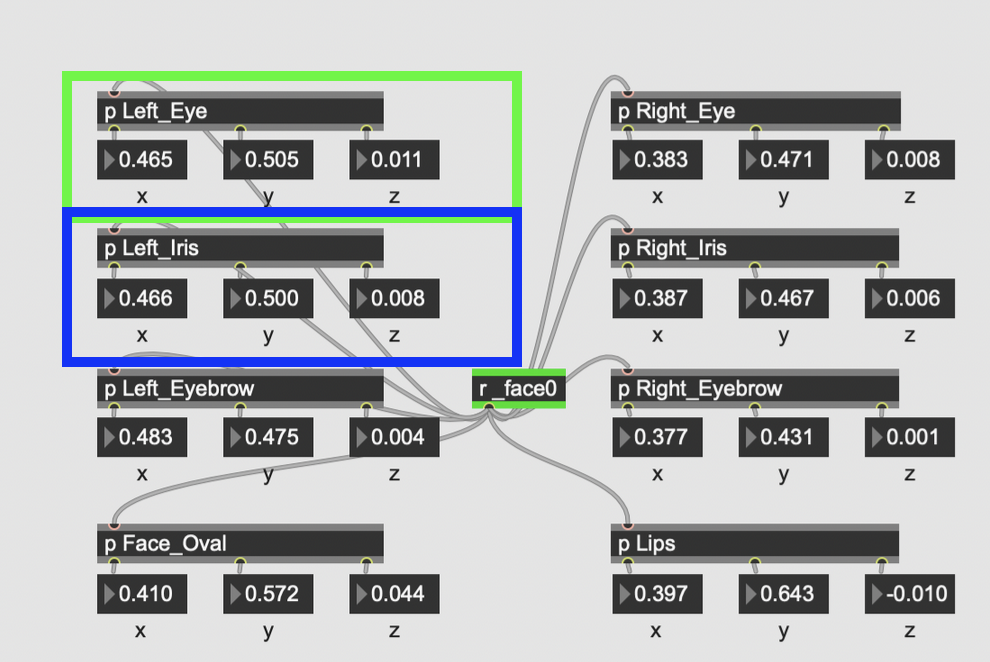

I'm using this version:

What is the part called Eye, marked in green,

and what is the part called Iris, marked in blue?

Does iris mean pupil?

From my understanding, Left_Eye gives you the center of the left eye, while Left_Iris gives you the center of the iris of the left eye, which is probably the same thing as the center of the pupil, since the pupil is at the center of the iris.

With these two points, you can compute Left_iris - Left_eye and get a vector representing the direction of sight.


Could you explain this? What is this vector?

I also wanted to ask:

I'm using the same data with another webcam (left and right Eye of Person 2, and left and right Iris of Person 2). Is there a way I could get an indication of whether the two people are making eye contact with each other?

Imagine you draw a straight line passing through the center of your eye and the center of your iris/pupil. That line represents the direction of your sight. When you look at something, then at something else, your eye rotates: its center stays more or less static, but the iris/pupil center moves, changing the direction of the line.

The vector (Left_iris - Left_eye), or (Left_iris_X - Left_eye_X, Left_iris_Y - Left_eye_Y, Left_iris_Z - Left_eye_Z), is a representation of that direction.
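As a small sketch of that subtraction, assuming each landmark arrives as an (x, y, z) triple (the tuple format and the function name `gaze_direction` are assumptions, not necessarily how the jweb patch packs the data). Normalizing the result to length 1 keeps only the direction, which is what matters when comparing gazes.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def gaze_direction(eye_center: Vec3, iris_center: Vec3) -> Vec3:
    """Unit vector pointing from the eye center through the iris center."""
    dx = iris_center[0] - eye_center[0]
    dy = iris_center[1] - eye_center[1]
    dz = iris_center[2] - eye_center[2]
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("eye and iris centers coincide; direction is undefined")
    return (dx / length, dy / length, dz / length)


# Made-up coordinates, for illustration only.
print(gaze_direction((0.40, 0.50, 0.00), (0.43, 0.54, 0.00)))
```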

If you calculate that vector for both the right and left eyes and do a bit of math with them, you can get an idea of which point in space the person is looking at: the intersection of the two lines extending those vectors. If there is no intersection, the person is either looking infinitely far away or has some form of strabismus.

This is all theoretical, though. You probably want to search for where the lines come closest to each other rather than where they actually cross, because there is a high chance that they never actually cross, given the roughness of MediaPipe's measurements.
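The "closest approach" idea has a standard closed-form solution for two 3D lines. For line 1, p0 + s·u, and line 2, q0 + t·v, with w0 = p0 - q0, the parameters of the closest points are s = (b·e - c·d) / (a·c - b²) and t = (a·e - b·d) / (a·c - b²), where a = u·u, b = u·v, c = v·v, d = u·w0, e = v·w0. A Python sketch follows; the function name is hypothetical, and using the midpoint of the two closest points as the "looked-at" point is an assumption of this sketch.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _add_scaled(p: Vec3, d: Vec3, s: float) -> Vec3:
    return (p[0] + s * d[0], p[1] + s * d[1], p[2] + s * d[2])


def closest_points(p0: Vec3, u: Vec3, q0: Vec3, v: Vec3,
                   eps: float = 1e-12) -> Optional[Tuple[Vec3, Vec3, float]]:
    """Closest points between the lines p0 + s*u and q0 + t*v.

    Returns (point on line 1, point on line 2, distance between them),
    or None when the lines are (nearly) parallel.
    """
    w0 = (p0[0] - q0[0], p0[1] - q0[1], p0[2] - q0[2])
    a, b, c = _dot(u, u), _dot(u, v), _dot(v, v)
    d, e = _dot(u, w0), _dot(v, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        return None  # parallel lines: the eyes would be "looking at infinity"
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p, q = _add_scaled(p0, u, s), _add_scaled(q0, v, t)
    diff = (p[0] - q[0], p[1] - q[1], p[2] - q[2])
    return p, q, _dot(diff, diff) ** 0.5


# Made-up example: two lines that really do cross at (1, 1, 1).
p, q, gap = closest_points((0, 0, 0), (1, 1, 1), (2, 0, 0), (-1, 1, 1))
focus = tuple((pi + qi) / 2 for pi, qi in zip(p, q))
print(focus, gap)  # (1.0, 1.0, 1.0) 0.0
```

Here p0/q0 would be the two eye centers and u/v their gaze vectors; a large `gap` is a hint that the estimate is unreliable. The `eps` threshold is absolute, so it behaves most predictably with unit-length direction vectors.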

This could help you tell whether someone's gaze is pointed toward someone else's head.
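For the eye-contact question, one simple test is: does A's gaze point at B's eyes, and does B's gaze point at A's eyes, within some angular tolerance? The Python sketch below assumes that both people's eye positions and gaze vectors are already expressed in one shared 3D coordinate frame. With two separate webcams, each person's landmarks come in their own camera's coordinates, so getting them into a common frame (e.g. by knowing where each camera sits) is a separate problem this sketch does not solve. The function names and the 10° tolerance are hypothetical; you might use, for instance, the midpoint between a person's two eye centers as their position and the average of both eyes' gaze vectors as their direction.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def angle_deg(u: Vec3, v: Vec3) -> float:
    """Angle between two non-zero vectors, in degrees."""
    dot = u[0] * v[0] + u[1] * v[1] + u[2] * v[2]
    norms = math.sqrt(u[0] ** 2 + u[1] ** 2 + u[2] ** 2) * math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    # Clamp to guard acos against tiny floating-point overshoots past +/-1.
    cos_theta = max(-1.0, min(1.0, dot / norms))
    return math.degrees(math.acos(cos_theta))


def is_looking_at(eye: Vec3, gaze: Vec3, target: Vec3, max_angle: float = 10.0) -> bool:
    """True if the gaze ray from `eye` points within `max_angle` degrees of `target`."""
    to_target = (target[0] - eye[0], target[1] - eye[1], target[2] - eye[2])
    return angle_deg(gaze, to_target) <= max_angle


def eye_contact(eye_a: Vec3, gaze_a: Vec3, eye_b: Vec3, gaze_b: Vec3,
                max_angle: float = 10.0) -> bool:
    """Mutual gaze: A looks toward B's eyes and B looks toward A's eyes."""
    return (is_looking_at(eye_a, gaze_a, eye_b, max_angle)
            and is_looking_at(eye_b, gaze_b, eye_a, max_angle))


# Made-up positions in a shared frame: A at the origin, B two units in front.
print(eye_contact((0, 0, 0), (0, 0, 1), (0, 0, 2), (0, 0, -1)))  # True
print(eye_contact((0, 0, 0), (0, 0, 1), (0, 0, 2), (1, 0, 0)))   # False
```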

Thanks for your input! I will look into your idea.

--

The Z axis is how close/far away the person is from the camera, isn't it?