Max for Live Focus: PicSeq

One of the most common beginner Max patches uses the random object to generate melodies. My problem has always been that I don't construct melodies that way, so it's going to be blindingly obvious that I'm not making the choices - I'd rather find interesting ways of filtering and massaging an initial random source to produce something more to my liking (you can find an example of that approach in this Max for Live tutorial).

When it comes to audience-facing randomness, your own mileage may vary. But I’ll bet that we both keep our eyes peeled for interesting new generative plug-ins. Here’s a new one that I’ve been enjoying quite a bit: PicSeq from the folks at encoderaudio.

I’d first encountered the encoderaudio folks in relation to a very nice Max for Live implementation of Tom Whitwell’s Turing Machine – a favorite of many Eurorack enthusiasts. (Lately, they’ve been working to standardize their user interfaces and drop the "click on the teensy panel in Live to open the larger panel" approach, but I am still fond of their original front panel.)

PicSeq is an image-based sequencer that locates itself beautifully within the tradition of image-to-audio devices. Transcoding images to audio is a pretty regular Max beginner's goal, and it takes many forms:

Averaging all or a part of an image and extracting data

Transcoding horizontal scanlines of an image as a sequence

Horizontal/vertical scans as parameter lists

I’m sure you can rattle off a few of your own.

It's not surprising that there are so many approaches - you're dealing with two very different perceptual modalities.

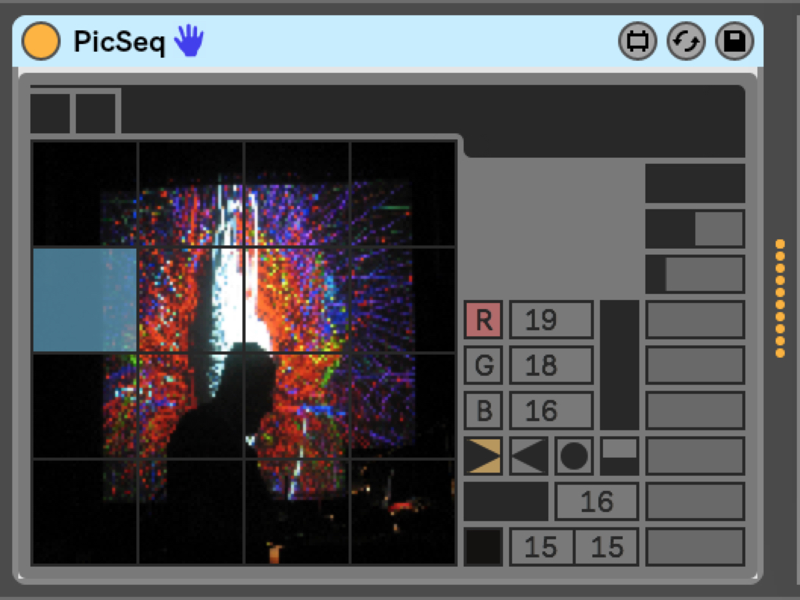

PicSeq derives its particular sequenced materials not by processing a complete image, but by converting it into a set of 16 subunits - the input image is downsampled to a 160 x 160 matrix and divided into 16 equal 40 x 40 pixel sections.

From each 40 x 40 section, PicSeq chooses a single pixel from the 1600 possibilities (which you can select using offsets), and that pixel is then analyzed for its red, green, and blue values. Those values are mapped to MIDI notes.
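If you'd like to see that pixel-picking idea outside of Max, here's a minimal Python sketch of it. To be clear, this is my own illustration and not PicSeq's actual patch: the function names, the top-left-first ordering of the sections, and the "halve the 0-255 color value to get a 0-127 MIDI note" mapping are all assumptions, and the image is assumed to be already downsampled to a 160 x 160 grid of (r, g, b) tuples.

```python
GRID = 4            # 4 x 4 = 16 sections
CELL = 40           # each section is 40 x 40 pixels
SIZE = GRID * CELL  # the downsampled image is 160 x 160


def pick_pixels(image, x_offset=0, y_offset=0):
    """Return one (r, g, b) pixel per section, 16 in all.

    `image` is a list of SIZE rows, each a list of SIZE (r, g, b) tuples.
    The same offset is applied inside every section (an assumption).
    """
    if not (0 <= x_offset < CELL and 0 <= y_offset < CELL):
        raise ValueError("offsets must fall inside a 40 x 40 section")
    picks = []
    for row in range(GRID):          # assumed order: top row first
        for col in range(GRID):      # then left to right
            y = row * CELL + y_offset
            x = col * CELL + x_offset
            picks.append(image[y][x])
    return picks


def to_midi(value):
    """Scale a 0-255 color value to a 0-127 MIDI note (hypothetical mapping)."""
    return value >> 1


def section_notes(image, x_offset=0, y_offset=0):
    """Return 16 (red_note, green_note, blue_note) triples, one per section."""
    return [
        tuple(to_midi(channel) for channel in pixel)
        for pixel in pick_pixels(image, x_offset, y_offset)
    ]


# A synthetic gradient stands in for a real picture.
demo = [[(x, y, (x + y) % 256) for x in range(SIZE)] for y in range(SIZE)]
notes = section_notes(demo, x_offset=3, y_offset=5)
```

Nudging either offset by one moves every pick to a neighboring pixel, which is where the range of variations comes from.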

One of the great things about the explosion of idiosyncratic Max for Live devices is the discovery that everyone approaches what seems to be a single problem quite differently, and those serendipitous differences can provide some interesting possibilities. Those differences can vary from “Hmm….” (The sequencer counts from lower left to upper right instead of the upper left to lower right that I’d have expected) to “Wow.” (In situations like this, I’d have opted for averaging all the pixels inside the 16 subunits to produce the R, G, and B values instead of choosing a specific pixel.)
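Both of those differences are easy to sketch in Python. What follows is a rough illustration under my own assumptions rather than a description of PicSeq's internals: `step_to_cell` assumes the count runs left to right along each row, starting with the bottom row and working up, and `average_cell` is the averaging approach I'd have reached for, using integer averages of a 40 x 40 section. Both names are made up for this example.

```python
def step_to_cell(step, grid=4):
    """Map a step number (0-15) to (row, col), where row 0 is the TOP row.

    Assumed traversal: left to right, bottom row first, so step 0 is the
    lower-left section and step 15 is the upper-right one.
    """
    row_from_bottom, col = divmod(step, grid)
    return grid - 1 - row_from_bottom, col


def average_cell(image, row, col, cell=40):
    """Average every pixel in one section and return an (r, g, b) tuple."""
    totals = [0, 0, 0]
    for y in range(row * cell, (row + 1) * cell):
        for x in range(col * cell, (col + 1) * cell):
            for i, channel in enumerate(image[y][x]):
                totals[i] += channel
    count = cell * cell
    return tuple(total // count for total in totals)


# A synthetic 160 x 160 gradient stands in for a real picture.
gradient = [[(x, y, 0) for x in range(160)] for y in range(160)]
first_step_color = average_cell(gradient, *step_to_cell(0))
```

Averaging gives you exactly one color per section, so the pixel offsets would have nothing left to do.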

It’s pretty obvious that the pixel offsets allow for a much greater range of possibilities. In addition, consider the visual image itself – it’s quite likely that any two adjacent pixels in any image that isn’t random will bear some resemblance to each other, which means that the sequences derived from adjacent pixels will, too.

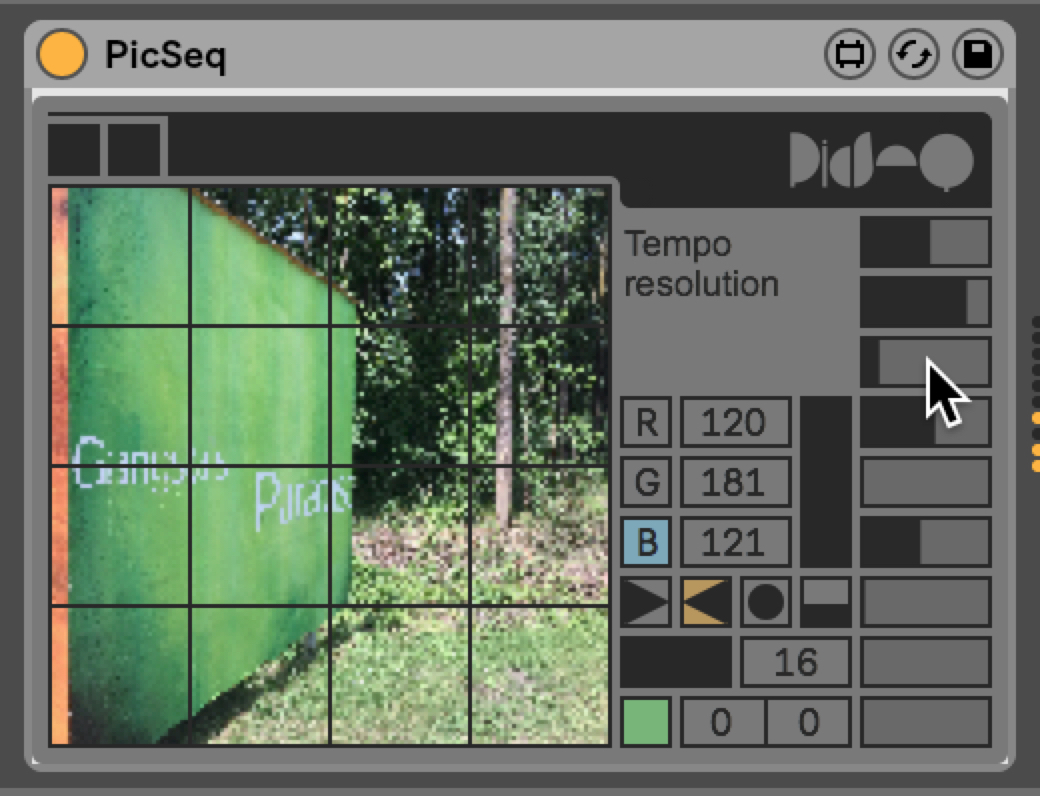

The interface is simplicity itself: drag and drop an image onto the grid to load, and you're off to the races. Wanna know about a control? Hover over it and check the doc window.

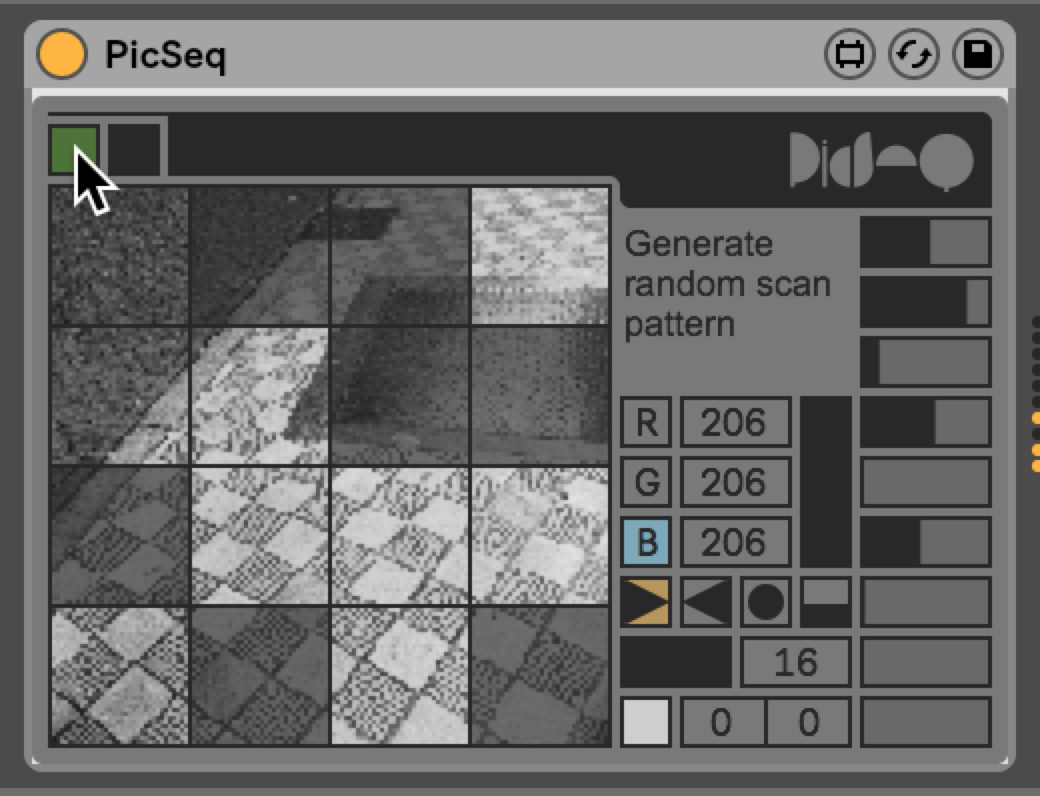

Want to turn sequence units off and on? Just click on a square in the sequence or hit PicSeq's random scan pattern button.

Want to add a little variety? In addition to generating randomized scan patterns, PicSeq's controls support generating randomized velocity, time resolution, swing, and note length, too.

There's a lot to like here. Give it a spin and see if it's not a great little tool for your set.

by Gregory Taylor on June 5, 2018