Node for Max Memory Issue?

TL;DR: Sending large volumes of data from Max to [node.script] over time (even with no processing in Node) appears to cause unbounded growth in Max’s memory usage.

Hi, I've noticed some strange behavior with Node for Max. It seems that the more data is sent from Max to Node, the more memory gets allocated, and this increase appears to be unbounded over time.

This becomes problematic in long-running contexts, for example when using Node to relay DMX data to hardware continuously over a long period. The memory usage just keeps growing.

I’ve tried to isolate the issue with a patch that sends a bunch of data to a minimal Node script that does nothing except receive messages from Max.

Here's the Node script:

const Max = require('max-api');

Max.addHandler('from_max_patch', (...data) => {
  // No processing, just receiving data
});

Is this behavior due to how Max handles data and memory under the hood, or is it a problem with the implementation of the Node for Max API?

Thanks in advance for any insight or suggestions!

Hi Gabriel, I ran your patch for 10 minutes and didn't see a drastic memory increase (see video below). The heap stayed at around 5 to 8 MB, though allocated memory grew to 10-15 MB. This looks healthy, and the allocated memory should be reclaimed by the OS under memory pressure.

However, I noticed you're sending tens (possibly hundreds) of thousands of messages a second to node.script with [metro 1] + [uzi 100]. Perhaps GC is struggling to keep up (especially given V8's single-threaded nature). I think 44 Hz is the standard DMX rate. Have you tried this with something less frequent, e.g. [metro 23], to see if it makes a difference to memory allocation?
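For reference, here is a rough sketch of the rate arithmetic behind those two setups. It assumes each output of [uzi] ends up as one message to node.script, which may not match the actual patch, and the function names are just illustrative.

```javascript
// Rough message-rate arithmetic for a [metro] driving a [uzi].
// Assumption: every [uzi] output becomes exactly one message to [node.script].

// Messages per second for [metro intervalMs] triggering [uzi burst].
function messagesPerSecond(intervalMs, burst) {
  return (1000 / intervalMs) * burst;
}

// Metro interval in milliseconds that approximates a refresh rate in Hz.
function intervalForRate(hz) {
  return 1000 / hz;
}

console.log(messagesPerSecond(1, 100));           // 100000
console.log(intervalForRate(44).toFixed(1));      // 22.7
console.log(messagesPerSecond(23, 1).toFixed(1)); // 43.5
```

So [metro 23] sending one message per tick lands close to the 44 Hz figure, while the stress patch is several orders of magnitude above it.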

From what I can see in the N4M source, buffer allocations are properly cleaned up through the socket, so this could possibly be related to GC pressure rather than a true leak. But definitely worth testing at realistic rates to see if the issue persists (unless you do require this very high frequency?).

Dan

I've just noticed an issue that might be related:

Hey, thanks so much for taking the time to check this out!

Just to clarify a few things:

The memory growth I’m referring to is the overall memory allocated to Max by the OS, not just the heap reported in Node.
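To keep the two processes apart while testing, a small sketch like the one below could log the Node side only. It uses the standard `process.memoryUsage()` call; `summarizeMemory` is a hypothetical helper name. It says nothing about Max's own process, which still has to be watched in Activity Monitor or a similar tool.

```javascript
// Sketch: report memory of the Node process only (not Max's process).
// process.memoryUsage() is standard Node.js; summarizeMemory is a
// hypothetical helper introduced for illustration.

const BYTES_PER_MB = 1024 * 1024;

function summarizeMemory(usage = process.memoryUsage()) {
  // Convert bytes to megabytes, rounded to one decimal place.
  const toMB = (bytes) => Math.round((bytes / BYTES_PER_MB) * 10) / 10;
  return {
    rssMB: toMB(usage.rss),             // total resident memory of the Node process
    heapTotalMB: toMB(usage.heapTotal), // heap reserved by V8
    heapUsedMB: toMB(usage.heapUsed),   // heap currently in use
  };
}

console.log(summarizeMemory());
```

Inside a node.script this could be called on a timer and printed to the Max console. If these numbers stay flat while Max's footprint grows, the growth is on the Max side.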

I’m fully aware that the number of messages being sent to node.script in the test patch is excessive. It was mainly intended to accelerate the issue for demonstration purposes. That said, some of the patches I’m working with do send large volumes of data at various rates over DMX (which might not be ideal, I admit).

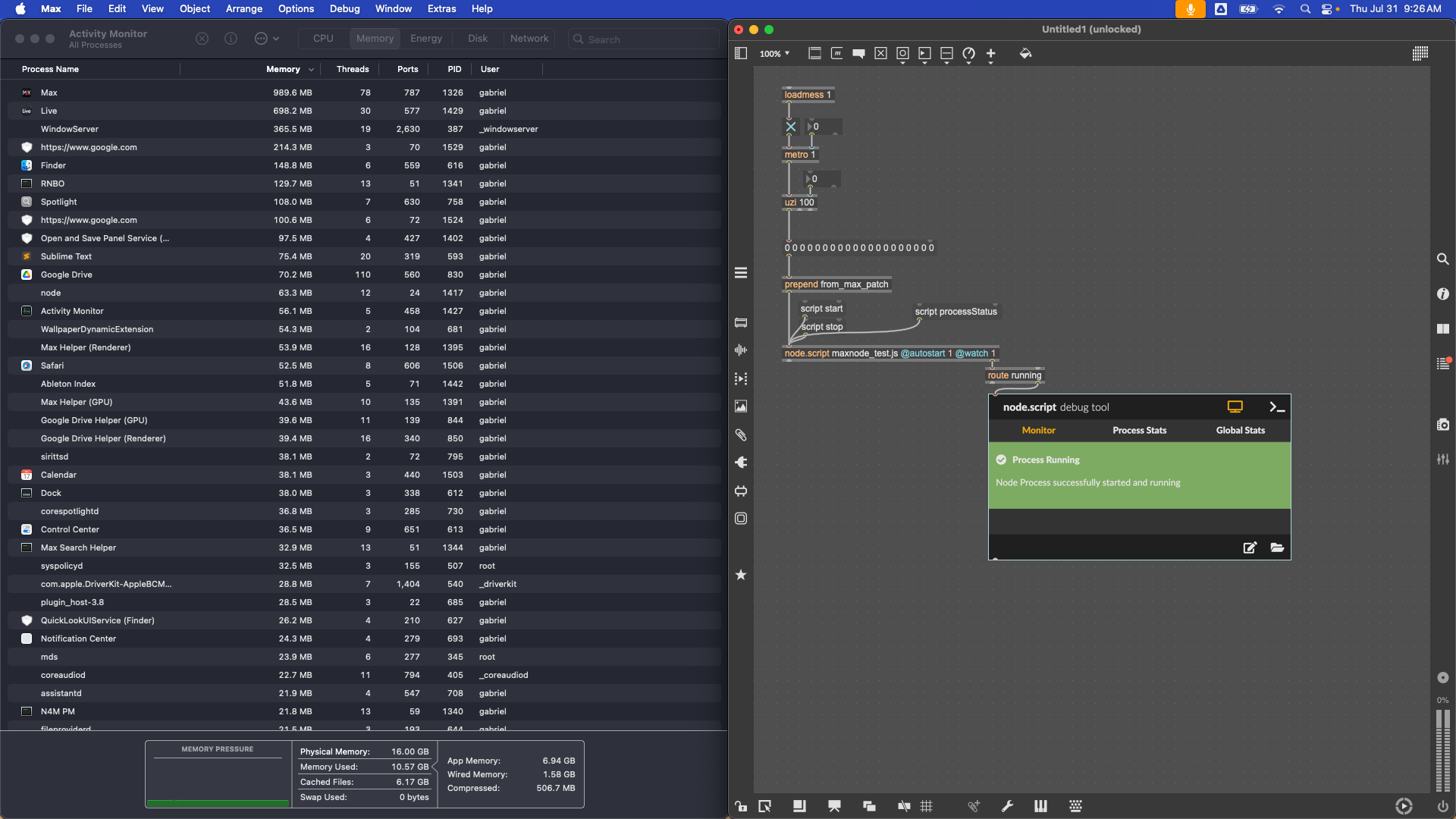

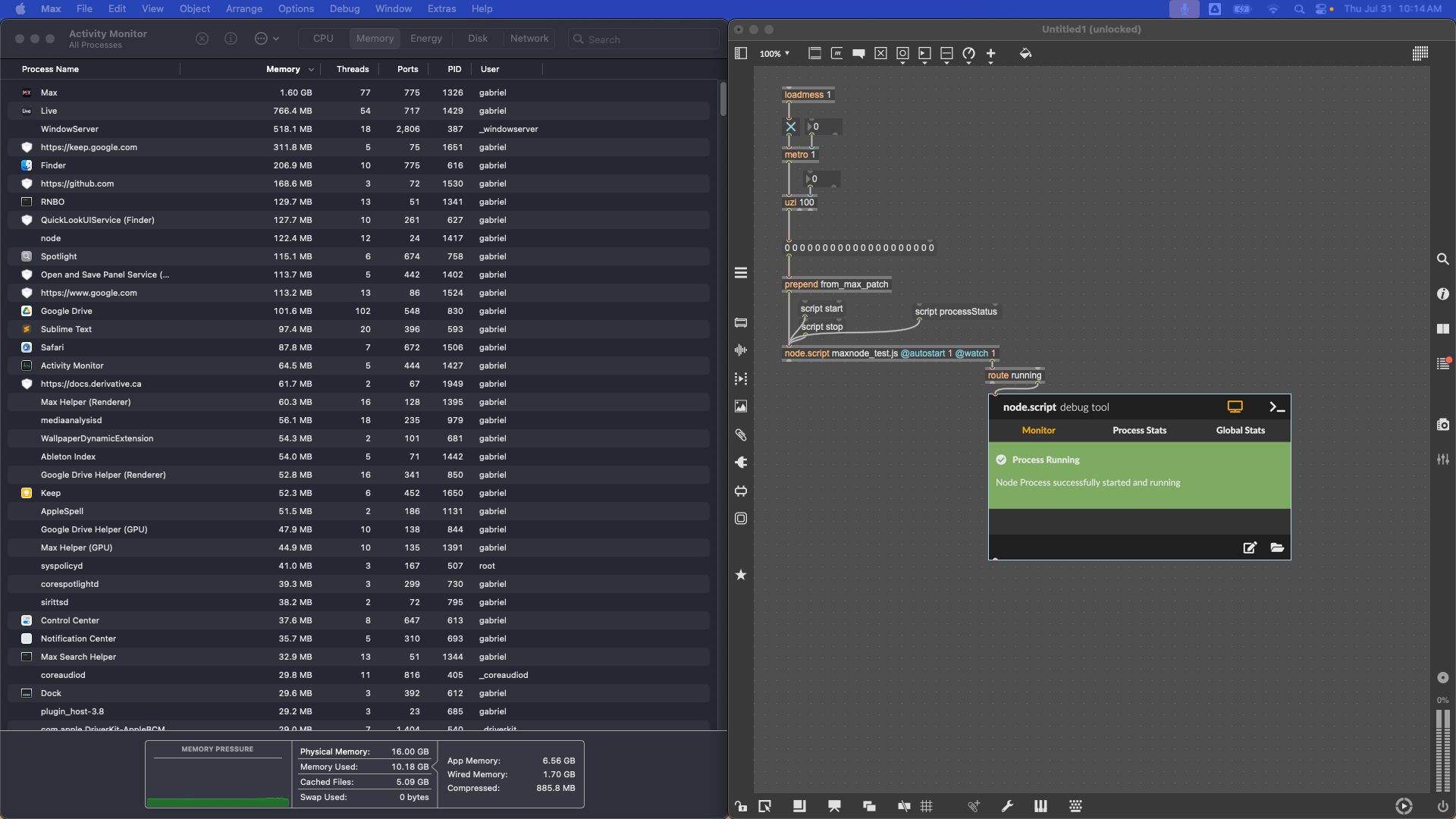

Here are a few screenshots to illustrate the memory increase I’ve been observing:

@DAN

I'm currently running another test patch where messages are sent at a much lower frequency to the same script. It’s roughly simulating a situation where 21 DMX universes (each with 512 values) are sent 44 times per second. This test is also way more gentle on the CPU.
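To put that load in numbers, here is a quick sketch of the throughput it implies. It assumes one message per universe per frame, which is a guess about how the patch groups the data, and `throughput` is only an illustrative name.

```javascript
// Throughput implied by N DMX universes refreshed at a fixed frame rate.
// Assumption: each universe is sent as its own message once per frame.

function throughput(universes, valuesPerUniverse, framesPerSecond) {
  const valuesPerFrame = universes * valuesPerUniverse;
  return {
    valuesPerFrame,
    valuesPerSecond: valuesPerFrame * framesPerSecond,
    messagesPerSecond: universes * framesPerSecond,
  };
}

console.log(throughput(21, 512, 44));
// { valuesPerFrame: 10752, valuesPerSecond: 473088, messagesPerSecond: 924 }
```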

The memory growth is happening much more slowly, but it still seems to be increasing over time.

My concern is that the growth appears to be unbounded, and that its rate is simply proportional to the message frequency and size being sent to node.script.

I'm going to run that test for a few more hours and post the result here.

Ah apologies, I thought you were referring specifically to the separate Node process rather than Max's process.

This is rather strange. I haven't paid too much attention to Max's memory usage while using N4M (probably should have...), but I will revisit my project to see if I experience the same issue (I send similar continuous messages at about 20 Hz). Perhaps there is something odd going on with the message serialisation in the node.script.mxo external. It will be interesting to see your result after a few hours of continuous throughput.

Edit: I ran this through macOS Instruments and can see thousands of persistent malloc allocations (144 bytes, 80 bytes, etc.) in Max's process that correlate with the message sending. Functions like hashtab_storeflags and dictionary_appendatom_flags are being called, but the memory doesn't seem to get freed. Not sure if this is expected behaviour or a leak, but thought it might be useful data for the devs to see.

Finally had time to finish these tests. These were done on a Mac M2 using Max 9.0.7, with a slightly modified version of the first patch I posted to reduce the message rate sent to [node.script]. Still no processing on the Node side.

I ran two scenarios:

Test #1

20 universes, each with 512 values

~44Hz message rate

Memory growth of approximately 135 MB/hour

Time: Memory usage

0 hrs: 260 MB

2 hrs: 520 MB

6 hrs: 1.04 GB

11 hrs: 1.75 GB

Test #2

50 universes, each with 512 values

~44Hz message rate

Memory growth of about 250 MB/hour

Time: Memory usage

0 hrs: 271 MB

2 hrs: 780 MB

6 hrs: 1.47 GB

11 hrs: 3.10 GB
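As a cross-check on the hourly figures, the average growth can be recomputed from the first and last samples of each test. This is only a sketch: it treats 1 GB as 1000 MB, and the function name is illustrative.

```javascript
// Average memory growth (MB/hour) between the first and last sample.
// Samples are [hours, megabytes]; GB values are converted assuming 1 GB = 1000 MB.

const test1 = [[0, 260], [2, 520], [6, 1040], [11, 1750]];
const test2 = [[0, 271], [2, 780], [6, 1470], [11, 3100]];

function averageGrowth(samples) {
  const [startHours, startMB] = samples[0];
  const [endHours, endMB] = samples[samples.length - 1];
  return (endMB - startMB) / (endHours - startHours);
}

console.log(averageGrowth(test1).toFixed(1)); // 135.5
console.log(averageGrowth(test2).toFixed(1)); // 257.2
```

Both averages agree with the approximate rates quoted for each test.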

These results seem to confirm what I observed initially.

What originally led me to investigate this was performance degradation in more complex patches running over time. In those situations, the memory growth seems to correlate with that slowdown.

As Dan mentioned earlier, it would be helpful to know whether this is expected behaviour or if it points to something like a memory leak.

The content of the Node script:

const Max = require('max-api');

Max.addHandler('from_max_patch', (...data) => {
  // No processing, just receiving data
});

For anyone reading this in the future: I ended up contacting support, and they were able to reproduce the memory growth. A bug report was created for their engineering team.