Creating Videosync Plug-Ins in Max for Live

Showsync’s Videosync application (currently Mac-only, though a Windows version is said to be in development) caught my attention initially for the same reason it’d interest many of you: it lets me tightly integrate reactive live video with my Live Suite. The software just “made sense” to me because it followed the way Live itself handles workflow: its video effects controls looked a lot like the Racks and Group Tracks I was already trying to get into the habit of using (and extending with Max for Live devices I’d already created). The downloadable demo was fully functional, so I could find out whether it really was easy to use and set up, with straight-ahead Syphon output support, and so on.

And the final kicker: There was a Plug-in SDK.

You read that right: I could develop video plug-ins using Max for Live. So I decided to download and install the demo, and to try creating video plug-ins.

For starters, I downloaded and installed Videosync from the Showsync website. Next, I grabbed a copy of their plug-in SDK from the website, installed it, and added the SDK’s location to Max’s search path as described on Videosync’s setup page.

I started at the beginning, opened the very first tutorial page (your first plug-in), and ran through the tutorial. It was well laid out and quite easy to follow:

Make and rename a copy of the sample plug-in file.

Edit the Max for Live device’s patcher file to load a shader file with another name.

Open the shader file in a text editor and modify it to suit your needs. (In the tutorial, the shader code was modified to add a new parameter declaration to the device and to slightly change the behavior of existing parameters by editing a few lines of code.)

Edit the Max for Live device’s patcher file to add the parameter to the patch. That involved nothing more than copying the patching associated with an existing parameter and using the Inspector for a number box to set the default value for the parameter.

Save and close the patcher, and presto! A new parameter.

I was a little surprised that it was that simple. I’d expected a whole lot of exotic programming, not the kind of Max for Live creating and patching I was already pretty familiar with.

My First Real Shader

I thought I’d create a Videosync plug-in of my own by finding a shader that I really liked and creating a plug-in that ported the shader code.

I often prepare for something I think is going to be time-consuming and complex by identifying the “fun” parts of the task and doing them first, to fortify myself for the work to come. In this case, the fun part was finding the shader I wanted to build my plug-in around. On the heels of the announcement of Vidvox's ISF shader format, I checked out their package and, after a little googling, found a wealth of material on the ISF format: Vidvox’s own set of standard shader examples, Joseph Fiola’s “shader a week” set of tutorials on GitHub, and (my personal favorite) a huge listing of user-contributed ISF shaders that you could browse by category, popularity, and order of submission.

So I spent a few… okay, I spent a couple of hours browsing the shaders on display. The website showed each shader in action and let you explore its parameters in a nice interface where you could click and drag a set of on-screen faders (or link a parameter to an LFO) to see what each one did to the image. After a whole lot of fun, I settled on a shader I just loved to watch, and one I’d love to have as part of my Videosync plug-ins: ColorDiffusionFlow.

I clicked on the download link and grabbed a copy of the shader file, ColorDiffusionFlow.fs.

My plan was to begin by repeating what I’d learned from the Videosync SDK tutorial: I’d copy a simple existing plug-in from their examples, and then modify the Max for Live patch to make use of the shader. In my case, instead of modifying an existing parameter, I’d be creating the patching to support all ten of the parameters in the ColorDiffusionFlow shader (rate1/rate2, loopcycle, color1/color2, cycle1/cycle2, nudge, and depthX/depthY).

Since that first shader tutorial covered how to change the plug-in patch to load a new shader and showed how to add a parameter, I had a good idea of what the process would look like.

I got to work by making a copy of the Lines plug-in folder and renaming it to match my new plug-in: CDFlow. I dropped the ColorDiffusionFlow.fs file I’d downloaded into the new folder, and renamed the Lines.amxd file to CDFlow.amxd.

Now, it’s time to load my plug-in and get to work on porting the shader.

Videosync plug-ins look and act just like the Max for Live devices you already know; the difference is that they’re video devices. To add one to your Ableton Live set, navigate to the folder containing the Videosync plug-in you just created by copying, and drag the CDFlow.amxd device into the Effects area of a MIDI channel. When you do, you’ll see a plug-in front panel with two parameters (the ones the Lines.amxd file you copied originally had; we’ll fix that), and the Videosync display window will appear. The display window is purple because we still need to load the shader file and assign its parameters.

Click the CDFlow plug-in’s Edit button to open the patcher, then click the Patching Mode icon on the bottom toolbar to switch from Presentation to Patching mode.

Here’s the Videosync plug-in in its initial state:

The patcher has two basic parts. To the left, an abstraction called videoDevice passes your messages to Videosync, which does all the heavy lifting of loading the shader code used in the plug-in. You’ll notice that the message box directly below the videoDevice abstraction contains the name of the shader file that the original example device loaded: Lines. All we need to do to change the shader our plug-in loads is to change the message box contents to name our new shader, ColorDiffusionFlow.

That part’s all set. The file ColorDiffusionFlow.fs will now automatically be loaded as part of our plug-in.

The rest of the patcher to the right is concerned with the plug-in parameters themselves. Again, the patch displays the original pair of parameters associated with the Lines example plug-in that handled rotation (radians) and line density (density). We’ll need to get rid of these parameters and add the ones our shader uses.

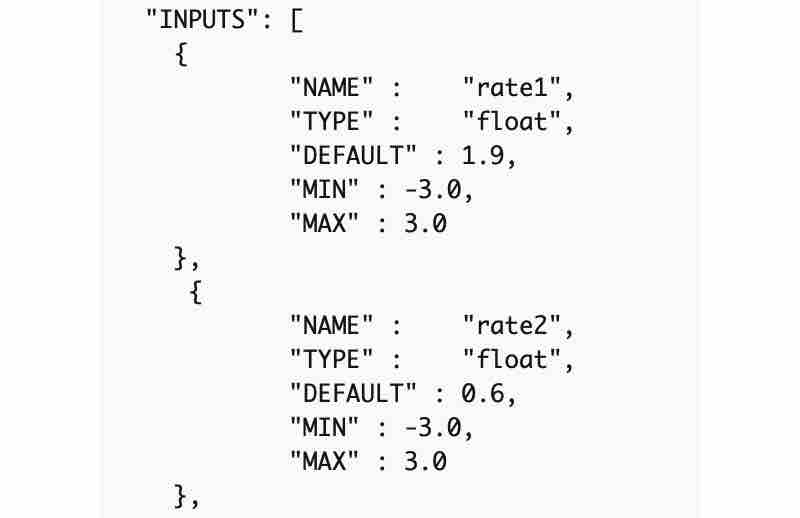

To get a look at the shader’s new parameters, we can open the ColorDiffusionFlow.fs shader file in a text editor. In the shader file, all the parameters we’ll need to set are right at the top of the file in the “INPUTS” section.

That portion of the shader code in the file tells us everything we need to know to set up our plug-in, and also provides some helpful data for good plug-in design. There’s an entry for each parameter that gives us the parameter name, the data type it uses, and both the default value for the parameter and its minimum and maximum range values. Here’s an example portion of the code:

Looking at this, we know we’ll have two parameters with similar names (rate1 and rate2) that share the same minimum and maximum values (-3.0 to 3.0). We also have default settings for both parameters (1.9 and 0.6, respectively).
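ISF files keep this metadata as a JSON object inside a comment block at the very top of the .fs file, so the numbers can also be pulled out programmatically instead of read by eye. The Python sketch below is only an illustration: the sample header is a hypothetical excerpt containing just the rate1 and rate2 values quoted above, and `read_isf_inputs` is a made-up helper, not part of the Videosync SDK.

```python
import json

# Hypothetical excerpt of an ISF header: only the rate1/rate2 values quoted in the text.
SAMPLE_FS = """/*{
    "INPUTS": [
        {"NAME": "rate1", "TYPE": "float", "DEFAULT": 1.9, "MIN": -3.0, "MAX": 3.0},
        {"NAME": "rate2", "TYPE": "float", "DEFAULT": 0.6, "MIN": -3.0, "MAX": 3.0}
    ]
}*/
void main() { }
"""


def read_isf_inputs(source):
    """Return {name: (default, min, max)} from an ISF shader's JSON header."""
    # The ISF metadata is the JSON object inside the first /* ... */ comment.
    start = source.index("/*") + 2
    end = source.index("*/", start)
    header = json.loads(source[start:end])
    params = {}
    for entry in header.get("INPUTS", []):
        params[entry["NAME"]] = (entry.get("DEFAULT"), entry.get("MIN"), entry.get("MAX"))
    return params


if __name__ == "__main__":
    for name, (default, lo, hi) in read_isf_inputs(SAMPLE_FS).items():
        # These become the Range/Enum and Initial values for each live.numbox.
        print(f"{name}: range {lo} {hi}, initial {default}")
```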

All we need to do to finish our shader port is add a live.numbox object to display each parameter’s value, connect it to a prepend object whose argument is the name of the parameter we want to set, and send the result to the videoDevice isf 1 abstraction.
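In Max terms, each live.numbox to prepend chain turns a bare number into a “name value” message. Here is a rough Python model of that formatting, treating Max messages as space-separated strings (an assumption for illustration; `prepend` and `initial_messages` are hypothetical helpers, not Max APIs):

```python
def prepend(name, value):
    """Model of a [prepend name] object: put the parameter name in front of the value."""
    return f"{name} {value}"


def initial_messages(defaults):
    """One 'name value' message per parameter, as the numbox -> prepend chains would send."""
    return [prepend(name, value) for name, value in defaults.items()]


# The rate1/rate2 defaults quoted from the shader's INPUTS section.
print(initial_messages({"rate1": 1.9, "rate2": 0.6}))  # ['rate1 1.9', 'rate2 0.6']
```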

Good Max patching design often uses the Max Inspector to set things like default values and minimum and maximum ranges for both display and output. The INPUTS section of our shader code gives us exactly the information we need: all we have to do is add a live.numbox for every parameter, select the object, and set the parameter’s default value and value range.

We do this by using the Inspector. The following example shows how we set up the rate1 parameter for our shader. We add a live.numbox object and select it, and then click on the Inspector icon in the right-hand toolbar to display the object Inspector.

To set the live.numbox object’s range, double-click in the data column for the Range/Enum attribute and type in the value range pair (-3. 3.). To set the parameter’s default value, make sure the Initial Enable checkbox is checked, then double-click in the data column for the Initial attribute and type in the default value you want, as shown:

Once we’ve added the first parameter, save the patch without closing it, and you’ll notice that the Videosync display window suddenly displays the shader. It’s working!

The nice thing about adding the parameter ranges is that we can’t scroll the live.numbox object values out of parameter range as we play with the plug-in - that’s good design.
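The clamping behavior described above can be modeled in a few lines. This toy Python sketch (the `NumBox` class is hypothetical, not a Max or Live API) shows how a Range of -3. to 3. keeps a dragged value inside the parameter’s limits:

```python
class NumBox:
    """Toy model of a live.numbox with a Range and an Initial value (hypothetical class)."""

    def __init__(self, lo, hi, initial):
        self.lo, self.hi = lo, hi
        self.value = self._clamp(initial)  # clamped here too, in this model

    def _clamp(self, x):
        return max(self.lo, min(self.hi, x))

    def drag(self, delta):
        """Scrolling changes the value, but never past the range limits."""
        self.value = self._clamp(self.value + delta)
        return self.value


box = NumBox(-3.0, 3.0, 1.9)  # rate1: Range -3. 3., Initial 1.9
print(box.drag(5.0))    # 3.0  (stops at the maximum)
print(box.drag(-10.0))  # -3.0 (stops at the minimum)
```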

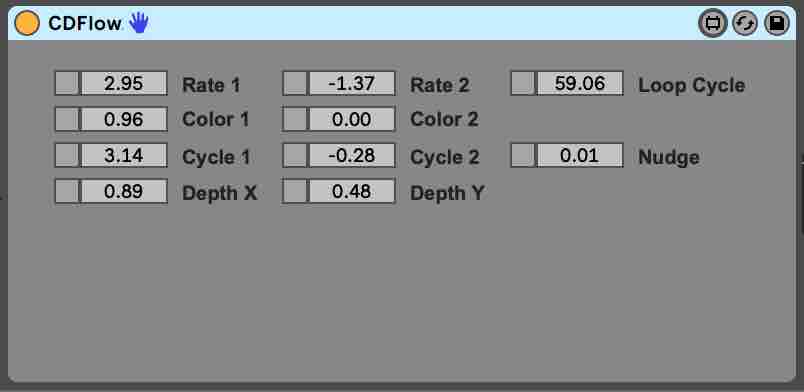

The rest of the work of creating the plug-in involves repeating that process for the other nine shader parameters: add a live.numbox, get the default and range values from the shader file, set them in the Inspector, and then add a prepend object whose argument is the corresponding parameter name. Here’s what we’ll have when the work is done:

Finally, select all of the live.numbox objects and the comment objects for the parameters we’ve created and choose Object > Add to Presentation to add them to the presentation mode. Once that's done, click on the Presentation Mode icon on the bottom toolbar to enable presentation mode, and arrange the live.numbox objects and labels to serve as the user interface for the plug-in.

When that's done, we’ll have a user interface that looks like this when the plug-in is saved and closed. That’s it. We now have a finished Videosync plug-in that warps and slides beautifully as a part of a Live session.

Flow Control - Controlling A Plug-In with MIDI

I can certainly take my lovely CDFlow.amxd Videosync plug-in and use it as a layer in a normal Videosync instrument, controlling its triggering and display by setting properties as part of a series of plug-ins. As I worked my way through the Videosync tutorials, it became clear that I’d most likely use it as the first plug-in in the sequence, treating it as the generative “background” for my set. Since the plug-in slides and tumbles beautifully with no more input than an initial set of parameters, that would be just fine.

But my own aesthetic with respect to generative structures is to keep things very simple, and to take pleasure from the more minimal interactions I could use to roil or stir or recolor the flow. With that in mind, I thought that I’d follow up on the success of my first Videosync plug-in by creating a second plug-in – a sort of partner to CDFlow.amxd that could use MIDI data from my Live session to control the plug-in’s parameters.

As I mentioned earlier, I like to get myself in the mood for work by having a little fun first, and I did it again here: I created a Live session whose MIDI tracks I could use to control the Videosync plug-in. I fired up one of the step sequencer patches I had sitting around from a recent book project I did for Cycling ’74, grabbed some of the lovely free instruments in my Live Suite, and in a few pleasant minutes had something to my liking whose separate MIDI channels ran at different rates.

I figured that these “terraced” sequences would make it easy to check that my Videosync controller plug-in was working. I now had a couple of MIDI channels I could use as source material for modulating my CDFlow.amxd plug-in parameters. Time to get to work on my CDFlow MIDI effect plug-in!

I started by grabbing a Max for Live MIDI effect template from my Live application’s browser and installing it on a MIDI channel by dragging it to the MIDI Effects area of the Device View, then clicking the plug-in’s Edit button to display my patch. The Max for Live MIDI effect patch, like its audio effect and MIDI instrument cousins, is really simple: one Max midiin object connected to a midiout object:

As the patch suggests, you can put whatever you want between the two objects. But if you keep that direct connection and do your patching alongside the pair, you’ll have a MIDI effect that you can “stack,” since any MIDI input is passed through in addition to whatever processing you do. That’s what we’re going to do here.

The CDFlow companion plug-in patch begins with some simple patching: a midiparse object to filter out all MIDI messages except note on/note off (note number and velocity), followed by a stripnote object that removes all the MIDI note-off (velocity 0) messages. The result is a stream of two values between 0 and 127 (note number and velocity) for each MIDI note played:
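To make the filtering concrete, here is a rough Python model of the midiparse to stripnote chain. It is a sketch under simplifying assumptions: each MIDI message arrives as a complete three-value tuple (no running status), and note-off messages (status 0x80) are reported with velocity 0 so that they look like the velocity-0 note-ons that stripnote discards. The function names are hypothetical.

```python
def midiparse_notes(messages):
    """Keep only note-on/note-off messages, as (pitch, velocity) pairs.

    Assumes every message is a complete (status, data1, data2) tuple.
    Note-offs (0x80) are reported with velocity 0; everything else
    (control change, pitch bend, ...) is dropped."""
    pairs = []
    for status, data1, data2 in messages:
        kind = status & 0xF0  # upper nibble = message type, lower nibble = channel
        if kind == 0x90:
            pairs.append((data1, data2))
        elif kind == 0x80:
            pairs.append((data1, 0))
    return pairs


def stripnote(pairs):
    """Drop note-offs (velocity 0), like Max's stripnote object."""
    return [(pitch, velocity) for pitch, velocity in pairs if velocity != 0]


incoming = [
    (0x90, 60, 100),  # note on
    (0xB0, 1, 64),    # control change: filtered out
    (0x80, 60, 0),    # note off
    (0x90, 64, 0),    # note on with velocity 0 (a note-off in disguise)
    (0x90, 67, 90),   # note on
]
print(stripnote(midiparse_notes(incoming)))  # [(60, 100), (67, 90)]
```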

Next, we'll add a pair of scale objects (one for note numbers, one for velocities) that let us set a different input range for each kind of MIDI data and then scale the result to a consistent 0.–1.0 output range.

I’m choosing that consistent output range because 0.–1.0 is the normal range for VST plug-in parameters, which means we can reuse this bit of patching in other places. (This is a really good patching habit to develop: always be thinking about how you can reuse your patches as you work.) Scaling the input MIDI range lets us do a couple of useful things:

We can set our input scaling to match a specific range of MIDI notes, so that we only grab one pitch range from a more complex MIDI track.

We can take a MIDI track whose note events fall in a narrow range and “pad” the low and high ends of the input range so that the outputs land in a certain narrow range (the “interesting” range of one of the CDFlow plug-in’s shader parameters, for example).

Here’s an example of what I mean:

Here’s what we have so far.
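The arithmetic behind the scale objects is a straight linear map. The Python sketch below (the function name and the example note ranges are illustrative assumptions, not values from the session) shows both uses: mapping a chosen pitch range onto 0.–1.0, and “padding” the input range so that a narrow band of notes lands in a narrow band of outputs. This sketch does not clip, so notes outside the input range produce values outside 0.–1.0.

```python
def scale(x, in_lo, in_hi, out_lo=0.0, out_hi=1.0):
    """Linear map in the spirit of [scale in_lo in_hi out_lo out_hi] (no clipping)."""
    return out_lo + (x - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


# 1) Map a two-octave pitch range (notes 48..72) onto 0..1.
print(scale(60, 48, 72))  # 0.5

# 2) A track whose notes only span 60..64, padded to an input range of 52..72,
#    so its output stays in a narrow band around the middle.
print(scale(60, 52, 72), scale(64, 52, 72))  # 0.4 0.6
```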

The next question concerns how we send those values to our CDFlow.amxd plug-in.

Actually, that part is going to be pretty easy: all we’ll need is a send/receive pair for each of the ten parameters the shader program uses. For convenience, we'll use the same parameter names the shader code uses (rate1/rate2, loopcycle, color1/color2, cycle1/cycle2, nudge, and depthX/depthY). Choosing the send destination for the MIDI note and velocity information involves two parts:

We've added a live.menu object whose contents are the word “off,” followed by a list of the plug-in parameter names. When we choose a menu item, its position (sent out the left outlet of the live.menu object) is combined with the scaled MIDI note or velocity value by a pak object, producing a list that consists of the menu item number (an integer) followed by the floating-point value.
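The choice of pak over its better-known cousin pack matters here: pack only sends its list when its left inlet receives something, while pak sends whenever any inlet does, so a new note value goes out even if the menu selection hasn't changed. A toy Python model (the `Pak` class is a hypothetical illustration, not a Max API):

```python
class Pak:
    """Toy model of Max's pak: remembers the latest value at each inlet and
    outputs the whole list whenever ANY inlet receives input."""

    def __init__(self, *initial):
        self.slots = list(initial)

    def inlet(self, index, value):
        self.slots[index] = value
        return list(self.slots)


p = Pak(0, 0.0)
print(p.inlet(0, 1))     # menu item 1 chosen     -> [1, 0.0]
print(p.inlet(1, 0.42))  # new scaled note value  -> [1, 0.42]
```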

The resulting list is sent to a subpatcher that handles routing the parameter data to the CDFlow plug-in: p CDFlow_destinations.

The subpatcher contents are simple: a route object looks for the menu item number, strips it off, and sends the floating-point value to a send object whose destination matches the name of one of the CDFlow.amxd plug-in’s parameters. Since 0 isn’t one of the route object’s arguments, nothing is sent when “off” is selected in the live.menu object:
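Here is the same routing logic as a Python sketch. The menu order below (“off” followed by the ten parameter names in the order listed earlier) is an assumption for illustration, and `route_to_destination` is a hypothetical helper; in the patch itself, the work is done by the route object and the send objects.

```python
# Assumed menu order: "off" first, then the ten shader parameter names.
MENU = ["off", "rate1", "rate2", "loopcycle", "color1", "color2",
        "cycle1", "cycle2", "nudge", "depthX", "depthY"]


def route_to_destination(message, menu=MENU):
    """Model of p CDFlow_destinations: [route 1 2 ... 10] feeding one [send name] per outlet.

    Returns (destination, value), or None when "off" (index 0) is selected,
    because 0 is not one of the route arguments and nothing is sent."""
    index, value = message
    if index == 0 or index >= len(menu):
        return None
    return (menu[index], value)


print(route_to_destination([1, 0.42]))  # ('rate1', 0.42)
print(route_to_destination([0, 0.42]))  # None
```

Pointing the mapper at a different Videosync plug-in then amounts to passing a different list of destination names, which mirrors the reuse argument for keeping the send objects in their own subpatcher.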

Segmenting the patch this way means that the only part specific to the CDFlow.amxd plug-in is the set of send objects in the p CDFlow_destinations subpatcher. If we ever want to reuse this plug-in with another Videosync plug-in, all we’ll need to do is change the send objects’ destination names in that subpatcher. Here’s what the final patch logic looks like:

This is looking good. There's a bit of subtle housekeeping we'll need to do next.

Ableton has released an incredibly helpful set of production guidelines for creating Max for Live devices. Don't let the term "production guidelines" frighten you off - their document is the motherlode when it comes to describing the basic issues you're going to encounter when developing plug-ins, and their suggestions for how to resolve them. Grab yourself a copy of this document right now, and silently bless the folks at Ableton for their hard work!

Reading through the guidelines, I realized that the MIDI note and velocity live.numbox objects were going to pollute the Live application's Undo stack pretty quickly. Happily, the workaround was right in the document: all we need to do is select the live.numbox objects and set their Parameter Visibility attribute to Hidden in the object Inspector. Problem solved. Thanks, Ableton!

To finish up, we'll need to add some labels for the parameters for the UI and then select all of the live.numbox and live.menu objects and the labels and choose Object > Add to Presentation to add them to the presentation mode. Once we’ve done that, click on the Presentation Mode icon on the bottom toolbar to enable Presentation mode, and arrange the number boxes and labels to serve as the user interface for the plug-in.

We’ll also need to set the device so that the user interface is displayed instead of all the patchcords. To do this, choose View > Patcher Inspector to open the patcher Inspector Window, scroll to the bottom, and click on the checkbox for the Open In Presentation setting.

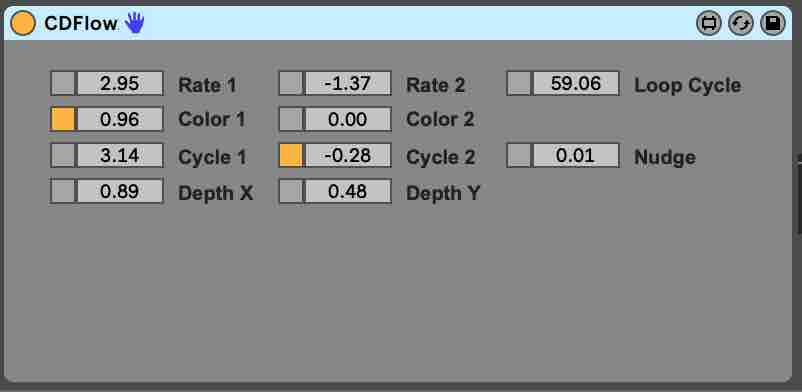

When saved and closed, here’s what the final result for the MIDI effect plug-in looks like:

Save the plug-in (which I named CDFlow_MIDI_mapper) in the set’s Project folder so that Live can easily find it when loading this set.

Learning To Stir the Flow

There’s one thing left to do: patch together the logic that controls how the CDFlow plug-in responds when we send input data to the Videosync plug-in. That means modifying the original CDFlow plug-in. The modified plug-in will do everything the original did, but will also be able to receive data from the CDFlow_MIDI_mapper MIDI effect plug-in and scale that 0.–1.0 data to match the range of each shader parameter.

Remember how we treated the patching for the original CDFlow.amxd plug-in as ten versions of the same patching logic (one for each shader parameter)? I’ll show the changes to the CDFlow.amxd plug-in’s patching the same way, using the rate1 parameter as my example. Once again, here’s the original version of the CDFlow.amxd patching:

The CDFlow_MIDI_mapper MIDI effect patch includes send objects whose destinations correspond to each of the shader’s parameters (the same names that appear as arguments to the prepend objects in the CDFlow.amxd patch).

We’ll need to add a receive object for each parameter that references the shader parameter name using an argument (in this case, it would be r rate1).

We also know that all of our input data falls in the same range: 0.–1.0 floating-point values. However, that range doesn’t match what the shader code expects. Remember how we made the user interface easier to use by looking at the INPUTS section of the ColorDiffusionFlow.fs shader file and entering minimum and maximum values for each parameter’s live.numbox? We can use those same numbers as arguments to a scale object to convert the input data to what the shader expects (in our example, scale 0. 1. -3. 3.).
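The receive to scale step amounts to one line of arithmetic per parameter. A Python sketch, assuming the rate1/rate2 ranges quoted from the INPUTS section (the other eight ranges would come from the same place; `to_shader_range` is a hypothetical name):

```python
# Ranges copied from the shader's INPUTS section (only the two quoted in the text).
RANGES = {"rate1": (-3.0, 3.0), "rate2": (-3.0, 3.0)}


def to_shader_range(name, normalized, ranges=RANGES):
    """Model of [r name] -> [scale 0. 1. lo hi]: map a 0..1 value to the parameter's range."""
    lo, hi = ranges[name]
    return lo + normalized * (hi - lo)


print(to_shader_range("rate1", 0.0), to_shader_range("rate1", 0.5), to_shader_range("rate1", 1.0))
# -3.0 0.0 3.0
```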

So you might expect that all you need to do is to add a receive object for every parameter, connect it to a scale object whose ranges match those of the live.numbox associated with the parameter, and then connect the scale object up to the live.numbox, right?

Well, not exactly. Patching it this way brings back the same kind of problem we dealt with in our CDFlow_MIDI_mapping plug-in: user interface objects and the Live application’s Undo stack.

The problem is a simple one: If we connect any receive/scale object pairs to one of the live.numbox objects, the Live application’s Undo stack will grow every time a new value is received and displayed. So that receive > scale object > live.numbox connection is something we’ll need to avoid.

We're going with a simple solution to this problem – since we're not really all that concerned about seeing the received data, we’ll just add some patching logic that lets us choose to manually update shader parameters, or to enable a remote update that disconnects the parameter display. You may have a different idea about that solution, of course.

Here’s what the result looks like for the rate1 parameter. When toggled on, input from a CDFlow_MIDI_mapper MIDI effect will be appropriately scaled to set the shader parameter (although the live.numbox won’t be updated). When the toggle is off, we can change the live.numbox values to our heart’s content.
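One way to think about the toggle is as a switch that picks which source drives the shader. The Python sketch below models that under stated assumptions (the `RemoteGate` class is hypothetical, and the actual patch may route things differently): with the toggle on, received values are scaled and sent straight to the shader without touching the live.numbox, so nothing lands on Live's Undo stack; with it off, only the live.numbox drives the parameter.

```python
class RemoteGate:
    """Hypothetical model of one parameter's patching in the modified CDFlow device."""

    def __init__(self, name, lo, hi, initial):
        self.name, self.lo, self.hi = name, lo, hi
        self.remote = False    # state of the toggle
        self.numbox = initial  # what the live.numbox displays
        self.sent = []         # messages that reached the shader

    def receive(self, normalized):
        """Input from [r name]: only used when the toggle is on."""
        if self.remote:
            value = self.lo + normalized * (self.hi - self.lo)
            self.sent.append((self.name, value))  # the numbox is NOT updated

    def set_numbox(self, value):
        """Manual edits: clamp to the range; drive the shader only when the toggle is off."""
        self.numbox = max(self.lo, min(self.hi, value))
        if not self.remote:
            self.sent.append((self.name, self.numbox))


gate = RemoteGate("rate1", -3.0, 3.0, 1.9)
gate.receive(0.5)   # toggle off: ignored
gate.remote = True
gate.receive(0.5)   # toggle on: scaled to 0.0 and sent
print(gate.sent, gate.numbox)  # [('rate1', 0.0)] 1.9
```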

Once again, Ableton's production guidelines to the rescue!

As we did with the original CDFlow plug-in, we do the same patching for each of the other parameters associated with our shader. Here’s what the finished result looks like:

We finish the plug-in as before - by selecting all of the live.numbox and live.menu objects and the labels and choosing Object > Add to Presentation to add them to the presentation mode. Once that's done, click on the Presentation Mode icon on the bottom toolbar to enable Presentation mode, and arrange the number boxes and labels to serve as the user interface for the plug-in.

When saved and closed, here’s what the new and improved CDFlow plug-in looks like:

Using the Plug-In Pair

Now it’s time to watch and listen. Here's how I set things up to use the plug-in pair.

Since I intended to use MIDI output to control my CDFlow plug-in, and knew I could use input scaling to change the nature of the output values, I decided to inspect the individual MIDI clips in the Arrangement view. There I could check the actual MIDI note range, and mouse over the velocity information to get an idea of each clip’s velocity range.

Once I’d done that and considered whether or how to adjust the MIDI inputs to create an output range I liked, I simply set the mapping ranges for the MIDI notes and the velocity values using the CDFlow_MIDI_mapping M4L device’s front panel. To enable data transfer to the CDFlow plug-in, I selected my destination from the live.menu items.
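Reading the note and velocity ranges off a clip and turning them into scale input ranges is simple bookkeeping. The Python sketch below assumes you've jotted down a clip's notes as (pitch, velocity) pairs; the sample notes and the `input_ranges` helper are hypothetical, and the optional padding widens each range (clamped to MIDI's 0–127) so the clip's actual values are squeezed toward the middle of the 0.–1.0 output.

```python
def input_ranges(notes, pad=0):
    """Suggest (pitch_range, velocity_range) scale inputs from (pitch, velocity) pairs."""
    pitches = [p for p, _ in notes]
    velocities = [v for _, v in notes]

    def widen(lo, hi):
        # Padding pushes the clip's real values toward the middle of the output range.
        return (max(0, lo - pad), min(127, hi + pad))

    return widen(min(pitches), max(pitches)), widen(min(velocities), max(velocities))


clip = [(60, 80), (64, 100), (62, 90)]  # hypothetical clip contents
print(input_ranges(clip))         # ((60, 64), (80, 100))
print(input_ranges(clip, pad=8))  # ((52, 72), (72, 108))
```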

To have the CDFlow plug-in receive the data my CDFlow_MIDI_mapping MIDI effect was sending, all I had to do was click the toggles for the color1 and cycle2 parameters.

After that, all I had to do was start my Live session playing and watch the fun.

The Project file you downloaded contains a complete Live session that includes both the CDFlow.amxd and CDFlow_MIDI_mapping.amxd plug-ins I've created in this tutorial, with several instances of the CDFlow_MIDI_mapping.amxd plug-in spread across several MIDI channels to modify the ColorDiffusionFlow shader's parameters. (I also added a Videosync feedback effect that smooths the transitions very slightly, in a way I thought was beautiful and subtle.)

Here's an example of my session in action:

And For My Last Trick....

I was really happy with the results of my plug-in development adventure, but I decided to push on, and I think it's appropriate to end this tutorial with that next step.

While I was really happy with my MIDI-reactive plug-in pair, I wasn't making much use of Videosync's built-in plug-ins and functionality. So, after a bunch of tutorial reading and woodshedding, here is another Max for Live Videosync project that adds a Videosync rack and some track grouping to take things to the next level.

Enjoy!

by Gregory Taylor on October 15, 2019