Rainy Day Max: Return to the Forbidden Planet

I’m not sure why, but it seems like lots of beginning Max users think that the only way to do anything cool with Max includes hours of meditation and days of careful patching. While that’s sometimes true, there are a lot of useful and entertaining things you can come up with on a grey and rainy afternoon. Often, it starts with just playing around and having fun, combined with the kind of basic background understanding of how Max works that a little quality tutorial time will give you.

Here’s a rainy-day afternoon hack that sounds a lot more complicated than it was to do. It all began with playing around with an old favorite Max patch of mine – Forbidden Planet.

The patch has been around a long, long time as an example of how to do convolution filtering in MSP using the pfft~ object – it was even turned into a Pluggo plug-in back in the day. The original Convolution Brothers patch was further hacked into the pfft~ form we have today by Richard Dudas (or xoax, as he is known among Max aficionados; you owe him a drink, at the very least).

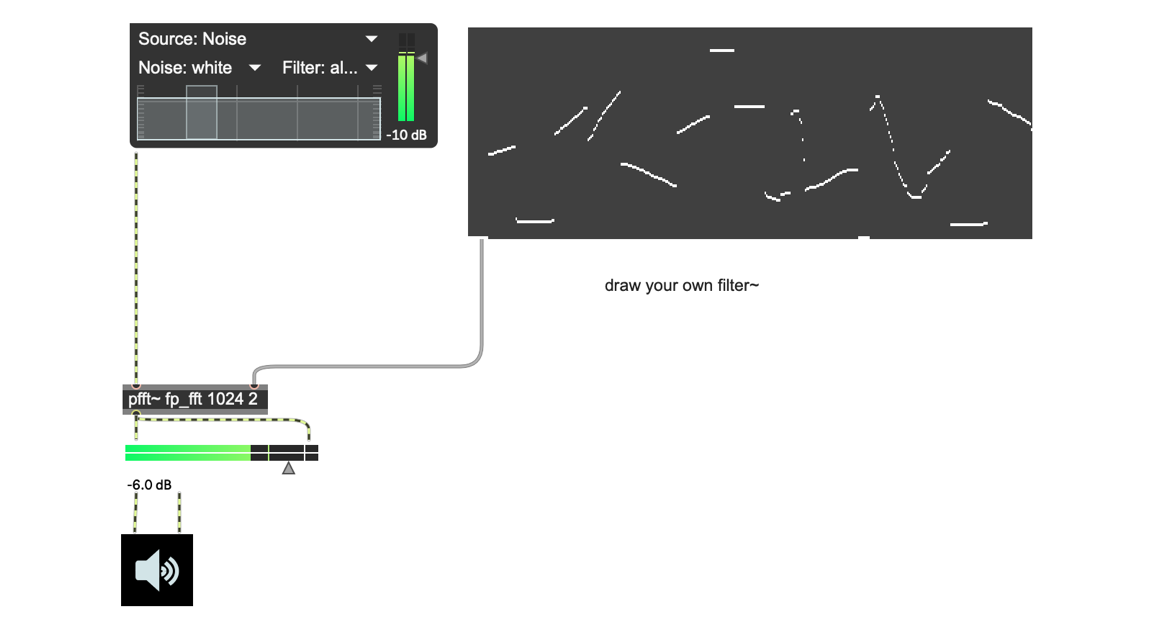

The idea behind the original patch is simple and elegant: using a multislider object, you can draw a contour for a 512-step convolution filter which gets applied to your input. It’s a lot of fun (I’ve included a copy of the patch and the abstraction the pfft~ object needs - fp_fft.maxpat in the download package).

I was drawing filter curves to compete with the sound of the rain outside my window when I suddenly thought to myself “There’s no real reason that I have to be the one drawing those filter curves. What if…”

And that’s how it began.

In these moments, I tend to follow the “What if…” question by running through my usual approaches to generating and organizing variety – stuff like using random values or a drunkard’s walk to generate the list of values, using LFO outputs to write the list of input values (as I tend to love to do), and that sort of thing. In this case, I started to wonder about using Jitter as a source.
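For readers who think more easily in text than in patch cords, here is a minimal sketch of the drunkard's walk idea in Python. It is not taken from the patch: the function name, the step size, and the starting value are all assumptions chosen for illustration. It simply produces 512 values in the 0.0 to 1.0 range that a filter curve could use.

```python
import random


def drunk_walk(length=512, step=0.05, start=0.5, seed=None):
    """Return `length` floats in [0.0, 1.0] from a bounded random walk.

    `step` and `start` are illustrative defaults, not values from the patch.
    """
    rng = random.Random(seed)
    value = start
    values = []
    for _ in range(length):
        # Wander up or down by at most `step` from the previous value.
        value += rng.uniform(-step, step)
        # Clamp so the curve stays inside the 0.0 to 1.0 range.
        value = min(1.0, max(0.0, value))
        values.append(value)
    return values


curve = drunk_walk(seed=1)
```

A smaller `step` gives a smoother, slower-moving curve; a larger one gets closer to plain random values.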

Jitter is great at creating and processing images, but it’s also useful because it works with matrices – large batches of data that represent the frames of the image being displayed, the OpenGL object you’re working with, and so on. In the case of visual images, each pixel in the movie contains 4 planes of data in the range 0-255 and is part of one big array – an array you can slice up in various ways to generate lists of data you can do other things with.

So I thought, “I wonder what I’ll get if I slice up a video image and grab a scanline and use it to fill the multislider? If I can do that, I don’t need to modify the Forbidden Planet patch’s innards at all. It should just work and maybe do something cool with little effort on my part.”

(Author’s note: since I’m writing a more-or-less tutorial, what you read next not only condenses the time I spent playing around and checking the refpages – it also leaves out some other great ideas that came from just playing around and having fun. I won’t go into them here, but I just wanted to remind you how important fooling around and having fun can be.)

Slice and Dice

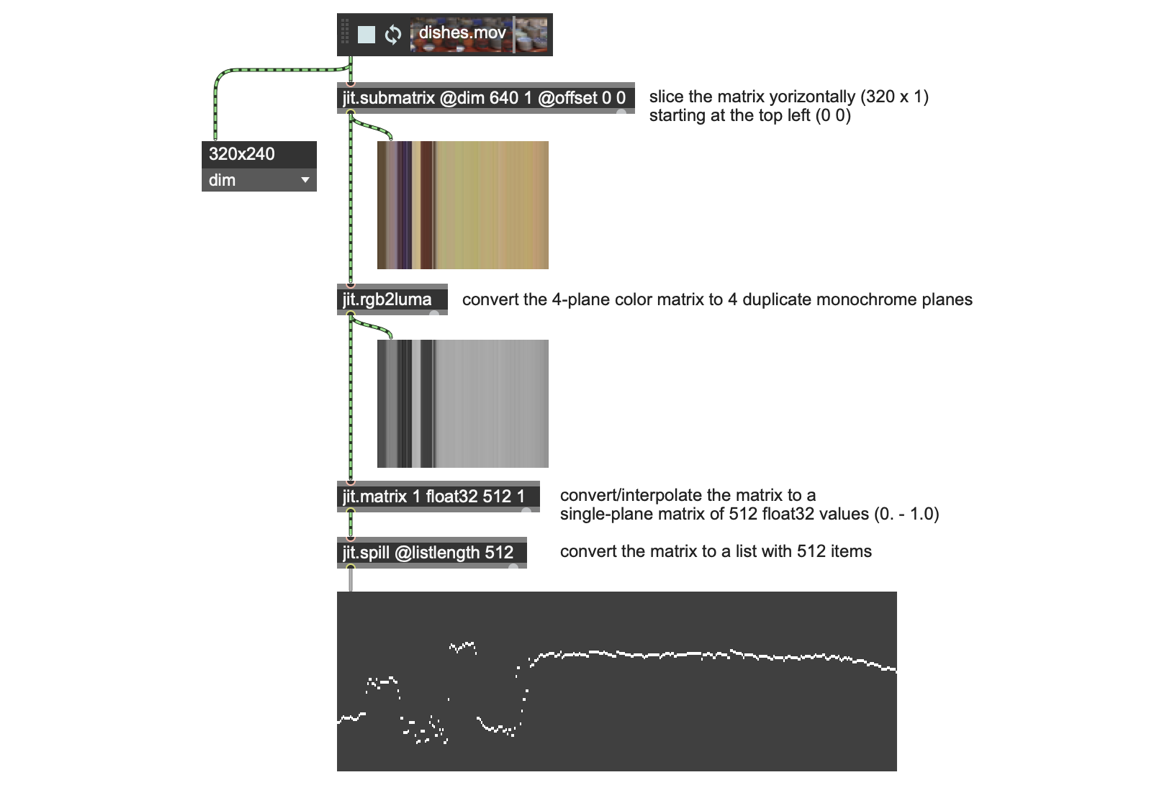

I added a playlist object to my Forbidden Planet patch, and then clicked and dragged to load a movie (I went with dishes.mov), and clicked on the circular button to enable looping of my movie. A jit.pwindow let me keep track of what I had as I worked. I was working with a video, so I knew I had a 4-plane matrix full of char data. What I needed was what the Forbidden Planet patch’s multislider contained: a single list of 512 floating-point numbers in the range 0.0 to 1.0.

Broken down into pieces, here’s what the problem looked like:

Create a horizontal slice from the image

Take that 4-plane char matrix “slice” and convert it into a single-plane matrix that contains 512 items, each of which is a floating-point number in the range the multislider object in the Forbidden Planet patch uses.

I’m breaking this down because lots of folks out there who are interested in converting images to data (that process of to-from or from-to has the fancy term transcoding) do this a lot, which means that this is a good thing to learn to do, and a useful skill to have. As is often the case in Max, there are a number of ways to do this; I’ll show you what I think is a quick and easy way:

To create the horizontal slice from the original image, I’ll use the jit.submatrix object, which was created to do exactly that. The object uses attributes (@dim and @offset) to define how you want to slice things up (the example below shows you what the output looks like).

I only need a single plane for my list. Instead of using jit.unpack and deciding which color plane to use, I’ll keep it simple: the jit.rgb2luma object will output a 4-plane matrix where each plane contains the same information: a monochrome image.

What might seem to a beginner to be the trickiest thing to do – changing the number of entries in the matrix and converting the data from the char range (0-255) to floating-point numbers (float32) – is made simple by virtue of a feature of Jitter matrices: they adapt. If you specify attributes when you create a jit.matrix object and then send it a matrix whose attributes are different, the receiving jit.matrix object will automatically adapt by changing the dimensions of the matrix and the number of planes, as well as scaling the incoming matrix and converting the values.

After that, all that’s left to do is use a jit.spill object to convert the matrix to a list of values to send to the Forbidden Planet patch’s multislider object.
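If it helps to see the same steps as text, here is a rough Python sketch of the whole chain: take one row, reduce it to luminance, resample it to 512 entries, and scale it to 0.0 to 1.0. It is only an approximation of what the Jitter objects do. The function name is hypothetical, the luminance weights are the common Rec. 601 ones (an assumption about what the luma conversion uses), and nearest-neighbor resampling stands in for whatever resizing jit.matrix performs when it adapts.

```python
def scanline_to_curve(frame, row=0, size=512):
    """Turn one row of an ARGB frame into `size` floats in [0.0, 1.0].

    `frame` is a list of rows; each row is a list of (a, r, g, b)
    tuples holding integers in the char range 0-255.
    """
    # Step 1: the horizontal slice (the job jit.submatrix does in the patch).
    line = frame[row]

    # Step 2: one brightness value per pixel.
    # Assumed weights: Rec. 601 luma coefficients; alpha is ignored.
    luma = [0.299 * r + 0.587 * g + 0.114 * b for (_a, r, g, b) in line]

    # Step 3: stretch or shrink the row to `size` entries.
    # Assumption: nearest-neighbor lookup, chosen for simplicity.
    width = len(luma)
    resampled = [luma[min(width - 1, i * width // size)] for i in range(size)]

    # Step 4: map the char range 0-255 onto 0.0-1.0, clamped against
    # floating-point rounding, and return a flat list (the jit.spill step).
    return [min(1.0, max(0.0, v / 255.0)) for v in resampled]


# A tiny 2-row, 4-pixel grayscale test frame standing in for a video frame.
demo_frame = [
    [(255, v, v, v) for v in (0, 85, 170, 255)],
    [(255, v, v, v) for v in (255, 170, 85, 0)],
]
curve = scanline_to_curve(demo_frame, row=0)
```

The returned list has the same shape as the list the multislider expects: 512 floating-point numbers between 0.0 and 1.0.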

Here's what the process looks like:

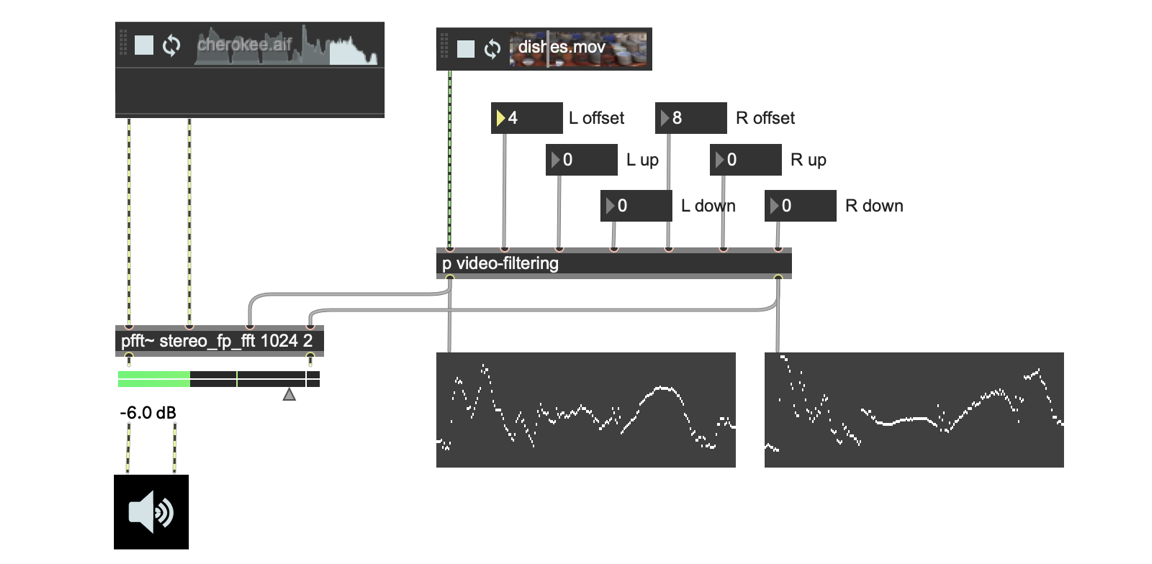

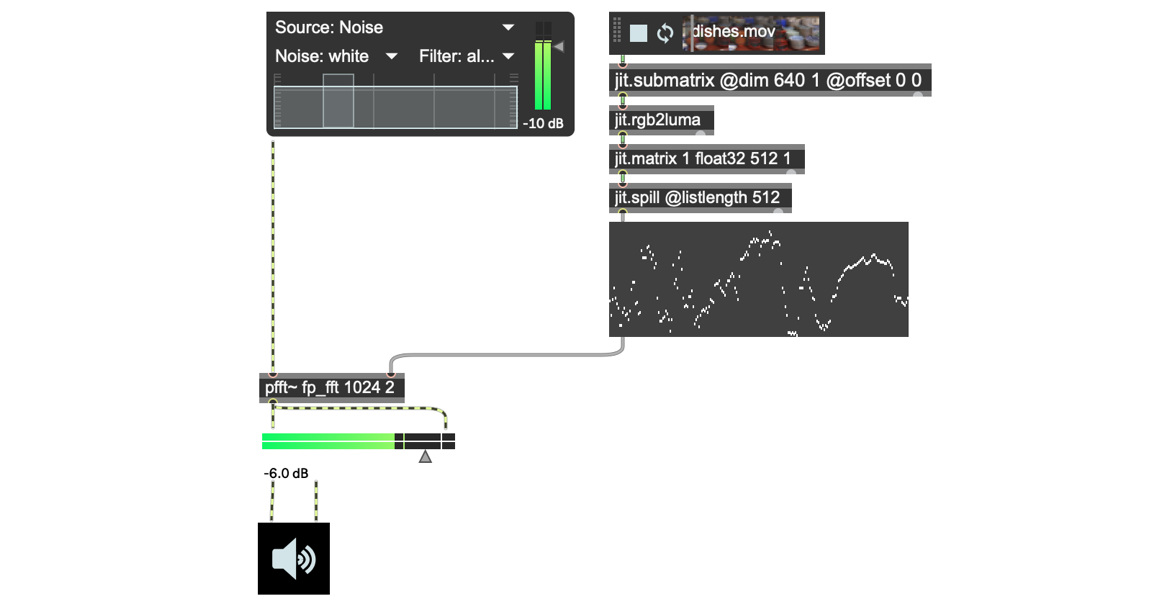

To keep things compact, I removed the jit.pwindow objects and connected the jit.spill object to the multislider in my original patch, which suddenly started making much more interesting noises.

Putting it all together (and taking it apart again)

My rainy day patch sounded kind of cool as it was. One of the great things about being able to reuse patches such as the Forbidden Planet is that you’re done quickly, and have more time to think about improvements.

While my patch sounded great, I was thinking that it would be awesome to have a stereo version. The lazy (or efficient? You decide!) way would be to just duplicate the pfft~ object and hook everything up again….

But I was thinking that I’d like to look inside and examine the patch loaded by the pfft~ object. How hard would it actually be to create a stereo version? Here’s what I found:

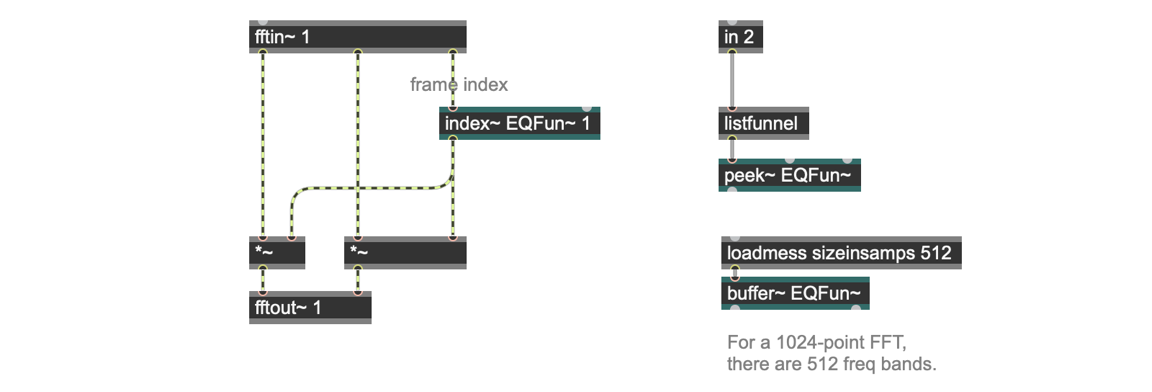

The inside of the patch wasn’t scary at all! I had a single-channel buffer~ object (EQFun~) that had list values unpacked by a listfunnel object and written into the buffer using a peek~ object. The EQFun~ object was connected to the third outlet of the fftin~ object, which, as a quick look at the refpage explained, outputs the frame index for the FFT.
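As a rough mental model of what that arrangement does, here is a small Python sketch: the bin index from the FFT looks up a gain in the table, and that gain scales the bin. This is an illustration rather than a transcription of the abstraction. The function name is hypothetical, and multiplying both the real and imaginary parts by the looked-up value is my assumption about how the filter curve gets applied.

```python
def filter_frame(real, imag, gains):
    """Scale each FFT bin by the gain stored at that bin's index.

    `real` and `imag` hold one spectral frame; `gains` plays the role of
    the buffer filled from the multislider (values in 0.0 to 1.0).
    """
    out_real, out_imag = [], []
    for index, (re, im) in enumerate(zip(real, imag)):
        # The bin index doubles as the lookup position in the gain table.
        # Bins beyond the end of the table are silenced (an assumption).
        gain = gains[index] if index < len(gains) else 0.0
        out_real.append(re * gain)
        out_imag.append(im * gain)
    return out_real, out_imag


# Four bins: pass the first, halve the second, mute the rest.
filtered_real, filtered_imag = filter_frame(
    [1.0, 2.0, 3.0, 4.0],
    [0.5, 0.5, 0.5, 0.5],
    [1.0, 0.5, 0.0, 0.0],
)
```

Because the gain is applied to both parts of each bin, the phase of that bin is left alone and only its level changes.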

So – all I’d really need to do would be to have a second fftin~/fftout~ object pair for the right channel of my pfft~, together with a second buffer~ that I could use for getting index values.

Wait… the EQFun~ buffer has only one channel. Why couldn’t I replace it with a stereoEQFun~ buffer and then both write the incoming list data to one of the channels and then have the peek~ object use one of the two channels to do the indexing? That ought to work fine.
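The two-channel idea is easy to picture as a small data structure. The sketch below is a hypothetical stand-in for a stereo buffer, not Max code: the class and method names are made up for illustration, and channels are numbered from 1 only to mirror the way Max counts buffer channels.

```python
class StereoTable:
    """A two-channel table of gains: one filter curve per audio channel."""

    def __init__(self, size=512):
        # Channels are keyed 1 and 2 to mirror Max's 1-based channel numbers.
        self.channels = {1: [0.0] * size, 2: [0.0] * size}

    def write(self, channel, values):
        """Copy a list of values into one channel, starting at index 0."""
        table = self.channels[channel]
        for index, value in enumerate(values[:len(table)]):
            table[index] = float(value)

    def read(self, channel, index):
        """Look up the gain for one bin index in one channel."""
        return self.channels[channel][index]


table = StereoTable(size=4)
table.write(1, [0.1, 0.2])  # left-channel curve
table.write(2, [0.9])       # right-channel curve
```

Each side of the stereo filter writes to and reads from its own channel, so the left and right curves never overwrite each other even though they share one table.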

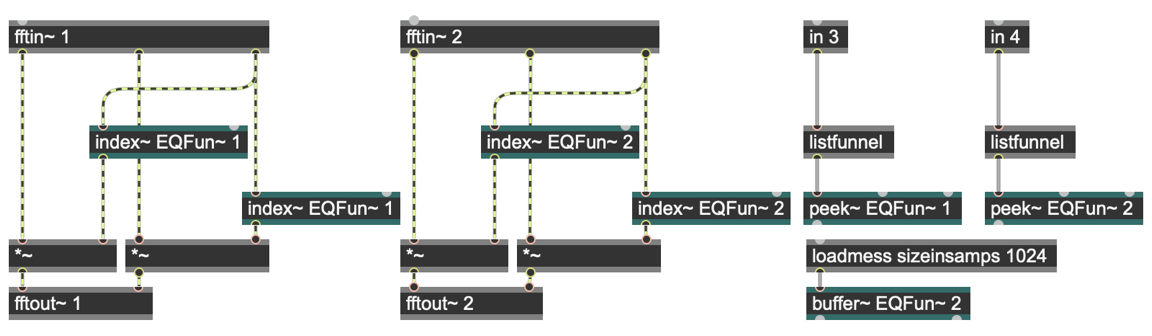

And it did. Here’s what the new abstraction (called stereo_fp_fft.maxpat) looks like now:

I had two more ideas from my Jitter life that I thought I might try, too.

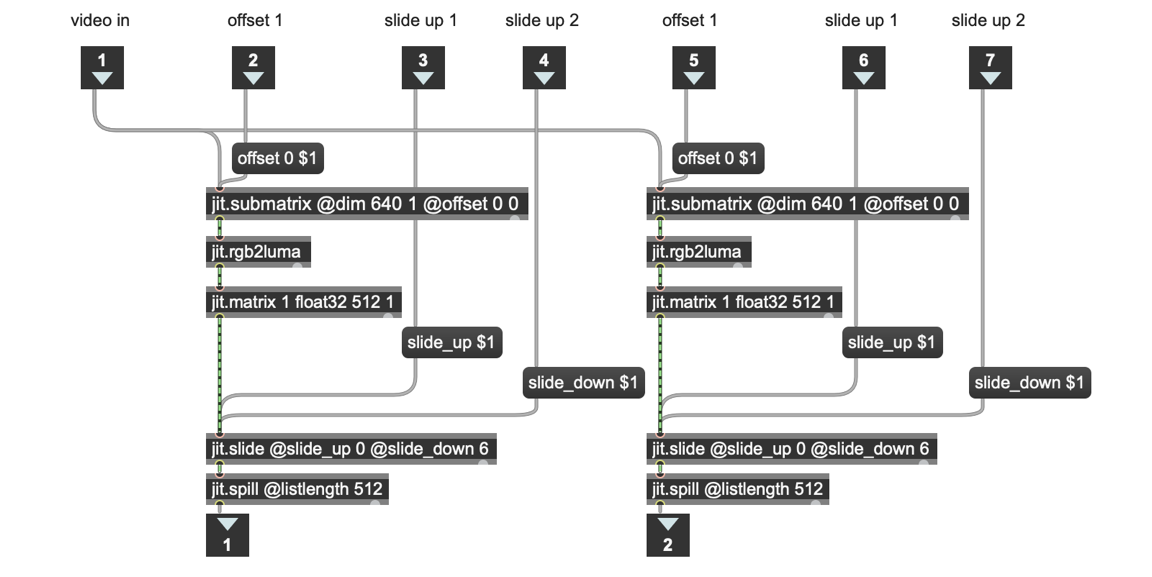

The horizontal slice I created was the very top of the video image (@offset 0 0). Since attributes work as messages to Jitter objects, I could send the message offset (number) 0 and grab any horizontal line in the video – all I’d need to do was to add a message box with the contents offset $1 0 connected to a number box and then send that to my jit.submatrix object!

I love smearing video images using the jit.slide object (okay, I’m actually doing cellwise temporal envelope following, but I think it looks smeary) and using the messages slide_up and slide_down for different effects. There’s no reason I can’t do that with the matrix that contains my list of values before I unroll it and send it to the multislider….
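For anyone curious about what "cellwise temporal envelope following" amounts to, here is a small Python sketch of the usual slide formula applied to a list of values: each output moves only part of the way from its previous value toward the new input, using one divisor when the input rises and another when it falls. The function name is hypothetical, and I am assuming jit.slide follows the same formula as the other slide objects in Max.

```python
def slide(previous, current, slide_up=1.0, slide_down=1.0):
    """Smooth `current` toward `previous`, cell by cell.

    Each cell follows y = y_prev + (x - y_prev) / amount, where `amount`
    is `slide_up` for rising values and `slide_down` for falling ones.
    """
    out = []
    for y_prev, x in zip(previous, current):
        amount = slide_up if x > y_prev else slide_down
        # A divisor below 1 would overshoot, so treat 1 as the minimum.
        amount = max(1.0, amount)
        out.append(y_prev + (x - y_prev) / amount)
    return out


# One rising cell and one falling cell.
smoothed = slide([0.0, 1.0], [1.0, 0.0], slide_up=4.0, slide_down=2.0)
```

Calling the function again on each new frame, with the last output passed in as `previous`, gives the slow attack or release that makes the filter curve glide instead of jump.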

So I spent a few minutes prototyping it and it worked like a charm - I could scan back and forth through the movie, and produce subtle, almost vocoded effects. And the fact that I was now working in stereo meant that separate channels could have separate controls, as well – setting the offset for each channel to near neighbors produced really subtle effects. After my proof-of-concept messing around, I rolled both of these things up in a nice subpatcher that kept things neat and tidy:

The result? Not at all bad for a rainy afternoon. I hope you enjoy these patches, and feel free to make your own improvements. Happy patching!

by Gregory Taylor on August 3, 2020