The Wearable Interface: An Interview with Bob Pritchard and Kiran Bhumber

It's all too common these days to encounter instruments and approaches to performance that are presented as solutions rather than as works in progress or processes. That isn't necessarily surprising. After all, all instruments are - to some extent - current solutions to one kind of problem or another. It's just that updates to, say, a piano, are few and far between. I always love the opportunity to talk to my friends and acquaintances about their work in progress. Not only do you get a sense of the challenges and solutions while they're in the thick of it, but it's also a great look at how your friends think. I love watching the string of prototypes, the occasional humorous glitch, the joy of the serendipitous solution.

So when I had a chance to sit down and chat with my friends Bob Pritchard and Kiran Bhumber about their collaborative project - an interface for dancers and a wearable instrument - while it's still in progress, I jumped at it, delighted to have articulate and generous people to talk to about work as it happens (to quote the Canadian Broadcasting Corporation)...

The design of "new instruments" is always a tricky business, for all kinds of reasons: We've got this huge history of physical instruments we already know as ways to transduce small motor movements to actuate strings or redirect the flow of air, and so on. Questions about virtuosity come as part of the freight charges, too. Increasingly, we talk about instruments as "interfaces" of one sort or another, as well. So I'm always curious about how new instruments or interfaces get started - how'd you get here?

Bob: The UBC Digital Performance Ensemble SUBCLASS (it's kind of like a laptop orchestra, but not really) is made up of students from across faculties, and all but the performance specialists have taken Max/MSP/Jitter programming. We focus on tracking aural and physical performance gestures, using the resulting data to control synthesis and real-time manipulation of live audio and video. Over the past several years we’ve used such things as microphones, tablets, smartphones, Wiis, webcams, Kinects, and custom-built hardware. The ensemble members appreciate the very strong performance skills of the dance and music specialists, and usually augment those skills with software, rather than asking the performers to learn completely new ways of performing. So, there is an inclination to develop interfaces that take advantage of what performers are already good at doing.

Kiran: There has been a great deal of research on how dancers create audio/visual media through movement in space using non-contact sensors such as webcams and Kinects. However, in these instances, the dancer is restricted to the point of view of the sensor, with their movement being a function of the space. In expanding this approach, I was thinking about how to create a system where dancers could move freely in space and embody audio/visual media through physical contact with their own bodies, in a way that is analogous to how an instrumentalist performs (e.g., sliding fingers up the neck of a string instrument). This led to the idea of the bodysuit, which would consist of sensors that a performer would make contact with - essentially “playing themselves”. About six months ago Bob and I were talking after a new music concert and I asked how one would create a performance suit with sensors on it. Over the next few weeks we chatted and emailed about the topic, and then decided to move forward. Bob explained how different sensors might work and I gradually refined my idea of what the suit would be: it would have multiple, reconfigurable sensors that were more than buttons, it would be used by musicians and/or dancers, it would be aesthetically interesting, and performances would celebrate the human form and movement.

Can you talk a little bit about what's "under the hood" of the current body suit (if it's not a huge secret, of course)? Were your design choices informed at all by what similar approaches *weren't* doing [a chance to expand on your comments on reactive fabric]?

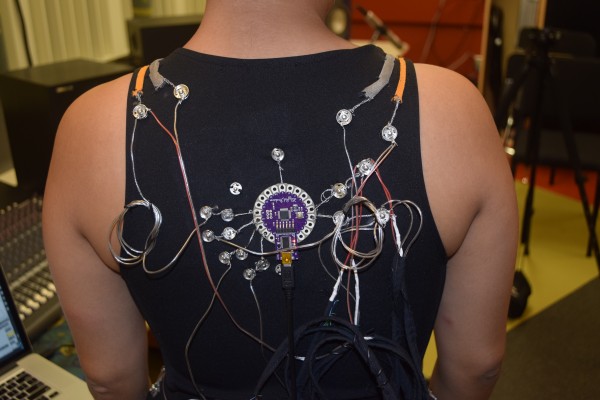

Bob: The current version of the suit uses parallel tracks of resistive and conductive fabric for each sensor. Simultaneously touching the two tracks (with a metal thimble or a highly conductive finger) completes the circuit, and the resulting voltage depends upon how far along the resistive fabric you touch.
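As a rough illustration of the idea Bob describes, here is a minimal Python sketch of how a reading could be turned into a touch position. It assumes a potentiometer-style model in which the resistive track spans the supply voltage and ground and the conductive track acts as the wiper, so voltage is roughly proportional to position. The interview does not describe the actual wiring, and the 5 V supply, the 30 cm strip length, and the function names are all hypothetical.

```python
# Assumption: a 10-bit ADC, matching the 0 to 1023 range mentioned later in the interview.
ADC_MAX = 1023


def reading_to_voltage(reading, v_supply=5.0):
    """Convert a raw ADC reading to volts (v_supply is an assumed value)."""
    return v_supply * reading / ADC_MAX


def reading_to_position(reading, strip_length_cm=30.0):
    """Estimate how far along the strip the touch is, in centimetres.

    Assumes voltage rises linearly along the resistive track; real fabric
    is unlikely to be perfectly linear.
    """
    fraction = min(max(reading / ADC_MAX, 0.0), 1.0)  # clamp to 0..1
    return fraction * strip_length_cm
```

Under this model, a touch near the grounded end reads close to 0 and a touch near the supply end reads close to 1023.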

Essentially, the voltage is read by the Arduino, the data is massaged a bit in Max/MSP to handle noise and do some averaging, and is then used to control scrubbing through samples or video. There are a lot of web demos of conductive fabrics such as KITT’s Zebra Conductive Fabric or on the Instructables website, but they tend to show turning lights on and off, controlling TVs, etc. We don’t see a lot of examples of using fabric for instrument interfaces in performance. We tried using other types of conductive and resistive materials (including analog cassette tape) but they weren’t a good fit for this project: soft membrane sensors shorted out over body curves, and other materials were either too stiff or not durable enough. We ended up substituting conductive fabric for the conductive thread because it gave us better continuous contact and less noise. Working with the fabrics did have its problems: for the final prototype I hand-tacked the fabric onto the suit, so it's a kludgey-looking bit of sewing! We now have professionals machine-sewing the materials for the next set of suits. Originally we had a LilyPad snapped to the back of the suit, and it handled all the data. However, an interesting experience with conductive thread, electrical shorts, smoke, and sparks made us rethink that!
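The "massaging" step could look something like the following Python sketch: a moving average to calm jittery readings, followed by a mapping from the smoothed value to a position in a sample. This is not the authors' Max/MSP patch. The window size, the class and function names, and the linear mapping are assumptions made purely for illustration.

```python
from collections import deque


class SmoothedSensor:
    """Moving average over the most recent readings (window size is an assumption)."""

    def __init__(self, window=8):
        self.readings = deque(maxlen=window)  # oldest readings drop off automatically

    def update(self, reading):
        """Add a raw reading and return the current average."""
        self.readings.append(reading)
        return sum(self.readings) / len(self.readings)


def scrub_position(smoothed, n_frames, adc_max=1023):
    """Map a smoothed reading linearly onto a frame index in a sample or video."""
    fraction = min(max(smoothed / adc_max, 0.0), 1.0)  # clamp to 0..1
    return int(fraction * (n_frames - 1))
```

A longer window gives steadier scrubbing at the cost of a slower response to fast gestures, which is the kind of trade-off that would need tuning by ear.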

The prototype uses an Ethernet cable to communicate with the laptop. Obviously wireless is a very attractive idea, but - as Perry Cook likes to say - “The only thing worse than wired is wireless.” I’ve experienced data loss on wireless networks in performance, and it’s not fun.

It's hard to watch the video [see below] and not think of the body suit as a general interface rather than something created for a specific dance piece. I also expect that having a working prototype means that the kinds of media you're connecting to it are, in turn, being modified by the person who uses it, or by the interplay of various technical features and behaviors that work well in the environment. Have there been any surprises in terms of how having the prototype changes or redirects what you're doing with it, or how?

Kiran: We started off thinking of the suit as an interface for controlling sound, much like you might think of joysticks, or faders, or such, and all the testing concentrated on controlling the suit as a musician. I think we were both surprised at how different the results are with a dancer as compared to a musician. The dancer has much more “performative” movements when shifting from one part of the suit to the next – we weren’t expecting the difference to be so great but it should have been obvious – and the result is very elegant and engaging. The musician was much more involved with playing with micro aspects of the sound – scrubbing, retriggering, layering, and so forth, and played the suit more like known interfaces.

What does the "development cycle" of the body suit look like? How do you design or refine its behaviors in practice? Tuning general behaviors? Tailoring the interface to a specific user? Building a library of states or behaviors?

Bob: We began by exploring different types of resistive and conductive materials such as various threads, cassette tapes, and foils by laying them out on a desk and measuring changing voltages. And laying them out again. And combining them. And changing them, etc.

We were interested in being able to have bare fingers complete the circuits, and that worked for some materials, but those materials weren’t practical for attaching to a body suit. We settled on using conductive fabric, and then worked on eliminating the noise and jitter in the data coming off the circuits. We found that problematic and decided to try using membrane slide sensors in fabric pockets. However, the courier company lost our order (!), and Infusion Systems didn’t have any more sensors of the length we needed, so we went back to refining the fabric sensors. We decided to have the users wear metal thimbles to eliminate most of the noise generated with finger conduction, and that complemented the idea of a sewn fabric-based interface.

Kiran: Each of the suits is tailored to a specific user, since they need to be able to easily reach the entire length of each sensor in performance. Part of constructing a suit is selecting the right size of bodysuit for each performer and then having a fitting session to tack on the fabric. The basic circuit is the same for each suit (sending continuous data ranging from 0 to 1023) but the tuning of the circuit and the default settings differ depending upon the piece – what samples or synthesis methods are being controlled, what happens in different sections, and so on. Since we are early on in the development of things, the Max/MSP patches are fairly basic. It will be interesting to see how they develop as we work on pieces with video control, or on pieces where two or more dancers play each other. I expect that the design and placement of the sensor strips will also change as we create pieces and critique the results, and as we source different materials.
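One way to picture per-suit tuning is a small calibration step that rescales each sensor's usable range to 0 to 1. The Python sketch below is only a guess at what such tuning might involve. The interview does not say how the circuits are tuned, and the function name and the example minimum and maximum readings are hypothetical.

```python
def make_calibrator(raw_min, raw_max):
    """Return a function that rescales raw readings (0 to 1023) to 0.0 to 1.0.

    raw_min and raw_max are the lowest and highest readings a given performer
    actually produces on a given strip, e.g. as measured in a fitting session
    (an assumed workflow, not one described in the interview).
    """
    if raw_max <= raw_min:
        raise ValueError("raw_max must be greater than raw_min")
    span = raw_max - raw_min

    def calibrate(reading):
        # Clamp so readings outside the measured range stay within 0..1.
        return min(max((reading - raw_min) / span, 0.0), 1.0)

    return calibrate


# Hypothetical example: this strip only ever reads between 100 and 900.
left_arm = make_calibrator(100, 900)
```

Downstream mappings (sample scrubbing, video control) can then work with the same 0 to 1 range regardless of which suit or strip produced the data.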

What's next for the work? What's next for its repertoire? What refinements suggest themselves to you as you work?

Kiran: In early November Marguerite Witvoet (new music performer and vocalist extraordinaire) will be performing with the suit at a conference at the Western Front, doing a piece by me where she controls samples and real time vocal manipulation. That same month two dancers will perform a piece by Bob at the UBC Museum of Anthropology as part of SUBCLASS’s concert opening an exhibition of textiles from around the world. I’m also working with a dancer at the U. of Michigan to create a work where I wear the suit and play clarinet while the dancer controls sample triggering and manipulation of my sound.

Bob: We’d like to develop multiple-performer pieces, with performers (dancers and/or musicians) interacting to control their own and their partner’s suit, but like Kiran I’m interested in combining the suit with live processing of acoustic instruments. We also need to explore the whole issue of wired vs. wireless.

Kiran: We still have two analog channels to work with, and lots of digital. We could use the analog channels to allow the performer to switch between different modes, to control which samples or video are being used, what types of processing, and so on. Infusion Systems has a Pi shield that opens up 8 channels of analog input on Raspberry Pis, so that might be worth exploring as well.

The suit raises the issue of body touch in performance, from functional and artistic/expressionist viewpoints. How do you respond to that?

Kiran: Performance with the suit is meant to be sensuous, in the same way that dance performance is sensuous through the celebration of body motion and pose. The RUBS interface can make the audience more aware of the male or female body in performance, but it also gives the performer a strong self-reference, since there is a haptic component in – and self awareness of – the location and pressure involved in controlling the sounds and audio/video processing. Like much of Canada, Vancouver has an active contact dance and improvisation scene, so close body contact in performance is not unusual for audiences.

Bob: In a sense all instruments except for the voice are interfaces acting as extensions of our human form, and we use those extensions to express non-verbal emotions and ideas. The suit is still an extension, but it pulls the interface back to the surface of the body, so in some cases there might be a closer identification of body gesture and resulting sound or video. The control interface is far more elegant than the textile keyboards, drum pads, and circuits that are found on ties and tee-shirts. Body touch is required to perform on those interfaces, but they are simply transpositions of a more “remote” interface onto the body, where it was never intended to be. We think the use of fabric sensors fitted to the body rather than hard or semi-rigid control surfaces results in a more artistic presentation and enhances the production and interpretation of audio or visuals.

by Gregory Taylor on September 27, 2016