Artist Focus: Walker Farrell

Sometimes interesting people and objects are hiding in plain sight. Take Walker Farrell, for example - nearly anyone who's attended a trade show like NAMM or Superbooth has probably run into him at the Make Noise Instruments booth. You probably know his voice from listening to tutorial videos.

And I'll bet you even figured that Walker's the kind of person who's a mix of employee and enthusiast. Maybe you've even had the chance to see or hear him perform.

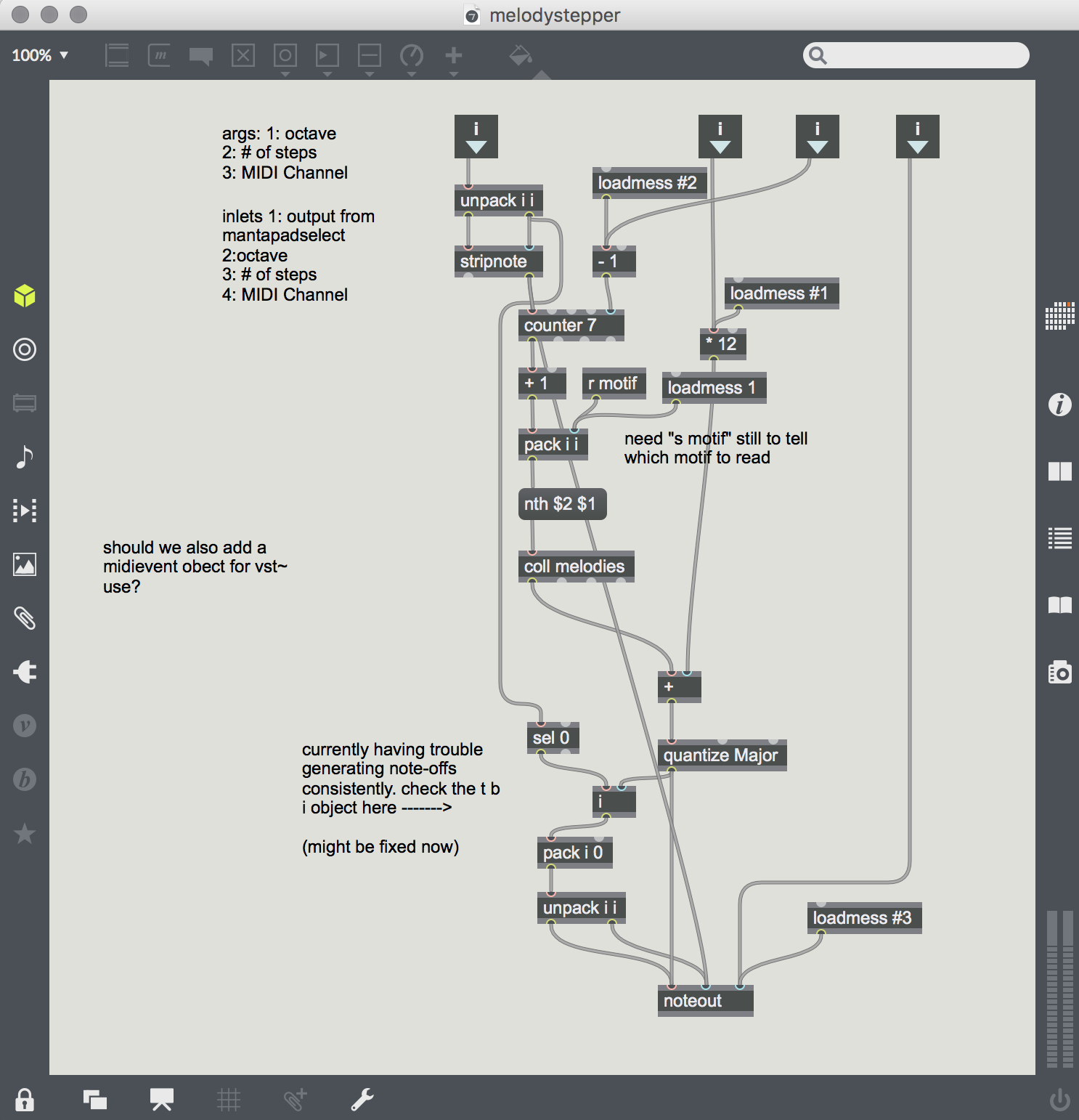

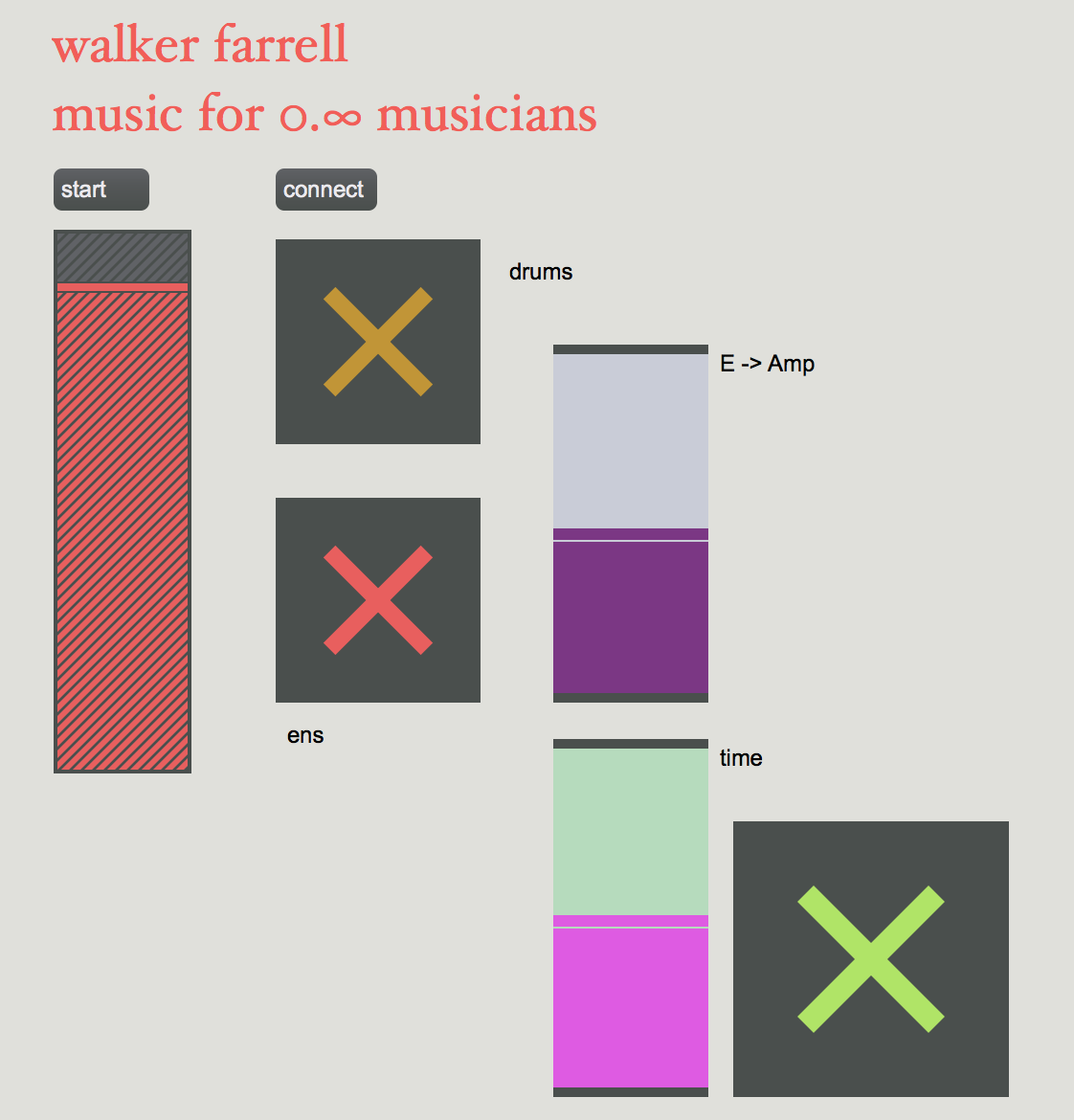

I haven't had that opportunity, but the appearance of Walker's recent Bandcamp release music for 0.∞ musicians, vol. 1 piqued my interest - for one thing, the "cover" of the recording was a Max patch! I thought this would be an interesting chance to sit down and talk to Walker about something besides analog synth modules, and to get a sense of how he came to his interests in the marriage of algorithmic composition and improvisation.

Your recent project music for 0.∞ musicians used Max to create an engaging synthesis of algorithmic compositional structures with real-time improvisation. Could you talk about how you started using Max and chose it as a tool for this project?

I first encountered Max in a college course in 2003 or so. In the context of this course the MSP side was not in use, and I did not really understand the purpose of Max. At the time, I was definitely interested in expanding my knowledge of sound design via synthesizers, but on the composition side I still unwittingly subscribed to this sort of Amadeus theory where the musician has complex musical structures stored a priori in the brain, and pulls them out and transfers them onto paper like a court stenographer. Some time later, around the time I started using modular synths (2008 or so) I began to take a different approach to composition. Modular synths encourage the building of complex materials by combining simple motions in various ways, and unlike the mythical Mozart's brain transcriptions, the musical results do not exist, even in the mind, until they are realized in performance. The change in my approach to music was significant and paved the way for my eventual embrace of the Max environment.

In late 2014 I finally got to wrap my head around Max enough to create things with it by myself and for particular goals. My early uses of it grew out of necessity: the laptop was the only instrument I could really use while holding my newborn son on my lap, so I spent many more hours with Max than I ever had before.

My initial goal for this project was to create a platform for group improvisation by a single performer: in other words, something where I myself could play several instruments at once. I wanted the results to be determinate to some degree; I wanted most of the inbuilt indeterminacy to reside in the progression and juxtaposition of determinate actions, in other words, to be improvisatory. I tackled the patching as if I were running a modular synthesizer with an unlimited number of control voltage generation and processing modules, creating essentially 40+ copies of one "sequencer" with independent hand-controlled clocks. I suppose that at that point you could say I was specifically using Max to do something I could not do with hardware, but that grew directly out of my ways of working with hardware.
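As a rough illustration of the idea of many copies of one sequencer, each advanced by its own hand-controlled clock, here is a minimal Python sketch. It is not Walker's Max patch: the class name `SequencerNode`, the `transpose` offset, the motif values, and the node count of 40 are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SequencerNode:
    """One copy of the 'sequencer'; it remembers its own place in the motif."""
    transpose: int      # hypothetical per-node pitch offset, in semitones
    position: int = 0   # this node's private step counter

    def press(self, motif):
        """Act as a hand-controlled clock: emit the current step, then advance."""
        note = motif[self.position % len(motif)] + self.transpose
        self.position += 1
        return note


# Illustrative values only: a four-note motif as MIDI note numbers.
MOTIF = [60, 62, 67, 65]

# Forty independent nodes sharing the same motif (the count is an assumption).
nodes = [SequencerNode(transpose=i) for i in range(40)]

# Pressing node 0 twice and node 5 once: each node advances only when pressed.
played = [nodes[0].press(MOTIF), nodes[0].press(MOTIF), nodes[5].press(MOTIF)]
```

The point of the sketch is that every node shares the same material but keeps its own position, so the order and timing of presses, not the motif itself, shapes the result.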

Much of your work involves using both software and hardware in interesting ways to create new sounds. How do you think they work to create interesting relationships between musician and instrument when used together as well as separately (MSP vs. analog signal processing)?

Software creates the possibility of complex operations being performed instantaneously, while hardware provides, in this particular case, mostly an instantaneous control surface that engages those complex operations in the software via real-time movement. In this first iteration of Music for 0.∞ Musicians, the software distributes "values" to each node and also serves as their "memory".

The nodes are activated by presses on the Manta, and the sheer number of nodes, when paired with the complex patterns available from my relatively well-trained piano-playing fingers, enables complex results to emerge quickly in real time. Relations between nodes create the structure in a virtual field. While playing, I feel as though the structure is emerging at all times, and my hands entangle with the many held states of individual nodes. The grammatical elements of melody and harmony are being discovered rather than played, while the phrasing and dynamics are the semiotic elements over which I have direct control.

Do you work separately with them?

I rarely work with software alone. I have explored generative music to some degree, and I enjoy setting up conditions for that, but ultimately it is my physical interaction with instruments that I find most musically rewarding, so my use of Max is kind of like "creating" a unique instrument between Max and the Manta as much as it is creating a piece of music.

I do often work with hardware alone. Usually when I play live shows I play with my modular synth, and I take a pretty different approach to this, both musically and in terms of how I physically interact with it. My tendency there is to set up a bevy of connections within which I have maximum leeway to make drastic, dynamic, dramatic changes at any given moment.

I also play piano for fun at home, and my practice there has veered gradually from classical playing to improv in the jazz idiom, following chord changes and doing substitutions etc. There's a rich tradition of this with many talented forebears, and I do not pretend to really be near a professional level there, but it's a fun release for me, and rewarding.

In all these cases, my practice is basically geared toward establishing a foothold for the best possible improvisation. I don't do much multitrack recording, editing, mixdown etc. I find those processes more stressful than fun, and I only have so much time. I'd rather spend a lot of time preparing my performance, then perform and be done, than spend most of my time embalming the result.

Many of us know you through your work with the Eurorack synthesizer company Make Noise. How does your work there influence your work and use of Max?

I could not possibly overstate my debt to Make Noise, in life and work alike. Working for the company has greatly developed my appreciation for aesthetic, creative, and social integrity. I now have electronic music upfront in my mind for 40+ hours a week, and then I go home and work on my own projects. The company's owners, Kelly and Tony, are incredibly generous to their employees, and also to the community here in Asheville, NC. There's no company I'd rather be a part of.

My work with Make Noise has also given me opportunities to talk about music with a great many of my favorite artists, which has been a fantastic learning experience. And my position has given me a lot more credibility than I previously seemed to have. More people listen to my music and ask for my opinions than ever did before. I mention this less out of pride or humility, and more because it has also led me to greater confidence in the value of my work, and from there to greater artistic ambition, which is important.

But thinking again on the approach I mentioned earlier, of music that does not exist until it is heard: I was well on this track before I met Tony, but his inspired approach to instrument design and the company philosophy of "discovering the unfound sounds" have really solidified and clarified this approach for me - it informs everything I do, musically and otherwise.

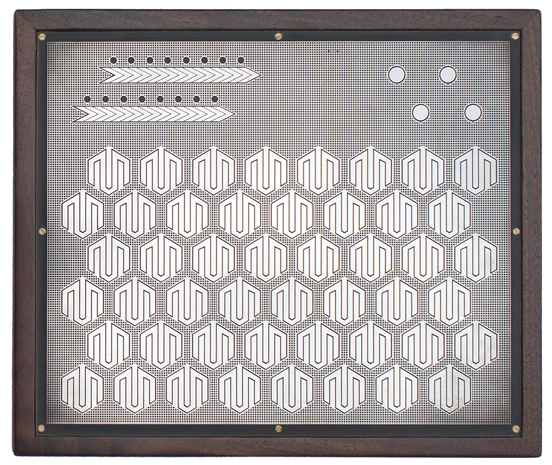

The Snyderphonics Manta is an interesting choice as an audio controller - what about the Manta drew you to working with it, and to using it on music for 0.∞ musicians?

I initially tried using a Buchla Thunder I borrowed from work, which I liked because of its nonlinear layout. Unfortunately it had a couple problems that created obstacles. For one, I wanted Max to do most of the heavy lifting in terms of interpreting controller data, and the Thunder was set up to do lots of intricate programming of particular pads' output in its native interface, which is much slower and less precise than a modern computer running Max. Also, the Thunder was not very responsive to touch, and inconsistent. I found that I was having to press really hard for it to register presses.

The Manta, like the Thunder, has a nonlinear layout that was unfamiliar to my hands - unlike, say, a piano keyboard, where I already have many patterns inscribed on the memory of my fingers. Its interaction with Max is via an external object that translates all presses into simple data - pad number, velocity, pressure etc. So these raw numbers provided a blank slate such that I could use Max to program particular pads to instantiate differing complex processes. Ultimately, it means that the number of such complex processes at my fingertips is very large. I imagine I will use it for every project involving Max from now on.

When you consider working with hardware controllers, what kinds of features do you look for in the "next controller?"

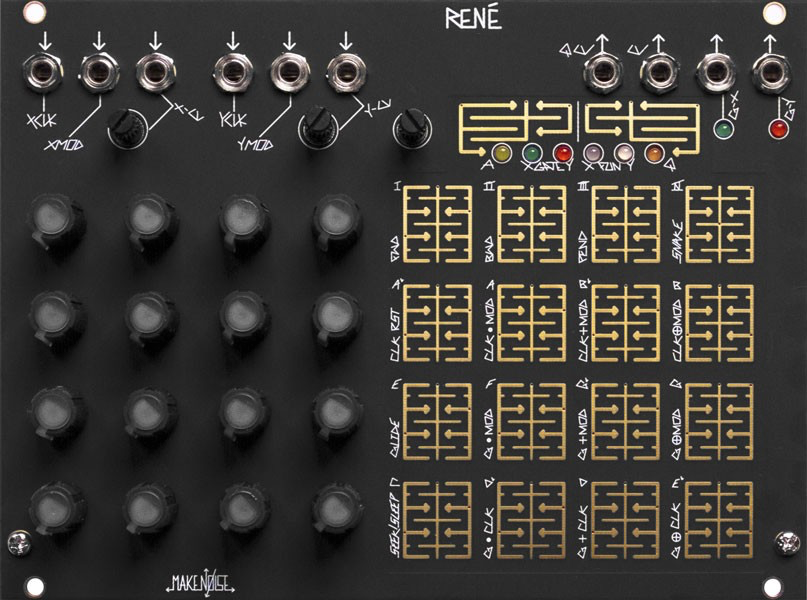

It would be totally dependent on my goals, but that said I don't foresee myself adding any controllers anytime soon. The Manta is perfect for what I thus far want to do with Max, and the Make Noise Pressure Points and René are perfect for what I want to do with modular.

Do new and different ways of interfacing suggest new ways of working to you (ways that perhaps benefit the relationship between performer and instrument or listener)?

Yes, absolutely. When composing my own music I am staunchly against repeating myself (perhaps to an uncommon extreme, as I also consider "repeating myself" to include transcribing anything from my head out into the sound world). The easiest way to fall into ruts is to use tools that respond exactly the same way every time you use them. While I appreciate the precision math available in Max, I am more interested in its capability to create environments where I can be expressive without the results of my actions being wholly determinate. I don't use black and white keyboards for electronic music, because I know how to play them already. Max and/or modular synths, in conjunction with less prescriptive control devices like the Manta or the Make Noise René, are platforms that let me reconfigure all relations of sound and vision with each new piece.

It's possible that some people who read this interview will begin to listen to Music for 0.∞ Musicians and quickly conclude that it does not "justify" all the thought I have put into it. I welcome this point of view, because another part of my goal is selfish, and accordingly will not satisfy those who judge the value of music on its ability to entice listeners even when torn from context: I have attempted to entangle the boundaries between musician, instrument and listener, such that I become the musician through the instrument, and I become the performer by being the listener. The recordings are recordings of me learning how to play by listening. The particular recordings' status as artifact of the project is arbitrary: they are necessarily an undertaking (attempt, beginning, embalming) of a process, not the process itself, which cannot be fully removed from the now in which it first took place. How it affects listeners other than myself is mostly out of my hands, except for those couple times I have performed this piece live.

You mentioned that there will be future iterations of music for 0.∞ musicians. What might we expect to hear in these new volumes as the project expands?

I have three major goals for the next iteration(s).

1. The first - and the biggest one - is a change in how the nodes constitute their identities. Currently they all share a single motif at any given time, and they are identical other than their placement in a timbral and pitch grid. I want the nodes to be sympoietic: a change in one node should lead to a change in another node. I hope to achieve this by having each hex plate on the Manta assigned to one of several possible node types. One of the node types is the one I used in the first iteration, that of just a basic stepping through a melodic motif with each press. I've started developing others for sound-file playback and oscillator banks. I want to start with at least four node types but I'll probably keep increasing the number of possible types over time, so that it continually evolves.

But perhaps the bigger difference is that the nodes are no longer atomistic, but rather "sympoietic". Each node type will have one control input and one control output (in addition to the controls generated by touch data). Each node's input and output will be assigned to other nodes randomly, so that every node affects other nodes and is affected by still other nodes. These connections, as well as the node types themselves, will be assigned anew each time the patch is opened, so that it never handles the same. Each node type will not only sound different from the other types, but have a different type of control input and output. So the ways that each node handles will be dependent on what other nodes are currently being used. I also envision having, this time, some nodes be momentary, as before, but others toggled. The toggled nodes could entail whole rhythms or melodies being played back while also affecting and being affected by the other nodes of course.

2. More interesting things being done with tuning and timbre. I plan to largely leave the multisampled instruments behind and move into just intoned realms using MSP and plugins, as well as more modular synth.

3. Incorporating visual elements. I plan one volume to delve deep into tuning systems, while any given performance also generates a score that I will send to an artist to create a video that will be released alongside the record. Another volume will mostly use modular synth (controlled by the algorithm as always) for the audio, while control data is used on Jitter matrices that I plan to load with elements from visual artists and then perform rhythmic transforms that are thereby in time with changes in audio. I have already started recruiting artists, though my Jitter skills are still in their early development.
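The "sympoietic" wiring described in the first goal can be sketched in a few lines of Python. This is only one possible reading, under stated assumptions: the names `Node`, `build_patch`, and `NODE_TYPES` are hypothetical, the fourth node type is a placeholder, and the random wiring is done as a single shuffled ring, which is a design choice made here (not something the interview specifies) so that every node has exactly one input and one output and never feeds itself.

```python
import random
from dataclasses import dataclass

# Three types are named in the interview; the fourth is a placeholder assumption.
NODE_TYPES = ["motif_step", "sound_file", "oscillator_bank", "placeholder_type"]


@dataclass
class Node:
    index: int
    node_type: str
    source: int = -1  # index of the node whose control output feeds this node
    target: int = -1  # index of the node that receives this node's control output


def build_patch(node_count, rng):
    """Assign node types and control connections afresh, as if reopening the patch."""
    nodes = [Node(i, rng.choice(NODE_TYPES)) for i in range(node_count)]

    # Shuffle the nodes into a ring: each node sends to the next one in the ring.
    # With three or more nodes, a node's source and target are always different.
    order = list(range(node_count))
    rng.shuffle(order)
    for pos, idx in enumerate(order):
        nxt = order[(pos + 1) % node_count]
        nodes[idx].target = nxt
        nodes[nxt].source = idx
    return nodes


# Each call with a fresh random generator yields a different wiring and type layout.
patch = build_patch(40, random.Random())
```

A looser scheme, where several nodes may feed the same input, would also fit the description; the ring is used here only because it makes the one-input, one-output constraint easy to guarantee.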

by Zeos Greene on August 22, 2017