AI node-based tools: how about a new collection of Objects from C74 for AI image generation?

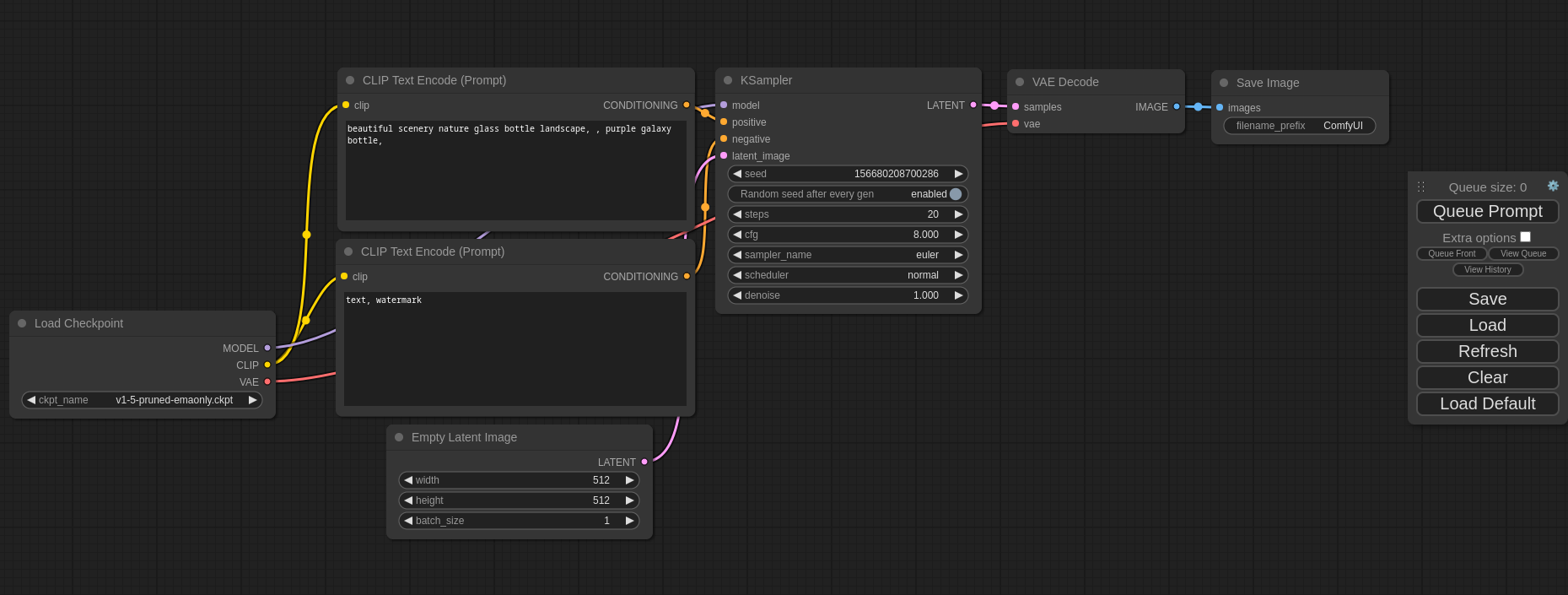

I am a long-time Max/MSP/Jitter user but have spent a lot of time in the last year working with various AI image generation tools, especially a locally installed packaging of Stable Diffusion known as Automatic1111 and an animation extension I use within it known as Deforum. About 6 months ago I saw a node-based version of SD called ComfyUI that I really enjoyed using, particularly as the workflow felt so familiar from Max. Now another node-based tool called ainodes is out and creating some excitement. Curious if anyone in this community is using these tools? Also: can I dream that C74 develops its own collection of AI objects for image generation/animation in some massive upcoming upgrade? https://github.com/XmYx/ainodes-engine

I can’t believe C74 isn’t on top of generative AI yet?! Once upon a time they would have been all over a development in computing like this. Please share if you see any large model generative AI stuff for Max.

Absolutely! I have continued to work with ComfyUI and the more I use it the more I fantasize about C74 integrating it - or something like it - into, say, Max 9. It would be as revolutionary a change to Max as MSP and Jitter each were, and I think leveraging an existing platform like Comfy or ainodes would drastically shorten the dev time. Of course I am not a dev, so I don't know how all these Python-based code packages could be implemented in Max, and I am sure there are many other issues. For one, generative AI is not real-time yet, so it is unclear how it would work in a Max-based chain of image processing. Still, the potential is mind-boggling!

It's not that we need another way to make generative AI art per se. What makes ComfyUI so different from something like Automatic1111 (the benchmark for the latest and greatest Stable Diffusion tech) is that the visual, node-based workflow lets you lay out a complicated chain of processing steps and visualize the whole Rube Goldberg "machine" that you made, tinkering with points in the chain as you go along.

A dev created an SD plug-in for After Effects, and it's a bit dodgy, but playing with it I really got to see the power of integrating AI into an existing tool (like Adobe is doing officially across its product line). I am already moving stuff between AI tools and Max/Jitter and back again (also After Effects). But using AI as a step within the internal process of a tool that I am very comfortable with is so much more powerful than moving content in and out of different programs/contexts.

I imagine some of the community here is hostile to AI for the usual reasons, but I see certain devs I associate with C74 (like Andrew Benson?) doing some very interesting and powerful work with generative AI!

I mean, until Cycling '74 integrates something, someone could try to build an interface to ComfyUI similar to the one this person has built for TouchDesigner: https://github.com/olegchomp/TDComfyUI. I think ComfyUI exports to websocket via a custom node someone else built, plus an input node.
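For anyone curious what a bridge like that has to talk to, here is a minimal sketch of queueing a workflow on a local ComfyUI server over HTTP. It assumes ComfyUI is running at its usual local address (`127.0.0.1:8188`) and that a `/prompt` endpoint accepts a JSON body with `prompt` and `client_id` keys, with progress messages arriving on `/ws?clientId=...`. That is my understanding of the stock server at the time of writing and may have changed. The helper names (`build_queue_request`, `websocket_url`) are made up for illustration, and this is not how TDComfyUI itself is implemented.

```python
import json
import urllib.request
import uuid

# Assumption: ComfyUI running locally on its usual default port.
COMFY_URL = "http://127.0.0.1:8188"


def build_queue_request(workflow, client_id=None, base_url=COMFY_URL):
    """Build (but do not send) a POST request that queues a workflow.

    `workflow` is a dict in ComfyUI's API export format: node ids mapped
    to {"class_type": ..., "inputs": {...}}.
    """
    client_id = client_id or uuid.uuid4().hex
    body = {"prompt": workflow, "client_id": client_id}
    return urllib.request.Request(
        f"{base_url}/prompt",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def websocket_url(client_id, base_url=COMFY_URL):
    """Return the websocket address on which status messages would arrive."""
    return base_url.replace("http", "ws", 1) + f"/ws?clientId={client_id}"
```

Sending the request is then just `urllib.request.urlopen(build_queue_request(workflow))`. A Max patch could in principle do the same from a Node for Max script, though that part is untested here.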

ha, tomorrow i am going to compare exactly those two for a certain (image processing) workflow i need: comfyUI (for SD) vs touch designer (for dall-e).

since i need full automation and prefer dall-e, it is relatively clear who will win. but that free 1 mb open source utility should get its fair chance.

directly in max would be ideal of course - and it would not even need to be UI objects, would it?

I've been playing around with scripting SD through Node.js -- mostly by using the websocket API in Automatic1111. It works pretty well once you find out what the actual API is, but to be honest this space is evolving so fast that the API changes every few months. Scripts I wrote for a job a few months ago don't work anymore. On top of that, some of the most interesting stuff is in the extensions (e.g. things like ControlNet), where API documentation is even poorer. I've used API calls taking 60+ arguments, half of which I can't explain, yet somehow they work. Even getting an explanation of what all the parameters really mean is hard...
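To give a flavor of what scripting it looks like, here is a minimal Python sketch. Note that it uses a different route from the websocket one described above: the plain HTTP API that Automatic1111 exposes when launched with `--api`. The address, the `/sdapi/v1/txt2img` path and the payload fields (`prompt`, `steps`, `width`, `height`) reflect that API as I understand it at the time of writing. Given how often things change, treat all of them as assumptions to check against your own install.

```python
import base64
import json
import urllib.request

# Assumption: a local Automatic1111 instance launched with the --api flag.
BASE_URL = "http://127.0.0.1:7860"


def build_txt2img_request(prompt, steps=20, width=512, height=512,
                          base_url=BASE_URL):
    """Build (but do not send) a text-to-image request."""
    payload = {"prompt": prompt, "steps": steps,
               "width": width, "height": height}
    return urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def decode_images(response_body):
    """Decode the base64 strings in the response's 'images' list to bytes."""
    return [base64.b64decode(item)
            for item in json.loads(response_body)["images"]]


def txt2img(prompt, **kwargs):
    """Send the request to a running server and return raw image bytes."""
    request = build_txt2img_request(prompt, **kwargs)
    with urllib.request.urlopen(request) as response:
        return decode_images(response.read())
```

With a server running, `txt2img("a red cube", steps=5)` should return a list of image byte strings that can be written straight to disk. Keeping the request building separate from the network call makes it easier to patch the payload when the API shifts again.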

They are also unstable in the buggy way. E.g. for a project I did in August, which feels like an age ago in this space, I was constantly running into out-of-memory errors every few minutes in an upscaling script in SD. I managed to find the problem: a missing tab character in a Python script was preventing garbage collection inside a for loop (the missing tab meant that it was garbage collecting only after the loop finished). I submitted a pull request to fix it and that went in last month, I think. There are *many* bugs like this in these code bases, which is hardly surprising given how rapidly everything has been developing in the last 18 months, and how much competitive attention is being given to the bleeding edge.
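For readers who don't write Python, here is a toy illustration of that class of bug. It is not the actual SD code. The function names and the `tile * 2` stand-in for the upscaling work are hypothetical, and `gc.collect` stands in for whatever cleanup call the real script used. The only difference between the two functions is the indentation of one line.

```python
import gc


def upscale_tiles_buggy(tiles, cleanup=gc.collect):
    """Cleanup is dedented by one level, so it runs once, after the loop."""
    results = []
    for tile in tiles:
        results.append(tile * 2)  # stand-in for the expensive upscaling step
    cleanup()  # outside the loop: memory piles up across all iterations
    return results


def upscale_tiles_fixed(tiles, cleanup=gc.collect):
    """Cleanup is indented into the loop body, so it runs per tile."""
    results = []
    for tile in tiles:
        results.append(tile * 2)  # stand-in for the expensive upscaling step
        cleanup()  # inside the loop: memory is released after each tile
    return results
```

Both versions return the same results, which is why this kind of bug is hard to spot: nothing is wrong with the output, only with peak memory use. Passing a counting function as `cleanup` shows one call for the buggy version and one call per tile for the fixed one.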

Anyway, this is all to say that anyone building a UI for these things is going to be overloaded by the race to keep up with the latest API changes, new models and features, the question of how to present these usefully to a user, and bugs in things that inexplicably no longer work.

My point is, I know first hand that it would be an awesome thing to have, but I also know that it would be an incredible amount of labor not just to build it, but to keep it running and deal with all the rapid API changes, new models and features, new bugs and workarounds, and how to present these to a user in a way that anybody curious to explore it would be able to make sense of. Much like many of the SD tools themselves, this perhaps sounds more like a project needing dedicated community development.