Getting *exactly* 1 tick interval out of 4n master phasor

I am seriously struggling with the math or the implementation of this.

I need to take a 4n phasor~ and derive *exactly* 1 tick interval from it. It must be the exact synced tick value that I would get from a phasor~ synced to 1 tick. I am putting emphasis on the *exact* here.

MSP or Gen~.

Can anybody please help me out and point me in the right direction? I am seriously lost.

Thank you

4n = 480 ticks.

at tempo nn, 1 tick = 60000 / (tempo * 480.) ms

or

1 / (60 / ($f1 *480.)) hz
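For anyone who wants to check the numbers outside of Max, here is a minimal sketch of the two formulas above. The function names are made up for illustration, and 480 ticks per quarter note is taken from this thread.

```python
def tick_ms(tempo_bpm, ppq=480):
    """Duration of one tick in milliseconds at the given tempo."""
    return 60000.0 / (tempo_bpm * ppq)


def tick_hz(tempo_bpm, ppq=480):
    """Frequency of a phasor that completes one cycle per tick."""
    return tempo_bpm * ppq / 60.0


if __name__ == "__main__":
    # 120 BPM is only an example tempo, not a value from the original post.
    print(tick_ms(120.0))
    print(tick_hz(120.0))
```

At 120 BPM this gives a tick of roughly 1.04 ms, which is shorter than one 64-sample signal vector at common sample rates.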

what do you mean by derive ?

and what is "exact" vs not "exact"?

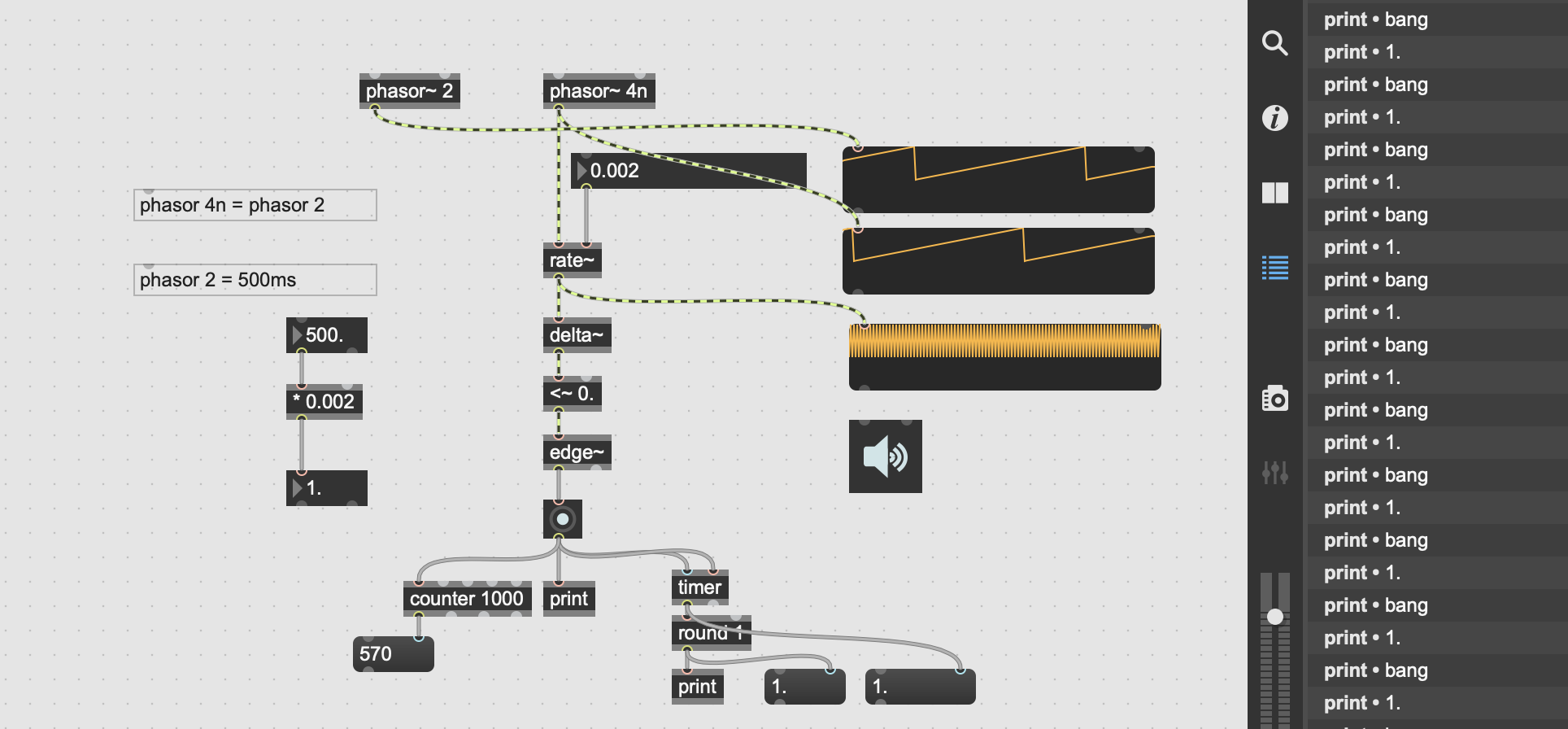

By derive I mean attaching a rate~ object to the phasor~ and getting exactly 1 tick interval out of the rate~ object.

Because when I divide the 4n phasor by rate 0.0020833333333333 (one quarter note divided by 480 ticks) I am not getting 1 tick but 1.451247 ticks as measured by the timer object.

And when I measure a metro with an interval of 1 tick and quantized to 1 tick I am getting yet another value: 4.353741.
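As a rough illustration of what "480 tick cycles per quarter-note ramp" means at the sample level, here is a sketch that scales a 4n ramp by 480 and notes where the tick index changes. It is not how rate~ is implemented internally; `tick_boundaries` is a hypothetical helper, and the 44.1 kHz sample rate and 120 BPM tempo are assumptions chosen for the example.

```python
def tick_boundaries(sample_rate=44100.0, tempo=120.0, ppq=480):
    """Sample indexes within one quarter note at which the derived tick changes."""
    samples_per_quarter = sample_rate * 60.0 / tempo
    total = int(samples_per_quarter)
    boundaries = []
    previous_tick = 0
    for n in range(1, total):
        master_phase = n / samples_per_quarter  # the 4n ramp, 0..1
        tick = int(master_phase * ppq)          # which tick this sample falls in
        if tick != previous_tick:
            boundaries.append(n)
            previous_tick = tick
    return boundaries


if __name__ == "__main__":
    b = tick_boundaries()
    lengths = [later - earlier for earlier, later in zip(b, b[1:])]
    print(sorted(set(lengths)))  # tick lengths in whole samples
```

Under these assumptions one tick is 45.9375 samples long, so in the signal domain the ticks alternate between 45 and 46 samples. That is well below a 64-sample vector, which is worth keeping in mind when reading values from a message-domain object.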

This confuses me.

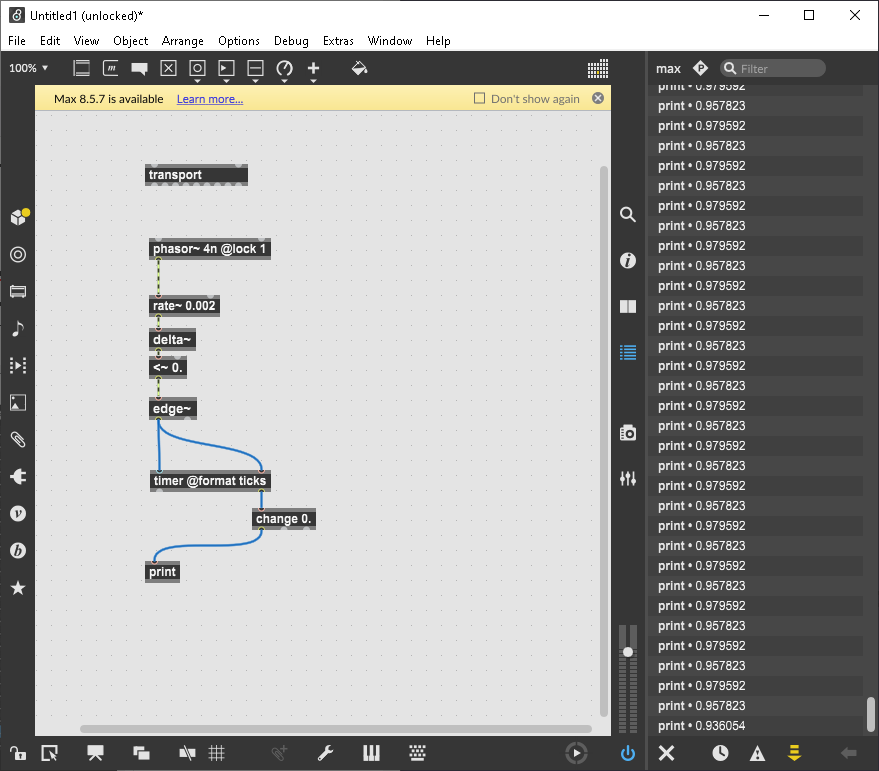

EDIT: Hold on... I have now measured a phasor~ object with an interval of 1 tick and lock 1 and I am getting the same value as with rate~: 1.451247. So is this a precision limit?

check your math again

1995: let's use computers to sequence stuff in floating point, samples or vectors, because that gives us a better resolution than MIDI.

2024: oh, komputer haz tix now. lez use tix.

OK, so the reason I was getting a different value for 1 tick than you, Wil, is because my signal vector size was 64. When I reduced it to 16, I was able to get to 0.957823 ticks as measured by the timer.

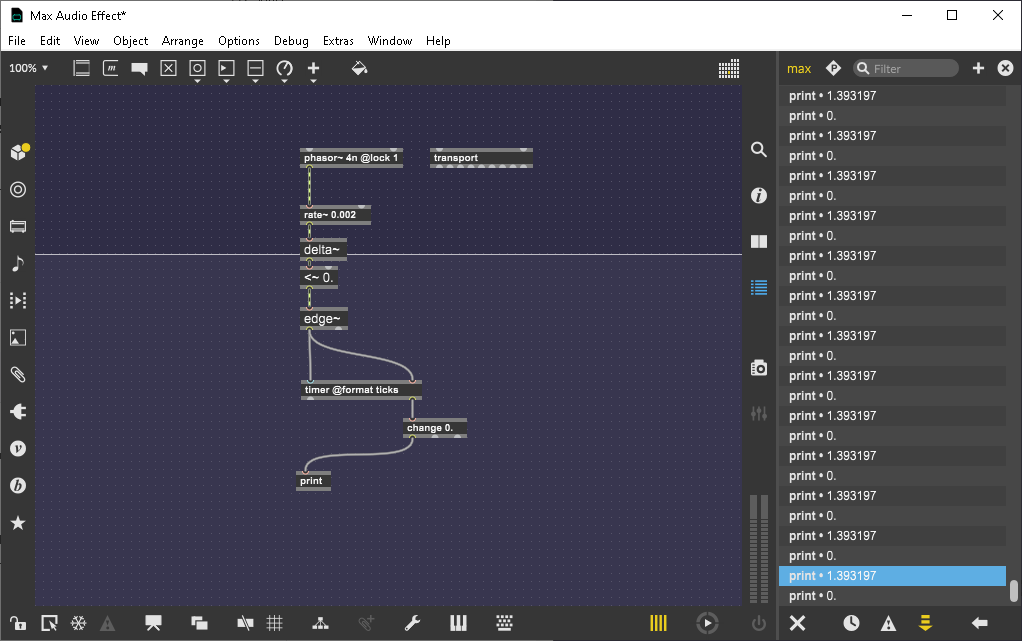

The reason I use ticks, Roman, is because I am trying to sync a M4L device to Ableton's transport. And unfortunately this is where I am stuck with 64 signal vector size. The best M4L can do in my patch is 1.393197 ticks as measured by the timer.

I tried counting samples instead but couldn't get the count~ reset to be sample perfect (or at least good enough) against the transport, and got timing issues. I am not dismissing the possibility that my implementation was wrong - I am a total dummy when it comes to signal processing and math.

Anyway, even with the 1.393197 ticks I figured out a good enough sync in M4L for now. I wish I was able to do music exclusively in MSP/Gen~, as M4L causes me a lot of issues, but it is what it is, I guess.

yep, it is 1.3ms because live has 64.

and if you have a good reason to do something, then you can ignore comments such as mine above. you only have to convince yourself that you actually have one. (whenever i see an edge~ object in those sync patches i get doubts.)

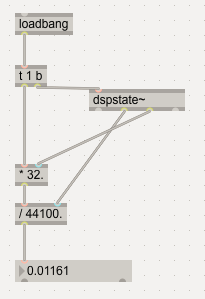

it is good practice to build patches so that they will work under different conditions. classics such as samplerate or vectorsize should be "visibly" included in the math part of the patch for better readability and debugging - you can always derive those dynamic values from the system. then it keeps working when you change settings or switch to another runtime.

btw. have you looked yet into [plugphasor~]?

I think part of your problem is that timer is lying to you because it can only run on event boundaries (every 64 samples). Have you tried using plugsync~? I had the best results in synchronizing my Max based sequencers to Live's sequencing and got it totally locked in by triggering off plugsync~.

You can definitely get them locked in, but I don't know if you can get event-max threads to reliably report your timing - event messages are always jittered by the signal vector in Live as it always runs in "audio in interrupt" with a vector size of 64.
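To put numbers on that, here is a small sketch of how long one signal vector lasts. The 44.1 kHz sample rate is an assumption (it is not stated anywhere in this thread), and `vector_ms` is just an illustrative name.

```python
def vector_ms(vector_size, sample_rate=44100.0):
    """Duration of one signal vector in milliseconds."""
    return vector_size / sample_rate * 1000.0


if __name__ == "__main__":
    one = vector_ms(64)
    print(round(one, 6))      # one vector of 64 samples
    print(round(3 * one, 6))  # three vectors of 64 samples
```

At 44.1 kHz one 64-sample vector is about 1.451247 ms and three vectors are about 4.353741 ms, which are the same figures reported earlier in the thread. That would be consistent with the timer reporting vector boundaries rather than the tick length itself.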

@Roman's and @Iain's points are valid.

If you care for accurate timing at the level of milliseconds, the best thing is to keep everything in the signal rate domain (MSP objects, gen~, etc.) as much as you can. An object like [edge~] is a boundary between signal and message domain, which *at best* will be limited by the current block size (aka vector size). Note that [timer] is also message domain, so the accuracy of what it can measure may also be limited.

Also yes, look at plugphasor~.

One point worth mentioning following on Graham's answer is that it also depends on whether you need accurate timing in the moment, or just overall. Objects like Max's metronome and my own delay and clock handlers in Scheme for Max use the modern Max clock facilities, which correct for the jitter such that they will not drift further out than the one vector even over long periods of time. (So you might be off by a signal vector at any time, but you won't get further off.) For me, I get enough advantage out of using Max message/event based logic that I can happily live with the jitter of one vector as long as the jitter doesn't accumulate and start times are synchronized. For other purposes (like say layering transients really precisely) that might not be acceptable. My sequencers run in the event thread but get *triggered* off plugsync~, and I'm fine with them being off by <ms in either direction. The important part for getting them usable was ensuring the triggering process didn't accidentally introduce an extra vector of delay. Or worse, depend on Live API observation, which will delay until the next pass of the low-priority thread, which can sometimes be a badly audible delay. Real human beings are like this too - a regular drummer has a lot of jitter (multiple ms), and in the case of very good ones, that microtiming is part of their personal feel and is desirable.
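The difference between jitter that stays bounded and jitter that accumulates can be sketched like this. It is only an illustration of the idea, not how Max's scheduler is actually implemented. All names are hypothetical, and the 10 ms interval and 64 samples at 44.1 kHz are assumed example values.

```python
import math


def quantize_up(t, step):
    """Round a time up to the next vector boundary (small epsilon for float noise)."""
    return math.ceil(t / step - 1e-9) * step


def corrected_clock(interval, step, count):
    """Schedule every event from its ideal time, then snap it to a boundary."""
    return [quantize_up(k * interval, step) for k in range(1, count + 1)]


def naive_clock(interval, step, count):
    """Schedule every event from the previous (already snapped) event time."""
    times, now = [], 0.0
    for _ in range(count):
        now = quantize_up(now + interval, step)
        times.append(now)
    return times


if __name__ == "__main__":
    step = 64 / 44100.0 * 1000.0  # one vector in ms, assuming 44.1 kHz
    interval, count = 10.0, 1000
    ideal_end = interval * count
    print(corrected_clock(interval, step, count)[-1] - ideal_end)
    print(naive_clock(interval, step, count)[-1] - ideal_end)
```

In the first version the error never exceeds one vector, no matter how long it runs. In the second, each event inherits the rounding of the previous one, so the error keeps growing.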

On the other hand, if you really want to get into the kind of sequencing you can do entirely in the signal domain, definitely check out Graham's book with Gregory Taylor on Gen! They go deeply into it and it's well worth reading.

https://cycling74.com/books/go

"overall"

yep. as soon as you additionally want to slave your DAW to a drum machine's MTC / midi start & stop, all that max/msp surgery between data rate and signal rate becomes even more pointless.

Thank you very much to you all! Some very important points and tips in this thread that will help me with the next steps.

For some reason I hadn't realized that the timer is event based, although it's completely evident from the fact that it accepts bangs... I am a dummy, what can I say.

plugphasor~ is news to me, I will check it out!

The M4L device I am working on is outputting MIDI, so there is no need for sample perfect sync of individual events on the output. But there must be no overall long-term tempo drift. That's now sorted and the device works. So thank you all again!

My main problem was figuring out whether the objects I wanted to use were actually giving me the intervals I was after, and where the desync issues were actually coming from. I wasn't even sure the transport and plugsync~ objects returned 1 tick until I realized the influence of the vector size and the limitations of the timer, so in the end I was really confused by it all (the fact that plugsync~ has "double" on its tick outlet confused me even more).

Overall it was a cluster**** and it took me several days of hard and frustrating work to figure it all out. But lesson learned and now I have a sequencer with various sequence interpolation features, fluid shifting tempos, free drawing interface for sequencer step positions with 1 tick resolution and several tempo resync methods. So I think it was worth it! Important experience.

And I am definitely interested in staying signal-based only in the future, as the precision really intrigues me, but that would probably require me to stay exclusively in Max MSP, which is something I am not ready for yet.

@Roman

For me, it was the need for the fluid tempo manipulations of the rate~ object that led me to bring an MSP/gen~ implementation into my otherwise event-based device. I couldn't find any satisfying event-based equivalent to what a rate~ object connected to a phasor~ can do.

Just to throw an additional possibility in there... I ported Victor Lazzarini's csound~ object for Pd to Max last year and it allows one to make both event and signal messages from various control rates. I have found this to be a really handy addition to the toolkit myself - with csound it is trivially easy to have audio rate sequencing done at control rates, saving a lot of cpu cycles. (You can do this sort of thing with poly~ in Max, but it is *much* more cumbersome). Personally, I find any of Max event time, Gen, or csound most useful depending on the particulars of the task.

Object: https://github.com/iainctduncan/csound_max

Demo: https://www.youtube.com/watch?v=ZMWpfdoe2fw&t=3s

The help patches show making control signals.