output MTC based on SMPTE?

I'm back again, and I see that a lot of information and questions have shown up.

This has become a lengthy thread. I am thinking about deleting irrelevant stuff

from my posts, so that the important info is easier to find.

To the questions :

If the deck and Live are already playing and nothing but the play position changes,

it would be enough to send the new time.

Same would be if one just wants to change speed while playing.

But the problem is that Live reacts differently to position changes depending

on whether it is playing, starts again after a pause, or plays the song for the very first time,

and on which message was used to tell it to start again.

There is documentation about that, but it does not explain exactly how it works,

one has to discover that by sending messages and watching what happens...

Here are messages in detail:

set is_playing $1 starts or stops Live, but playback always starts from 0.

call start_playing starts or resumes Live Transport

call continue_playing starts or resumes Live Transport

call stop_playing stops Live; if one sends it twice, it rewinds Live to 0.

The difference between start_playing and continue_playing seems

relevant only if one changes position in Live Transport manually.

start_playing starts playback from currently set and visible Bar/Beat count

continue_playing would continue from the position where Live stopped playback,

regardless of what is shown in the Bar/Beat counter.

But neither of the above messages respects a new position message received from BLT and max4Live.

(message is set_current_song_time nn in ticks)

For that reason one should always send all 3 messages when

Live has to start playback.

And sending /master/time nn periodically to keep longer songs synced

would be just fine.
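The start sequence described above can be sketched as follows. This is only an illustration in Python, not the actual Max patch or trigger code. It assumes the three messages are start, speed and time; `/master/enable` and `/master/time` are the addresses used in this thread, while `/master/speed` and the function name are made up for the example.

```python
def restart_messages(time_ms, speed):
    """Return the OSC messages to send, in order, when Live has to start playing.

    /master/speed is a hypothetical address used only for this sketch.
    """
    return [
        ("/master/enable", 1),      # start the transport first
        ("/master/speed", speed),   # then the current playback speed
        ("/master/time", time_ms),  # then the current position
    ]


for address, value in restart_messages(10000, 1.03):
    print(address, value)
```

Keeping the order in one place makes it harder to forget the speed and time messages after a start.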

--------------------

If preventing the previous Master deck from sending the enable 0 message when the Master deck changes

causes difficulties, we could simply ignore enable 0 messages for some period whenever an enable 1 message comes in.

I guess that a deck sends the enable 0 message when it stops, which then

allows the other deck to become master, if it was engaged.

1 second would be a safe length of time to ignore enable 0.

I can't imagine why one should start a song and stop it 1 sec later...
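A gate like the one proposed above might look like this. It is a minimal sketch in Python, not the Max for Live implementation; the class name and the idea of passing in the current time are assumptions for the example.

```python
class EnableGate:
    """Ignore 'enable 0' for a short hold-off period after 'enable 1'."""

    def __init__(self, hold_seconds=1.0):
        self.hold_seconds = hold_seconds
        self.last_enable_time = None

    def accept(self, value, now):
        """Return True if the enable message should be passed on to Live.

        `now` is a timestamp in seconds supplied by the caller.
        """
        if value == 1:
            self.last_enable_time = now
            return True
        recently_enabled = (
            self.last_enable_time is not None
            and now - self.last_enable_time < self.hold_seconds
        )
        # A stop arriving right after a start is treated as a stale message
        # from the previous Master deck and dropped.
        return not recently_enabled


gate = EnableGate()
print(gate.accept(1, now=10.0))  # True
print(gate.accept(0, now=10.3))  # False
print(gate.accept(0, now=11.5))  # True
```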

--------------------

From my tests, it seems best practice to use

call start_playing followed by speed and time.

That creates the smallest jump when Live restarts playback.

Both BLT-Live.amxd versions I posted use call continue_playing.

That should be changed in the next version.

If that can be of any help, the Live API also has direct ways to solo tracks,

or manipulate many parameters, if one wants to keep all in OSC,

instead of going both OSC and Midi.

-------------------

James, I will send You all code I have done as max patches,

including mod of Your SMPTE.app, generation of MTC, talking to Live via UDP etc

But let's get this stuff for Johnny working first.

I will post a new max4Live device as soon as it is clear whether the mentioned

disabling of the /master/enable 0 message is needed or not.

AND HAPPY BIRTHDAY !!!!!!

Hi guys,

Thinking about the /master/enable 0, here's my 2 cents:

I think what makes the most sense, is that a /master/enable 0 only needs to be sent when there is NO activity on the Master deck.

If song A fades out at the end, and song B already gets started, eventually the master deck will switch to deck B, but since there is activity going on, no /master/enable 0 should be sent. It should only be sent when the master track ends or gets paused (in other words: when the music is OFF).

Thanks for the clarifications, Source Audio, I can definitely work with that information. And it should be no problem to only send /master/enable 0 when the (only) Master player has stopped, which is to say, there is no music playing, as Johnny would like.

And I agree, let’s solve his problems before I get distracted by learning more about how your code works! I am only curious, and am very busy right now, so staying focused is important, but I very much appreciate the offer for later.

I have my studio network set up with the CDJs on it and am experimenting now, but I realize I don’t know how to set up BLT-Live.amxd… but ah, I see, it opens in Live, and lives on a track, and I can open it up in Max and inspect the patch. Cool stuff! Ok, so I need to send it OSC messages on port 17001, it seems. I will give that a try.

Which, I guess makes sense, given that was the port I chose for my own SMPTE max patch, now that I remind myself! Of course we are going to need to use different ports for that and BLT-Live.amxd if you want to be using both at the same time.

And Johnny, to answer a question from earlier, I think it would be cleaner and easier to understand and maintain if we used two separate triggers for the MIDI stuff to pick a track, and the OSC stuff to control the transport, just so it is clear which code is required for which purpose. But if, for example, you only want the OSC stuff to run when the loaded track is one that is recognized, then we might want to combine them, or at least be sure that the track map is in a global so it is accessible to both triggers… and I now see that you have done exactly that, using the idea of having an [artist, title, album] tuple as the map key. Great.

And I see that you already have OSC clients set up in both your triggers, so I guess I will leave it that way. I will add the transport controls via BLT-Live.amxd to the “Midi to Ableton” trigger, renaming it “Midi to Ableton and OSC Transport Control via BLT-Live.amxd” and I will rename the second trigger “OSC to Max for SMPTE”. Since my SMPTE patch has a configurable listening port, let’s change that second trigger to use port 17002, ok? That way when you are using both that and BLT-Live.amxd, they won’t be fighting over port 17001.

Hi James,

You are right saying keeping the Midi and OSC code in separate triggers will result in cleaner code. However, since the Ableton transport only needs to run when a recognized track is being played, one could also say that it makes sense too to combine them. I'll let your expertise decide on which is best :-)

Yes, since I will be using your SMPTE app and the AMXD simultaneously most of the time, port 17002 is good.

On a sidenote, I'm wondering if using the ClipSMPTE plugin in Ableton to generate SMPTE will give good results (= low latency). Will have to check on that once the transport is running smoothly.

I have run into a big snag. It turns out that the series of events that happen for a trigger that is watching the Master player when the master player changes are very different in the scenario where you press Stop on the master player (in other words, multiple players are on-air, so the track you are moving away from was still the Master when you stopped it), and when you have fully faded out the formerly Master player, so the Master moves to another player while the track is still playing. In the former case, the Trigger will be deactivated when BLT sees the master player stop playing, and then immediately reactivated when it gets a packet from the new master player. Which will make your MIDI code work, and allow us to have the TimeFinder track the correct player, and so on. But it leads to the spurious /enable 0 messages you were seeing. Even more of a problem is the latter scenario: when it is a fader-driven switch to a new Master player, the trigger never deactivates and reactivates, because it never sees a Master player stop. Which means your MIDI will not get sent, and the trigger will be simulating timecode for the wrong player.

I am going to need to write some clever code to handle this, using the Tracked Update expression to notice if the Master player has switched on us during a crossfade, and do the things we would have done in the deactivation/activation expressions in that case. I will also need to not immediately send the /enable 0 message after deactivation, but instead start a timer, and only send it if we have not seen the trigger reactivate within a fraction of a second.

I think that with these changes, we may be able to get it working the way you need. But I can’t do them right now, as I need to go to that birthday dinner I mentioned. I will continue working on this during the week, and send you some things to try as soon as I can, and I should have much more free time next weekend if it comes to that. It is really a pity that this is happening when I am so very busy at work, though, and have forgotten so many of the details of Beat Link Trigger since I have not been able to work on it for so long. Even so, we should be able to get this working for you.

Actually, we may not need the fancy timer if we always send the time and speed after starting playback again with /enable 1. So let’s try that approach first, it’s much simpler. I’ll add code to your trigger to detect stealthy Master player changes and make up for the missing deactivation/activation events, make sure that whenever we start the transport we follow that with the current time and speed, and add periodic time and speed updates to maintain sync. That seems like a promising approach to me, and would be worth testing for a while with your setup and tracks.
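The approach described above could look roughly like this. It is a sketch in Python rather than the Clojure used in BLT expressions; the function name, the state dict and the `/master/speed` address are assumptions for illustration, while `/master/enable` and `/master/time` are the addresses used in this thread.

```python
def on_tracked_update(state, player, time_ms, speed):
    """Return the OSC messages to send for one status update from the Master player."""
    if state.get("player") == player:
        return []  # same player as before; periodic resync is handled elsewhere
    # The Master moved to another player without the trigger deactivating and
    # reactivating, so do what the activation expression would have done.
    state["player"] = player
    return [
        ("/master/enable", 1),
        ("/master/speed", speed),   # hypothetical address
        ("/master/time", time_ms),
    ]


state = {}
print(on_tracked_update(state, 1, 0, 1.0))       # first update: start sequence
print(on_tracked_update(state, 1, 500, 1.0))     # same player: []
print(on_tracked_update(state, 2, 42000, 1.03))  # crossfade to player 2: start sequence
```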

@James - I am more relaxed now, knowing that You can open

and edit the BLT-Live.amxd plugs.

That makes things much more efficient, You don't need to wait

for any answers etc.

Just please, replace that call continue_playing with call start_playing.

I am away today, but please just post any questions if needed.

Here is the modified plug, including that gate for enable 0 messages.

Hi James,

Woops that sounds like a complex problem. I hope you can figure out the solution without the fancy timer, and I'd be happy to test it.

In a very simple setup you can even test it yourself, by adding the same MP3 you're playing on the CDJ to an audio-track in Ableton, and see if they run/stay in sync.

Let me know if I can help in any way. Happy to assist!

Thanks, Johnny! Although it is somewhat complex, it is absolutely nothing compared to what it took to implement Beat Link and BLT. It will not take me long once I have a few hours of focused time available to work on this, without my brain full of the designs I am building for my project at work. I will send you something to test as soon as I can, and appreciate your advice about how to test it locally myself, although for now I’d rather use the limited time I have available to work on the code than try to figure out how to change my MIDI routing and configuration to match your connections with Live.

Hi, Source Audio! I’m trying to figure out what you meant by a gate for /enable 0 messages. We should not need one, as long as I always send the time and speed after /enable 1, should we?

OK, Johnny, I have done a lot of work on your triggers. They are now the most complex and full-featured of any that I know of (the first one uses every type of expression that I made available). I am attaching a modified version of the trigger file that you sent me. It seems to be more or less working the way I want, although I did not have time to set up proper tracks in Live for a real test, so I will need to get definitive results from you. I am also attaching a version of BLT-Live.amxd which includes the change that Source Audio most recently requested, in that it uses start_playing rather than continue_playing.

I found a couple of small mistakes in your triggers; unfortunately I don’t remember all the details, but I have tried to reformat them for better readability, and have added more (and corrected) comments. Here is a summary of the main new features in BLT-Johnny-James.blt:

Both triggers are now only enabled when a recognized track is playing in the Master player.

The SMPTED trigger uses port 17002, so be sure to change your SMPTED configuration. I left the BLT-Live.amxd trigger at port 17001 since that does not currently offer support for user port configuration. We can make it fancier once the basics are working properly.

The Activation and Tracked Update expressions work together to properly handle crossfade situations where the Activation expression is not invoked if there is a change in Master player. (Experimenting today, I found that was not always happening, but we are extra cautious now.)

The Beat expression in the BLT-Live trigger will resynchronize the position on every down beat (the first beat of each bar) to protect against clock drift between the CDJs and Ableton. (We can adjust how often that is done if this seems like too much or too little.)

The Global Setup expression opens the Player Status window for you so you can be sure the TimeFinder is running, which is needed for timecode-related features to work.

There are likely to be some problems, and things you would like changed slightly, but hopefully this is a much closer starting point. I am happy to continue helping, but I wanted to get something into your hands so you could start testing!

Hi James, thanks a lot for this! Will try to test it out asap and report back to you.

Quick question: You mention updating my SMPTED configuration. Where do I do this?

@James - by a gate for the enable 0 message I meant a gate that disables reception of that message for a short time after an enable 1 message comes in.

That would make sure that the stop of the previous Master deck will not stop Live when the new Master deck sends its start message.

But if You can make sure that only the current Master deck sends its status,

then it of course is not needed.

@Johnny - James means that You should set the UDP port of the SMPTE.app that You use to send SMPTE to Resolume to 17002.

Here is a version of BLT-Live.amxd that has a configurable UDP port and displays its version number, so that You know which one You are using when things get updated.

It is an exact copy of the one James uploaded in the last post + port select and version display.

Hi James,

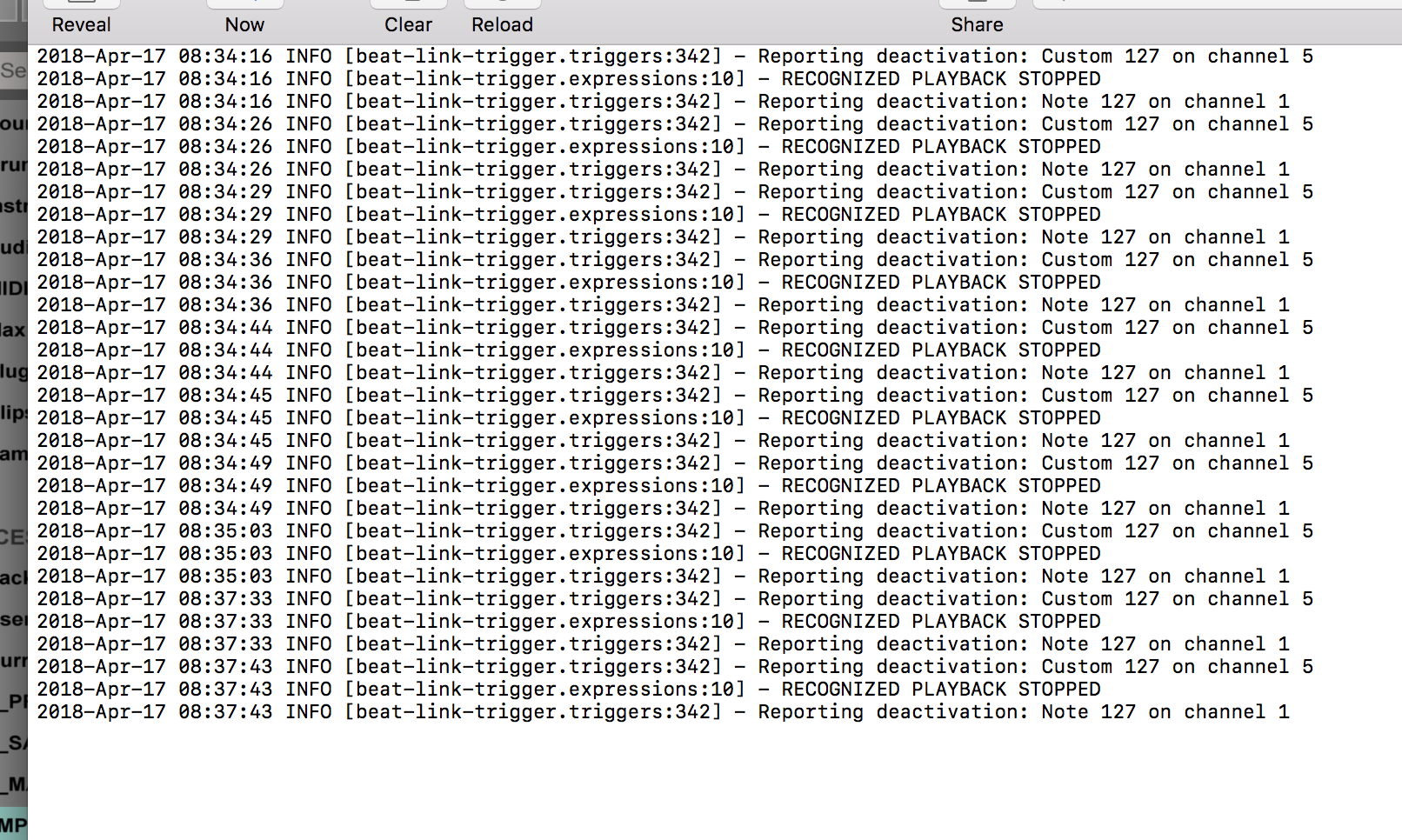

I gave your triggers a real quick testrun before leaving for work. I played some tracks on the CDJ's (which are in the tuple), but no transport started running in Ableton. I checked the logs, and it appears no track gets recognized, no midi notes to activate the tracks and no OSC messages get sent, only deactivation commands.

Also, when a track gets loaded on the master deck, I think the "Recognized playback stopped" line and 2 "reporting deactivation" lines are being logged TWICE. This was already the case as well in my own version of the triggers. Is it possible to prevent this being sent?

I'm a bit worried some tracks could get activated when loaded (and then get deactivated when the track is being played)

I will have a lot more time tonight to test these triggers more in detail.

Attached is a screenshot of the log.

I don’t know what is going on there, I will need more of the log, along with a precise description of exactly what you and the players were doing at the timestamps mentioned in the logs. But I won’t have time to look at or think about that until after work and dinner this evening. Sending the actual log file is better than a screenshot of the last few lines, when we are doing that kind of analysis.

I do notice one thing, though: The second trigger, OSC to Max SMPTED, is configured to send an actual MIDI note, we should change that since we are actually using it for other purposes. In the Message menu, change the selection from Note to Custom.

Next, it sounds like you are a bit confused about the control flow and event processing in Beat Link Trigger, which is not surprising as it is quite subtle and complex. It would help if you could learn a bit more about the details of the DJ Link protocol (as described in the Dysentery protocol analysis document, although maybe one of these days I need to write a more user-oriented document that describes highlights of the important bits without going into quite so much network detail…) and review how BLT reacts to it. But here is a quick summary of what looks like a bit of confusion in your last message: Tracks do not activate or deactivate, Triggers do. Because we have two separate triggers both watching the Master player, we are going to see log messages for both of them whenever the triggers activate or deactivate. One is sending information to Ableton, the other is sending information to Max/SMPTED. And remember that activation means that the player they are watching has started playing when the Enabled filter has returned true in response to the most recent packet from that player, and deactivation happens when either that player stops playing, or the Enabled filter returns false in response to a packet from the player when the trigger was previously active.
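The activation rule summarized above can be written as a tiny state machine. This is a simplified model in Python for illustration only, not the actual BLT implementation; the function and argument names are made up.

```python
def next_trigger_state(active, playing, enabled):
    """Return (new_active, event) for one packet from the watched player.

    `playing` is whether the player reports that it is playing, `enabled` is
    the result of the Enabled filter for that packet. `event` is
    "activation", "deactivation" or None.
    """
    should_be_active = playing and enabled
    if should_be_active and not active:
        return True, "activation"
    if active and not should_be_active:
        return False, "deactivation"
    return active, None


active = False
for playing, enabled in [(False, True), (True, True), (True, True), (False, True)]:
    active, event = next_trigger_state(active, playing, enabled)
    print(playing, enabled, "->", event)
```

In this simplified model a deactivation can only follow an activation, so two deactivations in a row for the same trigger cannot occur.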

I cannot explain, however, how we could see multiple deactivation messages for each trigger without any activation messages for them in between, as was visible in your screenshot. We are going to need to investigate exactly what is going on with your system and network, and I will need to study that part of the BLT code again carefully, as well as the full log files. We may have found an issue in the internals of BLT itself. But we do have time to track it down.

Also remember that when you are in a situation where you are not working with SMPTED, you should change its Trigger’s Enabled menu from Custom to Never, to simply ignore it. Then when you actually want to use it, change Enabled back to Custom.

Source Audio, thanks for explaining where to change the SMPTED configuration! And the reason I thought the gate is not necessary is that players don’t report status to your AMXD, the trigger does, and even if it deactivates during a transition between players, the deactivation will always happen before the activation, so the transport will end up enabled with the new position and speed. Once we sort out the mysteries I was describing above.

Hi James,

About the logs, I deleted my logfile before testing this morning, so this is everything that was logged after loading and playing some tracks. I literally only loaded tracks, played them, paused, loaded another one, nothing else. This was all done on 1 deck only, I didn't crossfade to another deck either.

I think my words were a bit misunderstood: By 'tracks activating/deactivating' I meant exactly that, not in BLT but in Ableton. The way all my audio and midi tracks are set up in Ableton, is that they are all deactivated (grey) by default. Only when the midi note is being sent when a recognized song is being played, the audio&midi track becomes active (yellow). The reason that all my Ableton tracks are deactivated by default is simple, because otherwise all those tracks would play at the same time.

I'm a bit puzzled myself with the deactivation messages getting sent twice on track load. I figured it could have to do with the fact that the CDJ's do a quick run-through through the song (jumping from cue point to cue point) when loading a song? I'll play around with it some more and see if I can find an explanation for this behaviour. Right now it's not a huge problem, since the messages are being sent twice (which means that the Ableton track gets activated and deactivated in the matter of about 1 second), it would be worse if the deactivation messages were only getting sent once. Anyway, I want to get to the bottom of this, 'cause it's weird.

I'll test some more with the SMPTE trigger set to 'Never' in a couple of hours.

Ah! My apologies for misunderstanding you. And thank you for reminding me about the weird behavior that occurs when tracks have hot cues in them. I had none of those in the tracks that I was testing with last night, so I will be sure to add some when I get back to testing tonight.

Hi James,

First of all, thank you sooooo very much for all the hard work you've put into this. I feel we've taken a big step towards the final result these past days, and it won't be long till we get there. I can already smell it!

I had the time to test out your triggers some more and here's the remarks that I have so far:

- Got an error when I started BLT: "Unable to use Global Setup Expression. Check log for details". Turns out, there was an error in the 4th line to show the player status (beat-link-trigger.triggers/show-player-status is not public, compiling...). I removed that line and replaced it with "(seesaw.core/invoke-later (@#'beat-link-trigger.triggers/show-player-status nil))" like you had provided in an issue posted by me on github, which fixed it.

- When a playing track is being paused, no midi note is sent to deactivate the Ableton tracks. Then, when I resume the track, the midi note is sent which deactivates the Ableton track. When a master track ends, the Ableton tracks also don't get deactivated. I tried copying the code to send the midi note in the activation expression and paste it in the deactivation expression, but then I got a "Unable to resolve symbol: midi-note in this expression" error in BLT.

- As long as I keep my pitch at 100%, things run pretty well. However when I change the pitch up or down, before or during the track, the midi track runs out of sync. I was hoping that the resynchronization was gonna pick up on that and get the sync back on track, but it didn't. Also when I fastforwarded a track during playback, the sync wasn't spot on anymore either.

Johnny, it sounds like you are not using the latest build of Beat Link Trigger. You should be able to use the version of the Global Setup Expression I sent you if you are using the latest 0.3.8 preview that I put on GitHub a few weeks ago. Could you check that and let me know? If that is not the case, then I need to update the version on GitHub.

When it comes to deactivating the Ableton tracks, you are correct, there was no note being sent. That is how it was in the version you sent me as well. What note(s) should be sent to deactivate the tracks? I can put that in to the deactivation expression for you this evening.

Finally, the issues with sync at different pitches make me believe that the code in BLT-Live.amxd is not quite correct at figuring out where to position the Ableton track when we are working at different pitches. Perhaps Source Audio can weigh in on that? Otherwise I will need to find the documentation for the Ableton instrument API that he is using and see if I can figure it out for myself, and I’m not sure when I’ll have time for that, although I certainly find it an interesting thing to explore.

Anyway, I agree we are getting closer, and have confidence we will be able to get it working just the way you want.

Hi James,

yes it's definitely the 0.3.8 preview version that I'm using. However, I downloaded it again, and now it does work. Maybe I was using an older build or something? Weird.

Oops I had been experimenting with some code right before I sent you my triggers, and the midi note to send at track pause/end wasn't included when it should have been. So yes, I would like that note being sent. Thanks

About the sync: I played around with the pitch while the track was playing, and that messed up the sync quite a bit, which isn't really the biggest problem to me, since I won't be playing around with the pitch (at all) in a live setup anyway.

However, the sync is also off when the pitch is changed BEFORE the track starts playing, and that's a bit of a problem because right now this basically means I have to play my tracks at pitch 100% (instead of 103%) in order to run in sync.

Hopefully indeed, Source Audio can shed some light on this one.

It sounds like the time and/or pitch math is definitely going wrong. I wonder if there is any way to see the OSC messages that are going to the .amxd? I might be sending the pitch and/or time in the wrong format or with the wrong labels and causing them to be ignored, or perhaps we need to review the math that is being used to try to translate the pitch to tempo, and the time to a song position in the Max patch itself.

I’d be happy to write the code to send the deactivation notes if you let me know what they should be.

And yes, the unfortunate thing about preview builds is that they change over time as I add new features before bundling them as an official release. One way you can check if the one you are running is likely to include a feature or change is by looking in the “About” window, it shows the date on which the preview was built. If that is earlier than I talked about the change (or made the commits on GitHub) then it won’t include them.

Hi James,

the exact same midi note/channel that gets sent in the activation expression, needs to be sent again in the deactivation expression. I tried copying the code snippet to the deactivation expression myself, but I got an error.

If Source Audio can weigh in on the math of the positioning, I think we're getting pretty close to finishing this and I can get started programming all my tracks! Really excited!!!

I see. I can do that after dinner. But would it be possible to use a different note, or perhaps to send the same note with velocity 127 to mean enable, and with velocity 0 (as a note off) to mean disable? It just seems much safer if we could use a distinct message to mean “enable” versus “disable”—that way if anything ever does get missed or duplicated, tracks do not end up stuck in the wrong state. Can Live be configured to work like that?

And I agree, it does seem like we are getting very close!

Hi James,

I don't think it is possible to map 2 midi notes to 1 parameter in Ableton. I just googled it, and found something that it could be possible through a workaround in M4L, but i'll have to read up on that. If that could work, I would opt to send the same midi note, but perhaps to a different channel.

I just tested mapping a note with velocity 0 to a button, and that worked, so maybe it's easiest to go with that (for now).

Well, as I have run into a very complicated snag at work, I may be stuck dealing with that all night, so before I go home to cook and eat, I am sending you a new version that for now sends the same note during deactivation. If you can point me at the documentation or articles you found while googling about what parameter you are mapping, I may be able to help think about a better way to do it.

In order to be able to do this, we have to save a copy of the note and channel that the activation expression used into the locals, because the deactivation expression is not necessarily going to be able to look them up any more. If the trigger deactivated because an unrecognized track started playing (or even a different recognized track has started playing), then trying to look the track up in your track map will not give you the right results any more. There is no alternative but to stash them in a place where we know we can find them later, which is what I have done.

Also remember that not only does the Deactivation expression need to do this, but the Tracked Update expression needs to in case a crossfade has taken place without the trigger deactivating.
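The idea of stashing the note and channel at activation time can be illustrated like this. It is a sketch in Python rather than the Clojure of the real expressions; the track map entry, the `send` callback and the function names are hypothetical.

```python
# Hypothetical track map: (artist, title, album) -> (note, channel)
TRACK_MAP = {
    ("Some Artist", "Some Title", "Some Album"): (60, 5),
}


def on_activation(trigger_locals, track_key, send):
    """Look up the track, send its note, and remember what was sent."""
    entry = TRACK_MAP.get(track_key)
    if entry is None:
        return
    note, channel = entry
    trigger_locals["note"] = note
    trigger_locals["channel"] = channel
    send(note, channel)


def on_deactivation(trigger_locals, send):
    """Resend the remembered note without looking at the current track."""
    note = trigger_locals.pop("note", None)
    channel = trigger_locals.pop("channel", None)
    if note is not None:
        send(note, channel)


sent = []
trigger_locals = {}
on_activation(trigger_locals, ("Some Artist", "Some Title", "Some Album"),
              lambda n, c: sent.append((n, c)))
on_deactivation(trigger_locals, lambda n, c: sent.append((n, c)))
print(sent)  # [(60, 5), (60, 5)]
```

Because the deactivation side only reads the stashed values, it still sends the right note when a different track is already loaded.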

This stuff has become complex enough that I would not expect you to be able to write it yourself unless you decide to dive into becoming a Clojure programmer, so feel free to send tweak requests to me. But I am trying to add explanatory text to the code in case you are curious to see how I have done it.

Unfortunately I can’t test this in any way because my players are at home… hopefully I didn’t make any ghastly mistakes.

And as I feared, I had no time to work further on this tonight. If we are still having issues this weekend it will be worth having you send me your Ableton file and a few tracks so I can reproduce the full configuration here and iterate quickly on solutions.

Here is the math to calculate time based on position in ms and pitch as %.

(60000. / 120.) / pitch = length of 1 tick in ms

Ableton accepts position in raw ticks. 1 tick is 1 quarter note.

At speed 100 % (1.0, or 120 BPM) that is 500 ms.

If Song position is 10000 ms, that is :

10000 / 500 = 20 ticks

----------

If speed is 1.03 or 103 %, then the tempo is 123.6 BPM

60000 / 123.6 = 485.436893203883495 ms per tick

Position 10000 / 485.436893203883495 = 20.6 ticks

I tested that by sending position in ms to Live, and the timeline always

aligned to the sent time.

-----------

That is in theory correct, but what I don't know is

how Loop markers in CD Players are set, do they have absolute time set ?

Based on Track's own BPM/ Bar Beat position ?

Are they hardcoded, or they maybe get sent depending on CD Players

own realtime calculation depending on time/speed factor ?

I have asked that question, like many others in the past, without getting an answer.

It seems now it is time to clarify that.

---------------

So simply - what is being sent from BLT if position in Loop Marker

is set at 8000 ms, and speed of playback started with 1.02 ?

Original 8000 ms , or 8000 /1.02 ?

In later case, we should calculate ticks using only 120 BPM

---------

And there is a display of received OSC messages in the BLT-Live.amxd;

it displays the last received message.

Hi James, Here is the link to the wetransfer zip file with a simplified version of my Ableton project: https://we.tl/OSZjWNVYeh

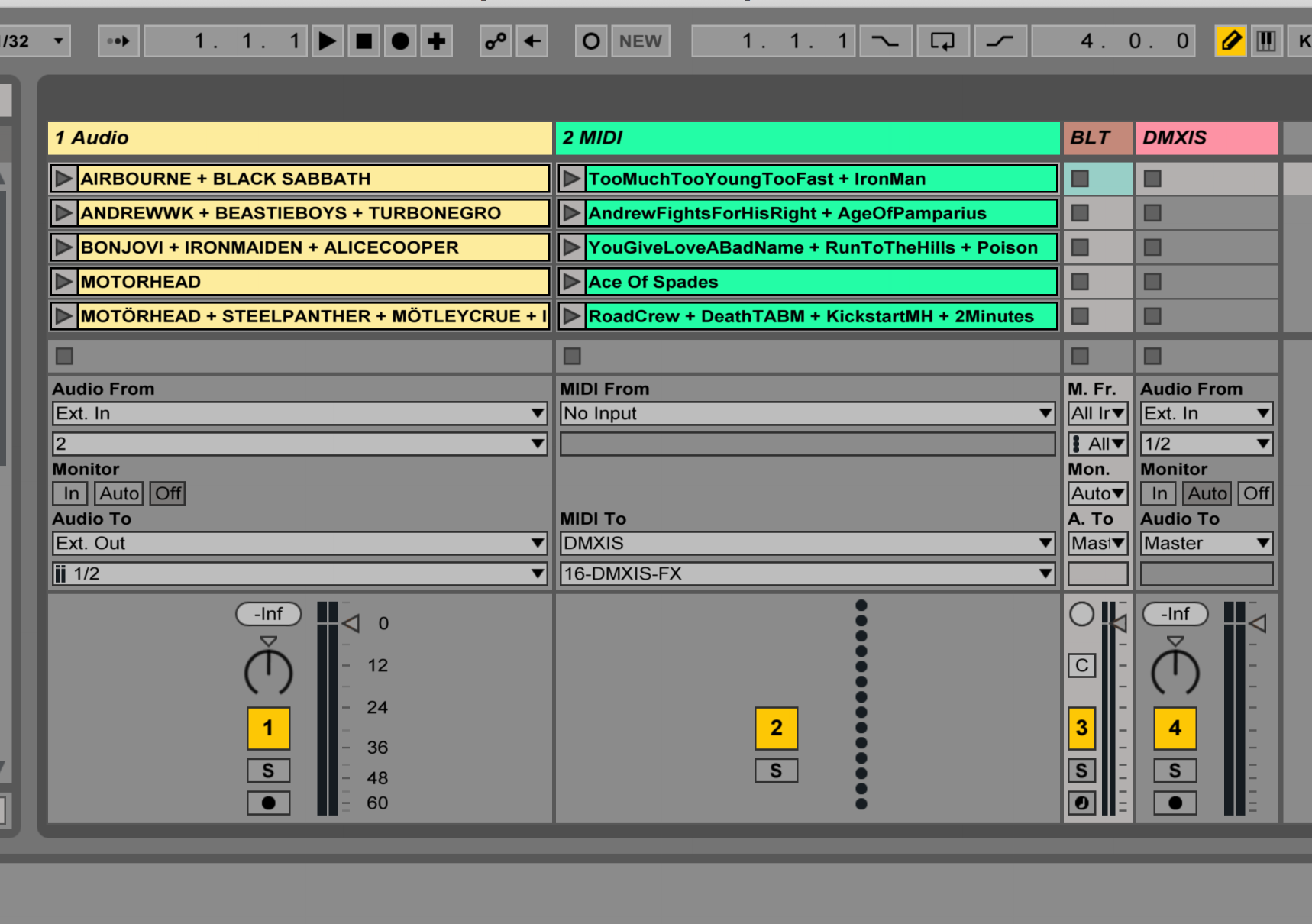

This project has 4 songs (4 audio + midi tracks).

The audio tracks can be found in the /Samples/Imported directory. These tracks need to be added to Rekordbox and exported to USB to go into the CDJ.

(FYI: There is a slight chance you will get an error when opening the project, because of the DMXis plugin which is included, which connects to my USB-to-DMX box. If so, the whole Dmxis track can be deleted without a problem.)

So when a track is played on the Master deck, a midi note is sent from BLT to Ableton, to activate both the audio and midi track of the song. When the song is paused or has ended, the midi note is sent again to Ableton to deactivate the tracks again.

Source Audio, thank you very much for your explanation of the time calculation! I think what needs to be addressed is how BLT can make sure that things stay in sync when the playing track on the CDJ is being manipulated manually (pitch adjust, fast forward, looping, ...).

I think if a continuous position check is being executed, eventually Ableton will always get back in sync anyway. If the time calculation math gets completely right, I think all those scenarios are covered at once.

Source Audio, I think I understand what is wrong! As I was half-asleep last night I remembered you talking about adjusting your time calculations based on speed, and you should not be doing that. The time values reported by BLT are absolute positions into the track and they are not affected by BPM or playback speed. In other words, a time of 1000ms into the track is the point that playback reaches after 1000ms when the track is played at normal speed. So if you are playing the track at double speed, you will reach that point in 500ms, but BLT will still report that you have reached 1000ms into the track. So when you are positioning the Ableton track, you should ignore the speed when you are translating time values to position values, because the same points in the audio track are always reported with the same time values, regardless of how fast the track is playing.

It is also the case that cue and loop points in the track are absolute times in the track, so they are unaffected by playback speed. If a cue or loop jumps to 20000ms, it is always to 20000ms, no matter the pitch.
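With absolute track times, the conversion no longer depends on pitch. A minimal sketch in Python, assuming (as in Source Audio's description) that a tick is one quarter note and that the Live set runs at 120 BPM at 100 % speed; the names are made up for the example.

```python
REFERENCE_BPM = 120.0
MS_PER_TICK = 60000.0 / REFERENCE_BPM  # 500 ms per quarter note


def ms_to_ticks(time_ms):
    """Convert an absolute track time in ms to a Live position in ticks.

    The playback speed is deliberately not a parameter: the same point in
    the track always maps to the same tick.
    """
    return time_ms / MS_PER_TICK


print(ms_to_ticks(10000))  # 20.0
print(ms_to_ticks(8000))   # 16.0
```

The speed would then only be needed to set Live's tempo, not to compute the position.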

James, your remarks feel like a bit of a breakthrough and they make perfect sense to me! I feel we’re on the right track!

Now that clears it all up, so calculations will always be in Tempo 120.

I will upload a modified BLT-Live version in a few minutes.

Will test both the updated BLT triggers and the AMXD the minute I get home from work!!

This does indeed sound like a breakthrough! And thank you for sending the Ableton project, Johnny, that will come in handy if we need to do some fine-tuning this weekend. Also, I am familiar with the DMXis plugin: that was the first DMX interface I bought, and my first attempts at synchronizing lights to music were in Ableton using that very plugin. I ended up finding it not flexible enough, and that was why I wrote my own afterglow lighting control software, which led to my research into how to synchronize with CDJs, which led to Beat Link and Beat Link Trigger. So in a way we have come full circle. ^_^

Hey guys,

OK, I've been testing this new update and I've got to say: THIS IS AWESOME !!!!!

It works really, really well, almost perfectly, and I'm super happy about it!!

I've played around with a couple of tracks and here are my remarks:

WHAT WORKS

- Sending the MIDI notes to (de)activate the Ableton tracks works perfectly. Not a single time did it go wrong.

- Playing songs at pitch 100% works pretty much flawlessly. Ableton stays in sync for the whole song. Crossfading tracks works perfectly. Fast forwarding songs keeps the sync on track. This is exactly how I want it to be, and it is. Awesome!

- Looping works perfectly, and the sync stays on track.

WHAT NEEDS MORE ATTENTION

- There's still a problem with the math of the pitch. Whenever I play a track with the pitch above or below 100% (I tested with the pitch set to 106%), the sync tends to drift off. My light cues then tend to fire a couple of seconds too early.

A really strange behaviour, however, is that when I put the pitch back to 100% (while still playing the track), the sync is almost immediately back on track. So in short: as long as my pitch stays at 100%, all is well, but whenever I change it, it runs out of sync. And since I prefer to perform my tracks at pitch 103%, this is a bit of a problem...

- I kept an eye on the logs while testing tracks, and from time to time a weird "WARN" message was logged, which I wasn't able to reproduce consistently, and which appeared at seemingly random times while I wasn't even touching the deck. I'm not 100% sure if these messages occurred with the pitch at 100% or 106%.

Does this mean anything to you? It's got me completely puzzled...

----

2018-Apr-18 21:47:07 INFO [beat-link-trigger.triggers:321] - Reporting activation: Custom 127 on channel 5

2018-Apr-18 21:47:07 INFO [beat-link-trigger.expressions:5] - TRACK STARTED: 1334 - Airbourne + Black Sabbath - TooMuchTooYoungTooFast + IronMan

2018-Apr-18 21:47:46 WARN [org.deepsymmetry.beatlink.data.TimeFinder:443] - Received beat number 511 from XDJ-700 2, but beat grid only goes up to beat 171. Packet: CdjStatus[device:2, name:XDJ-700, address:169.254.143.216, timestamp:50743222981569, busy? true, pitch:+0.00%, rekordboxId:1314, from player:2, in slot:USB_SLOT, track type:REKORDBOX, track:4, track BPM:151.0, effective BPM:151.0, beat:511, beatWithinBar:0, isBeatWithinBarMeaningful? false, cue: --.-, Playing? false, Master? false, Synced? false, On-Air? false]

----

I attached the logfile to this message. You can ignore the stack trace at the end of the logfile. That happened when I unplugged my laptop from the network hub.

Those warnings are not anything to worry about. They reflect strange behavior by the players when they are looping at the end of a track, but they do not cause any problems for BLT.

Can you be more specific about what you mean when you say the sync drifts off? Does it fix itself once per measure when the Beat Expression sends the latest /time command? Or does it just get worse and worse over time? If the former, perhaps the speed calculations are not right… If the latter, are you using the latest BLT-Live_V101.amxd with corrected time calculation? Can we see whether my Beat Expression is working and sending new /time commands once per measure? Being excruciatingly precise about the way in which sync fails under the different scenarios (pitch faster or slower than 100%) will be key to understanding what is going on. Testing with radically faster or slower pitches to see what happens might be interesting. But BLT simply translates beat numbers to times based on the track’s beat grid and passes them on, so there is no way for it to gradually drift out of sync; there must be something else happening.

Oh, and I should say that I am delighted to hear about the things that are working the way you want them to. ^_^

Well this is a bit stupid...

At the beginning of my test session I removed the original AMXD and replaced it with what I thought was the newer version. Turns out I put the old version back in. My bad!!

So yeah, I added the new AMXD and I can only say: This is it! THIS IS IT !!!! This is really running how I want it to run, and I've got a feeling that it's running pretty stable as well.

I'm gonna test this some more and try to find some bugs, but I've got a gut feeling that we are pretty much READY !!!

I can't believe how grateful I am for the help the both of you have provided so far with this project, and I'm SO looking forward to taking this beast on the road !!! I really don't think you guys have an idea of what you have achieved here. Never have I seen a sync between CDJs and Ableton in this way! I know some other projects exist online to sync tempos between CDJ and Ableton, but never have I seen a position sync. This is huge !!!

Thank you !!!!!!

Johnny, that is absolutely wonderful news, thanks for letting us know! What is the name under which you perform, and what are some of the festivals you’ll be at? I will try to see if I can find any live streams. :D

I still may want to spend a little time this weekend researching if we can use a slightly different approach to enabling/disabling tracks in Ableton, which uses distinct messages for each operation rather than a toggle, because even though it seems to be working fine so far, long experience with integrations tells me that is a slightly safer approach in the long run. But it is by no means critical. I also want to learn a bit more about the things one can do with .amxd files now that Source Audio has demonstrated this brilliant approach, the possibilities intrigue me. And once we settle on the final approach I definitely want to write it up in the User Guide.

Good news !

I would suggest You trash all amxd versions except the latest one,

version 1.01; the version number is visible in the plugin GUI.

And now good luck with performances !

Hi all,

Yes, I'm super excited about where we are right now. I'm going to be testing this a lot more in the coming days, and perhaps some small hiccups might show up, but as it stands right now I feel confident enough to take this to a live environment.

Yes James, you are making a valid point about the enabling/disabling tracks. I myself would also love a different approach to this process, but right now I'm not sure how. I know for a fact that one button in Ableton can't be mapped to 2 different notes. I would definitely prefer a scenario where we could send a separate message to enable and disable tracks, which will always behave the same way (say the disable message is sent twice by accident, I want the track still to be disabled then, where in the current setup, the track would first get disabled, and on the second message would get enabled again).
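
The difference between the two styles can be shown with a tiny model. This is only an illustration of toggle versus explicit messages, with a made-up `TrackActivator` class; it does not reflect how Ableton maps MIDI notes internally.

```python
class TrackActivator:
    """Hypothetical stand-in for one Ableton track activator button."""

    def __init__(self, enabled: bool = False) -> None:
        self.enabled = enabled

    def toggle(self) -> None:
        # Current setup: the same note flips the state every time it arrives.
        self.enabled = not self.enabled

    def set_enabled(self, value: bool) -> None:
        # Preferred setup: distinct enable/disable messages, safe to repeat.
        self.enabled = value


toggled = TrackActivator(enabled=True)
toggled.toggle()
toggled.toggle()            # accidental duplicate re-enables the track

explicit = TrackActivator(enabled=True)
explicit.set_enabled(False)
explicit.set_enabled(False)  # duplicate is harmless, track stays disabled
```

The explicit version is idempotent: sending the same message twice leaves the track in the same state as sending it once.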

I'm also curious if the current AMXD could be packaged in a standalone app, so users who don't have a Max4Live license can use this.

I'm currently also thinking about the possibility to have the SMPTE generation and Resolume run on separate computers (MacBook Pros). I know linking them with a mini-jack cable is an option, but I'm curious if another solution exists. Please note: this is a scenario I want to use at a bigger festival where I'm bringing an extra VJ to handle the Resolume part, and where WiFi is unavailable.

The name of my 'act' is "Goe Vur In Den Otto" which loosely translates from Dutch to "Music that's good for listening in a car". Kind of a tongue-in-cheek name. We are 2 people, I'm the DJ and my friend is the MC. We've been around for about 5 years and used to do a radio show on one of Belgium's biggest radio stations. We mostly play popular (hard)rock music. The biggest festivals we are playing this year are Graspop Metal Meeting (which is the one I referred to above for the SMPTE), Lokerse Feesten (that's the picture I showed you on Gitter), Rock Werchter (Belgium's biggest festival), ... You can definitely find some funny videos from us on YouTube. I'm the guy with the beard :-)

So with the Ableton sync working as it basically should, I've got a couple new questions:

- Pure hypothetical: How much work would it be to convert the latest AMXD into a standalone app that generates MTC, so Ableton can slave to it with the same quality of results that we are currently realizing with these last updates?

- And while we're at it, how much work would it be to combine the SMPTE generating app and the latest AMXD into 1 single app that does it all? The MTC app needs to have the exact same behaviour as the current AMXD (sync to master CDJ deck), and the SMPTE app needs to output a stereo signal where the left channel is deck 1 and the right channel is deck 2 (similar to the already existing app James made, of which I have learned that this is merely a proof of concept and not really)

I'm thinking about what would help the most people that want to get into this, and I think that is a standalone that can output MTC and/or SMPTE (simultaneously) without the need of a Max4Live license.

Johnny, that looks like a fun show indeed, I will have to watch for it! ^_^

Could you please point me at the Ableton documentation which describes the mechanism you are using to enable/disable tracks, so I can see if there seems to be a way to use it in a non-toggle fashion? Or even just tell me the name of the parameter you are mapping? But please give as much detail as you can, because I only dabble in Ableton, I am not a regular user let alone an expert.

Your question about making the AMXD a separate app doesn’t really make sense. The whole point of AMXD is that it is something which runs inside of Live and thus can do cool, tight, and deep integrations like Source Audio achieved. It was a brilliant idea, and cannot be done any other way. It would be possible for someone to build a standalone application that sends MTC, and for someone to build a real standalone application that sends SMPTE (once again I must remind you that mine is only a proof of concept, not a seriously engineered, efficient, supportable product). However, MTC does not sync speed, it only syncs location. You need a separate channel to sync tempo, and it would probably need to be MIDI clock, which has a great many disadvantages: it is an ancient protocol, poorly designed, and not suited for general-purpose computing hardware. Ableton Link is much better, but I do not know if you can combine Link and MTC. So the AMXD is going to give you much better and more reliable sync than jury-rigged combinations like that. Also, I thought that starting with Live 10, Max for Live is bundled, and nobody needs a separate license for it any longer?

I would also caution you against using WiFi in a performance context. It’s not reliable, it suffers too much packet loss. BLT cannot reliably track CDJ state over WiFi, and other protocols have problems as well, even before you get into the risks of interference from hardware installed in the venue and brought by audience members. You should always run quality shielded physical high-speed network cables with good network switches between your performance components.

As to how much work it would be to build those standalone applications, the only people who would be able to answer that would be developers who were interested in building them, and who spent enough time studying the problem to give you a solid answer. Unfortunately, those things are too many steps removed from my own interests and goals for me to undertake the tasks. Even Beat Link Trigger is a side-project of a side-project of a hobby, which I created as a favor for someone who was interested in the things I had been discovering about CDJs for use in my open-source lighting software, and released to the world as a gift.

The thing is - amxd is amxd, which means it talks to Live using the Live API from a max4Live device.

I am not a Live user , so my interest in that is fairly small,

but from what I understand, Live does not have native support for OSC,

but can use remote scripts, or whatever they are called, a sort of Python wrapper

for the Live API. I am sure people here on the forum have used that in the past.

-----------

But You are always confusing OSC messages to Live with MTC.

MTC is something totally different, I explained it many times,

You even have Standalone generating MTC.

(by the way You never reported how it worked)

The problem I had using smpte~ external together with MTC generation

could maybe be solved; I gave it up because I was sure that directly

controlling Live was a better way, than generating MTC.

But if You want I could go on with the idea.

2 SMPTE streams + MTC (and i mean MTC)

--------

On the other hand, generating 2 SMPTE streams could also fit

into BLT-Live.amxd.

Yes, directly controlling Live is much better than MTC. We are in violent agreement about that. :)

Hi all,

let me respond to the past messages here chronologically:

- James, about MIDI mapping, I'm not sure if your question meant for me to explain how MIDI mapping works in Ableton? If it was, here's a small video on YouTube which explains it real fast. The MIDI mapping part starts around 4:40.

https://www.youtube.com/watch?v=eStv6TsX95M

So how my tracks are mapped in Ableton is fairly simple. I have a MIDI note mapped to the track activator buttons for all my tracks. The track activators are all the yellow rectangles in this picture http://www.subdivizion.com/wp-content/uploads/2012/12/Re-Enable-Automation-.png

If they are yellow, this means the track is active and audio running on this track will be sent out to the master. If you click the yellow rectangle once, it becomes grey, which means the track is deactivated. By default all my audio & MIDI tracks are deactivated (= grey). When a recognized track in BLT starts playing on the master deck, a MIDI note is sent to Ableton to activate the correct tracks. Once the song is paused or has ended, the same MIDI note is sent again, and deactivates the track again. Usually, at the same time another track (the newly started song on the CDJs) becomes activated, and so on...

About my AMXD question: Of course I understand that the AMXD (which I love!) is more efficient than a standalone app. That being said, I was thinking that different users might want to do different things with syncing CDJs. In my case, I want to sync it with DMXis light cues in Ableton, but I can very much imagine that other users for instance want to run a SMPTE signal into a lighting desk (like the GrandMA2) to program a big show. Therefore I figured that a standalone app (like your SMPTE daemon) would be interesting to fit both my needs with Ableton, and other people's needs to use it for other things as well.

Since we've established a very smooth running sync in Ableton through OSC messages to feed the AMXD, I was only wondering if the same logic could be used to generate both SMPTE and MTC on the fly. I even think that for an app like that people would pay money to be able to use it (I know I would).

Now, I know the both of you aren't fans of this idea, and I respect that; that's why I clearly stated it was a purely hypothetical question. I only wanted an idea from you guys of how much work you estimate would go into creating said app, based on the current situation.

I'm very much aware of the fact that OSC and SMPTE/MTC are completely different things, I just really would like to know if it is possible to generate SMPTE & MTC, based on the OSC messages that are currently being sent by BLT. Really curious about that.

Yes WIFI is absolutely not an option, never, nowhere. I'm currently looking around for ideas on how to feed SMPTE from one computer to another, and there are some possibilities. I just have to dive into it some more to fully understand how things could work.

Another idea that I had was to connect 2 laptops to the CDJ network hub (in a 2 deck setup) running the same BLT triggers. I don't have 2 laptops handy right now so I can't test it, but do you think that could work?

About the MTC app you created, Source Audio: I played around with it quite a bit, but I think we both gave up on it (to sync CDJs with Ableton) in favour of OSC and AMXD. I remember back then that we hadn't established a stable sync yet, since the math of the positioning wasn't yet how it needed to be (and how it is right now). And yes, I would still definitely be interested in an updated version of your MTC app, to a version which generates MTC from the master track, and a dual SMPTE signal from the 2 separate decks, with MIDI routing options for the MTC, and audio routing options for the SMPTE. Ideally,

By the way, I've been playing around with the current setup some more, and honestly, I can't describe how happy I am with what you guys have achieved getting this to work. Thank you guys so much !!

By the way, Source Audio: I think I remember you once saying you created some sort of OSC simulator to test the AMXD with. Is this perhaps something you would be willing to share?

I'd very much be interested in using it so I can test my Ableton tracks without having to connect my laptop to the CDJ hub. I'm more comfortable with programming my light cues in Ableton while I'm lying on the couch with my laptop on my belly, than I am standing behind my DJ setup in the basement :-)

Thanks for clarifying that! I am sorry if I sounded terse, I am busily preparing for a major client demo tomorrow morning, so I was a bit rushed. What you say makes sense, and I definitely understand why people might want to use MTC. And yes, I think that if the same time interpretation fix was done with the MTC application as was done with the AMXD, it will work just fine for that. I might also someday add native MTC support right into BLT, since I can generate it from Java. I just have not had time yet. I personally will probably never write an SMPTE generator because you need to do that in C, and my C skills are rusty enough that I am not the best person to tackle it. However, though I protest a lot about my SMPTE daemon being a proof of concept, Max is pretty stable, and if you test it enough to be happy with it, it might be a good solution.

I will take a look at that mapping video when I have some time (after this demo!) and see if it explains a way of mapping in a non-toggle mode. Thanks!

I would not mind doing these 2 things for You:

1.- create max standalone to send start, stop, position and speed to UDP port

(that is what I used to test BLT-Live.amxd, I have no CD Players)

If You need that app to also send midi messages to control tracks, then You have to give me the details.

Maybe You also want to have an audio Player inside that will output its current

position in ms?

But I have no way to simulate CD Players, I don't know anything about latency of messages.

To make it short - tell me exactly what to send via UDP to Live.

2.- Standalone app that would output MTC and 2 SMPTE streams ...

If I had source files of SMPTE external, or even at least help or reference file,

maybe I could better get around the MIDI stuck problem in MTC generation.

Again here - I need exact messages to each of the 3 outputs:

UDP port, /left/... /right/.... /master/....

I am not interested to create or sell any commercial app, in first place because of

smpte~ external, but also because I don't want to maintain, offer any support

to eventual users etc.

----------

MTC app I made for You had NO MATH in positioning.

Because position was taken directly from phasor and sent to Live.

BUT MTC is slow, because a complete time value only gets sent every second frame,

and at 25 FPS that makes a (1000 ms / 25) * 2 = 80 ms update interval.

So if Live did not sync correctly to BLT, that has to do with MTC

and there is nothing I can do to make it better.
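
The arithmetic above, written out as a small sketch. It assumes the usual MTC scheme in which a complete time value is spread over eight quarter-frame messages, four per frame, so a full time arrives once every two frames; the function name is made up.

```python
def mtc_update_interval_ms(fps: float) -> float:
    """Time between complete MTC time values when using quarter-frame messages."""
    frame_ms = 1000.0 / fps      # duration of one timecode frame
    frames_per_full_time = 2     # eight quarter frames, four sent per frame
    return frame_ms * frames_per_full_time


for fps in (24, 25, 30):
    print(fps, "fps ->", round(mtc_update_interval_ms(fps), 2), "ms")
```

At 25 fps this gives the 80 ms mentioned above; a higher frame rate shortens the interval but cannot remove it.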

Hey guys,

I've been giving the whole (OSC or) MTC and SMPTE integration, as well as the safer way of MIDI mapping, some more thought, and I think I've got a better idea now about what would be ideal. It's become very clear that dual SMPTE can't work in Ableton, for the simple reason that Ableton only supports 1 stream of transport, and dual SMPTE means 2 streams of timecode that run independently of each other. You simply can't input 2 timecodes (or AMXD syncs) into Ableton.

For a single SMPTE stream on the other hand, there is no problem at all, and it can perfectly be implemented in Ableton as it is right now with no updates necessary to triggers and AMXD. Simply use the ClipSMPTE plugin or have a SMPTE audio file on a separate track that is routed to a different audio output, and you're done. I played around with ClipSMPTE for a little bit, and perhaps I was doing something wrong, but the timecode generated wasn't always correct.

Concerning the MIDI mapping, there is a rather simple solution that I overlooked which makes so much more sense, and that is to put my audio/MIDI tracks in Ableton not in Arrangement view, but in Session view. In that view I can map the scene launch button of a scene (= a horizontal row in Ableton) to one note, and have the deactivation expression in BLT fire a different note which is mapped to the "stop all clips" button. For both buttons, you can press them as many times as you want, they will always yield the same result (pressing the 'launch clip' button twice will not result in the clip starting and then stopping again, it will just keep on playing). Actually it makes so much more sense to move all my clips into Session view, on so many levels. It results in a visually much clearer overview, it's much easier to manually switch from one scene to another by the simple click of a button, it's DEFINITELY the way to go. There's only a small update required to the trigger, which is that the deactivation note only needs to be sent when the MASTER deck has stopped (but definitely not when a track comes to its natural end, when that track is not on the master deck anymore, like it is right now). Does that require much code rewriting? I would assume not (but correct me if I'm wrong)

About the standalone app, here's how I visualize it: Check out the dual SMPTE app that James created here, which also shows the unlocked patcher mode of the app: https://github.com/brunchboy/beat-link-trigger/blob/master/doc/README.adoc#generating-two-smpte-streams The standalone app can look quite exactly the same as the 'SMPTE dual daemon' that James made, with the addition of the MTC part. The MTC as well as the 2 SMPTE streams can all be set to 25 fps by default. James' app responds to /left/enable, /left/speed and /left/time (and for right the same thing), but feel free to use whatever you think is best.

I would prefer an offset function for both MTC and SMPTE separately (same offset for both SMPTE signals is fine). By the way, if track positioning is updated every 80ms, that is not really a problem for me. I can live with that. The SMPTE app listens to port 17001 (but you can change it to whatever you want), and MTC should listen to 17002 (like the AMXD currently does). I would also like the possibility to choose which MIDI device the MTC is sent to, as well as which audio device the SMPTE is routed to. In my case, the MTC will be routed to IAC Bus Driver 1, and SMPTE to Soundflower (2 channel version). Output of the position in ms in the app is definitely a very good idea and can be quite big (I'd love something like this: https://static1.squarespace.com/static/55f05c0ce4b03bbf99b13c15/560bf68fe4b0c1fb180beb33/560bf690e4b00a287e5ecef8/1443624616217/tcds46.png). The app doesn't need to send MIDI messages to control tracks, I prefer to keep this in BLT.

If you need any more info, I'll be happy to provide it to the best of my abilities! Thank you!!!
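
For anyone who wants to feed such an app without CDJs, here is a rough sketch of sending one OSC message over UDP using only the Python standard library. The address /left/time and port 17001 come from the description above; sending the value as a single float argument is an assumption, since the argument types the app expects are not stated here, and the helper names are made up.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate an OSC string and pad it to a multiple of 4 bytes."""
    padded_len = (len(data) // 4 + 1) * 4
    return data.ljust(padded_len, b"\x00")


def osc_float_message(address: str, value: float) -> bytes:
    """Encode an OSC message with one float32 argument (assumed argument type)."""
    return osc_pad(address.encode("ascii")) + osc_pad(b",f") + struct.pack(">f", value)


def send_osc(message: bytes, host: str = "127.0.0.1", port: int = 17001) -> None:
    """Send an encoded OSC message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


# Example (not executed here): send_osc(osc_float_message("/left/time", 1000.0))
```

The same helper would cover /left/enable, /left/speed and the /right/... variants by changing the address and the port.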

No problem, I can extend the SMPTE + MTC app with 2 channels.

But I must clearly say that if You use BLT-Live.amxd and just need 2 SMPTE streams

for resolume, DON'T use app that can send MTC !

I only have smpte~ external extracted from the SMPTE.app James made,

I have no help file or source code.

That would be helpful to troubleshoot relation between smpte~ external and MTC .

If You have any of that, please upload it.

------

And Live can well send 2 SMPTE Audio Streams out, independent of

own transport or position.

See - max4Live plugin can do almost all that Max Standalone can,

so it should be no problem to have max4Live device

that would send SMPTE out, in same way as the SMPTE.app itself does.

Only Audio driver and output channels might be more flexible if

one uses Standalone app.

-------

if I make that MTC App for You I will include sending

of full frame messages. I don't care if Live responds well to it or not.

MTC is meant to sync other apps, for Live we have a better solution.

Full Frame messages get sent when Master Transport stops,

or jumps to different location, but not if playback continues.
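
For reference, a small sketch of how a Full Frame message could be assembled as raw bytes. It follows the standard MTC SysEx layout (F0 7F device 01 01 hh mm ss ff F7, with the frame-rate code in the upper bits of the hours byte) and uses device ID 7F for "all devices"; the function name and the RATE_CODES table are made up for illustration.

```python
# Frame-rate codes carried in bits 5-6 of the hours byte.
RATE_CODES = {24: 0, 25: 1, 29.97: 2, 30: 3}


def mtc_full_frame(hours: int, minutes: int, seconds: int, frames: int, fps=25) -> bytes:
    """Build an MTC Full Frame SysEx message for the given timecode."""
    if not (0 <= hours < 24 and 0 <= minutes < 60 and 0 <= seconds < 60):
        raise ValueError("timecode out of range")
    if not 0 <= frames < 30:
        raise ValueError("frame number out of range")
    rate = RATE_CODES[fps]
    return bytes([
        0xF0, 0x7F, 0x7F,        # SysEx start, universal real-time, all devices
        0x01, 0x01,              # MTC, Full Frame
        (rate << 5) | hours,     # rate code plus hours
        minutes, seconds, frames,
        0xF7,                    # SysEx end
    ])
```

A message like this carries the whole position at once, which is why it suits stops and jumps, while running playback relies on the quarter-frame stream.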

And that display HH:MM:SS:FF - I need size in pixels

that You want on the screen.

Also, is there a preferred position on the screen where the standalone should open?

Bottom - Left ? Or Top Left ?

That's understood: I will definitely not be using the SMPTE+MTC app together with the AMXD. About the help file / source code, is this something you could provide, James? I don't have it myself.

The problem with the dual SMPTE in session view in Ableton is, that each SONG resembles a horizontal line (= scene) in Ableton, and you can only play 1 scene at a time. Since the dual SMPTE takes its info from 2 SONGS, I believe it requires for Ableton to play those 2 songs also simultaneously (but at different positions), so I don't think it would work the way it needs to work.

Therefore I think keeping the SMPTE in a standalone app is the better approach. But if you can prove me wrong, I'll be all ears for it!

Perhaps adding a checkbox to both the MTC side and SMPTE side to enable/disable the whole function is a good idea? They should both be checked (=enabled) by default.

Can you position it top right?

SMPTE generation from Live would not be dependent on Song, Transport, or anything else.

If track where the plug is inserted is active, it would just receive values from BLT

and create smpte, I am wondering why is that so difficult to understand.

---

I will position dual SMPTE+MTC on top - right.

What do You want to see in that big HH:MM:SS:FF Display ?

You have 3 sync timers going out ?

----------

Each SMPTE and MTC should have own selector for UDP port,

Selection of at least 25 and 30 fps, On/Off Switch, Offset in ms

and in addition MTC should have MIDI out selector.

Audio Outputs are handled by audio setup.

Why all this - well You say You want to give others option to use it,

so maybe some unit needs 30 fps... or someone wants different UDP ports

I would only set default values to 25 FPS, UDP ports to

17001 for both SMPTE and 17002 for MTC as You wish.

Offset = 0, all 3 sync streams Off.

All values will be stored in simple text document.

Does SMPTE need an output attenuator in dB?
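
As a rough illustration of the defaults listed above and of keeping them in a simple text document, here is a sketch with made-up key names and a made-up "key value" line format; the real app may store things quite differently.

```python
# Defaults taken from the list above; key names are hypothetical.
DEFAULTS = {
    "smpte_left_port": 17001,
    "smpte_right_port": 17001,
    "mtc_port": 17002,
    "fps": 25,
    "offset_ms": 0,
    "smpte_left_on": 0,   # 0 = stream off, 1 = stream on
    "smpte_right_on": 0,
    "mtc_on": 0,
}


def dump_settings(settings: dict) -> str:
    """Serialize settings as one 'key value' pair per line."""
    return "\n".join(f"{key} {value}" for key, value in settings.items())


def load_settings(text: str) -> dict:
    """Parse 'key value' lines; unknown keys are ignored, missing keys keep defaults."""
    settings = dict(DEFAULTS)
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0] in DEFAULTS:
            settings[parts[0]] = int(parts[1])
    return settings
```

Starting from a copy of the defaults means an empty or partial file still yields a usable configuration.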

Wow, there is a lot to respond to here, and most will have to wait until after my client demonstration, but to begin with I want to agree with Source Audio about the impracticality of trying to sell something based on these pieces: the commitment to support and maintenance would be utterly impractical, and I already make plenty of money from software in my primary employment, this stuff is done for fun and love. And looking back, if I had been billing anything like standard consulting rates for all the time I put into researching the DJ Link protocol, how to access the databases on the players, building the Beat Link library, and then building and helping people integrate with Beat Link Trigger, it would have exceeded a million dollars some time ago, and nobody in this market would be able to afford that kind of cost, even shared among the people who really want this stuff.

But even more fundamentally, I don’t think it would be ethical for me to try to sell anything in this space (despite the existence of other people who are), because there is absolutely no guarantee that Pioneer will not release a firmware update tomorrow that breaks everything permanently.

Source Audio, I did talk some with the author of the smpte~ external, and he is not able to offer any support or enhancement of it. I was hoping that he could at least port it to Windows so people on that platform could use my standalone Max app. He also doesn’t have time to clean up the source code for public release, although I may have a copy of it if there are specific questions you have. I can also try and see if he will share the source with you.

More later, including thoughts about the Session View approach, which does sound promising.

No problem about source code, I hoped it could help deal with the external better.

But now to the Windows version of Your SMPTE app.

If one would just create SMPTE file with 25 and 30 FPS, and include it

into standalone, let sfplay play it in needed speed, that would do.

A mono file of 10 minutes would be long enough I think for most DJ-ing people.

I am replacing the phasor~-based counter for SMPTE and MTC generation with a simple metro

and counter combo. Easier to deal with.

My understanding is that you cannot simply slow down the SMPTE audio, you need to play it at the correct speed, but skip or repeat frames as necessary to track the adjusted time rate, which would not allow that simple audio file approach.

OK Source Audio, I'm sorry, I wasn't aware the AMXD was capable of doing that. I'd be happy to test that.

About the standalone version: You can forget about the checkbox idea I suggested. If the BLT trigger for the SMPTE is disabled, the SMPTE part in the standalone app won't be fed anyway.

About the clock display: I think showing the master deck makes the most sense (and if you insist, adding also the separate decks as well, but smaller, is also ok for me, but not a must)

Yes, there are 3 syncs going on: 1 for the master deck (for Ableton), and 2 for the SMPTE (for Resolume). Yes indeed, each SMPTE and MTC get their own UDP port selector, and at least 25 and 30fps as selectable options (with 25fps selected as default). Offset = 0 by default, and streams off.

James, I could be completely wrong, but I remember reading in an article once that when SMPTE timecode is played on the CDJs as an audio track going straight into the laptop to feed Resolume (which was pretty much how Armin Van Buuren used to do it, it's on YouTube where he explains this), and you play your song with the pitch up, the master tempo button on the CDJ has to be OFF, otherwise the signal won't be recognized anymore by Resolume. I'm not aware of frames that need to be skipped. I don't think this is really necessary.

People may have been getting away with that, but I believe it is in fact a violation of the SMPTE standard and would not work right with all SMPTE receivers, the carrier signal is supposed to be at a particular frequency, and if you slow it down or speed it up, it is of course not at that frequency. Of course Master Tempo needs to be off, though; trying to feed a digital signal through granular resynthesis, which is what Master Tempo does, will result in pure garbage. The safe way to do it is to send SMPTE at the correct frequency, and just alter the digital payload appropriately to drop or repeat frames if you are running fast or slow.

And if I recall correctly, that is how the smpte~ external does it; when you are running at nonstandard speeds, it uses a phasor~ to control the current timecode value, and queries that to determine the frame number to report in the SMPTE signal, but always sends it at the correct frequency.
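
A toy simulation of that idea, purely to show why frames get repeated or skipped. This is not the smpte~ external's code: the function name is made up, and it simply assumes one frame slot is emitted at the nominal rate while the timecode position advances at the playback speed.

```python
def frames_reported(speed: float, slots: int, fps: int = 25, start_ms: float = 0.0) -> list:
    """Frame numbers carried by successive SMPTE frame slots sent at the nominal rate."""
    slot_ms = 1000.0 / fps                  # real-time length of one emitted frame
    reported = []
    for slot in range(slots):
        # The position moves faster or slower than real time, like a phasor~
        # running at the adjusted rate.
        position_ms = start_ms + slot * slot_ms * speed
        # Tiny epsilon guards against floating point landing just under a frame.
        reported.append(int(position_ms * fps / 1000.0 + 1e-9))
    return reported


print(frames_reported(0.5, 6))   # half speed: each frame number is sent twice
print(frames_reported(2.0, 4))   # double speed: every other frame is skipped
```

The signal itself always runs at the standard rate; only the frame numbers in the payload change, so a receiver sees valid SMPTE whose time moves faster or slower.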

Ok, then i take back my words and stand corrected :-)!

We are in a good position not to have to develop anything commercial in that direction.

About varispeed SMPTE - it all depends on hardware.

If one has professional gear, then varispeed tape playback

which has SMPTE recorded on a track, works to some extent.

There were also freewheeling modes for fast forward, rewind,

I used it myself in old days with Otari 16 Track, digi SMPTE Slave driver, Motu digital piece etc

Even cheaper solutions could accept small change in speed,

so idea of playing back audio track could work in SOME cases,

definitely if no speed change is needed.

But now that we have a working solution for Your personal needs, Johnny,

one could leave that large world of other users to search for their solutions themselves...

At the beginning of this thread, I have looked around for information that could

help me understand what BLT was doing, what CD Players were sending,

and found quite a few links, like www.timelord-mtc.com etc etc

BLT is extensively documented and fully open source at all layers, so you can see exactly what it is doing, and I have shown my work and published my research every step of the way. It’s a Clojure program that provides a user interface and an integration platform on top of my Beat Link library, which is a Java open source library for interacting with the CDJs and mixers. The underlying research which allowed me to create Beat Link was performed with the help of my dysentery project, which is where you can find my analysis of the protocol that the CDJs and mixer use, and which BLT reacts to using Beat Link.

Now that my demonstration is successfully concluded, I do plan to find some time to respond to earlier messages in this thread. But first I want to enjoy the much-delayed arrival of spring weather, and spend some time outdoors!

All right, I am trying to catch up on all the discussion that happened while I was too busy to respond… It looks like everyone now understands that an AMXD can do anything that one or more standalone Max applications can do, in addition to tightly controlling Ableton, so that sounds like a very promising direction to pursue for Johnny’s particular show control goals.

Johnny, are you pursuing the approach of using Session View to launch your lighting control clips? If so, would you like a modified version of the Triggers file that sends a separate “stop all clips” message instead of re-sending the same message that was used to launch a track? All I would need to know in order to build that for you would be what note (or other MIDI message) you want sent as the stop message. But are you sure that you would only want to send that when all playback has finished? Surely, in the situation where you have cross faded to a different track, or stopped one track so another becomes master, you want the original clip to stop? I would think you want Stop All Clips to fire whenever the current master either stops or changes to a different player, followed by the message to launch the new clip for the new master.
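The ordering proposed here (Stop All Clips first, then the launch for the new master) can be sketched in Python as below. This is not Beat Link Trigger code. The message names `stop_all_clips` and `launch_clip` are placeholders, and `None` is assumed to mean that no deck is currently the playing master.

```python
def messages_for_master_change(previous_master, new_master):
    """Return the ordered messages for one master-deck transition.

    Arguments are player numbers, or None when no deck is the playing
    master. The message names are placeholders for this sketch.
    """
    messages = []
    if previous_master is not None and previous_master != new_master:
        # The old master stopped or lost master status: stop its clip first.
        messages.append(("stop_all_clips",))
    if new_master is not None and new_master != previous_master:
        # Then launch the clip that belongs to the new master.
        messages.append(("launch_clip", new_master))
    return messages


print(messages_for_master_change(1, 2))
print(messages_for_master_change(2, None))
```

A change from player 1 to player 2 yields the stop followed by the launch. A master that simply stops yields only the stop.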

And thanks, Source Audio, for the details about working with different kinds of SMPTE sync hardware, that was quite interesting. Also, just as a side note, the Timelord-MTC solution that you linked to is using my discoveries about the protocol in order to generate MTC. This is a small world. :)

It is a busy time, so my contribution is going to be a bit less frequent.

I finished DualSMPTE+MTC.app, as Johnny asked for, here are details,

please confirm that all is as You wish, then I'll upload the app.

MTC listens to UDP port 17002, messages /master/...

SMPTE1 listens to UDP port 17001, messages /left/...

SMPTE2 listens to UDP port 17001, messages /right/...

----------

UDP port, Frame Rate, Offset in ms, Output Switch and SMPTE attenuator are stored in a file. Also the MIDI output device for MTC.

Output switches are not switching UDP receiver, but output of MTC and SMPTE.
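For anyone wondering how Frame Rate and Offset interact, here is a small Python sketch of the arithmetic. It is not taken from the app. It assumes the position arrives as whole milliseconds, that the offset is simply added before conversion, and that 30fps means non-drop timecode. The function name is made up.

```python
def ms_to_timecode(position_ms, fps=25, offset_ms=0):
    """Convert a position in milliseconds to HH:MM:SS:FF (non-drop)."""
    # Apply the offset first; never go below zero.
    total_ms = max(0, position_ms + offset_ms)
    total_frames = total_ms * fps // 1000
    frames = total_frames % fps
    total_seconds = total_frames // fps
    seconds = total_seconds % 60
    minutes = (total_seconds // 60) % 60
    hours = total_seconds // 3600
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


print(ms_to_timecode(61_500, fps=25))
print(ms_to_timecode(61_500, fps=30))
print(ms_to_timecode(1_000, fps=25, offset_ms=-40))
```

The same position lands on a different frame number at 25 and 30fps, and a negative offset of 40 ms moves the 25fps output back by exactly one frame.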

Here is the GUI; it positions itself top/right on the screen:

Hi all, been out camping for the weekend, and ready now to start working on this again.

I've been looking into working in the Session view instead of Arrangement view, and it certainly feels like the better solution, for a number of reasons. I've been testing with a few tracks, and unfortunately I've gotten a bit stuck with clips in the session view not launching at the correct position (but always from the beginning of the clip). I've tried various things, including launching the clips in Legato mode, but so far I haven't found the correct solution to glue the clip position to the global time/position. I posted a question about this on the Ableton forum, and hope to find some help there.

In the meantime I'm going to keep things as they were, in Arrangement view.

Source audio, I would prefer to have MTC listen to 17001, and SMPTE1 & SMPTE2 to 17002. Besides that this looks correct.

PS: Could you also upload that OSC test app you made, so I can test my setup without the need of always connecting my laptop to the CDJ network hub which is in another room. Thanks!

Hi Johnny, the reason I set the ports like that was Your post from Apr 20 2018 | 11:45 am:

I would prefer an offset function for both MTC and SMPTE separately (same offset for both SMPTE signals is fine); By the way, if track positioning is updated every 80ms, that is not really a problem for me. I can live with that. The SMPTE app listens to port 17001 (but you can change it to whatever you want), and MTC should listen to 17002 (like the AMXD currently does)

-------

I will change that now to MTC 17001, and both SMPTE to 17002.

In a standalone it is no problem to have default value set to whatever,

and recall stored setting a bit later.

It could become a problem in a Live device, because I have no idea when loadbangs get executed

in respect to stored values in a Song...

So it is always better to set default values to what one really wants.

--------

I will upload the app today.

On the list are also dual SMPTE only version , Live device that includes

BLT-Live and 2 SMPTE streams, and OSC Sending App to simulate BLT.

For that last one I thought of 2 options :

A simple one would be to send 3 values like BLT does

/master/enable /master/speed and /master/time

That means You can set speed and send any position to Live

to realign.
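A rough Python sketch of such a simple sender, using only the standard library, could look like this. It is not the test app itself. The argument types (int for enable, float for speed and time), the unit of the time value, and the default host and port are assumptions for illustration. The byte layout follows the OSC 1.0 rules for a single-argument message.

```python
import socket
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, value) -> bytes:
    """Encode one OSC message carrying a single int or float argument."""
    if isinstance(value, int):
        return _pad(address.encode()) + _pad(b",i") + struct.pack(">i", value)
    return _pad(address.encode()) + _pad(b",f") + struct.pack(">f", float(value))


def send_master_state(enable, speed, time_value, host="127.0.0.1", port=17001):
    """Send the three master values the way a simulator might (assumed types)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for address, value in (
            ("/master/enable", enable),
            ("/master/speed", speed),
            ("/master/time", time_value),
        ):
            sock.sendto(osc_message(address, value), (host, port))
```

Calling `send_master_state(1, 1.06, 12345.0)` would send three UDP packets to the chosen port. The receiver has to agree on the argument types and on the unit used for the time value.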

As You have Audio Track in Live You can listen to it in Live.

---------

Other version could have audio player, You would load

Song that You want to work with into it, and then let it play

using Loop points, speed etc.

Time update will then behave like a CD Player....

But for both versions we are talking about master track only.

-----------

So please just let me know what You prefer.

-----------

About Your Song selecting problem, session view , arrangement view -

have a look in the Live Api documentation :

https://docs.cycling74.com/max7/vignettes/live_object_model

I tested selecting Clips and maintaining current time position.

Clips were set to trigger and legato.

It worked, but one has to first fire Clip numbers and then update time.

All called from max4live device

Hi Source Audio, I had a look at the documentation, but need to study it some more for me to understand.

Right now I'm not completely sure anymore if the clips have to be triggered in legato-mode, because when a new scene is launched (= a new track starts playing), this new scene doesn't have to start at the position where the previous scene left off. Instead it has to start at the transport position. Correct me if I'm wrong.

Did you make a max4live device for this? Or does one exist?

Hi Source Audio, the built-in audio player isn't really a priority for me. It's nice to have, but not essential at all. Indeed I can listen to the audio in Ableton anyway.

About the song selection: In Session View the whole activation/deactivation of tracks is handled quite differently. In fact, the only time a track needs to be stopped in Session view is when the master track is being manually stopped (NOT when the track ends when another deck is already the master).

A scene (the horizontal row which contains both the audio track and the midi track) is being triggered at once with the scene launch button on the right. Whenever the master deck changes on the CDJ, a new scene is launched (and if the first track is still playing, it ends now).

So in terms of crossfading, tracks only overlap on the CDJ's. In Ableton, only the master track plays, so no crossfades happen there.

Are you sure about the first track ending when a new scene is launched? Perhaps you have your Session view clips configured differently than mine, but that was not how they worked for me when I was playing with it (otherwise how could you have multiple samples playing at the same time?)

Also, why use different ports for MTC and SMPTE when they are all being generated by the same AMXD? You are already using different OSC paths for the different parameters, so you really only need one OSC listener on a single port, right? The need for separate ports existed only in the world where you had separate standalone applications running in different processes for handling MTC vs. SMPTE.
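To illustrate the single-listener idea, here is a Python sketch that decodes one OSC message and routes it by its path, so that /master/..., /left/... and /right/... can all arrive on the same port. It is only an illustration, not how the AMXD does it. It assumes each message carries exactly one int or float argument, and the function names are made up.

```python
import struct


def parse_osc(packet: bytes):
    """Decode a single-argument OSC message into (address, value)."""
    end = packet.index(b"\x00")
    address = packet[:end].decode()
    offset = (end + 4) & ~3  # skip the padding up to the next 4-byte boundary
    tag_end = packet.index(b"\x00", offset)
    tags = packet[offset:tag_end].decode()
    offset = (tag_end + 4) & ~3
    fmt = {",i": ">i", ",f": ">f"}[tags]  # only int and float are handled here
    (value,) = struct.unpack_from(fmt, packet, offset)
    return address, value


def handle_packet(packet: bytes, state: dict) -> None:
    """Store the value under state[target][parameter], e.g. state['left']['time']."""
    address, value = parse_osc(packet)
    _, target, parameter = address.split("/", 2)
    state.setdefault(target, {})[parameter] = value
```

One UDP socket would feed every received packet into `handle_packet`, and the MTC and both SMPTE generators would each read only their own entry in `state`.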

And in the long run it will be better for MTC to be generated directly by Beat Link Trigger as a new Trigger message type. But who knows when my real work will slow down enough for me to think about implementing that. ^_^

Hi James,

as far as I know, Ableton is only capable of playing 1 scene (or 1 clip in a column) at a time, so once a new scene is launched, the previous one stops automatically (that's why a deactivation midi note is not necessary).

Perhaps it could be possible with a Max4Live device, but that isn't necessary for my setup. The only track playing in Ableton should be the master track.

You are correct, 1 OSC port could work as well.

Having BLT generate MTC would be really great, but at this point I can survive without.

Ah! Thanks for the clarification, I was missing the fact that you were putting them all in the same column. Great, that makes sense. If you ever get the positioning working properly in that mode, I can make a simpler version of the Beat Link Trigger configuration that sends the Stop message only when all music stops. At that point I will want to start with your current version, though, since you will probably have added a bunch of other stuff to it.

Yes, putting all the audio tracks in 1 column and the midi tracks in another makes the most sense to me. I can midi map a knob to scroll through all my songs this way, among other reasons.

I was surprised to find that the clips in Session view don't respond the same way as in Arrangement view. I hope a solution exists where the clips follow the main playhead in Ableton, because it would simplify my setup quite a bit. However, if not, I'll stick to working in Arrangement view.

That's what I tried - set 2 Tracks, each having 1 Midi and 1 Audio Song per Clip Insert.

At the end grouped the 2 tracks, because that made selecting Clips easier.

That way, using a call to Live to select the Clip number, and then sending

new time and speed, starts the new Clip/Song from the wanted position in the Session view.

In fact it is possible to both -> grab the current Live's Transport

position, or receive new from BLT.

The timing of messages has to be in proper order, then it works just fine.

Sending position messages over OSC, and Clip Select via midi will not guarantee the order of messages.
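One way to picture the ordering requirement is a small gate that holds back the position until the clip has been fired. The Python sketch below is not the max4live device. The class and method names are hypothetical, and the `actions` list only stands in for whatever would actually be sent to Live.

```python
class ClipThenTime:
    """Hold back a position update until its clip has been fired."""

    def __init__(self):
        self.clip_fired = False
        self.pending_time = None
        self.actions = []  # ordered record of what would be sent to Live

    def expect_new_clip(self):
        """Call when the master changes: the next time must wait for a clip."""
        self.clip_fired = False
        self.pending_time = None

    def on_clip_select(self, clip_number):
        self.actions.append(("fire_clip", clip_number))
        self.clip_fired = True
        if self.pending_time is not None:
            # A position arrived early; release it now, after the clip.
            self.actions.append(("set_time", self.pending_time))
            self.pending_time = None

    def on_time(self, time_value):
        if self.clip_fired:
            self.actions.append(("set_time", time_value))
        else:
            self.pending_time = time_value  # keep only the newest position


seq = ClipThenTime()
seq.on_time(1000)      # position arrives first, e.g. over OSC
seq.on_clip_select(3)  # clip select arrives later, e.g. over midi
print(seq.actions)
```

Whichever message arrives first, the clip is fired before the time is set, which is the order that worked in the test described above.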

--------

It would help me a bit to go on with AMXD which also sends 2 SMPTE signals out if You would decide on UDP Port numbering.

Shall I include port separation or not ?

That makes sense. It would be easy to turn off the MIDI sending in the triggers, and use an OSC message to select the clip and start it playing, if you could describe the necessary parameters. We should probably use another OSC message to stop playback then.

That sounds promising, and there is no hurry on this! Please let me know what parameters will be needed in the OSC message for clip switching. It sounds like a clip number, an integer? If so, how would Johnny find out what that number is for his clips so he can update his track map to hold these parameters instead of the current MIDI note and MIDI channel?

Source audio,

I tried grouping the audio and midi clip in session view, but this option is greyed out... Am I doing something wrong?

Below is a screenshot of how my clips are organized, and how I really prefer them to be.

Since James pointed out that both MTC and SMPTE can use the same port, I can definitely live with that. It's what you prefer.

Just a thought, but isn't it possible to have OSC trigger the Scene Launch button (the triangle icon on the right)?

About the parameters: Right now every song in BLT triggers a midi note. How about if I name my scenes that same midi note?

Been trying out the MTC+SMPTE app.

For the most part it works decently, but there are a few things:

- The big timer is very good.

- The clock doesn't work when the MTC line is set to 'Off'.

- I can't get the SMPTE 2 to work. Both CDJ decks output on SMPTE 1. Also the 'Start' button in the app for SMPTE never turns red when deck 2 plays. However the SMPTE that's being sent is correct. I'm guessing this has something to do with the triggers...

- Both "Current Position" timers for the SMPTE don't work. They always stay at 00:00:00:00

- If I move the pitch on my CDJ to +6%, the speed changes to 1.1 instead of 1.06
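About the last point: one possible explanation, and this is purely a guess since I have not seen how the app computes the value, is that the speed gets rounded to one decimal place somewhere. A tiny Python sketch of that effect, with a made-up function name:

```python
def speed_from_pitch(pitch_percent, decimals):
    """Convert a pitch offset in percent to a speed factor, rounded."""
    return round(1 + pitch_percent / 100, decimals)


print(speed_from_pitch(6, 1))  # one decimal place
print(speed_from_pitch(6, 2))  # two decimal places
```

With one decimal place, +6% comes out as 1.1. With two decimal places it stays 1.06.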