Sending a prompt from a Max patch to an LLM server (Llama or ChatGPT)

Hello.

When prompting from Max, the [udpsend] object changes the text, adding a comma or "text". For example, if I prompt "what is the capital of France?":

From [textedit], the terminal shows: anything, stext is the capital of france?

From a message box, the terminal shows: anything, swhat is the capital of france?

I've tried [regexp], but nothing seems to work.

Ideally, I would like to send the prompts from a message box.

Any ideas will be appreciated.

Irbach

Does the LLM API actually require anything like "text what is the capital of france?"?

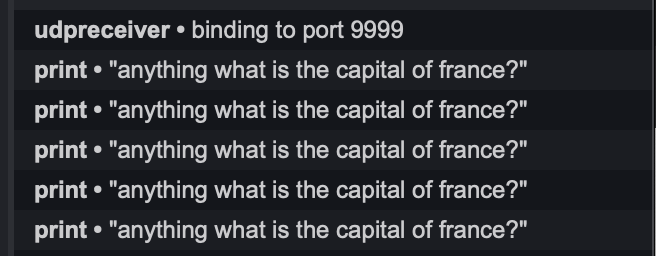

What are you using in the terminal to monitor the incoming UDP packets? nc? I'm using ncat (from the nmap package) with `ncat -u -l 9999`, which gives this output:

The commas are still at the end, but using a mix of [route text] and [tosymbol], the Max console prints the string without extra formatting. The trailing comma is most likely not a UDP separator: [udpsend] sends OSC-formatted packets, and in OSC the type-tag string starts with a comma followed by one letter per argument ("s" for a string). The "text" you were seeing is automatically prepended by [textedit], so we just remove it with [route text]. In this particular case, when sending over UDP, it's simpler to treat everything as a single symbol ([tosymbol]); this stops type tags like "s" from being prepended to the text.
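To see where the "," and the "s" come from, here is a minimal Python sketch of the OSC 1.0 message layout, assuming [udpsend] emits standard OSC messages whose arguments are strings. The helper names (`osc_pad`, `osc_message`, `naive_dump`) are hypothetical, and `naive_dump` only approximates what a terminal shows once the NUL padding bytes are dropped.

```python
def osc_pad(data: bytes) -> bytes:
    """NUL-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires for strings."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args: str) -> bytes:
    """Build an OSC message: address, type-tag string (',' plus one 's' per arg), args."""
    packet = osc_pad(address.encode("utf-8"))
    packet += osc_pad(("," + "s" * len(args)).encode("utf-8"))
    for arg in args:
        packet += osc_pad(arg.encode("utf-8"))
    return packet


def naive_dump(packet: bytes) -> str:
    """Approximate a terminal view: printable bytes with the NUL padding removed."""
    return packet.replace(b"\x00", b"").decode("utf-8")


# Whole prompt as a single symbol (the [tosymbol] route): the prompt becomes the
# address and the empty type-tag string leaves a lone trailing ",".
print(naive_dump(osc_message("what is the capital of france?")))
# Prompt as a string argument after an address: ",s" appears before the text.
print(naive_dump(osc_message("/prompt", "what is the capital of france?")))
```

The second case shows why text sent as an argument appears as "swhat is the capital of france?": the "s" is the type tag, not part of the prompt.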

Here's the updated patch. If the trailing comma is still present and causes problems, you can always clean it up on the receiving end (unless this is a direct call to the LLM server, I don't think it matters much).
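On the receiving end, instead of stripping commas with a regex, you can decode the OSC message properly. This is a minimal Python sketch using only the standard library, assuming the packets are plain OSC messages (not bundles), that they arrive on localhost, and port 9999 as in the ncat test. The function names are hypothetical, and only the `s`, `i` and `f` type tags are handled.

```python
import socket
import struct


def read_osc_string(packet: bytes, offset: int) -> tuple[str, int]:
    """Read a NUL-terminated OSC string padded to 4 bytes; return it and the next offset."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("utf-8")
    next_offset = end + 1
    next_offset += -next_offset % 4  # skip the padding NULs
    return text, next_offset


def parse_osc(packet: bytes) -> tuple[str, list]:
    """Split one OSC message into (address, arguments); handles s, i and f tags only."""
    address, offset = read_osc_string(packet, 0)
    if offset >= len(packet):  # no type-tag string was sent
        return address, []
    tags, offset = read_osc_string(packet, offset)
    args: list = []
    for tag in tags.lstrip(","):  # the leading "," starts the type-tag string
        if tag == "s":
            value, offset = read_osc_string(packet, offset)
        elif tag == "i":
            (value,) = struct.unpack(">i", packet[offset:offset + 4])
            offset += 4
        elif tag == "f":
            (value,) = struct.unpack(">f", packet[offset:offset + 4])
            offset += 4
        else:
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
        args.append(value)
    return address, args


def prompt_from_packet(packet: bytes) -> str:
    """Recover the prompt text from one [udpsend] packet."""
    address, args = parse_osc(packet)
    if not args:
        return address  # [tosymbol] route: the whole prompt is the address
    return " ".join(str(arg) for arg in args)  # prompt sent as arguments


def serve(port: int = 9999) -> None:
    """Print each decoded prompt received on 127.0.0.1:port (blocks forever)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("127.0.0.1", port))
        while True:
            packet, _sender = sock.recvfrom(65535)
            print("Prompt:", prompt_from_packet(packet))
```

Calling `serve(9999)` in place of an existing receive loop would print the prompt without the trailing comma; it blocks until interrupted. Parsing the type tags, rather than deleting characters, keeps legitimate commas inside the prompt intact.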

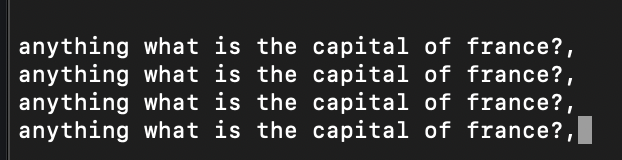

Thank you! Yes, as I understand it, the LLM does not accept the comma at the end, and [regexp] is not removing it in the JSON file. The patch you sent works fine, but the terminal shows:

Received: what is the capital of france?,

Prompt: what is the capital of france?,

It seems that Max adds the comma at [udpsend], so no matter what I do before that, it reaches the terminal like this. BTW, it no longer shows messages in the Max console.
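If you would rather keep your existing receiving script, the smallest change for the [tosymbol] route is to cut each packet at the first NUL byte, assuming (as in the OSC layout) that the whole prompt travels as the address and the padding plus the "," type-tag string follow it. The sketch then builds the JSON body with `json.dumps`, which escapes quotes and other special characters, so no regex on the JSON is needed. The `"prompt"` key and both function names are hypothetical; use whatever field your LLM server actually expects.

```python
import json


def prompt_from_tosymbol_packet(packet: bytes) -> str:
    """Return the text before the first NUL byte.

    After [tosymbol] the prompt is sent as the OSC address, so the padding
    NULs and the "," type-tag string all come after the first NUL.
    """
    return packet.split(b"\x00", 1)[0].decode("utf-8").strip()


def build_request_body(prompt: str) -> str:
    """Serialize the prompt as JSON; the "prompt" key is a hypothetical field name."""
    return json.dumps({"prompt": prompt})
```

This shortcut only fits the [tosymbol] route; if the prompt is sent as OSC arguments, the text lies after the first NUL and a real OSC parser is needed.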

Maybe there is a better way to prompt an LLM from Max...