export PNG image (beyond jit.world) with color space information

Hi there

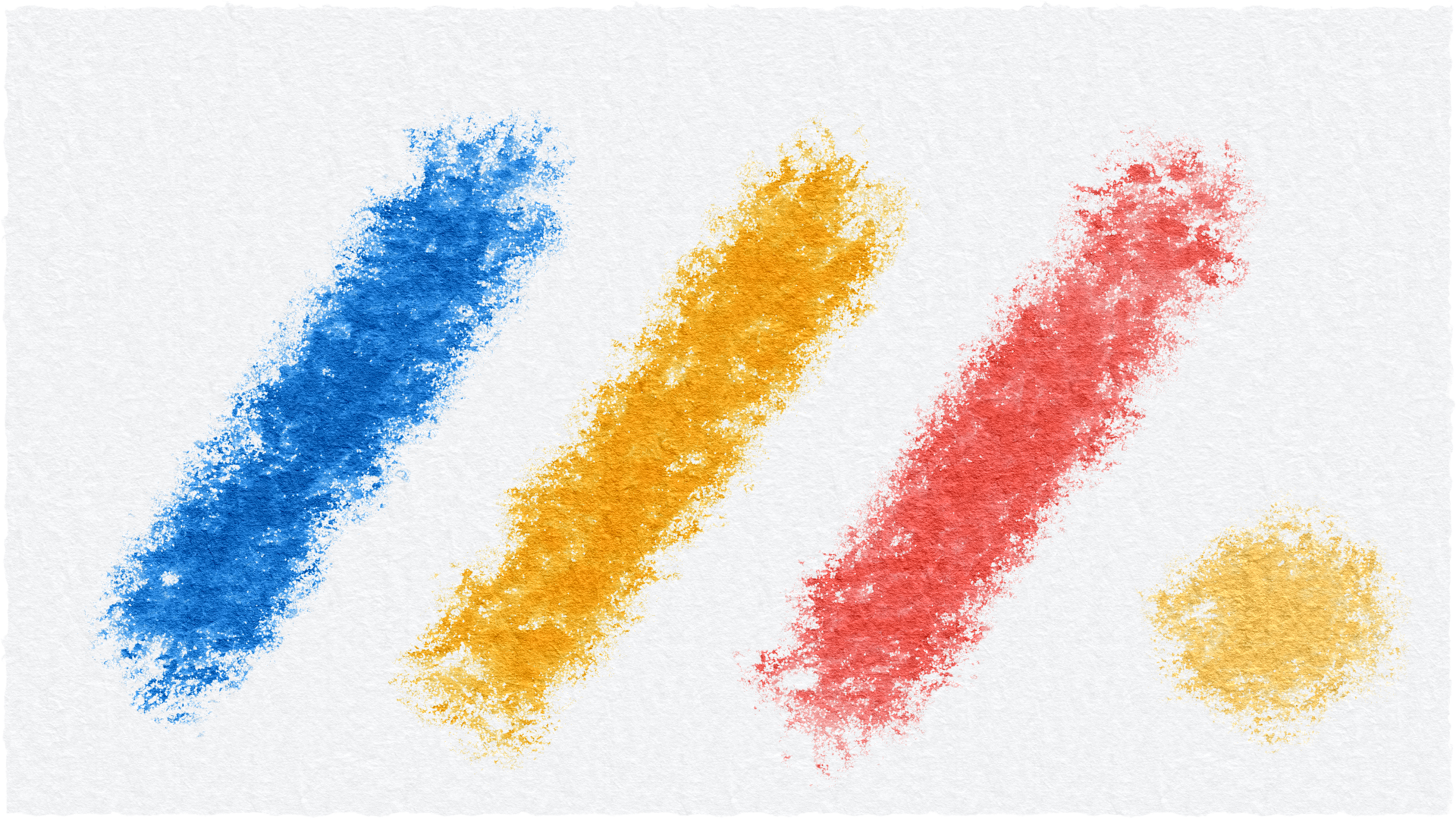

I am using digital artwork images (PNG) that have sRGB color space information to create audio visuals inside Max with Jitter.

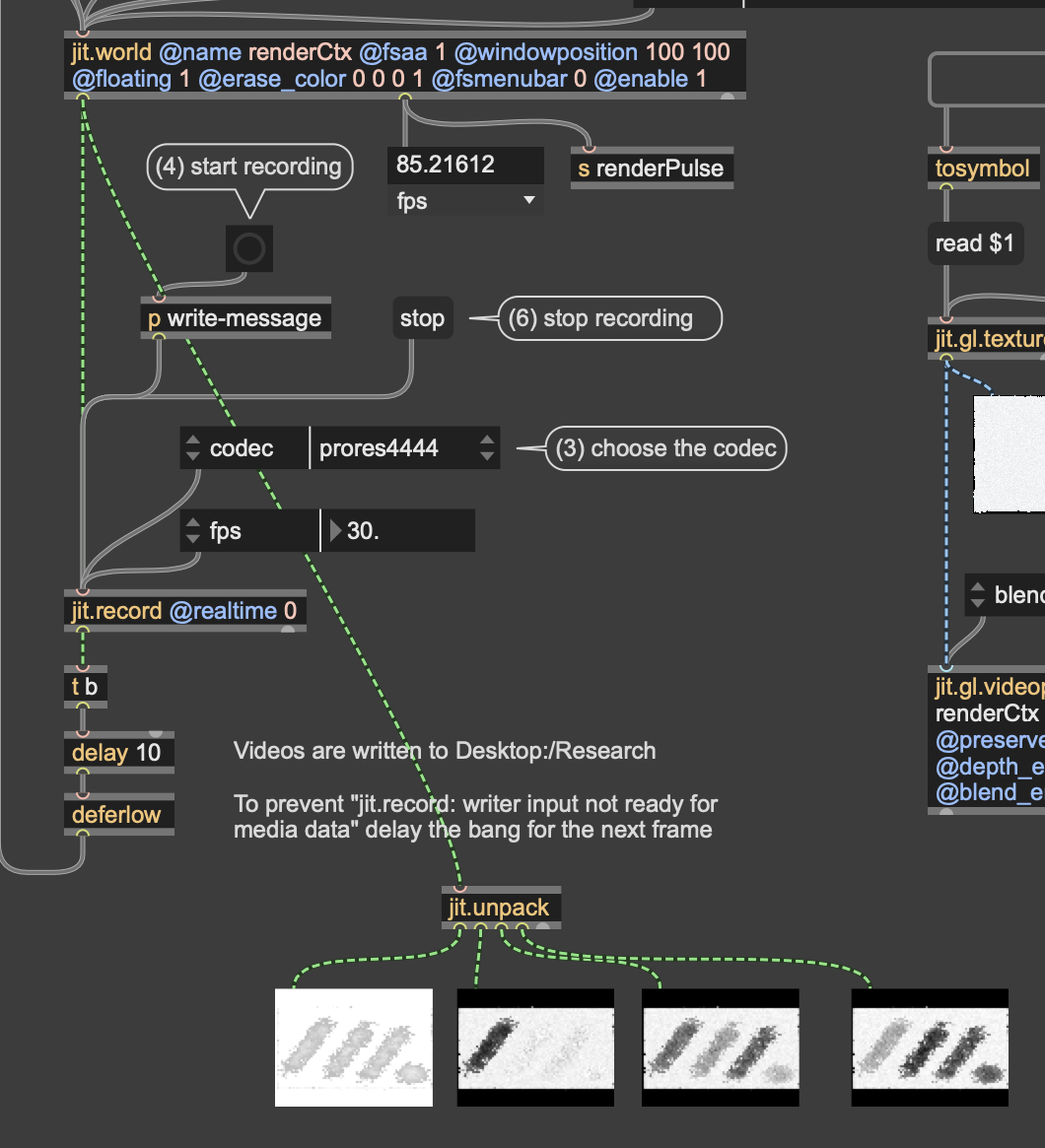

Lacking a proper way to record the Jitter output as a 4K video - the ProRes 4444 codec has a bug that I reported to Cycling 74 Support in March and they are working on it, but there is no information on when it will be resolved - I am trying the PNG image route.

Following the Max user guide I use jit.matrix and exportimage - but I have no control (or I am missing the knowledge ;-) over the metadata that is written into the PNG files, hence they lack the sRGB color space information.

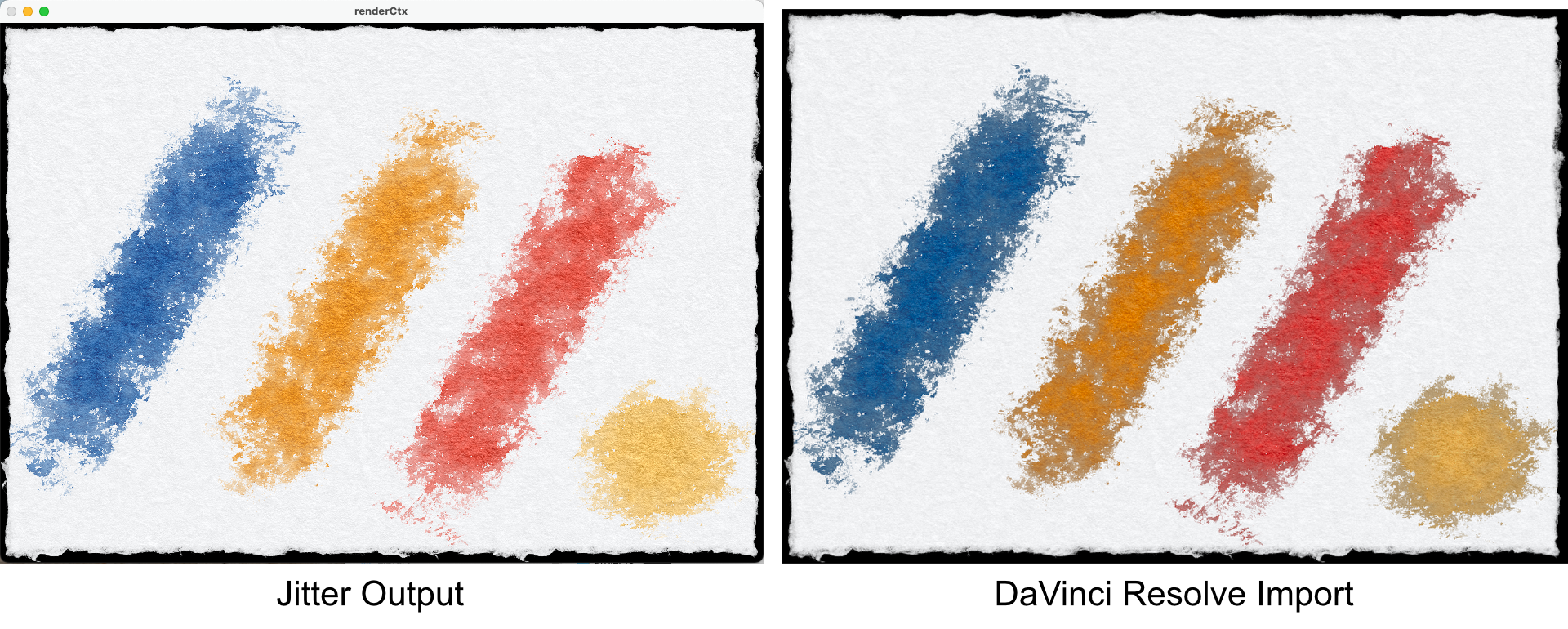

The problem : when importing the PNGs (as an image sequence) into DaVinci Resolve to add the audio and render the video, the missing color space information leads to very different colors - or in other words : the visual composition is ruined ;-).

The attached image "Jitter Export in DaVinci Resolve.png" makes it obvious.

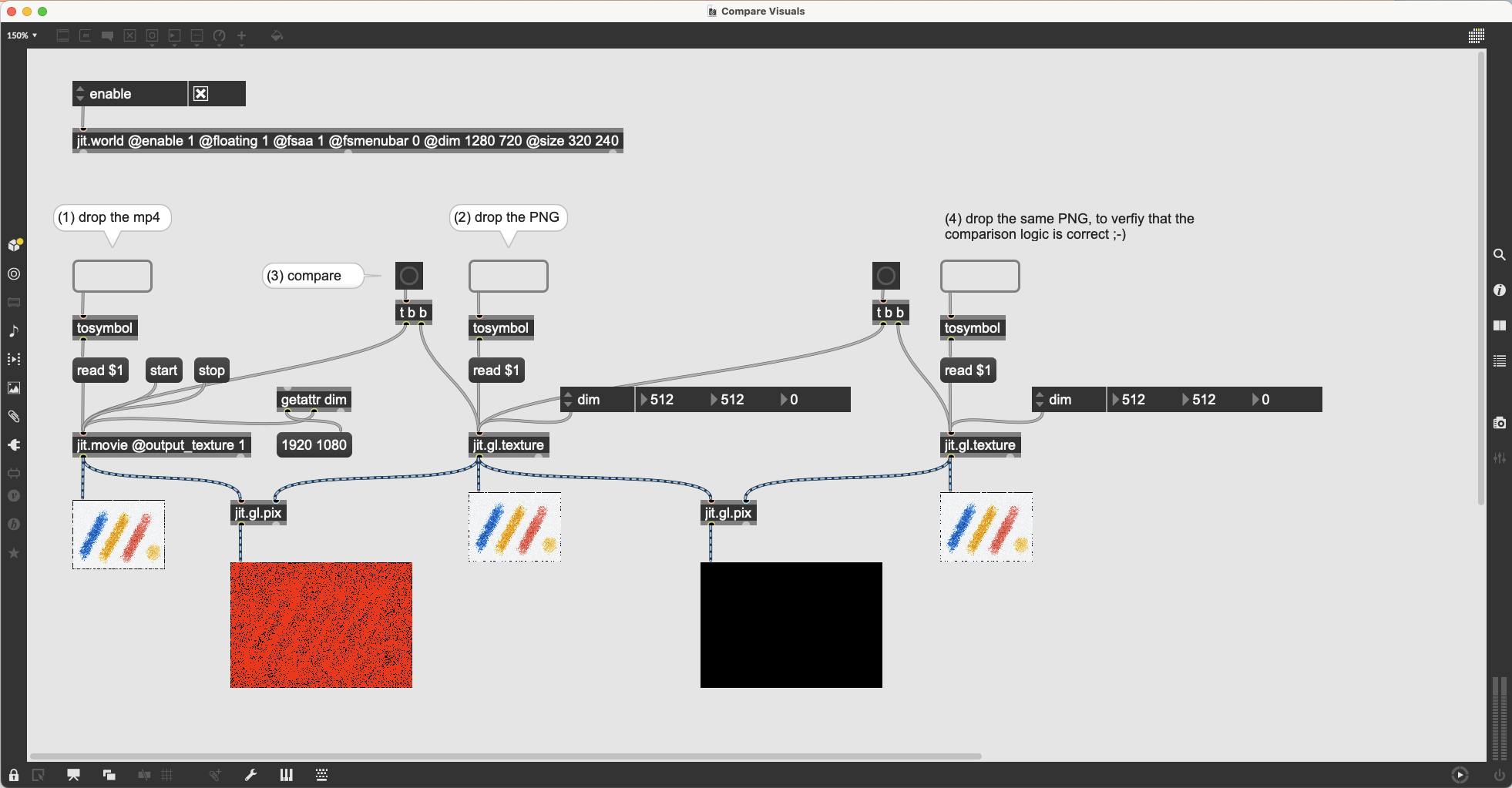

With the attached patcher and the 2 sRGB images (Canvas.png and Colors.png) this problem can be reproduced - just follow the bubble comments in the patcher.

+++

So I wonder if I am missing any option in jitter to write appropriate color space data into the PNGs.

By the way - the C74 article series "polish your pixels" and especially the Color Science demystified article is great on that subject and explains the RGB <-> sRGB challenge and solutions in jitter very well, but it lacks information when it comes to "store the stuff to disk".

As a workaround I see 2 possible routes :

(1) write the missing metadata into the PNG myself, using a node.script and an npm package that does the file IO for me. Some first research makes me believe that there are 2 essential PNG chunks that matter : gAMA and cHRM.

(2) using Syphon and maybe Syphon Recorder to grab the images outside of Max and apply the metadata there - but I don't know if setting the PNG metadata is even possible in the Syphon apps.

The challenge : I hope that some day I can make this tool available to others and hence I want to limit the amount of secondary tools needed. So the Syphon route, which requires another external app (Syphon Recorder or OBS or ?), is not what I really want.

On the other hand, I am fine with saving the visual composition to a PNG image sequence so that other users only need a video software like DaVinci Resolve (or I use ffmpeg and the shell object to render it from inside the Max patch) - but for this I need the correct color space data inside the PNGs.

What this metadata has to be can be retrieved (via a node.script) from the original PNGs, which are exported from whatever digital artwork software creates the "input images" for the jitter patch.
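To illustrate the "retrieve it from the original PNGs" idea, here is a minimal sketch that lists the chunks of a PNG and picks out the color-related ones. It only uses Node's built-in `Buffer`. The function names `listPngChunks` and `colorChunks` are made up for this example, and the CRC fields are skipped rather than verified.

```javascript
// Sketch: list the chunks of a PNG held in a Node.js Buffer.
// A PNG is an 8-byte signature followed by chunks of the form
// [4-byte length][4-byte type][data][4-byte CRC].
const PNG_SIGNATURE = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);

function listPngChunks(png) {
  if (!png.subarray(0, 8).equals(PNG_SIGNATURE)) {
    throw new Error("not a PNG file");
  }
  const chunks = [];
  let offset = 8;
  while (offset + 12 <= png.length) {
    const length = png.readUInt32BE(offset);
    const type = png.toString("latin1", offset + 4, offset + 8);
    const data = png.subarray(offset + 8, offset + 8 + length);
    chunks.push({ type, length, data });
    offset += 12 + length; // length field + type + data + CRC (CRC not checked here)
    if (type === "IEND") break;
  }
  return chunks;
}

// Color-related chunk types defined by the PNG specification.
const COLOR_CHUNKS = new Set(["sRGB", "gAMA", "cHRM", "iCCP"]);

function colorChunks(png) {
  return listPngChunks(png).filter((chunk) => COLOR_CHUNKS.has(chunk.type));
}
```

Inside a node.script this could be called as `colorChunks(require("fs").readFileSync("Colors.png"))` to see which color chunks the artwork software wrote.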

Any ideas are very much appreciated

+++

Does SyphonRecorder (or OBS) give "proper" results with ProRes4444 when loaded in Resolve? Or is it the same as directly from Jitter?

Wondering if this is an alpha premultiply issue as opposed to a colorspace issue.

@Rob : I can give it a try later (because I have no Syphon pipeline setup on my Mac, nor patched in Max yet) - currently I am on the first route trying to write the PNG metadata in a node.script.

The attached patcher is the one I shared with the C74 support - so if you have a Syphon pipeline going, you may check it yourself in the meantime, using the 2 (sRGB) PNG images from the post above.

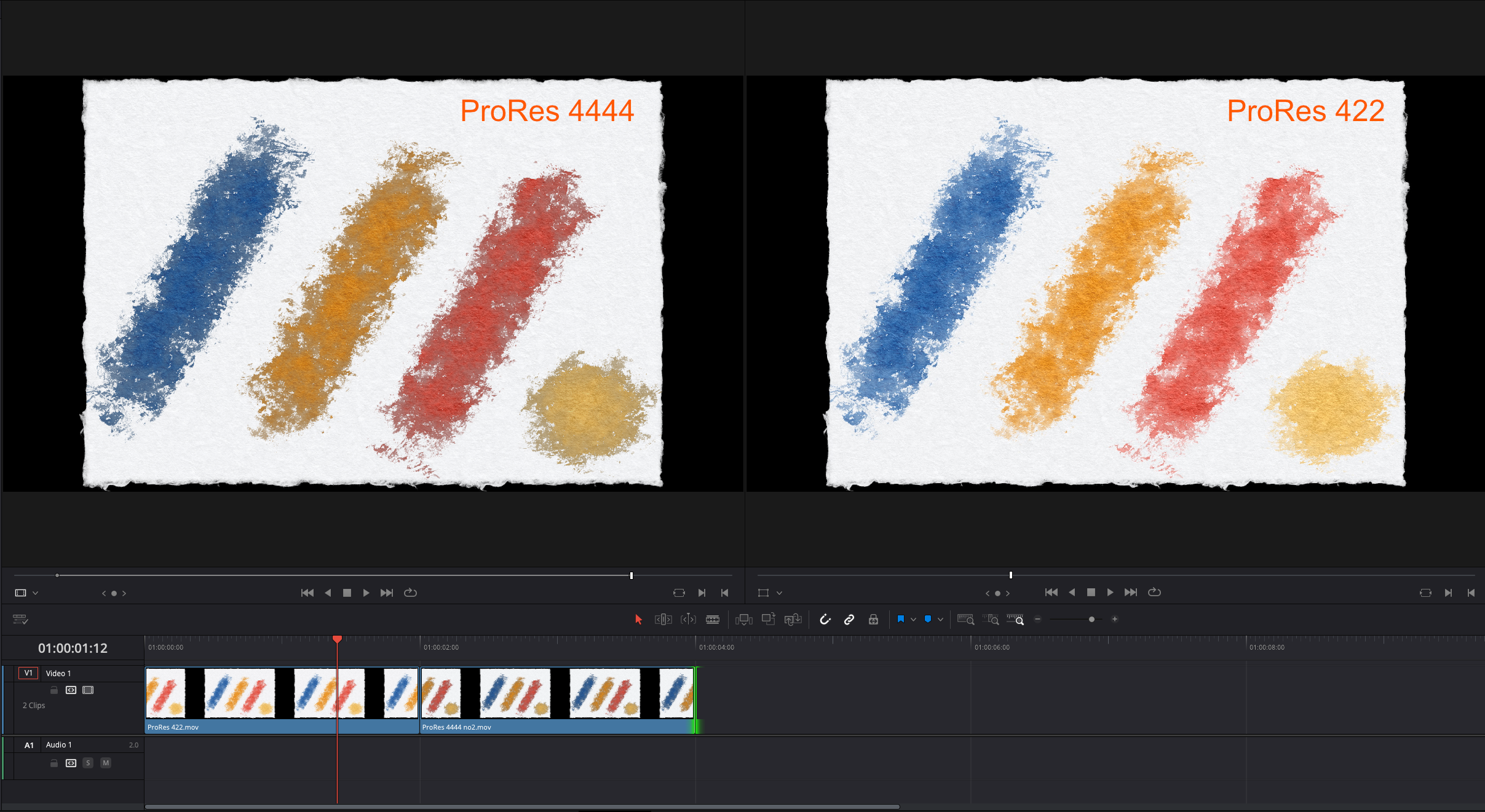

The image "ProRes 422 vs ProRes 4444" shows the "video frame" in Quicktime, when exported with these codecs via jit.record from the attached patcher - technique taken from your article by the way.

so the prores 422 one is "correct"? If so I think that indicates some type of alpha premultiplying on the player.

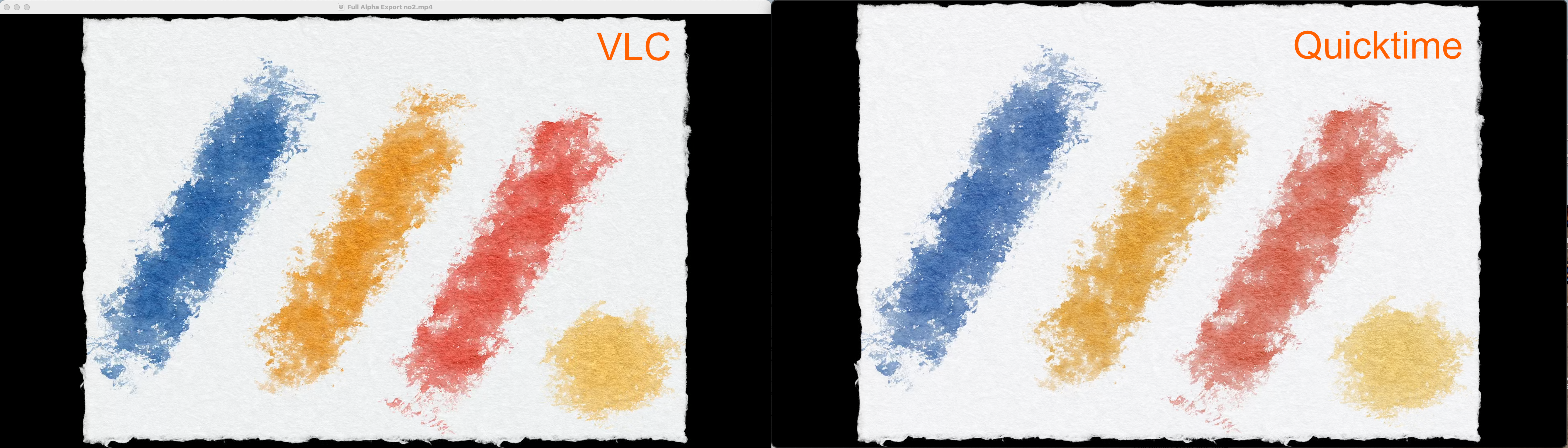

I'm fairly certain this is an issue with premultiplied alpha vs straight. If you open the recordings in VLC player they look as expected. Not sure about Resolve but some clients allow you to interpret the source footage as pre-multiplied or not, so that might be enough for your use case.

We might be able to add this meta information to the recording, which I think would indicate to quicktime how to interpret, but I guess it defaults to assuming premultiplied, and we export as straight.

But I don't think this has anything to do with colorspace.

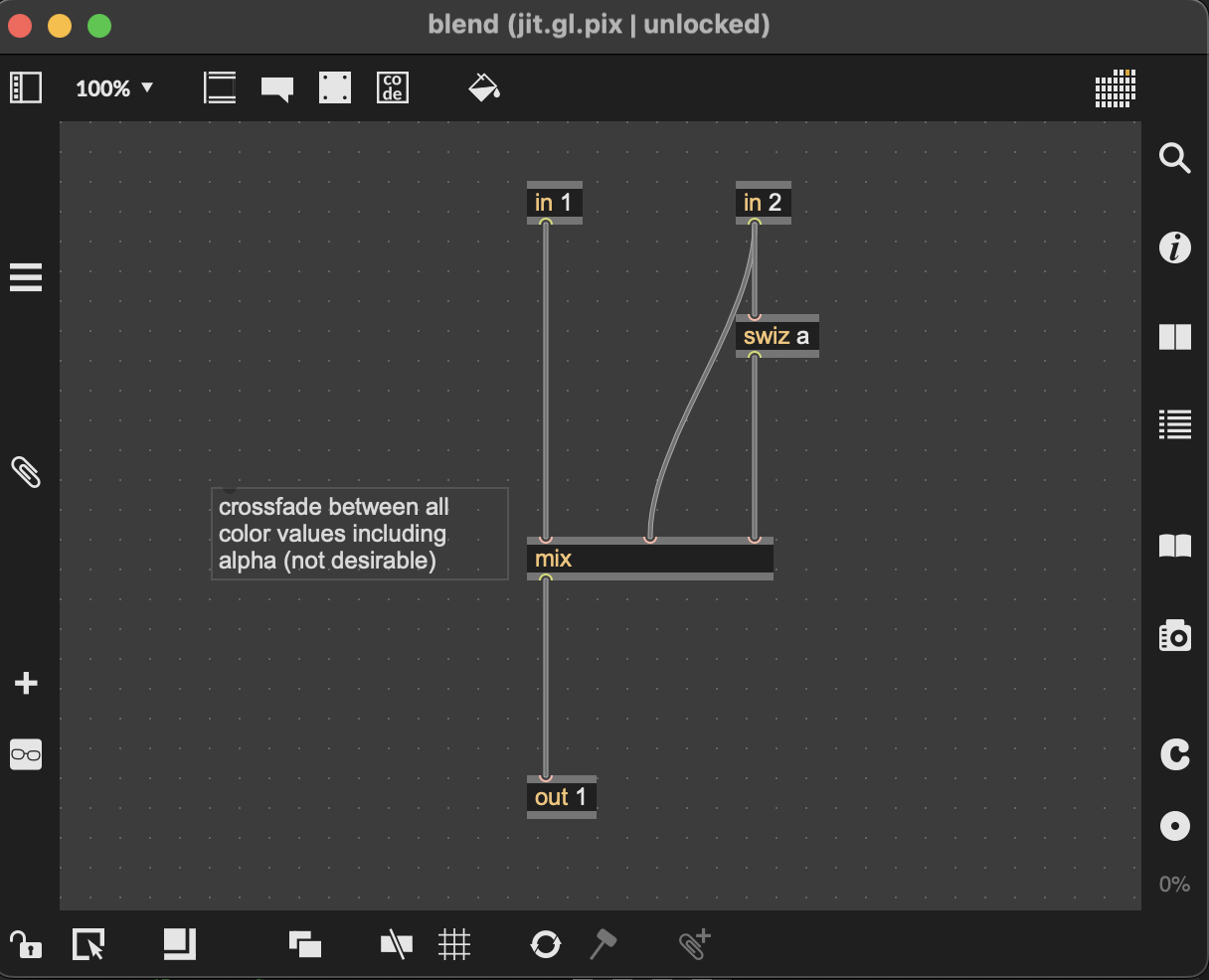

here's your patch with a manual premultiply pix on the output, which to me looks exactly like the quicktime player view of the recording, indicating that premultiplying is the culprit here.
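For readers new to the straight vs premultiplied distinction, a small sketch of the arithmetic for a single pixel (channels in 0..1). It does not claim to show what QuickTime or Resolve do internally; it only shows that the two interpretations of the same stored values give different colors, and that compositing a straight-alpha pixel over black gives the same RGB as a manual premultiply.

```javascript
// Sketch: straight vs premultiplied alpha for one [r, g, b, a] pixel, channels in 0..1.

// Turn a straight-alpha pixel into a premultiplied one.
function premultiply([r, g, b, a]) {
  return [r * a, g * a, b * a, a];
}

// Composite over a background, treating the pixel as straight alpha.
function overStraight([r, g, b, a], [br, bg, bb]) {
  return [r * a + br * (1 - a), g * a + bg * (1 - a), b * a + bb * (1 - a)];
}

// Composite over a background, treating the same values as already premultiplied.
function overPremultiplied([r, g, b, a], [br, bg, bb]) {
  return [r + br * (1 - a), g + bg * (1 - a), b + bb * (1 - a)];
}

const pixel = [0.2, 0.4, 0.8, 0.5];
const black = [0, 0, 0];
console.log(overStraight(pixel, black));      // [0.1, 0.2, 0.4]
console.log(overPremultiplied(pixel, black)); // [0.2, 0.4, 0.8]
```

Wherever alpha is below 1, the two readings disagree, which is why a player's assumption about the alpha type can change the picture so visibly.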

Thanks @Rob for your investigation ... I will reproduce it soon, see what happens with Resolve and report it back.

I stopped my research back then after seeing the results in the Quicktime player - so it's great to know about this pre-multiplied alpha feature of video clients - thanks for sharing.

Concerning color space : this issue still remains for PNGs that are created via jit.matrix exportimage.

Or in other words, you solved the initial issue from March, but not the current one ;-) ... which is nevertheless awesome !!

The thing is that working with PNG images instead of video exports makes sense in some scenarios, as e.g. Apple ProRes cannot go beyond 29,97 fps and supporting 100+ Hz Monitors is another thing I want to achieve, as it makes a significant difference to the viewer especially on bigger screens - modern Smart TVs are great digital art surfaces for several art applications (incl. art installations).

I am currently working on writing the corresponding PNG metadata via node.script (after the fact) and I think it should work.

Because this PNG chunk data is tied to the sRGB standard "IEC 61966-2-1" (see the sRGB chunk spec on W3.org), it should always be the same (static and not dependent on the artwork).
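As a rough sketch of what such a second pass could look like without any npm package, the following inserts an sRGB chunk plus the matching gAMA and cHRM chunks directly after IHDR. The gAMA and cHRM numbers are the values the PNG specification gives for sRGB. The function names are made up for this example, and the sketch assumes the input PNG has none of these chunks yet (it does not check for duplicates or for an existing iCCP chunk).

```javascript
// Sketch: add sRGB, gAMA and cHRM chunks to a PNG Buffer, right after IHDR.
// Assumption: the input has no color chunks yet (duplicates would be invalid).

// CRC-32 as used by PNG, computed over chunk type + chunk data.
const CRC_TABLE = Array.from({ length: 256 }, (_, n) => {
  let c = n;
  for (let k = 0; k < 8; k++) c = c & 1 ? 0xedb88320 ^ (c >>> 1) : c >>> 1;
  return c >>> 0;
});

function crc32(buf) {
  let c = 0xffffffff;
  for (const byte of buf) c = CRC_TABLE[(c ^ byte) & 0xff] ^ (c >>> 8);
  return (c ^ 0xffffffff) >>> 0;
}

// Build one chunk: [length][type][data][crc].
function makeChunk(type, data) {
  const body = Buffer.concat([Buffer.from(type, "latin1"), data]);
  const out = Buffer.alloc(4 + body.length + 4);
  out.writeUInt32BE(data.length, 0);
  body.copy(out, 4);
  out.writeUInt32BE(crc32(body), 4 + body.length);
  return out;
}

function uint32List(values) {
  const buf = Buffer.alloc(values.length * 4);
  values.forEach((v, i) => buf.writeUInt32BE(v, i * 4));
  return buf;
}

// renderingIntent: 0 = perceptual, 1 = relative colorimetric,
// 2 = saturation, 3 = absolute colorimetric.
function addSrgbChunks(png, renderingIntent = 0) {
  const ihdrEnd = 8 + 12 + png.readUInt32BE(8); // signature + complete IHDR chunk
  return Buffer.concat([
    png.subarray(0, ihdrEnd),
    makeChunk("sRGB", Buffer.from([renderingIntent])),
    makeChunk("gAMA", uint32List([45455])), // gamma 1/2.2, scaled by 100000
    // White point x/y, then red, green, blue x/y, each scaled by 100000.
    makeChunk("cHRM", uint32List([31270, 32900, 64000, 33000, 30000, 60000, 15000, 6000])),
    png.subarray(ihdrEnd),
  ]);
}
```

Usage would be along the lines of `fs.writeFileSync(outPath, addSrgbChunks(fs.readFileSync(inPath)))` for each exported frame, where `inPath` and `outPath` are placeholders.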

So once I have a proof of concept & technique going (I will share it here), maybe C74 can expand the exportimage message with some additional parameters to do this directly on export - because iterating over a lot of files and doing IO on them (each of them MBs in size !) in a second pass is just a waste of time, as it does not contribute to the art itself ;-).

Some further thoughts on this after discussing with a colleague (leaving aside the colorspace conversation for now).

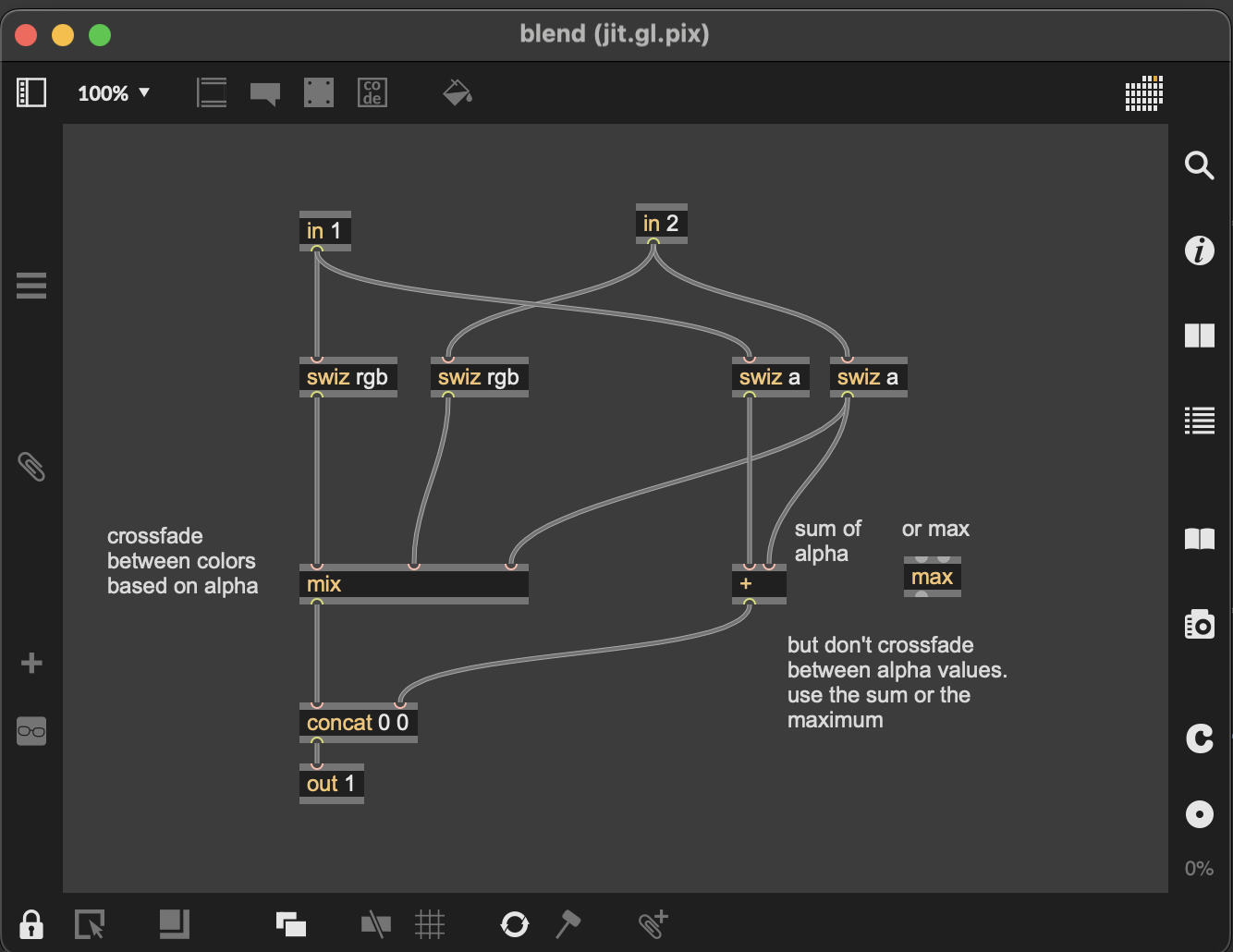

The issue here seems to be the way OpenGL is combining the different layers' alpha channels, which might not be expected or what we want for image export. Rather than accumulating alpha it blends it, giving this as a final output channel:

There is nothing currently in place to allow for accumulated alpha, though we can look into it for a future update.

That being said, it occurs to me the simplest way forward for you to get an exported image that matches what's in your jitter window is to simply set full alpha channel prior to export, since it doesn't look like the current alpha channel has useful data (and causes the problems you are experiencing). That might be enough for your needs at this point.

basic example of this in case it's not clear:

Let us know if that's not enough, as there are some other (more complicated) things you can try as well.
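Outside of a patcher, the "set full alpha channel prior to export" idea boils down to overwriting one byte per pixel. A minimal sketch on an interleaved 8-bit buffer; the function name and the `alphaIndex` parameter are made up for this example, and which index applies depends on how the pixel data is laid out (3 for RGBA, 0 for ARGB).

```javascript
// Sketch: make every pixel of an interleaved 4-channel, 8-bit buffer fully opaque.
// alphaIndex is the position of the alpha byte inside each 4-byte pixel:
// 3 for RGBA-ordered data, 0 for ARGB-ordered data.
function setFullAlpha(pixels, alphaIndex = 3) {
  const out = Uint8Array.from(pixels); // copy, leave the input untouched
  for (let i = alphaIndex; i < out.length; i += 4) {
    out[i] = 255;
  }
  return out;
}

// Two RGBA pixels with alpha 0 and 128 become fully opaque.
console.log(Array.from(setFullAlpha([10, 20, 30, 0, 1, 2, 3, 128])));
// [10, 20, 30, 255, 1, 2, 3, 255]
```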

@Rob - thanks for your further research & suggestions ... this touches an area I was already curious about : handling the layer blending myself - I come back to that later.

I just want to report back my results regarding your first thoughts : alpha premultiply - as mine are different from yours.

The reason might be, that we are using different versions - most importantly I am still using Max 8 (8.6.5) and I am also on MacOS 15.4.1 and an Apple Silicon M1.

For my test I used the attached patcher (Research Video Codec v3.maxpat) from above and expanded the export from 1 to 5 seconds - everything else is the same.

(1) VLC does not play back the Apple ProRes 4444 export correctly - the transport bar shows progress, but there is no visual output : the app stays in the Finder-like view and does not even switch to the video player view.

VLC playback of the Apple ProRes 422 export is fine - and the colors are as I expected.

VLC is up to date at version 3.0.20.

As your tests in VLC let the Apple ProRes 4444 export appear visually correct, or the same as the ProRes 422 export, it makes me wonder if Max 9 has also been updated in this area (jit.record or Codec handling)

(2) DaVinci Resolve shows the same issues as Quicktime Player - see the attached image below.

Hi @Rob - I tested your approach "set full alpha channel prior to export" and looks like we are getting closer - maybe we are even there, but my perception fools me ;-).

If there is a difference to what I expect, I guess the solution is doing the layer blending myself and not via jit.world / OpenGL - although this makes me wonder how video software like DaVinci Resolve handles color space information when importing image sequences ... but I will come back to that later, once I have something patched in Max that works.

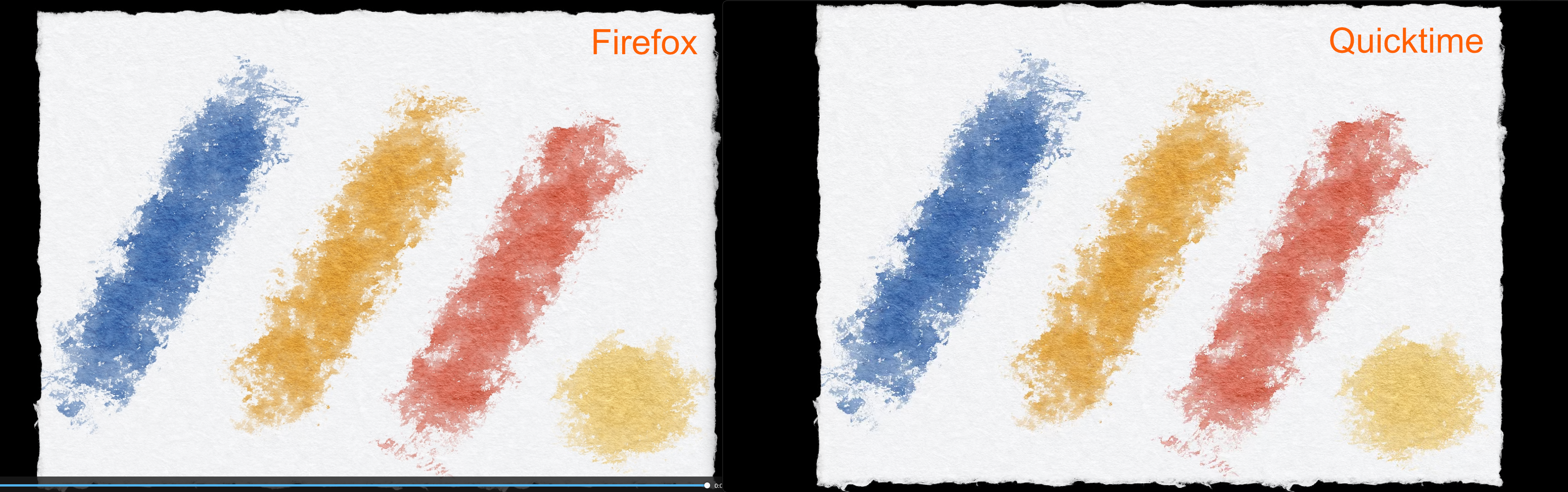

Regarding "full alpha channel prior to export" and testing the MP4 H265 video output of DaVinci Resolve (based on the jit.matrix PNG export sequence) in different video players, I got a surprising result that points back to what you have mentioned regarding premultiplied alpha vs straight.

There is a significant difference in colors between VLC and Quicktime, and a smaller but still visible one between a web browser (Firefox) and Quicktime.

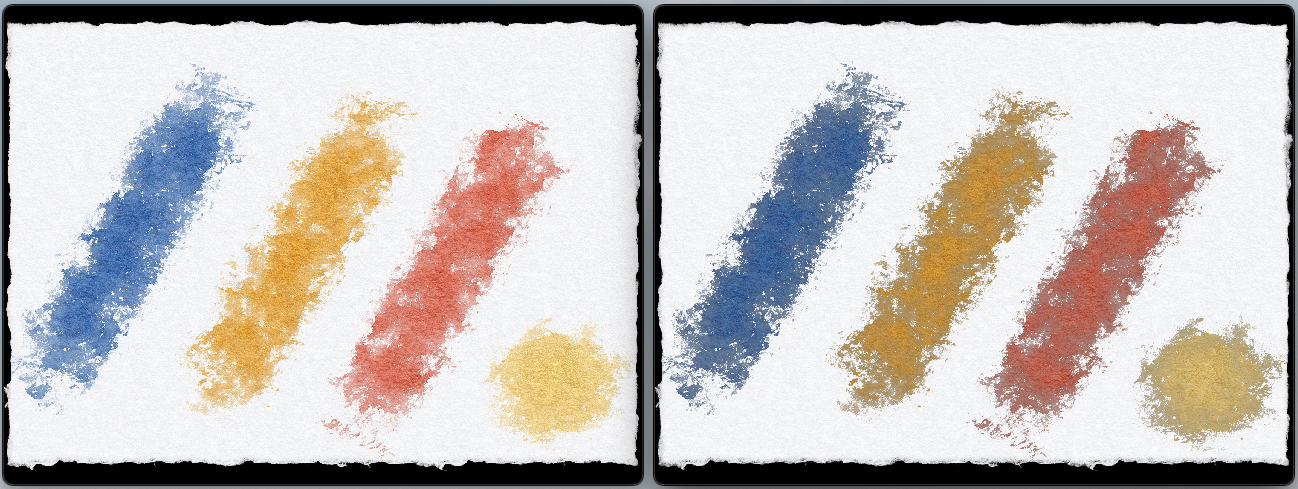

@Rob - after a short break and looking at the colors again ... I still see a difference.

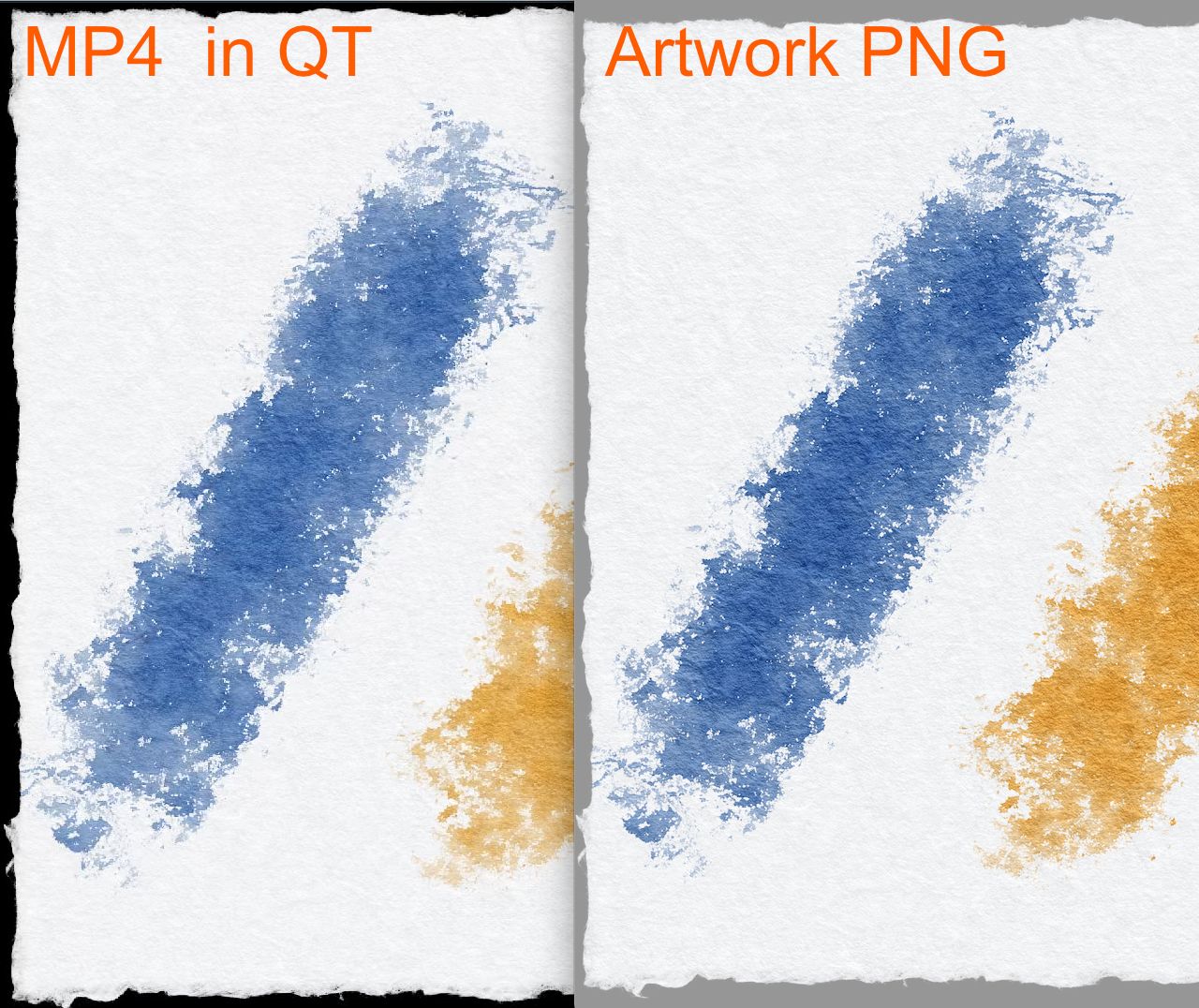

Below there is a comparison between the original artwork PNG (which has sRGB Color space information set and hence the Viewer can respect it) and the Mp4 H265 in Quicktime.

I see more contrast and bold colors in the dark tones of the blue in the original artwork PNG - the Mp4 looks more washed out in these areas.

As the Mp4 was made in DaVinci Resolve from the jit.matrix PNG exports, which have no sRGB color space information (hence Resolve does not apply it), this can be the cause of the less powerful blue tones - as this lack of color intensity fits what's written in the C74 article.

But it can also be a Mp4 / h265 compression artefact.

To narrow this down I will write a node.script that writes the sRGB metadata into the PNGs after the export and see if this makes a difference - I will be back with the results.

@Rob : setting full alpha channel prior to export (but doing it via shader on the GPU) is sufficient for me now, enhanced by doing the layer blending myself and not via jit.gl.videoplane and hence getting alpha blending out of the hands of OpenGL into mine ;-).

Regarding the color differences mentioned in my last post : this is something happening in DaVinci Resolve and I guess an artefact of the Mp4 h265 encoder and has nothing to do with Max.

Although ;-) ... the Mp4 and PNG file have different color profiles set and hence I am not 100% sure if I am comparing apples with oranges - this color space and color profile thing is still something I have to look at in detail and it may be worth discussing in a dedicated forum thread.

By the way : setting additional PNG chunks via NodeJS is possible, I got it done with the npm package "png-chunk-editor".

+++

For anyone who might be interested : I did a test with a PNG export (color profile is sRGB) of the artwork software, imported it into DaVinci Resolve and rendered a Mp4 h.265 Video from this 1 still image (no Max patcher involved) - see the files attached.

Comparing the video frames with the original PNG (see the Max patcher attached below) makes it obvious that a lot of (nearly all) pixels, or their RGBA color information, are different : pixels that differ are red, so the encoder "mangles the colors", I guess for compression purposes (or the DaVinci Resolve image import does it).
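The comparison itself can be sketched in a few lines, here on two already decoded RGBA byte buffers of the same size (decoding the PNG and the video frame is left out). The `tolerance` parameter is an addition for this example: lossy encoders rarely reproduce values bit-exactly, so a small tolerance helps to separate rounding noise from real color shifts.

```javascript
// Sketch: count the pixels that differ between two same-sized RGBA byte buffers.
// tolerance is the largest per-channel difference still treated as "equal".
function diffPixels(a, b, tolerance = 0) {
  if (a.length !== b.length || a.length % 4 !== 0) {
    throw new Error("buffers must have the same length, a multiple of 4");
  }
  let different = 0;
  for (let i = 0; i < a.length; i += 4) {
    for (let ch = 0; ch < 4; ch++) {
      if (Math.abs(a[i + ch] - b[i + ch]) > tolerance) {
        different++;
        break; // count each pixel only once
      }
    }
  }
  return { different, total: a.length / 4 };
}
```

With `tolerance = 0` this corresponds to the strict "any difference is red" view; raising it to 1 or 2 shows how much of the difference is only rounding.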

Thanks for following up here with all your findings!

Locally, while diagnosing this issue, I had made a version which did the layer blending using jit.gl.pix to correctly combine the alpha channels using summing or max in case you wanted to retain a combined alpha channel for output.

What OpenGL's blending mode is currently doing is the following (i.e. the alpha channels of source and destination are also alpha blended, rather than summing/maximum/etc).
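A rough sketch of the difference, for a single pair of alpha values in 0..1. It assumes the common (SRC_ALPHA, ONE_MINUS_SRC_ALPHA) blend function applied to all four channels; the exact settings Jitter uses are not stated here, so treat that as an assumption. The summing and maximum variants are the alternatives mentioned above.

```javascript
// Sketch: three ways to combine source and destination alpha (values in 0..1).

// Alpha handled like a color channel under the assumed
// (SRC_ALPHA, ONE_MINUS_SRC_ALPHA) blend function: it gets alpha blended itself.
function blendedAlpha(src, dst) {
  return src * src + dst * (1 - src);
}

// Alternative 1: sum the alpha channels, clamped to 1.
function summedAlpha(src, dst) {
  return Math.min(1, src + dst);
}

// Alternative 2: keep the larger of the two alpha values.
function maxAlpha(src, dst) {
  return Math.max(src, dst);
}

// A half-transparent layer drawn over a fully opaque one:
console.log(blendedAlpha(0.5, 1)); // 0.75
console.log(summedAlpha(0.5, 1));  // 1
console.log(maxAlpha(0.5, 1));     // 1
```

Under that assumption a half-transparent layer over an opaque background ends up with alpha 0.75 instead of 1, which would explain why the exported frames carry a partly transparent alpha channel.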

In the future, we can look into improved alpha blending modes in jit.gl.* objects which allow for specifying different blend modes and equations for the alpha channel vs the RGB color data. At this time however, that isn't possible without making custom shaders.

p.s. great tip on the png-chunk-editor npm package. Thanks!

Thanks Joshua for the details - these coding details are really helpful ... sometimes some code with documenting comments at its side, explains more than 1000 words ;-).

Improved alpha blending modes in jit.gl.* objects is a great idea.

The reason being : there are a lot of blending modes out there, whose formulas can be found online (and hence be repatched in jit.gl.pix at the cost of a lot of patching time ;-), and lots of them are supplied by C74 as .jxs shaders ...

... but being able to handle RGB and alpha differently and using alpha as a parameter of the blend formula not only "inside" jit.gl.* objects, but also as Gen Jitter operators would be awesome - something in the line of the mix operator.

The point being : all the known color modes have their creative use cases, but none of them is flexible enough to be modified and applied to different areas of the images in 1 easy to patch "environment" - or in other words : without separation via masks and merging together further down the patch cords (which is cumbersome).

I love the potential that jit.gl.* offers me in 2D artwork and being able to blend layers (textures) of the artwork in different areas with different & parametrized blend modes in an easy way inside jit.gl.pix - having powerful blend mode Gen Jitter operators at my disposal - would be a dream come true !!

+++

And ... improved alpha blending modes ... please alongside an article explaining them, which will give jitter beginners a chance to patch with satisfaction (instantly rewarding patching is essential to keep on exploring and not give up too early) and not be forced to take a ride into a rabbit hole ;-).

And I think it's also helpful and especially inspiring for experienced Jitter patchers, as it's a source of inspiration and creates ideas for new approaches - as for example the article series polish your pixels, which is excellent!

E.g. these common OB3D properties : blend_enabled, blend, blend_mode. These are also present for a jit.gl.pix object. Can you imagine how confusing their existence is, given the lack of information about how and when these properties are applied - especially for someone diving into this for the first time.

The jitter OpenGL pipeline is an awesome tool, but it's missing a documenting and explaining bird's-eye view that brings the core patching objects into the picture with gradually evolving technical details ... so that one can really get an understanding by reading it.

Yes, learning by patching is essential and always will be, and for this the existing tutorials are great.

But a top-down, bird's-eye view, bringing the jitter objects together one by one in a meaningful way, would be very valuable - I think the point would be : the example patchers that are developed through that article series should not aim to create awesome art, but keep things simple and visually easy to understand, so that the mechanics and inner workings of the jitter OpenGL pipeline become clear and hence the foundation is given for own explorations, enhancements and creative expression.