Keyframes - sync/automation in Jitter

Hi everyone,

I'm just opening a thread to discuss this. I'm trying to automate a full piece in Jitter that evolves over time, so I had the idea of driving it from Ableton Live, which I already use for basic automation that isn't precisely timed. But I'm running into a real headache, and I can't find a direction, with procedural parameters that run on the jit.world metro.

So we can say Jitter counts in frames, right? I use that counter to drive cameras and other things.

In the end, you can think of that counter as a Jitter timeline made of frame IDs. But this timeline doesn't exist in Max. By itself that's not really a problem.

Now I'm trying to sync the Ableton timeline to this frame timeline, so that when I scrub in Ableton's timeline I also get the right position in frames. I've tried several options, like converting milliseconds to frames and reporting that number, but since the fps is not stable, it obviously can't work.
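A minimal Python simulation (plain Python, not a Max patch) of why this fails: a frame counter advances once per rendered frame, while a frame number derived from milliseconds assumes a perfectly stable rate. The 40 ms frame duration below is a made-up example of a renderer that slows from 30 to 25 fps.

```python
NOMINAL_FPS = 30.0


def ms_to_frame(ms, fps=NOMINAL_FPS):
    """Frame index implied by elapsed time, assuming a perfectly stable fps."""
    return int(ms * fps / 1000.0)


def simulate(frame_durations_ms):
    """Render one frame per duration; return (counted_frames, time_derived_frame)."""
    elapsed_ms = 0.0
    counted = 0
    for duration in frame_durations_ms:
        elapsed_ms += duration  # real time this frame took
        counted += 1            # what a per-frame counter sees
    return counted, ms_to_frame(elapsed_ms)


if __name__ == "__main__":
    print(simulate([33, 34, 33] * 10))  # roughly stable 30 fps
    print(simulate([40] * 30))          # renderer slowed to 25 fps
```

The first call gives (30, 30); the second gives (30, 36): after 1.2 s of slow rendering the counter says frame 30 while the time-derived index says 36, so anything animated from the counter is 6 frames (0.2 s) behind the music.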

In TouchDesigner (TD) they implemented a keyframe panel that reports progression in absolute frames and can also listen to SMPTE, so you can automate operators/parameters according to the timeline frame number.

I can't think of an appropriate strategy here. Any suggestions? Is there another solution than building an entire keyframe interface in Max like the one in TD?

thx!

So it's very archaic, but my workaround is to store, upstream, the theoretical frame number for a given simplified time cue, e.g. each whole minute, at a base of 30 fps. It costs a bit of fluidity on the Jitter side, but the project was going to be rendered at 30 fps anyway.
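A Python sketch of the cue table this workaround implies, under the stated assumptions: a fixed base of 30 fps and a cue every 60 s (a 15 s spacing would just be `interval_s=15`). The function names are hypothetical.

```python
BASE_FPS = 30


def cue_table(duration_s, interval_s=60, fps=BASE_FPS):
    """Map each cue time (in seconds) to its theoretical frame number."""
    return {t: t * fps for t in range(0, duration_s + 1, interval_s)}


def cue_at_or_before(position_s, cues):
    """Last cue at or before the playhead: the frame the Jitter counter resets to there."""
    t = max(c for c in cues if c <= position_s)
    return t, cues[t]


if __name__ == "__main__":
    cues = cue_table(180)
    print(cues)                        # {0: 0, 60: 1800, 120: 3600, 180: 5400}
    print(cue_at_or_before(95, cues))  # (60, 1800)
```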

This lets you scrub in Live by subsections: you put the playhead before a cue, and when it reaches that cue, the frame counter on the other end is reset to the corresponding frame.

Like I said, it is super archaic. But I don't see a way to track jit.world's fps variation throughout a piece that is still being written, where it will vary unexpectedly. The main interest is also the workflow itself: avoiding having to play the whole project from the beginning to get the right position, etc.

Any other suggestions?

Port your patches to TouchDesigner, if you don't want to waste time ;-)

I still wonder why more people aren't missing a keyframe editor in Max and a decent sync solution with Ableton Live.

I tried a lot of things in Max regarding keyframe editing: https://cycling74.com/forums/editor-for-keyframe-animation.

I had a lot of hope for the new features of the [mtr] object, but currently it is too buggy or lacks features.

https://cycling74.com/tutorials/best-practices-in-jitter-part-2-recording-1/replies/1#reply-5f9ae44ca9daae2b710a9f58

But to be more on point regarding your questions:

A) I think you may benefit from sending bangs to [jit.world @enable 0] from Ableton Live instead of having [jit.world @enable 1] run its own clock.

B) If you can afford it (in my case it doesn't work because Ableton Live already consumes 10%-20% of my GPU), you could use only Max for Live to get rid of OSC or UDP headaches.

C) I think in the LOM (https://docs.cycling74.com/max8/vignettes/live_object_model) you will find some topics regarding transport. Or have a look at the TDA_Master M4L patch from the TDAbleton package in TouchDesigner. The [transport] object should also work in Max for Live.

Man, sorry, I missed your post! We are on the same page.

I think most users make real-time, random, algorithmic stuff. But when it comes to really writing a full piece made of timed procedural things and syncing it with sound, it's another story.

Most of the time I record small parts and edit them in other software, but then you lose the interest of generative material. For projects that need precise writing and a view of the whole progression, it's necessary to stay in the generative world. Automating an entire piece, although it requires a gigantic amount of work, offers, among many other advantages, the ability to edit and improve the work.

Thanks for the advice. I haven't tried sending bangs to jit.world. For reasons such as saving resources, my control (Ableton) machine is a Mac, and Jitter runs on its own GPU on Windows. They are linked through M4L and OSC. I have tried some LOM tricks, but damn, the transport objects seem too imprecise and irregular to calibrate a smooth fps. But I will take a deeper look.

For now I created a track in Ableton that triggers, every 15 s, the corresponding frame count at a rate of 30 fps and sends it to a counter in Jitter, which syncs all processes that depend on that count, while jit.world is also set to @sync 0 @fps 30.

But I definitely agree, this is a huge gap in Max/Jitter, and it makes using it in sync with sound, for instance, very complicated.

thx!!

In TouchDesigner it takes only 3 clicks to set a keyframe for a parameter, and then you can edit it in a comfortable curve editor. I wish this were possible in Max. But I guess that without many users writing feature requests to Cycling '74 support, this won't happen.

But even if such an editor were available, the question of synchronisation with external processes would remain.

My control (Ableton) machine is a Mac, and Jitter runs on its own GPU on Windows

Yes, this is also my setup.

I gave up recording all automation in Ableton Live for complex patches containing hundreds of parameters. I try to keep most of the automation inside Max: [pattrstorage @subscribemode 1], with all relevant parameters given scripting names. I interpolate between presets by sending float values from Live to [pattrstorage]. ([mtr @bindto], provided it were bug-free and compatible with [pattrstorage @subscribemode 1], would be even more helpful for this.)

I use a modified Envelope Follower M4L patch and send triggers generated from it to trigger processes in Jitter ([mc.function] in my case, each only a few seconds long). I don't know if this works for your use case.

In order to reset counters when I scrub through the timeline, I use information from [transport] in an M4L patch, e.g. raw ticks. I trigger the [transport] at half the interval my Jitter runs at (e.g. [transport] triggered by [qmetro 8] with [jit.world @fps 60]). (Unlike my suggestion in the previous post, I let Jitter run on the [jit.world] clock, just because it works for my use cases.)
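The scrub-detection part could be sketched like this in Python (hypothetical names): poll the transport's raw ticks at a fixed interval and treat any tick delta that normal playback can't explain as a jump. It assumes Max's resolution of 480 ticks per quarter note, a constant tempo between polls, and an arbitrary tolerance factor of 4.

```python
TICKS_PER_BEAT = 480  # Max transport resolution: ticks per quarter note


def expected_tick_step(bpm, poll_ms):
    """Ticks that normal playback advances between two polls."""
    return bpm * TICKS_PER_BEAT / 60000.0 * poll_ms


def is_jump(prev_ticks, ticks, bpm, poll_ms, tolerance=4.0):
    """True if the playhead moved backwards or much further than playback allows."""
    delta = ticks - prev_ticks
    return delta < 0 or delta > tolerance * expected_tick_step(bpm, poll_ms)


if __name__ == "__main__":
    print(expected_tick_step(120, 8))    # about 7.68 ticks per 8 ms poll
    print(is_jump(1000, 1008, 120, 8))   # False: normal playback
    print(is_jump(1000, 500, 120, 8))    # True: scrubbed backwards
    print(is_jump(1000, 20000, 120, 8))  # True: jumped forwards
```

A stopped transport (delta 0) is not flagged; when `is_jump` fires, the counter would be reset from the new tick position instead of continuing to count.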

Just curious, have either of you tried something like Vezér? https://imimot.com/vezer/

Or ossia score? https://ossia.io

I typically use QLab to control my Max projects, because I have less need for keyframe curves and more need for cue organization and control.

Hi Rob,

Yeah, I know both of them and also use QLab. QLab is good, as you said, for scene-type things (when it triggers pre-rendered material, for instance). I don't actually have any trouble triggering stuff in Jitter from Ableton with Max for Live, even curved parameters that are OK to be "approximate". As long as you create your own OSC device in M4L, it works as Vezér or QLab would (Vezér has nice curves and easing presets, though). My problem is really syncing things that use a value correlated to the frame count. Like everyone, I use a lot of functions to move things and the camera, like this very basic example:

It really works based on frame count, of course, whatever the fps is. But it's very hard to sync a frame count to another medium if the fps is not stable. That's why people made SMPTE, right? Let's say I have some music playing, and I need the camera at minute one to be at an exact position and at an exact frame count, with the world synced to it.
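A Python sketch of the kind of frame-driven function meant here (the original patch isn't reproduced; the orbit period and radius are hypothetical): the camera position is a pure function of the frame number, so it is at the intended place at minute one only if the frame number at minute one is exactly 60 × 30 = 1800.

```python
import math

FPS = 30
ORBIT_PERIOD_FRAMES = 600  # hypothetical: one full orbit every 20 s at 30 fps
RADIUS = 5.0


def camera_position(frame):
    """Camera on a circle around the origin, driven only by the frame number."""
    angle = 2.0 * math.pi * (frame % ORBIT_PERIOD_FRAMES) / ORBIT_PERIOD_FRAMES
    return (RADIUS * math.cos(angle), 0.0, RADIUS * math.sin(angle))


if __name__ == "__main__":
    minute_one = 60 * FPS                   # 1800
    print(camera_position(minute_one))      # (5.0, 0.0, 0.0): start of an orbit
    print(camera_position(minute_one - 6))  # a few degrees short if 6 frames were lost
```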

Let's say I take the transport info in Ableton in ticks, convert it to ms and then to frames, and report it to Jitter so that it follows that counter rather than the one triggered by the world. Then at the first little fps irregularity it creates an offset, and the camera will (haha) jitter.

My solution is to force a fixed fps (@sync 0 @fps 30), then convert the frame value for every 10 or 15 s and have it sent to this Jitter counter from values placed on the Ableton timeline. That way I can scrub in Live and be sure that what's played in Jitter is at the same position in the pattern I asked for. Not sure I'm clear.
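The counter-with-resets idea, sketched in Python (class and method names are hypothetical): the counter advances once per rendered frame at the locked 30 fps, and each cue from the Live timeline snaps it to the theoretical value, discarding whatever drift accumulated since the previous cue.

```python
class CueSyncedCounter:
    """Per-frame counter that is snapped to cue frame numbers sent from Live."""

    def __init__(self, start_frame=0):
        self.frame = start_frame

    def tick(self):
        # in the patch this would be driven once per jit.world frame
        self.frame += 1
        return self.frame

    def on_cue(self, cue_frame):
        # hard reset to the theoretical frame, e.g. 15 s * 30 fps = 450
        self.frame = cue_frame


if __name__ == "__main__":
    counter = CueSyncedCounter()
    for _ in range(440):   # only 440 frames rendered during the first 15 s
        counter.tick()
    counter.on_cue(450)    # cue at 15 s puts the counter back on the grid
    print(counter.tick())  # 451
```

The trade-off is a small visible jump at each cue, as large as the drift accumulated since the last one; closer cues mean smaller jumps.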

That's why a keyframe editor would help to define curved values on a timeline in Max, and then we could set up an SMPTE count to ensure it stays in sync.

I tried to bang jit.world from another counter as Martin suggested, but it doesn't seem to work. It could still be a direction for syncing jit.world to an external counter/medium, like Ableton for instance: we would have jit.world sending bangs to a counter with the same value that comes from Live.

Yeah, if the problem is simply keeping in sync with Ableton, can't you use Martin's example above to convert the ticks to milliseconds (via the [translate] object) and then from there to frames or whatever you like?
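Roughly the arithmetic involved, assuming a constant tempo and Max's 480 ticks per quarter note (in the patch, [translate] does the tick-to-ms step using the transport's tempo; this Python version is only illustrative).

```python
TICKS_PER_BEAT = 480  # Max transport resolution: ticks per quarter note


def ticks_to_ms(ticks, bpm):
    """Milliseconds elapsed for a tick position at a constant tempo."""
    return ticks * 60000.0 / (bpm * TICKS_PER_BEAT)


def ms_to_frames(ms, fps=30):
    """Frame position (possibly fractional) for a time in milliseconds."""
    return ms * fps / 1000.0


if __name__ == "__main__":
    one_minute = 120 * TICKS_PER_BEAT  # 120 beats at 120 bpm
    ms = ticks_to_ms(one_minute, 120)
    print(ms, ms_to_frames(ms))        # 60000.0 1800.0
```

This only yields a position; driving per-frame animation from it directly still stutters whenever the Jitter frame rate wobbles.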

I fail to see how SMPTE is in any way useful to Jitter.

Yeah, sorry, I'm still a bit messy in my explanation. I wish we could all just speak our mother language sometimes, especially when clear, detailed explanations are needed.

Here is an amxd below to test, just for fun (I left Jitter in it for the example, but that's not how it is in the real setup); as you can see, it's not super smooth. I'm reporting the frame number calculated from the absolute timeline in Ableton, then sending it to a counter that animates GL objects, like the camera, which are rendered through the jit.world metro. The idea is to be able to scrub in the Ableton timeline and be sure that the GL position in the writing will be the same, and then to use this counter number as a kind of frame ID to structure everything in the project, as you would with keyframes. But I think this method isn't precise enough to get something smooth in Jitter, likely because of the different environments involved (Ableton/Max), whereas the procedural-calculation paradigm needs very precise sync. Of course, at 30 fps it's even worse.

I have a workaround for now: sending the theoretical frame number to the counter every 15 s, rather than doing this continuous calculation from Ableton. It requires a dedicated track with OSC data and automation in Live, which is still OK.

Not sure I'm clear :) but I'm still curious to hear your thoughts.

Hello Fraction, you modified your last post while I was writing this one, so please give me some time to understand.

Here is a clearer version of what I was referring to.

This patch doesn't use a counter anymore, because counters are prone to dropped clock pulses.

Yeah, sorry, my previous (deleted) message was wrong. I thought nobody would have had time to read it :)

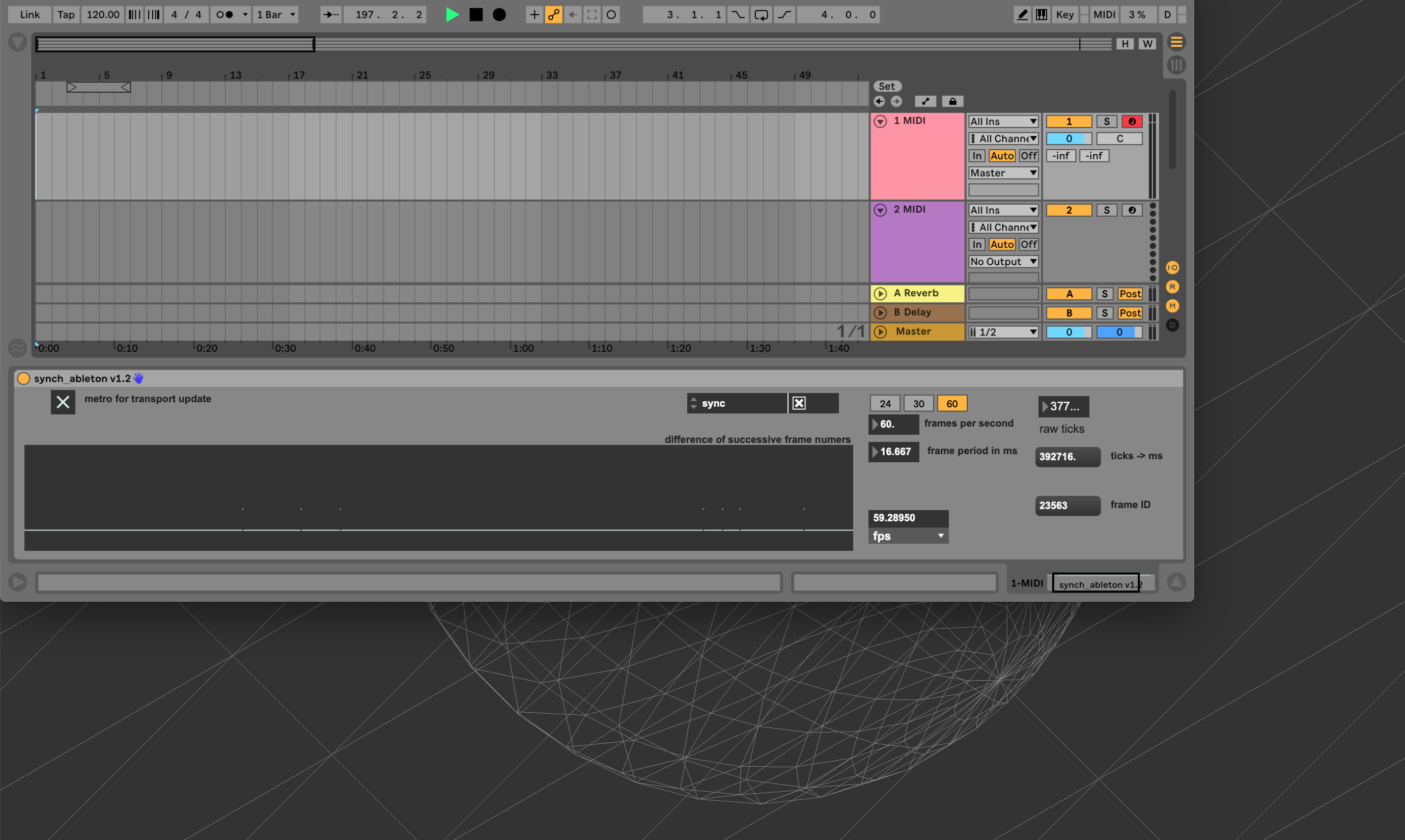

So I think (unless your mother language is German) it is best to let the code speak. I modified your amxd file. It now obtains its clock from the same source as the frame numbers (similar to my approach in the last post, using a [change] and [t b]). I also added a multislider that visualises the difference between successive frame numbers. And I think I can see the dropouts that are the root cause of the slight stutter you report (not completely smooth).
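What the multislider shows can also be computed directly; a Python sketch over a made-up list of received frame numbers: a delta of 1 is a clean step, 0 is a repeated frame, and 2 or more means frames were skipped.

```python
def frame_deltas(frames):
    """Differences between successive received frame numbers."""
    return [b - a for a, b in zip(frames, frames[1:])]


def find_stutters(frames):
    """Indices of received frames that repeat (delta 0) or skip ahead (delta >= 2)."""
    return [i + 1 for i, d in enumerate(frame_deltas(frames)) if d != 1]


if __name__ == "__main__":
    received = [0, 1, 2, 4, 5, 5, 6, 9]  # hypothetical log
    print(frame_deltas(received))        # [1, 1, 2, 1, 0, 1, 3]
    print(find_stutters(received))       # [3, 5, 7]
```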

At first I was wondering whether 30 fps is too slow for smooth sampling of the rotation you create with the [sin] [cos] calculations, but I think it's related to something else.

So how to proceed? I think it is worth changing the initial clock source in your patch, [qmetro 33 @active 1], to something derived from a [transport] or [plugsync~].

Thx! Let me try this afternoon.

So I tried:

- the patch I shared above

- your patch

and compared them to the techniques I mentioned earlier.

It looks like neither your patch nor mine is satisfying. They create tiny little offsets that kill the smoothness.

Also, worse, jit.world doesn't seem to enjoy taking external bangs: its frame rate drops dramatically here, which creates even more little offsets. Having it use its own clock is better, but still not super nice.

The best I can observe is to have those little frame resets I put on the timeline, which tell a counter in the Jitter project where it should be, and to let jit.world render at the constrained @displaylink 0 @fps 30 in my case.

Yes, I can also see that it sometimes stutters (accompanied by a difference of >= 2 between successive frames).

This really means that this synchronisation strategy doesn't work.

jit.world doesn't seem to really enjoy taking external bangs

...I can confirm that there is something weird.

OK, I have a better understanding of the problem space now and think I can offer some suggestions. I agree you should not take timing directly from the transport (e.g. Ableton), and should not trigger jit.world via bangs or anything like that.

The problem of syncing two disparate animation timing systems is usually best handled by comparing the difference in timing between them and, if it crosses some threshold, adjusting the rate of the subsystem until it is back in sync with the main system (e.g. adjust the jit.world-based time until it syncs with the Ableton-based time).
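A Python sketch of that approach, under assumptions not in the original: master time is Live's position in ms, local time is a jit.time-style clock whose increment is scaled by a speed factor, and the threshold, correction horizon and speed limits are arbitrary example values. Inside the threshold the local clock runs at nominal speed; outside it, the speed is nudged just enough to close the gap over about one second.

```python
def adjust_speed(master_ms, local_ms, threshold_ms=20.0, horizon_ms=1000.0,
                 min_speed=0.75, max_speed=1.25):
    """Speed factor for the local (Jitter) clock, given both clocks' times."""
    error = master_ms - local_ms  # > 0: Jitter is behind Live
    if abs(error) <= threshold_ms:
        return 1.0  # close enough: run at nominal speed
    # too far apart: run faster or slower so the gap closes in about horizon_ms
    speed = 1.0 + error / horizon_ms
    return max(min_speed, min(max_speed, speed))


def simulate(master_rate=1.0, initial_offset_ms=0.0, frames=2000, dt_ms=1000.0 / 30):
    """Run both clocks for `frames` render frames; return the final error in ms."""
    master, local, speed = initial_offset_ms, 0.0, 1.0
    for _ in range(frames):
        master += dt_ms * master_rate  # e.g. Live's clock running slightly fast
        local += dt_ms * speed         # local clock advanced at the current speed
        speed = adjust_speed(master, local)
    return master - local


if __name__ == "__main__":
    print(round(simulate(initial_offset_ms=300.0), 2))  # ends inside the 20 ms window
    print(round(simulate(master_rate=1.005), 2))        # stays near the window edge
```

This is a proportional correction with a dead band; a large, constant rate mismatch (more than threshold / horizon, 2% here) would settle outside the window, which is one reason the exact values need tuning per setup.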

The logic for this is not difficult, although, as with most things in Max, the exact details are best matched to the exact situation; still, I think a general-purpose solution is possible.

As mentioned above, you want to get timing from Ableton via UDP and convert it to ms via [translate]. You can then use the jit.time object as your source of truth for all Jitter animations, compare the times, and adjust the speed attribute to compensate for timing disparities. The attached patch demonstrates how I would go about this, substituting a transport object for Ableton timing. You can adjust the tempo of the transport to see the compensation mechanism kick in. As mentioned in the comments of the patch, any jit.time or jit.anim family object can use this speed adjustment to sync its timing (whether the animation is absolute or relative).

The problem of "scrubbing" on your main timeline is not handled by this syncing solution, but it should be easy enough to solve using an offset value for the jit.time. I'll have a think about that, but wanted to see if this gets you on the right track.
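One way the offset idea could look, as a Python sketch with hypothetical names: the local clock keeps its free-running elapsed time, an offset is added on top, and a difference larger than a scrub threshold (500 ms here, an arbitrary value) is treated as a scrub and absorbed into the offset at once, instead of being slewed out through the speed adjustment.

```python
class OffsetClock:
    """Local animation time = free-running elapsed time + an offset."""

    def __init__(self):
        self.raw_ms = 0.0     # time accumulated by the local (jit.time-like) clock
        self.offset_ms = 0.0  # correction set when the master timeline jumps

    def advance(self, dt_ms, speed=1.0):
        self.raw_ms += dt_ms * speed

    @property
    def time_ms(self):
        return self.raw_ms + self.offset_ms

    def jump_to(self, master_ms):
        # make local time equal the new playhead position immediately
        self.offset_ms = master_ms - self.raw_ms


def handle_master_time(clock, master_ms, scrub_threshold_ms=500.0):
    """Jump on large differences (scrubs); return True if a jump happened."""
    if abs(master_ms - clock.time_ms) > scrub_threshold_ms:
        clock.jump_to(master_ms)
        return True
    return False  # small differences are left to the speed adjustment


if __name__ == "__main__":
    clock = OffsetClock()
    clock.advance(1000.0)
    print(handle_master_time(clock, 60000.0), clock.time_ms)  # True 60000.0
    clock.advance(500.0)
    print(handle_master_time(clock, 60510.0), clock.time_ms)  # False 60500.0
```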

Looks awesome, thanks a lot Rob. I'm looking forward to trying your solution, likely on Monday now.

I will share feedback for sure,

Have a great weekend!

-e

Thx Rob, it worked! It still requires a stable fps on the Jitter side, obviously, which means you need to lock it on jit.world.

I'm sticking with my first option for this project because it was already quite advanced, but I will migrate to your solution for the next ones!

cheers!