M4L devices and Jitter Texture sharing

Hi all, I am trying to generate a texture in one Max for Live device and send it to another, so that I end up with an easy drag-and-drop video synth running inside Ableton Live.

The issue I have is that if I build, for example, a vsynth device and then just feed its jit.gl.texture output into a send/receive pair, the two devices work for a second and then error out.

I assume it's a bit more involved and there needs to be some sort of shared jit.world or context.

I then resorted to simply using the Spout send/receive objects, assuming that would solve it, perhaps with some frame/resource loss, but that also works for a moment and then stops.

What is the proper way to do send/receive or texture sharing between multiple M4L devices? With sound, note data, etc. it's really plug and play, but with GL I am missing something.

A simple example would be awesome, thanks!

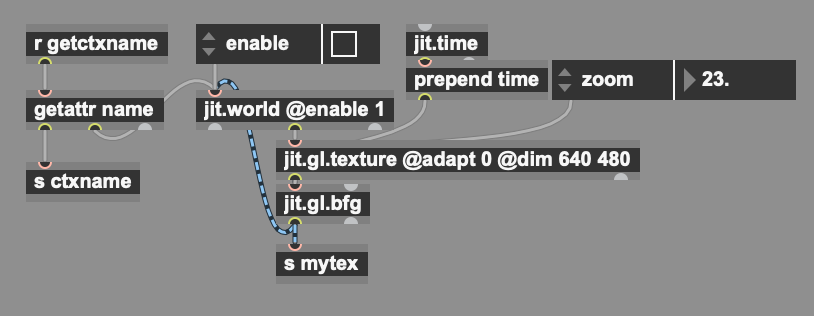

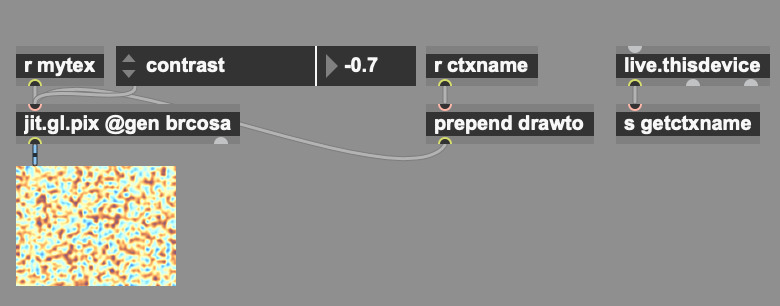

Texture sharing across devices is possible, but you must make sure you are managing the drawto attributes of any gl objects in those devices. I think the best way to do this is to have a main context device that manages the jit.world and sends the context name to any client devices that request it.

A simple live.thisdevice connected to something like s getctxname in the client will do, with a corresponding r getctxname connected to a getattr name in the main device. That way each client asks for the context name as soon as it has loaded.

As long as every jit.gl object in the client device receives the proper drawto name, you should be good.
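
To make the message flow a bit more concrete, here is a rough Python model of the request/reply idea. It is not Max code and it does not touch Jitter at all; it only mimics the routing. The getctxname name comes from the description above, while the ctxname reply channel, the main_ctx context name, and all class names are made up for illustration.

```python
from collections import defaultdict


class Bus:
    """Stand-in for Max's global send/receive (s / r) namespace."""

    def __init__(self):
        self._receivers = defaultdict(list)

    def receive(self, name, callback):
        self._receivers[name].append(callback)

    def send(self, name, *args):
        # Every receiver registered under this name gets the message.
        for callback in list(self._receivers[name]):
            callback(*args)


class GLObject:
    """Stand-in for a jit.gl object; only tracks its drawto value."""

    def __init__(self, label):
        self.label = label
        self.drawto = None


class MainDevice:
    """Owns the render context and answers context-name requests."""

    def __init__(self, bus, context_name):
        self.bus = bus
        self.context_name = context_name
        bus.receive("getctxname", self.on_request)

    def on_request(self):
        # "ctxname" is a hypothetical reply channel, not from the thread.
        self.bus.send("ctxname", self.context_name)


class ClientDevice:
    """Asks for the context name on load and forwards it to its gl objects."""

    def __init__(self, bus, gl_objects):
        self.bus = bus
        self.gl_objects = gl_objects
        bus.receive("ctxname", self.on_context_name)

    def on_load(self):
        # Plays the role of live.thisdevice firing once the device is ready.
        self.bus.send("getctxname")

    def on_context_name(self, name):
        for obj in self.gl_objects:
            obj.drawto = name


if __name__ == "__main__":
    bus = Bus()
    MainDevice(bus, "main_ctx")  # "main_ctx" is a placeholder name
    client = ClientDevice(bus, [GLObject("texture"), GLObject("videoplane")])
    client.on_load()
    for obj in client.gl_objects:
        print(obj.label, "draws to", obj.drawto)
```

Because the bus is global, a reply triggered by one client also reaches every other client that is listening, which is harmless here since they all need the same context name.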

I've attached two example devices for testing.

Thanks a lot - I stumbled on the solution with VSynth but big thanks for this tutorial.

As for Vsynth with Max for Live, if anyone else is interested: just put your cornerpin object in the "last" device in your chain, not in one "master" device. It seems that was the issue there.

Example: you can make an M4L device for just your render object, then make separate devices that mangle the video, and then make a final output device for your Spout sender and corner pins.