Google MediaPipe in Max (n4m, jweb) : Hand, Face, Body tracking

Adding all links to examples using Google Mediapipe in Max here. Will update these as the collection grows. [updated 2023]

jweb

Rob Ramirez Mediapipe : https://github.com/robtherich/jweb-mediapipe

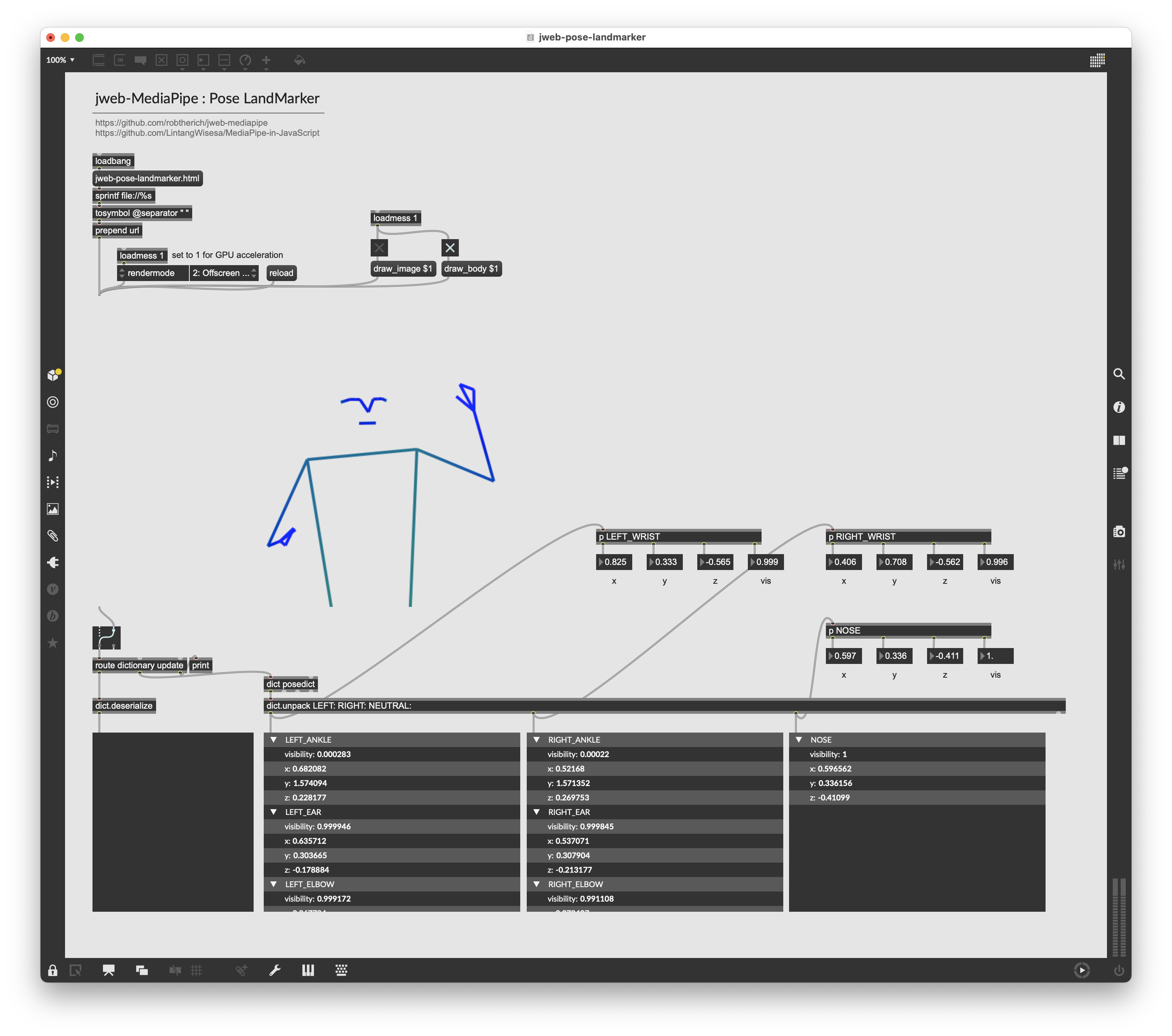

Pose (body) : https://github.com/lysdexic-audio/jweb-pose-landmarker

Hand tracking: https://github.com/lysdexic-audio/jweb-hands-landmarker

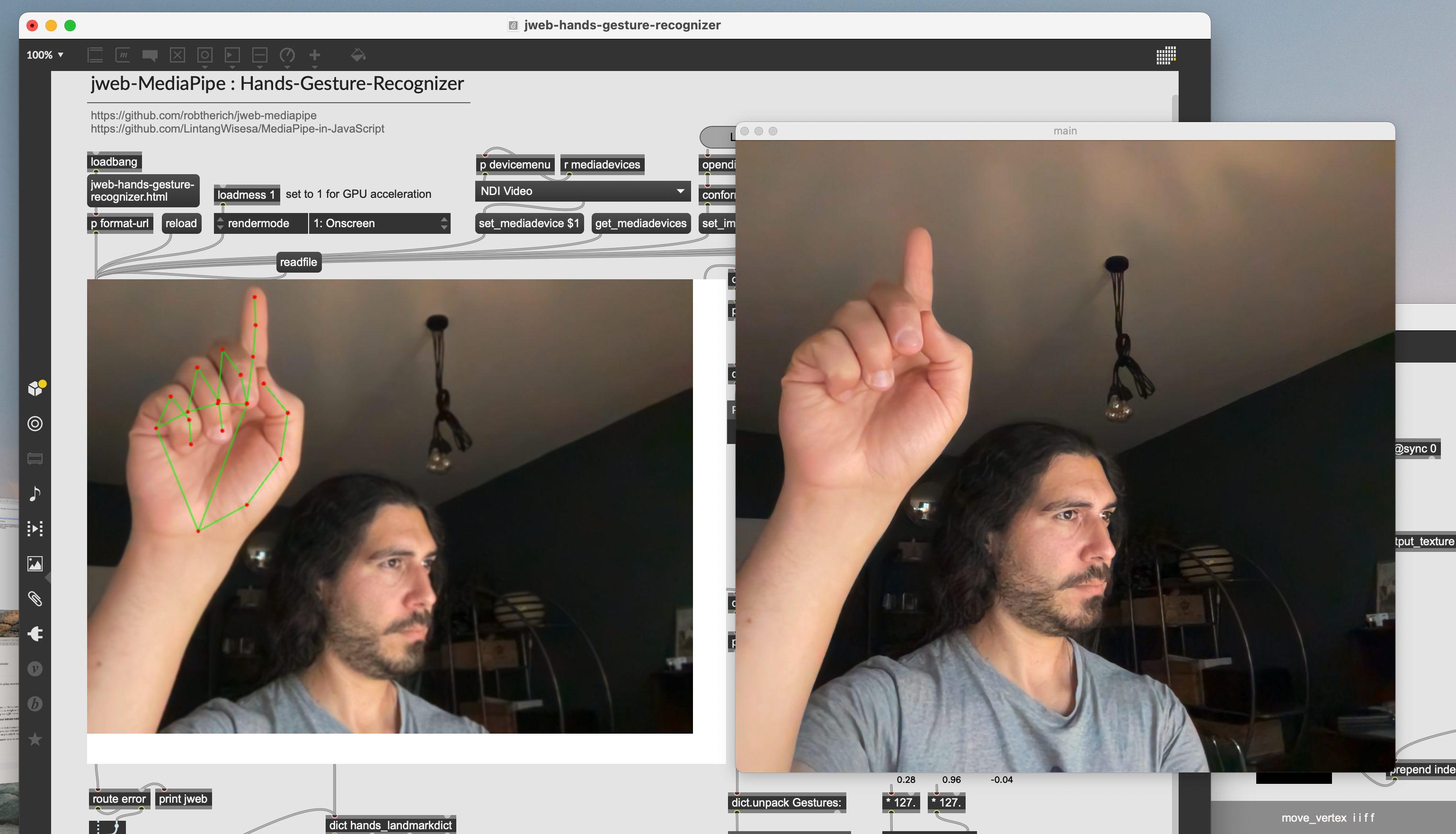

Hand gestures: https://github.com/lysdexic-audio/jweb-hands-gesture-recognizer

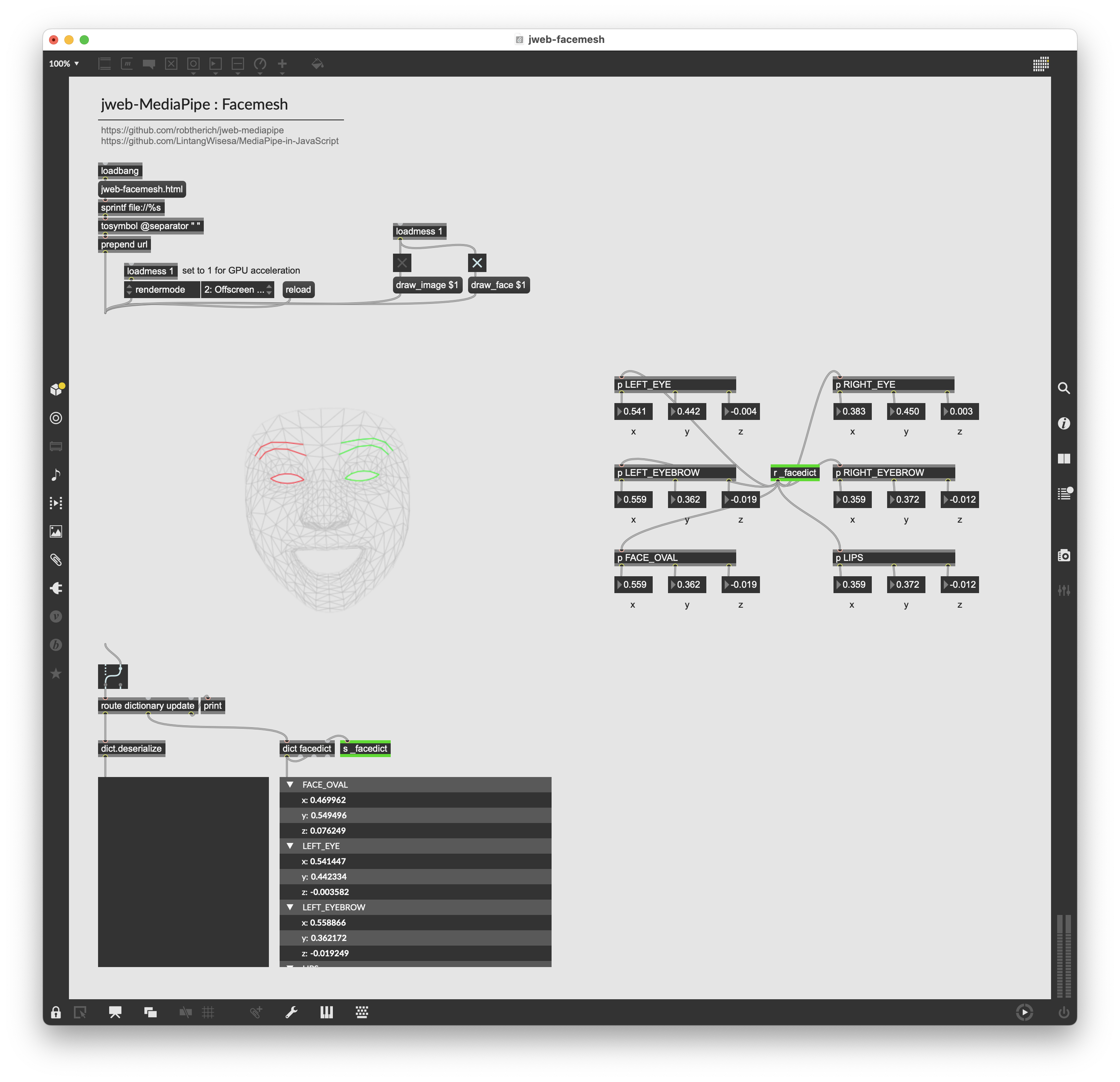

Face tracking: https://github.com/lysdexic-audio/jweb-facemesh

Face gestures: https://github.com/lysdexic-audio/jweb-face-landmarker

Object detection: https://github.com/lysdexic-audio/jweb-object-detection

node for max

Bodytracking : https://github.com/yuichkun/n4m-posenet

Handtracking : https://github.com/lysdexic-audio/n4m-handpose

Facetracking : https://github.com/lysdexic-audio/n4m-facemesh

----- Original post -----

Hi Folks,

I've made two new contributions to the Node For MaxMSP Community Library wrapping Google's MediaPipe (in a similar fashion to PoseNet using Electron) for these two:

Handtracking using HandPose: https://github.com/lysdexic-audio/n4m-handpose

Facetracking using FaceMesh: https://github.com/lysdexic-audio/n4m-facemesh

Keen to see how they work on different operating systems, pull requests and bug logging via the issue trackers in the repo welcome :)

Stars also welcome!

super.

between 5 and 15 fps on OSX 10.15.3, Max 8.1.3

here ya go: ⭐✰⋆🌟✪🔯✨

Wasn't expecting this kind of a negative/unhelpful response - but I'm actually getting ~20fps on Mojave on my mbp for both scripts. I thought this might be useful to share with the Max community. Hopefully some people here will find it useful.

If you run Facemesh on the cpu instead of webassembly you'll likely get 5fps, so stick with the WASM option.

Thanks a lot, really nice!

Handpose is also around 20 fps. My framerate with facemesh is not as high as yours: it's around 5 to 10 fps maximum (wasm).

Max 8.1.3

Mojave 10.14.6

MBP 2,9GHz i9 - intel UHD graphics 630 1536MB

Amazing - ~40fps with webgl MBP 13" +egpu.

Awesome, thanks for the reports folks!

My stats are almost identical to Aartcore's, except my GPU

Max 8.1.3

Mojave 10.14.6

MBP 2,9GHz i9 - Radeon Pro Vega 20 4 GB

Hi this is really great, I can't believe it took me this long to try it out. Thanks for sharing. Perfect for just tracking my hand instead of trying to do that with posenet.

I am seeing similar numbers, with my mbp i7, radeon pro 560.

hand pose is around 20 fps / facemesh was getting 15fps

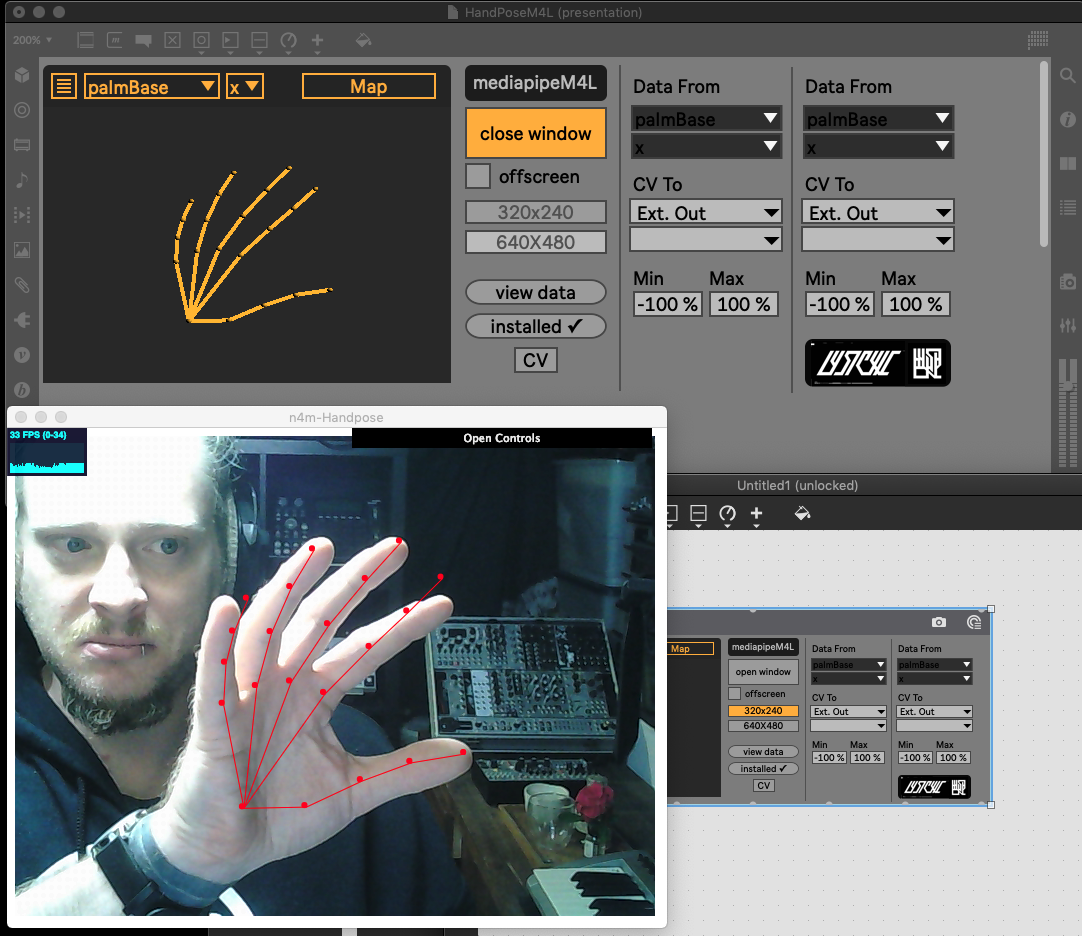

quick question, has anyone tried lowering the resolution for better performance? I just changed the dims in the camera.js file to 320x240 from 600x500 and i think im seeing better performance.

Hi. This looks amazing. However, after downloading the dependencies, when Electron opens I just get a blank screen, and it doesn't launch/open my webcam. Is there something I might be doing wrong?

Hi folks - I've now wrapped both of these to two Max For Live Devices which are really fun to use. I'll be adding these to the repos early next week for everyone. I've also added a whole bunch of improvements to the scripting (offscreen rendering so you don't need the window to be visible to get the data, full model controls for handpose, replicated FaceOSC's gestures for Facemesh, so you can use "mouth width" and "eye height" etc) and more which I'll show you when I commit the updates. :)

> quick question, has anyone tried lowering the resolution for better performance? I just changed the dims in the camera.js file to 320x240 from 600x500 and i think im seeing better performance.

yep! that's a great idea. I've actually added params for window size into the next update that you can control from max when you spawn the window - I might default them to 320x240 for better performance for most people
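For anyone wanting to try a smaller capture size by hand, here is a minimal sketch. It assumes the page gets its camera through the standard `navigator.mediaDevices.getUserMedia` call; `cameraConstraints` is a hypothetical helper, not something taken from camera.js in the repo.

```javascript
// Hypothetical helper: build getUserMedia constraints for a given capture size.
// "ideal" lets the browser fall back to the nearest size the camera supports.
function cameraConstraints(width, height) {
  return {
    audio: false,
    video: {
      width: { ideal: width },
      height: { ideal: height },
    },
  };
}

// In a browser context (Electron renderer or jweb) this could be used as:
//   navigator.mediaDevices
//     .getUserMedia(cameraConstraints(320, 240))
//     .then((stream) => { videoElement.srcObject = stream; });
// where videoElement is whatever <video> element the page already uses.
```

Fewer pixels per frame means less work for the model, which is why 320x240 tends to run faster than 600x500.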

> Hi. This looks amazing. However, after downloading the dependencies, when Electron opens I just get a blank screen, and it doesn't launch/open my webcam. Is there something I might be doing wrong?

Hi, thanks for your interest in the project! Could be a few things, firstly - check for a .log file inside the folder to see if everything downloaded correctly from npm. If not, it'll be inside the log. Second, could be security on your OS (catalina can sometimes be a pain with this), haven't tried on windows but heard success from windows users.

You can try toggling your video device from the GUI controls also to see if that works. In the menu bar for the electron window there is an option to "Enable Debug Console" - if you open that up and see an error message post it here or in the issues tab for the repo on github :)

You'll also need to keep your maxpatch inside the same folder with all the other js files and node_modules. saving a patch into a different folder often breaks things with node.

@LYSDEXIC

Thanks for your reply. Hmm, it's strange. I'm certain it has all downloaded - everything opens and looks right in Visual Studio.

Interestingly - I can't see a GUI to toggle the camera with. I also do get an error, but it doesn't look like a fatal one: Electron Security Warning (Insecure Content-Security-Policy). Electron is also managing to connect to Max.

Security-wise I'm on 10.12, and generally don't have problems granting access to things. Do you think there could be anything else up? Thanks.

Wow. thanks LYSDEXIC. Can't wait to see the updates

Hi Lysdexic, I am having issues trying to get my webcam running in handpose and with jit.grab at the same time. On my mbp this works with the built-in cam and with my external cam. But I am now running Windows 10 and it only works with one at a time. Do you know of a solution?

Great work, thanks for sharing!

I would like to draw the mesh in Max instead of the electron window.

Is it possible?

Great!

Really amazing workflow @LYSDEXIC ! ⚝⚝⚝⚝⚝

Thanks so much! And to @DANTE LENTZ for launching me here :)

Handpose framerate on Win 10 > from 20 to 28fps

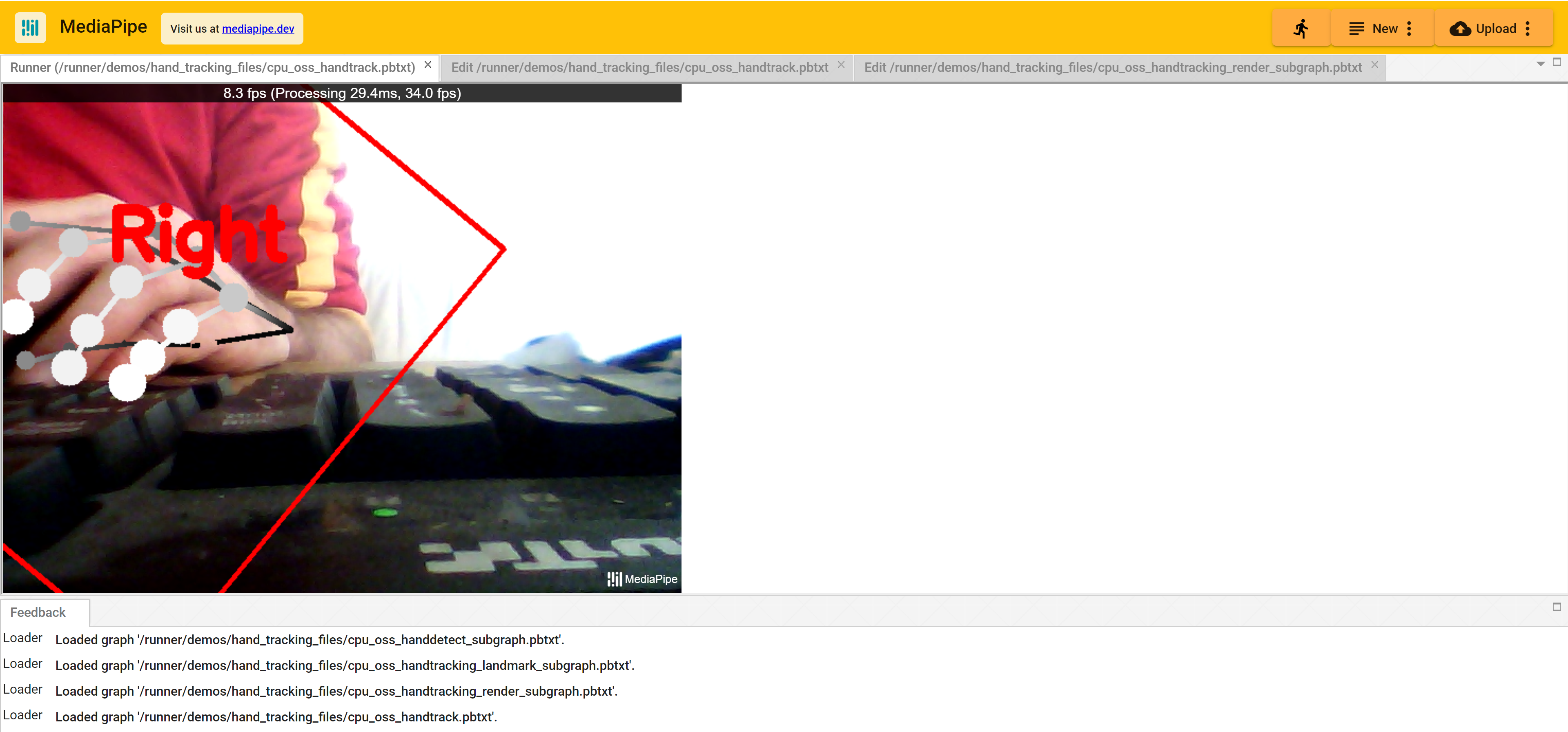

Notes: on fast hand movements the tracker in Electron looks less efficient than the example run by mediapipe... https://viz.mediapipe.dev/demo/hand_tracking

Is there something wrong I did using your dev?

Tnx a lot!

Hello!

I'm trying to open the patch (thanks for sharing) but every time it crashes after a few seconds... So I didn't see a lot. I wanted to ask if there is a way to fix this.

Anyway thank you a lot for sharing this!

Hi folks - thanks for the bug reports - can you make issues on github (make sure to list your system specs, param settings and OS) - just "it crashes" isn't quite as useful for finding out what that could be :)

I also have an update to both of these I need to push that is 80% complete when I can get two seconds free.. I'll do what I can to get it in soon - I use these patches for the course I teach at University and the semester starts again in a few days!

> I would like to draw the mesh in Max instead of the electron window.
>
> Is it possible?

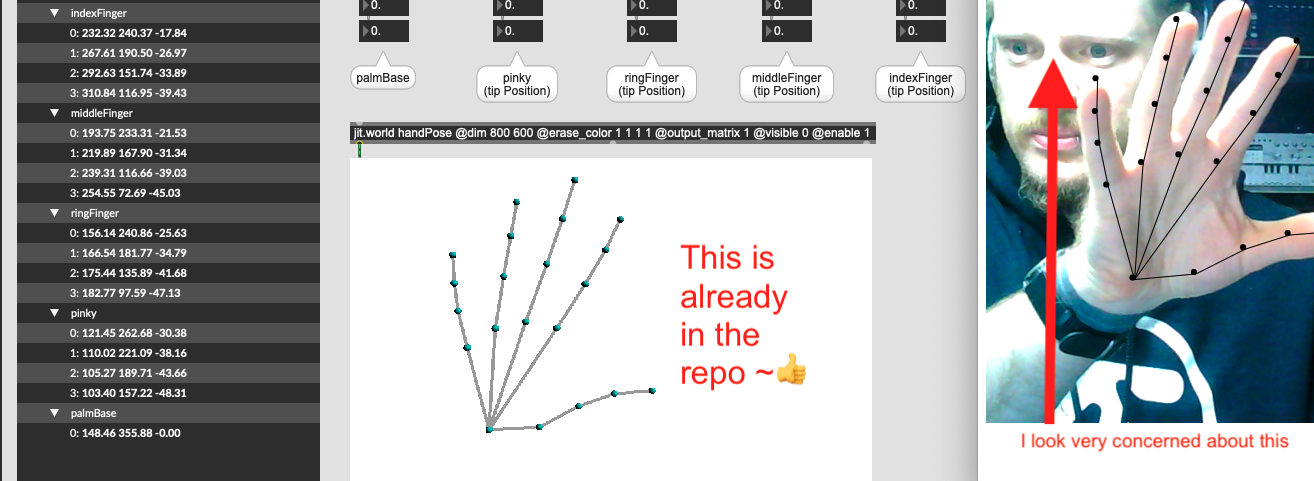

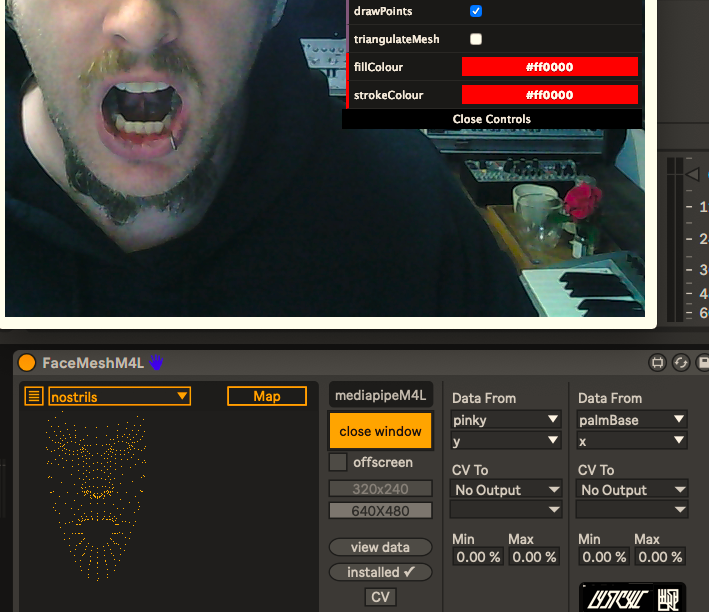

Yep! I'm drawing them in 3D in these two Max For Live devices you can see below.

But I just checked and the patch in the repo already has this in it for regular Max - so it's already ready to go :)

These M4L devices will come out for free in April and will be lots of fun, I actually engineered gesture recognition in them for the face and hands and worked in Palm normal to tell which way up your hands are and stuff too. Plus you can control your modular via CV :)

Gold!

BTW, for the face mesh do you know if it's possible to stop the jittering somehow?

And I see it does not detect ample motion like a wink. I am guessing there is no solving this, right?

Any news regarding the 2 things I wrote above? Are there any solutions?

Thank you so much!

> BTW, for the face mesh do you know if it's possible to stop the jittering somehow?
>
> And I see it does not detect ample motion like a wink. I am guessing there is no solving this, right?

@YGREQ - I've found lighting conditions dramatically affect it - also if you wear a hat, glasses or have facial hair it will not perform optimally (like FaceOSC)

I can accurately detect a wink when I place a good light source evenly frontlighting my face. I just use a small 30cm fluro tube I bought for $5 and it works much better for detecting eyes (front lighting will cut down on shadows in eye sockets) and also helps eliminating jitter (when the model can't find landmarks).

The jitter could also be from processing lag if you're dropping frames - but if you're getting a decent FPS it's most likely the lighting conditions. Give it a shot! I was surprised at the difference - but YMMV.
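If some jitter remains even with good lighting, one generic option is to low-pass filter the landmarks before using them. This is a common technique, not something the repos are confirmed to do. A minimal sketch with an exponential moving average, where `makeSmoother` and `alpha` are hypothetical names:

```javascript
// Hypothetical helper: returns a function that smooths successive landmark
// frames with an exponential moving average.
// alpha near 1 = little smoothing, alpha near 0 = heavy smoothing (more lag).
function makeSmoother(alpha) {
  let previous = null;
  return function smooth(landmarks) {
    // First frame, or the landmark count changed: start over from this frame.
    if (previous === null || previous.length !== landmarks.length) {
      previous = landmarks.map((p) => ({ x: p.x, y: p.y, z: p.z }));
      return previous;
    }
    previous = landmarks.map((p, i) => ({
      x: alpha * p.x + (1 - alpha) * previous[i].x,
      y: alpha * p.y + (1 - alpha) * previous[i].y,
      z: alpha * p.z + (1 - alpha) * previous[i].z,
    }));
    return previous;
  };
}
```

The trade-off is latency: heavier smoothing hides flicker but makes fast gestures like a wink slower to register.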

In two days I'm *finally* pushing this update that will help with some other stuff! Was going to wait to answer your question till then but that seems a little silly - LMK how you go

Oh! Thank you so much for your answer. I will test asap with proper lighting conditions! <3

Thanks so much for this, it's great! Was wondering if you were thinking of doing the same for the holistic whole body tracking.....would be eternally grateful if you did or could point to how to do so.

@Monica, you can find an openPose integration here which is for body tracking: https://cycling74.com/forums/openpose-for-max-sh%C3%A4ring-some-hot-machine-learning-stuff

I am looking to map the MeshMap to a mask (an actual mask in a png file with alpha). Any clues on how I can achieve this? I am thinking of just sending the osc data to map the mask in openGL. But maybe there is a simpler solution out there. Also if you already achieved this maybe you don't mind sharing the patch?

Thank you so much!

A problem I notice:

1. Node for Max - Mediapipe:: Facemesh window loses its image and resets itself from time to time, coming back up after a few seconds.

2. Once it comes back up, the OSC data stops streaming even though the face is detected again. I have to stop the patch and restart it.

Is this a general problem or am I doing something wrong?

BTW, the link to https://github.com/tensorflow/tfjs-models/tree/master/handpose leads nowhere. Is the original project dead? :/

Having a small problem with HandPose and was wondering if anyone else experienced it as well. I can only get handpose to recognize my webcam if I start the program with it unplugged and then access it through OBS. This is not the case with FaceMesh or Posenet, which i havent tested extensively, but seem to work fine.

The error message i get is : "this browser does not support video capture, or this device does not have a camera "

In the handpose camera.html file (when i Toggle Developer Tools) I am getting an error : "DOMException: Could not start video source" as well as a lot of "failed to load SourceMap" things.

Anyway just throwing this out there.

This is amazing work, but I'm having trouble with this patch. It won't turn on my webcam, and the jit.pwindow from the jit.world where it reads your hand mesh is just white/blank and no hand tracking data are going through the other parts of the patch. When I add a jit.grab to the jit.world it opens the webcam, but the image is just superimposed over the blank white pwindow and still no hand recognition.

When I open the patch, I hit the button to install dependencies in NPM, it completes, then I hit the toggle, but no camera or hand tracking. No Max errors listed either and Electron seems to open, though I haven't used these connective programs before so I'm not sure how to troubleshoot.

Any suggestions?

Thanks again for this work! I'm excited to experiment with it for a project I've wanted to do for a long time.

-j

Hi Jacob, Try what ive laid out above.

Start Handpose, and then try to access your webcam from OBS.

what operating system?

Thanks Dante!

Mac OS 12.0.1

I opened OBS which turns on my camera, and then opened the Handpose patch, but no change in the issue: a blank white image is displayed in both the pwindow coming out of the jit.world context and a blank white external display in the Electron program.

Any other suggestions?

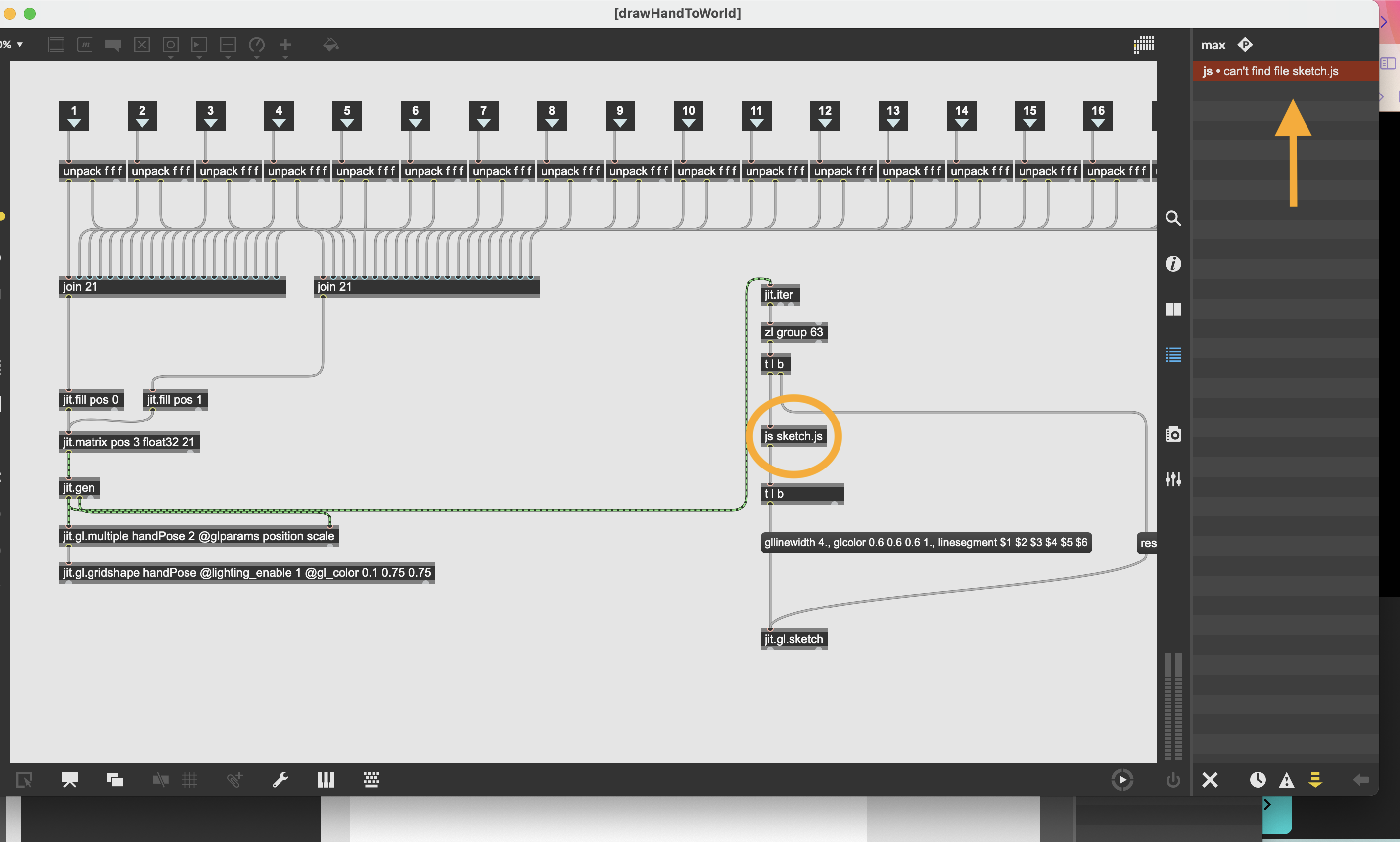

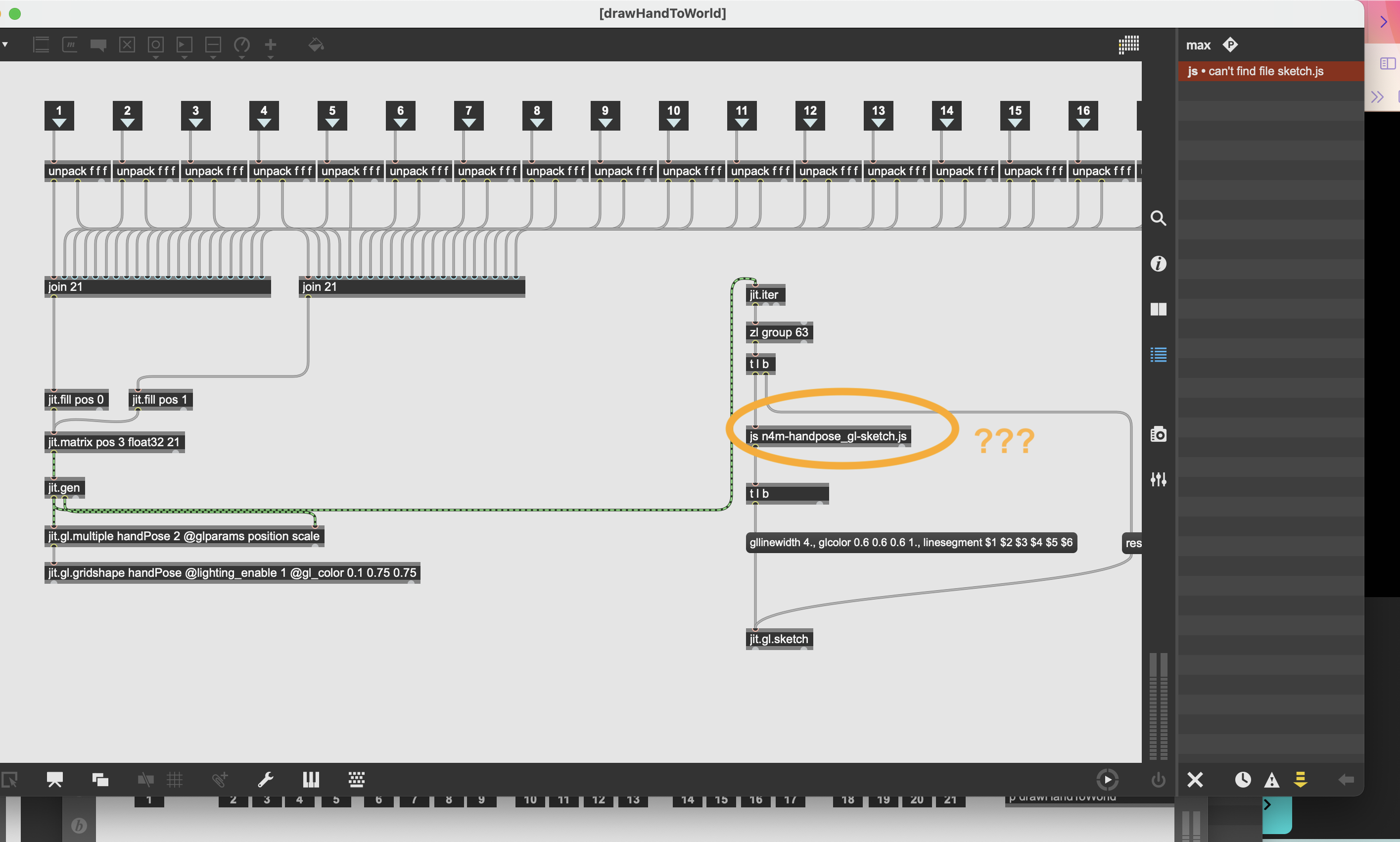

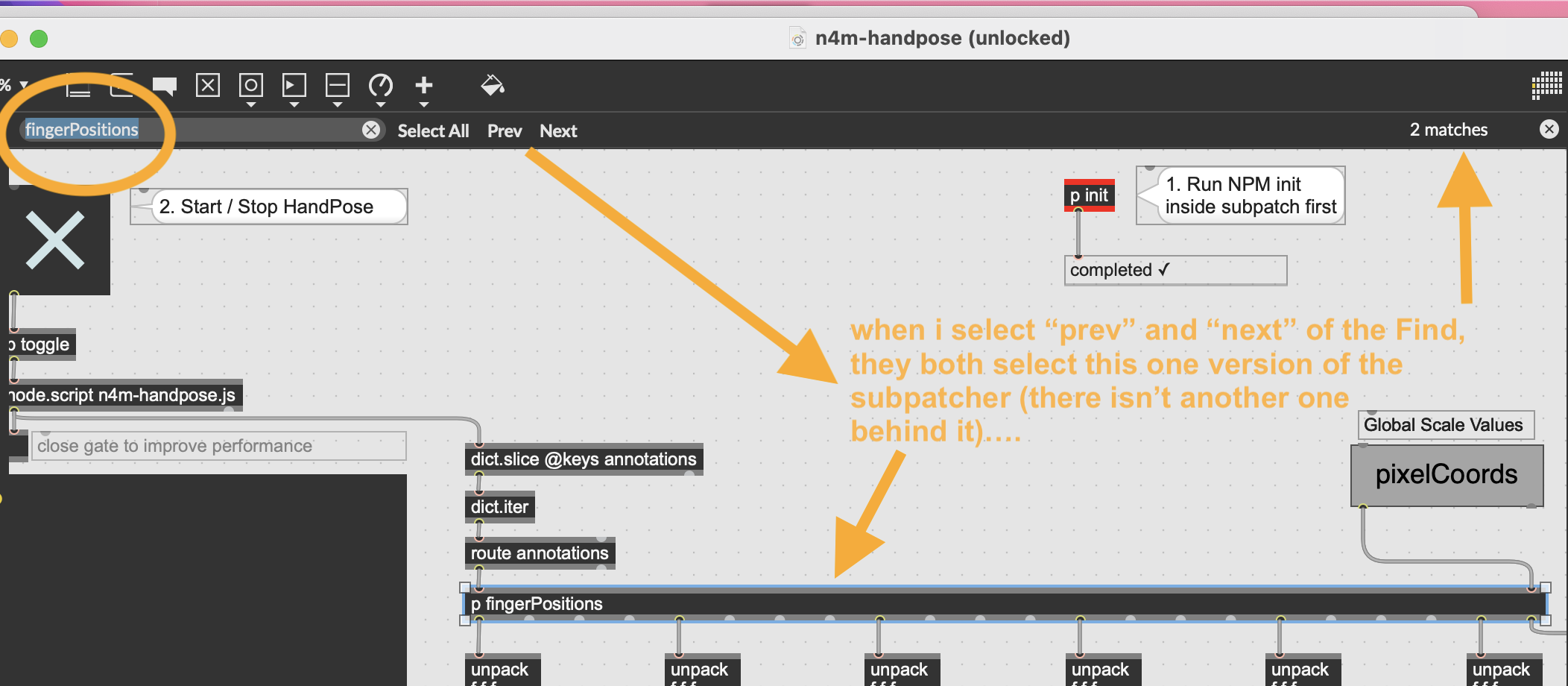

...actually i found a weird bug that might be a problem. It's a little hard to explain but in Max when I open the n4m-Handpose patch, there is an error saying "can't find file sketch.js". When I double click that error it opens the subpatch "p drawHandToWorld" and shows that there is a js object "js sketch.js" (pics attached), and when I double click it, it opens an empty window (no code). That's the first pic below. Plus there isn't a "sketch.js" file in the n4m-Handpose directory, so i figure i maybe accidentally changed that js object when it was unlocked and saved it, etc. However, when I go back to the main patch window and then click on the "p fingerPositions"-->"p drawHandToWorld," the same js object that had the error is now named "js n4m-handpose_gl-sketch.js" and has a program written in it (second pic). So it seems like there are two versions of that subpatch in this patch somewhere, and when I do a Find for the "fingerPositions" text, it says there are 2 matches, but I can only find one from the main patch window (third pic)...something fishy?

Hey Jacob, Report the issues on github like Lysdexic requests. Maybe it just needs a little tweaking. Don't forget to list your specs.

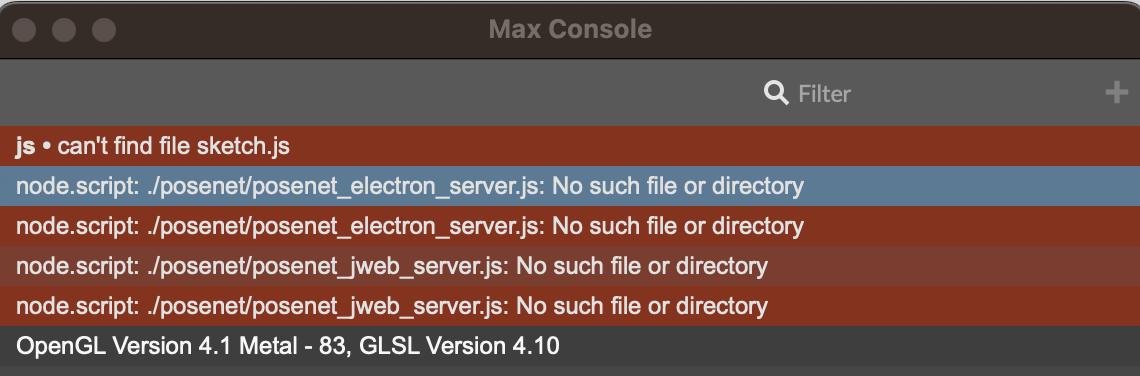

Trying to get the software installed (npm) but it seems the posenet files can't be found. There's already an open issue about this on GitHub. Please see my attached screenshot.

Maybe the file path is no longer correct? Does anyone have a solution for this? Are the files installable from somewhere else, or does anyone know the correct file path?

@DAVID RESNICK: I am facing the same issue :

js: can't find file sketch.js

Has any workaround been identified?

Many thanks in advance.

I think Mediapipe is now a good way to go for this kind of thing. You can run it all directly from jweb (no need for node). Here is a very basic example of hands tracking:

https://github.com/robtherich/jweb-mediapipe

It's based on this code, so it should be fairly straightforward to add more tracking functionality.

super!

Thanks for the example, Rob.

It runs great here - I do have a better machine now, though.

I think you also deserve some stars: ⭐✰⋆🌟✪🔯✨

(and no, I am not trying to be negative... :x)

Thank you Rob, that's very helpful and quite impressive.

I wish I could extend to the face/facemesh tracking as well, but the process is unclear to me.

Just to explain:

I am working with disabled people. One of them can only move his head. I'm trying to build, with Max, a MIDI controller that would follow his head movements. So far I have used the cv.jit package. It works well, but: 1. My computer tends to overheat rapidly when using this package. 2. I found the cv.objects to be not always fully robust, and a bit too sensitive to the surrounding context (we recently had a very frustrating experience, on stage, with a dark background!).

I tried the Electron/MediaPipe solution a while ago, and wanted to see if it could be an option. But, as mentioned in a previous message, I was disappointed to see that, for some reason, it doesn't work anymore.

Unfortunately, even if I understand the principle of collecting the mediaPipe data through jweb and the html code, I am not proficient enough to adjust your hand tracking js code in order to push the facemesh data into MAX instead (I tried ....but).

Any further help would be appreciated. I would be more than happy to provide any further information you may request.

Have a great day, all.

Sébastien

Hi folks, just bumped electron to 25.0.1 for both repos which fixes the patchers for me on MacOS 12 on a fresh install. Can Windows users give them a try too?

I get 60FPS for handpose and 49FPS for facemesh.

The missing `sketch.js` is a misnomer... the patcher objects were duplicated and laid over the top of each other (hard to spot, fixed now).

---

Nice work Rob!! I'm going to try native jweb also now. I'll post these ports up here a little later for folks who want to try them.

Thank you LYSDEXIC. I'll test the MAC update soon.

I (we) will be happy to test any other solution provided.

Sébastien.

I'd like to continue exploring the jweb route, and enabling other tracking functionality, but not sure what time I'll have. Very open to pull requests on the github if anyone else is doing the same.

Wow! This is great!

It would be really nice to have a face or pose detection like the hands tracking.

I tried to make the pose detection work with Rob's files, but unfortunately I'm missing something or doing it wrong, because I don't get any output.

the files are attached, hopefully someone can help!

I have face and body working in jweb - I'm going to PR these into Rob's repo soon where you'll be able to get all of them :)

If you want to get into these now I'll edit this post and add them individually:

https://github.com/lysdexic-audio/jweb-pose-landmarker

https://github.com/lysdexic-audio/jweb-facemesh

Super Lysdexic! Can't wait!

I'm very curious how it's done, if you already have a beta, I would love to test it!

Thanks a lot Lysdexic!!!

It's working really well, I will test it further.

I'm also going to study the js script. I had some differences; hopefully I can improve my JavaScript (which is very poor) and next time contribute a working patch.

Big up!

@LYSDEXIC: many many thanks. Facemesh is working on my station as well !

Great to hear Sébastien! I am very appreciative of people working directly with assistive music technologies for disabled folks. I'm really excited to know these technologies are being used in this context.

For the n4m facemesh example I had to do the vector math to get the gestures like Mouth width, Nostril flare, Jaw openness etc as Google doesn't provide this as part of the Facemesh API. I started to add it again for jweb yesterday - but I'm working today with one of their newer models which infers many gestures and should be nice and fast on regular spec'd laptops - but it doesn't "plug" straight into jweb as nicely as these earlier examples. Still - I should have it wrapped up by the end of the day I think.
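The kind of vector math described here can be sketched as distances between pairs of landmarks, divided by a reference length so the values don't change when you move closer to the camera. This is an illustration, not the code from n4m-facemesh: the function names are hypothetical, and the landmark indices are assumptions (commonly cited for the 468-point face mesh) that should be checked against the model you're using.

```javascript
// Euclidean distance between two landmarks ({x, y, z}; z is optional).
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, (a.z || 0) - (b.z || 0));
}

// ASSUMED indices for the 468-point face mesh; verify before relying on them.
const MOUTH_LEFT = 61;
const MOUTH_RIGHT = 291;
const LIP_UPPER = 13;
const LIP_LOWER = 14;
const FACE_LEFT = 234;
const FACE_RIGHT = 454;

// Hypothetical helper: FaceOSC-style gestures as ratios of face width.
function mouthGestures(landmarks) {
  const faceWidth = distance(landmarks[FACE_LEFT], landmarks[FACE_RIGHT]);
  if (faceWidth === 0) return null; // degenerate frame, nothing to measure
  return {
    mouthWidth: distance(landmarks[MOUTH_LEFT], landmarks[MOUTH_RIGHT]) / faceWidth,
    jawOpenness: distance(landmarks[LIP_UPPER], landmarks[LIP_LOWER]) / faceWidth,
  };
}
```

Other gestures (nostril flare, eye height and so on) follow the same pattern with different landmark pairs.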

edit - i've got the webassembly models running in jweb now and it's super fast! The latest models are really great for face tracking - nowhere near as much flickering. Blinking and iris detection seem really solid in lower lit conditions.

Just added to the top of the page

* Object Detection with 80 classifiers and

* the upgraded Face Landmarker example with 52 facial gestures

Quickly added camera input control to all of the jweb examples on my lunchbreak - i'll look into still images tomorrow :)

It works great!

I tested the pose example by running it for a longer time. With onscreen render mode after a while it looks like the framerate is dropping and the tracking goes slower, if there are other Max windows in front of the jweb patch. But it works fine when the render mode is Offscreen and draw_image and draw_body are off. (Tested on a Mac Mini M2.)

On my MacBook Pro i9 (macOS 13) it works as well as on the Mac Mini M2.

The object detection is really cool!! could be really cool for performances or installations!

I'm now trying to make a still with jit.grab and link the pose info to the same frame, so if it can load an image it would be easier. And then it isn't constantly processing anymore, only the still, right?

Thanks so much again!

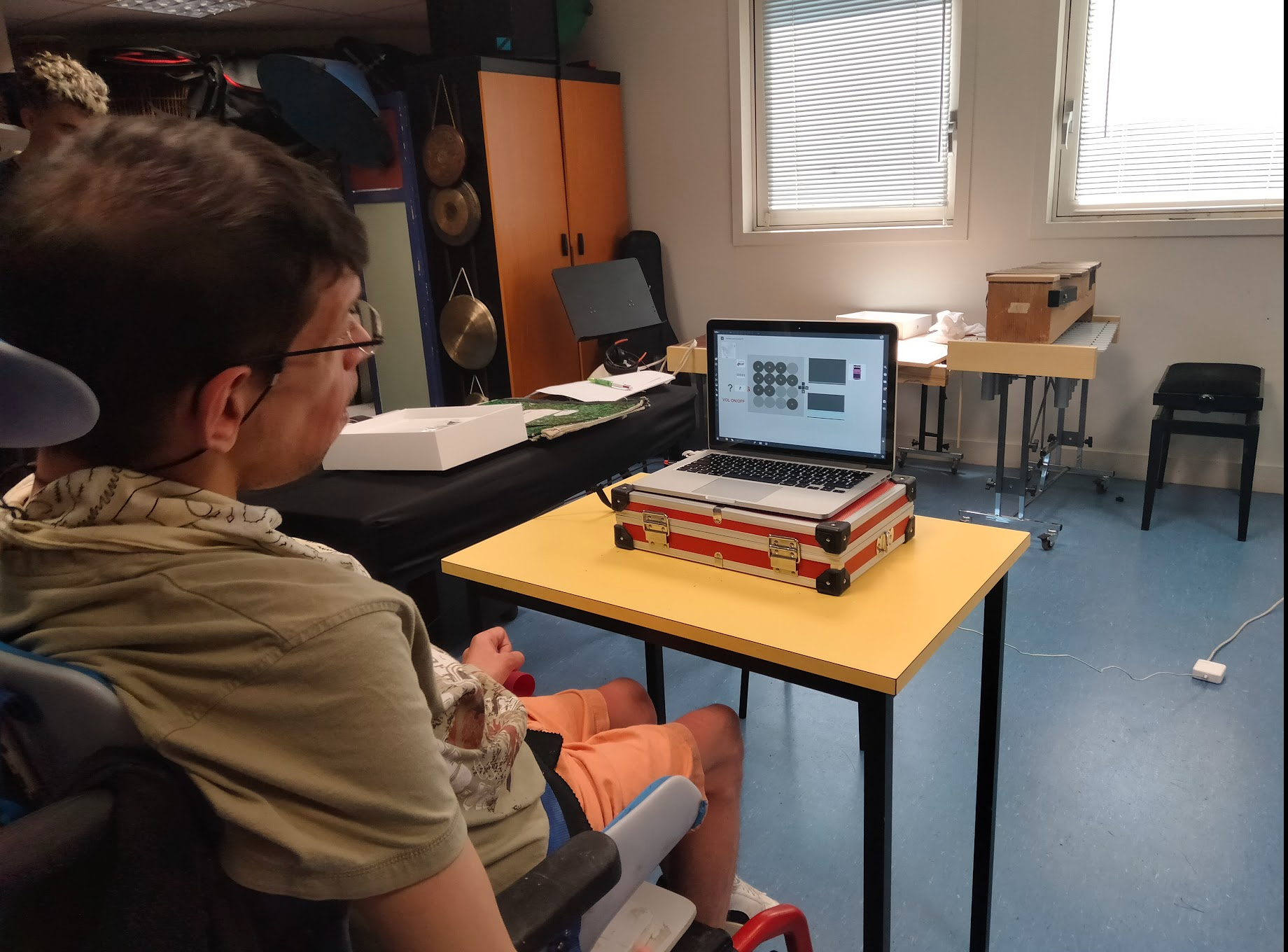

Hey LYSDEXIC,

I concur: many thanks to you. We played with it with my mate who is only able to move his head, and it worked perfectly fine. This is a big improvement compared to the previous solutions and solves the issues we had (the patch is CPU-friendly and faces are detected even in a complex environment).

You've made happy friends, here. We may use it for a little public performance soon. I'll credit you and mention how instrumental you have been to our project. And I'll keep you posted.

Cheers,

Sébastien

So great to hear! Please share anything you are comfortable sharing here to show folks your work - using this tech in context would be really cool to see (also there is way too much of my face in this thread!)

And please also thank/credit Rob - I am really just adapting his patch to use with the latest Mediapipe models

> with onscreen render mode after a while it looks like the framerate is dropping and the tracking goes slower

that sounds like a memory leak - but this Pose model is one of the first, there are much newer ones available I might try today

Hi,

This is a fascinating work. Thanks to Rob and Lysdexic for these amazing tools. I was wondering whether a hand gesture recognition solution was available. I have seen a post by Lysdexic mentioning a M4L device supporting this, but I have not found it nor a pure max patch in the github (I would mainly be interested in a max solution). Anyway, is this available somewhere?

Thanks a lot in advance,

Totally into this, LYSDEXIC and Rob. Wonderful shares, many thanks! I'm investigating the use of Mediapipe as an alternative to the Leap Motion, so I'm really interested in the fingers data, too - and gesture recognition. I won't have time to investigate any of the latter until end of July... But I'll keep a close watch at this thread. Thanks again.

@JBBollen - as an aside, if you are doing projects with the Leap Motion, they’ve just released/announced a new version, with upgraded specs, and also better support for advanced functionality in macOS.

Thanks, Ironside. I'm a beta for them. Looks great!

1000 thanks from me and also from all my students... i was waiting for something like this for long time... very impressive!!!

Hi folks, I noticed a confusing issue where the left hand is actually identified as the right and vice versa. I've made a fix so it makes sense. Putting through the hands gestures and latest HandLandmarker model later today. [Edit: they're up - see first post]
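The thread doesn't show the actual fix. A plausible sketch, assuming the model reports handedness as "Left" or "Right" and that the labels come out reversed for the camera view being used, is simply to swap the label before sending it to Max. `swapHandedness` is a hypothetical name.

```javascript
// Hypothetical helper: swap the handedness label reported by the model.
// Anything other than "Left" or "Right" is passed through unchanged.
function swapHandedness(label) {
  if (label === "Left") return "Right";
  if (label === "Right") return "Left";
  return label;
}
```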

I'm putting these up individually but also PR'ing into Rob's megapatch so you'll be able to get everything in one place there.

I've also added still image as an input option to all of the examples too. I tend to update this thread when I've completed a round of updates - but if you follow me and Rob (or "watch" the repos you're interested in) on github you'll get direct updates :)

Nice one Stefano ! I initially made these to help me teach my classes too - glad it's helping :D

Thank you so much Lysdexic and Rob for this extremely awesome work!!

I especially love how it is all plug-and-play, and works flawlessly.

Really kudos for this amazing project.

I was playing around with the gesture recognition one, and noted that the camera stream displayed in the jweb is slightly cropped compared to the camera input from jit.grab (see image attached).

I see that the video size in the js code is set to 640x480, is there also the info about the cropping?

Thanks again, really excited about playing with this

Hi Federico :)

Nice to know you're having some fun with these! Looks like the jweb window was cut down a little (not sure why) - I've updated all the patches in each repo to use 640x480

There's no reason why you can't try other resolutions too - anyone experiencing performance issues just try a smaller res, conversely - feel free to make it larger

Hi all,

We have tested face-tracking last week, during a short on-stage event.

It worked perfectly fine !

I'll post some pictures when I receive appropriate authorizations.

Thank you again LYSDEXIC & Rob, and have a good day all.

Sébastien

"I've updated all the patches in each repo to use 640x480"

That did the trick, thank you!

Hi everyone,

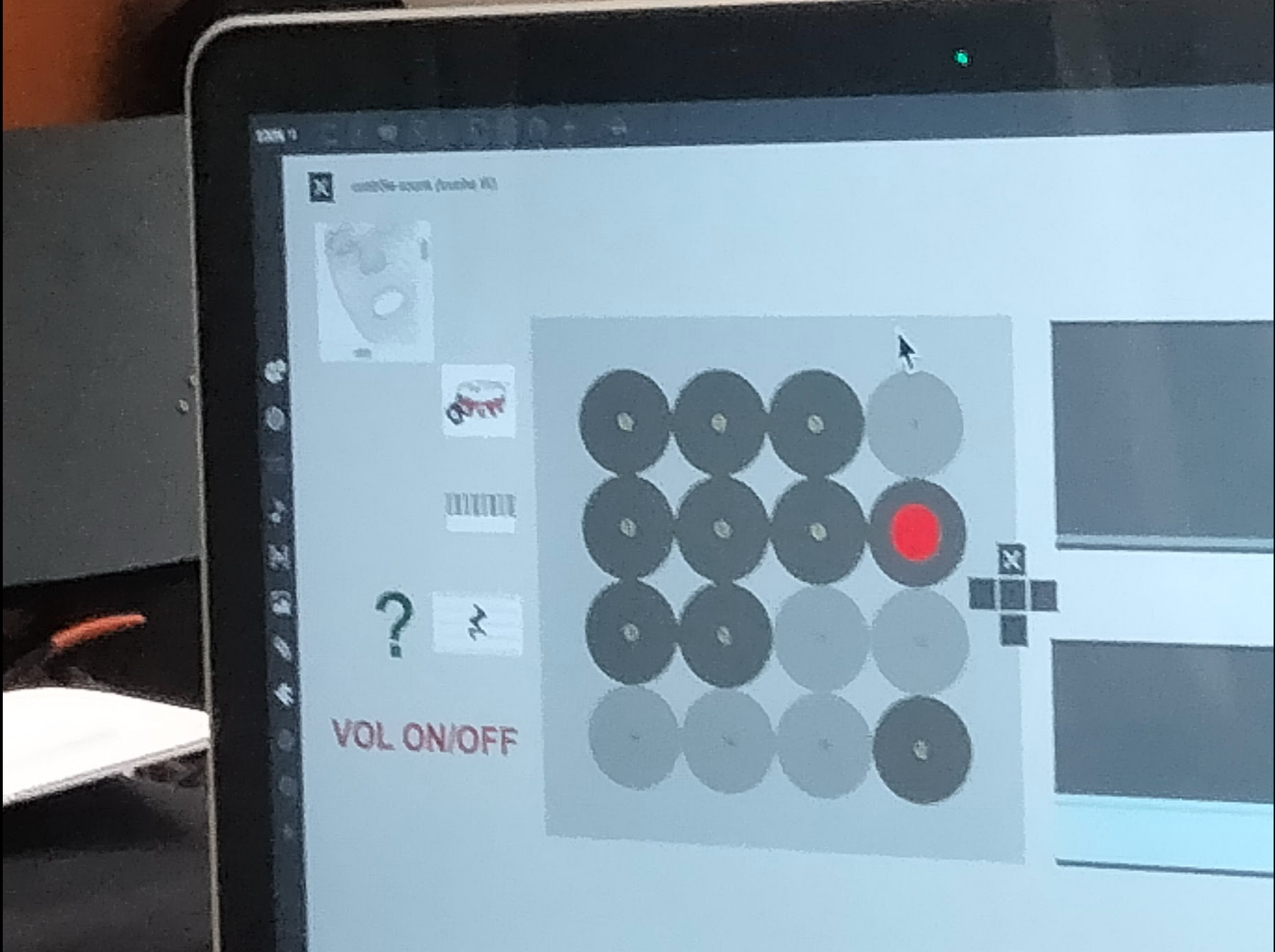

As promised, here is the patch developed based on Rob & LYSDEXIC code. This is basically a MIDI controller sending MIDI messages to an Ableton Live set:

IMPORTANT NOTE: use "R" key on the keyboard to lock/unlock mouse control

"In action" pictures of my friend playing with it (with his permission):

Still a lot of improvements/optimizations to consider, but this is already a good base : you both made it possible, and we are so grateful !

And many thanks also to SOURCE AUDIO and MIZU who helped me understand the Nodes object better (https://cycling74.com/forums/strange-unpackpack-management-of-nodes-indexed-output).

Comments welcome !

Cheers,

Sébastien

Stunning work. ⭐️⭐️⭐️⭐️⭐️

Looking forward to play with the fingers. Indeed, lighting conditions matter significantly. I will report.

Amazing work Sébastien! So cool to see this in action. I hope your friend had fun and inspired more designs!

> Indeed, lighting conditions matter significantly. I will report.

I found front light helps a lot - top light casts a lot of shadows.

You don’t even need a ringlight - just any simple lamp you have laying around.

Hi Lysdexic. Re: Hand Landmarker: Have you got any idea why the z-axis of the wrist isn't giving any useful values? I only see the output alternating between -0. and 0.00.

Edit: I asked this question before I looked at various studies first...

As far as I understand, proper depth estimation can not be achieved with Mediapipe's currently available tracking points, so measuring the absolute position of a hand in space is not possible. One would either have to know the real-world size of the hand, use a depth cam or stereo cam (with or without infrared) and the latter, of course, brings the Leap Motion back onto the scene.

With Mediapipe, I found that tracking finger movement is a real challenge, since the output data is scaled by the distance to the camera, whereas with the Leap Motion, the output is relative to the hand itself.
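One way to make the finger data less dependent on camera distance is to express every landmark relative to the wrist and divide by a reference length on the hand itself. A minimal sketch, assuming the 21-point hand layout where index 0 is the wrist and index 9 is the middle finger MCP joint; `normalizeHand` is a hypothetical name, and this only corrects for scale in the image plane, not for true depth.

```javascript
const WRIST = 0;
const MIDDLE_FINGER_MCP = 9;

// Hypothetical helper: re-express hand landmarks relative to the wrist,
// in units of the wrist-to-middle-MCP distance (a rough "palm length").
function normalizeHand(landmarks) {
  const origin = landmarks[WRIST];
  const ref = landmarks[MIDDLE_FINGER_MCP];
  const scale = Math.hypot(ref.x - origin.x, ref.y - origin.y);
  if (scale === 0) return null; // degenerate frame, cannot normalize
  return landmarks.map((p) => ({
    x: (p.x - origin.x) / scale,
    y: (p.y - origin.y) / scale,
  }));
}
```

With this, the same hand shape gives roughly the same numbers whether the hand is near or far from the camera, as long as the palm stays more or less facing it.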

All this is not to say that I can't see any interesting applications of Mediapipe for us artists!

Anyway, any thoughts or experiences about this from you guys are welcome!

Thanks Lysdexic.

This is fantastic work!

I'm interested in feeding in an image/matrix from a Kinect or other IR camera (the goal is being able to send a live image of a person in a dark room that is hopefully readable enough by Pose to make out a body and limbs). For matrix data from the Kinect, I'm using jit.freenect.grab.

Is there a way to feed a jit.matrix directly into the jweb-pose-landmarker object to be analyzed rather than an image from media device/camera?

I'm guessing the js code for jweb-pose-landmarker would have to be changed for a different input, but I don't know where to start.

Thanks again!

Jacob

Quick question : does the script work offline (jweb option) ? Thanks.

Quick answer: in theory no. Unless jweb might be doing some caching.

The reason being that each time jweb loads the html page (which is local), it (the page) loads several resources from the internet (not local). e.g. open handpose.html and look at the first few lines, you will see something like:

<script src="https://cdn.jsdelivr.net/npm/@mediapipe/control_utils@0.1/control_utils.js" crossorigin="anonymous"></script>

Now, you can always try to download all those resources and direct the src links to those local copies... ;)
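As a rough sketch of that idea: once the files are downloaded, each CDN URL has to be mapped to its local copy. The helper below does that mapping for one URL; `toLocalSrc` and the `./vendor/` folder are hypothetical, and the MediaPipe scripts may also fetch extra files (wasm, model data) at runtime that would need local copies too.

```javascript
// Hypothetical helper: map a CDN URL to a local path, keeping the rest of
// the path so the folder layout mirrors the CDN.
// URLs that don't start with cdnBase are returned unchanged.
function toLocalSrc(url, cdnBase, localBase) {
  return url.startsWith(cdnBase) ? localBase + url.slice(cdnBase.length) : url;
}

// Example with the script tag quoted above (./vendor/ is an assumed folder):
const localSrc = toLocalSrc(
  "https://cdn.jsdelivr.net/npm/@mediapipe/control_utils@0.1/control_utils.js",
  "https://cdn.jsdelivr.net/npm/",
  "./vendor/"
);
// localSrc is "./vendor/@mediapipe/control_utils@0.1/control_utils.js"
```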

Best,

○—●—◩

Many thanks !

Thank you so much for this infrastructure, both of you! I'm no coding magician, so this really is a life saver for someone like me...

I have one question--

I am using the body pose (jweb) tracker for an installation and am using 2 USB webcams of the same name. I want to get several streams of data at the same time, and it works fine for my laptop-based cam and a single USB webcam if I copy the body pose patch twice. However, when I try to use two USB webcams it prioritizes one and doesn't show the other--I suspect this is everything working as intended and me mangling something up--does anyone have an idea of what might be a good way to fix it?
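One thing worth checking, assuming the page picks its camera by name: two identical webcams share a label, but each has its own `deviceId`. A minimal sketch of selecting by position in the device list instead, using the standard `enumerateDevices` / `getUserMedia` APIs; the helper names are hypothetical and this is not the code in the jweb examples.

```javascript
// Keep only cameras from the list returned by enumerateDevices().
function videoInputs(devices) {
  return devices.filter((d) => d.kind === "videoinput");
}

// Hypothetical helper: constraints that pin the stream to the Nth camera
// by its deviceId, so identically named cameras can be told apart.
function constraintsForCamera(devices, index) {
  const cams = videoInputs(devices);
  if (index < 0 || index >= cams.length) return null;
  return {
    audio: false,
    video: { deviceId: { exact: cams[index].deviceId } },
  };
}

// Browser usage (one jweb page per camera, each with a different index):
//   navigator.mediaDevices.enumerateDevices().then((devices) =>
//     navigator.mediaDevices.getUserMedia(constraintsForCamera(devices, 1)));
```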

Thanks!

I am a little late to the party coming from Kinect but wow thank you so much! So far this is super happy on Windows 10 on an Asus Rogue. Awesome work!

I also tried to get the MIDI face tracking patcher working but am hitting this error.

poly~: no patcher SEBPOLYBOUTON

Also I wonder if I need to be running one of the main face mesh patches first for this to read? Looks like it is searching for jweb-facemes.html to read input.

Many thanks!

Robbie

Hey lovely ppl! (about the pose landmarks) anyone know how to get more than 1 pose out of the tracking? i see that the detection displays multiple poses but i am not sure if see any difference in the dict data. Cheers!

Hi Stefano,

The way I've done this is to open hands-gesture-recognizer.maxpat that should have been included in the downloads when you downloaded the jweb mediapipe stuff. Once into this max patch, you'll find dict.unpack Left and dict.unpack Right going into p left and p right. When you hover over the p left and p right outlets it will tell you which landmark it's unpacking such as ring_finger_tip. These can unpack all of them.

You should see an example of index_tip being unpacked so try to copy that format for which ever landmark you need specifically. It should output an x y and z.

I really hope this helps but give me a shout if I haven't made sense or I didn't answer your question. Fellow max folk, if there's a better way to do this let me know!

Hi E_S_,

thanks for answering,

i might need to better clarify my question, that is specific to the Pose landmark patch:

in the jweb overlay i see that 2 people are recognised as "skeletons". This is confirmed inside the jweb-pose-landmarker.js, at the end of which there is a "numposes:2"

i understand how to get the different joints of the pose but i don't know how to get the data about the 2nd tracked person.

i am now looking at the js script and i see that there is a results.landmarks[0] which i think (i am far from knowledgeable in js) might return the 1st detected person...

i tried with results.landmarks[1] and having 2 ppl in front of the cam but it did not work

Got it!

For anyone interested, attached is a modified version of the js file (plus html) that also streams the data of the 2nd person. To get the data out, you need to:

1- put the jweb-pose-landmarker2poses.js in the js folder (next to the original)

2- put the jweb-pose-landmarker2poses.html where the example patch sees it (e.g. next to the original)

3- in your patch: load the right html file (jweb-pose-landmarker2poses.html)

4- add a [dict.unpack left1: right1: neutral1:] to get out the dictionary with the coordinates of the 2nd tracked person; the procedure to get the specific joints is the same
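The general idea can be sketched as looping over every entry in `results.landmarks` instead of reading only index 0. This is not the attached file: `posesToDict` and the `pose0`, `pose1` key names are hypothetical, and it assumes the landmarker was set up to detect more than one pose.

```javascript
// Hypothetical helper: turn a multi-pose result into one object with a key
// per detected person, ready to be sent to Max as a dictionary.
function posesToDict(results, maxPoses) {
  const out = {};
  const poses = results.landmarks || [];
  const count = Math.min(poses.length, maxPoses);
  for (let i = 0; i < count; i++) {
    // Copy only the coordinates so the output is plain data.
    out["pose" + i] = poses[i].map((p) => ({ x: p.x, y: p.y, z: p.z }));
  }
  return out;
}

// Inside jweb the result could then be handed to Max, for example with
//   window.max.setDict("poses", posesToDict(results, 2));
// where "poses" is an assumed dictionary name.
```

When only one person is in view, the object simply has a single `pose0` key, so the Max side can check which keys are present.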

Hi,

Following the advice of @Rob Ramirez, I just tried jweb-mediapipe patches and they work great out of the box !

Thanks for the amazing work.

But as @Jacob asked earlier, I'd like to know if there is a simple option to send Jitter matrixes or textures to the process ? I suppose I can work with some "Syphon Virtual Webcam" but I'd like to find something more integrated to Max...

Regards !

Jérémie

Hello! thanks for these amazing resources!!

I have what might be a really dumb question: honestly, i have little to no knowledge of js and how mediapipe works, so i was wondering if there was an easy way to train custom hand gestures directly inside the max patcher, since the default ones are useful, but a bit limited for what i have to do.

thanks so much, sorry for my ignorance

@Tommaso Cherchi, this is a Mediapipe related question, and as far as I know, no, you cannot use your own dataset to train new models or existing ones. At least using js. After a quick search, I found that you can actually complete/refine some of their existing models (object detection, image classification, gesture recognition, but not the other ones) with your own data, using their python API. Here is the doc for customizing their gesture recognition model. So not an "easy way".

@TFL

Ok, good to know. Thank you!

Hi everyone!

I'm guessing this is a normal artifact, but when using the Hand Gesture Recognizer it often flips hands or mistakes the right for the left, vice-versa. Is there a way to make sure it doesn't happen? I'm mapping hand gestures to parameters in a plugin so it kinda messes things up when the hands are swapped.

Thanks for all this beautiful knowledge and those amazing tools !!!

Hello all,

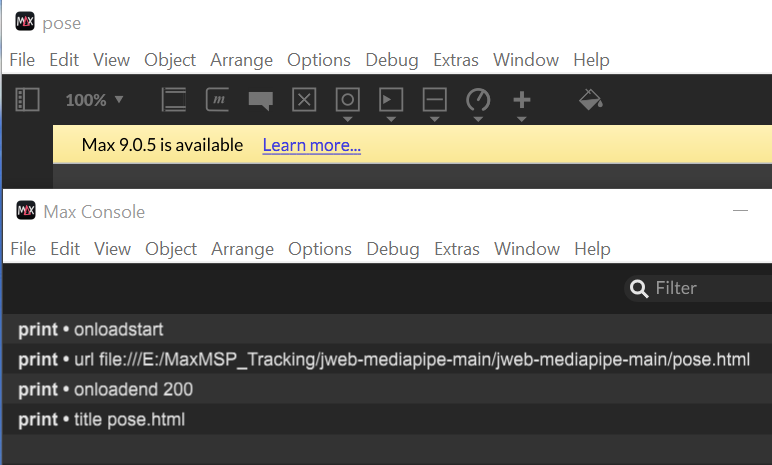

Whenever I try to use a MediaPipe based Max patch, for example, https://github.com/lysdexic-audio/jweb-pose-landmarker or https://github.com/robtherich/jweb-mediapipe, I get an error that says "Failed to acquire camera feed: NotReadableError: Could not start video source" (see image attached).

After doing some research, it appears that this is a common error when the camera is being used by a different application, however I'm certain this is not the case. If anyone can help me resolve this problem, I'd be greatly appreciative.

I'm running Max 9.0.4 (x64 windows) and my computer specs are as follows:

Edition Windows 10 Pro

Version 22H2

Installed on 10/20/2022

OS build 19045.5608

Experience Windows Feature Experience Pack 1000.19061.1000.0

Processor 12th Gen Intel(R) Core(TM) i9-12900K 3.20 GHz

Installed RAM 32.0 GB (31.8 GB usable)

System type 64-bit operating system, x64-based processor

@fas11030 can you get your camera to work just with the jit.grab help patch?

@tfl Yes jit.grab works

When I try using one of these patches, this is the type of console output I'm getting:

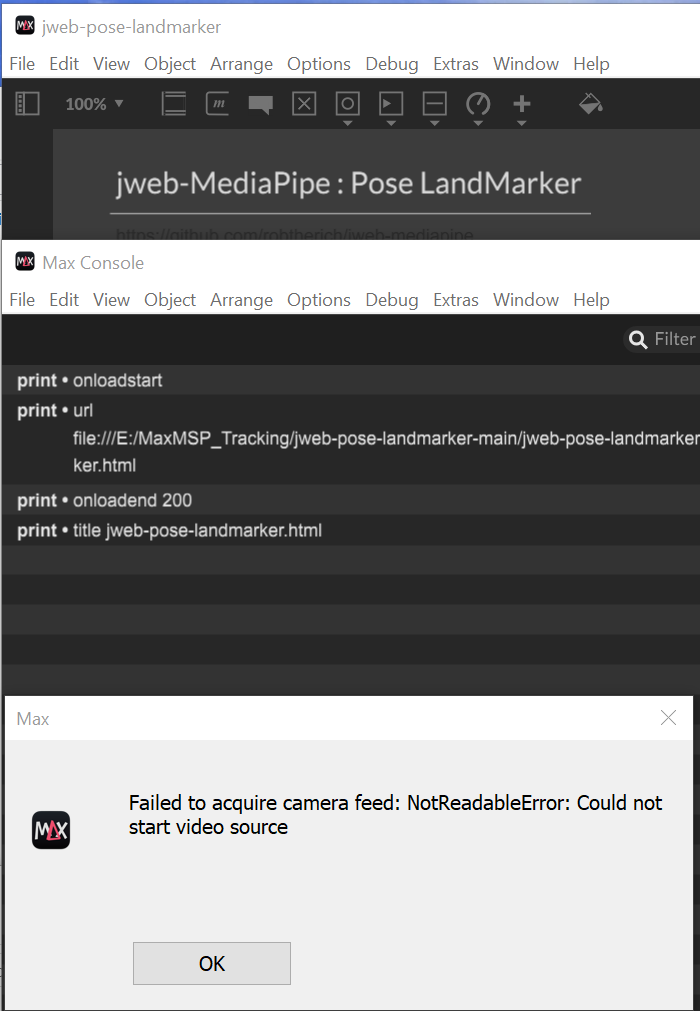

When I try to use https://github.com/yuichkun/n4m-posenet, the message attached to the outlet of the initialize subpatch stays at "started" indefinitely. I can see the folder for the patch being populated with files though. If I turn the toggle on, it doesn't do anything and when I turn it off, I get the node.script message in the console shown in the attached image. I'm not sure if this is a related or different problem.

do you have access to the mediapipe object recognition? or text sentiment analysis?

Facemesh example stopped working for me in 9.0.5 (or maybe some versions before i am not sure) but it did work on max 9.0.0 and does work on Max 8.

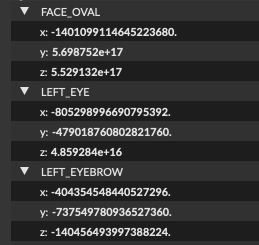

all loads correctly, the tracking starts and then the coordinates "fly off", reaching very big numbers (see image)

macbook m3 Max macOS Sequoia 15.4.1

ok i see that it gets fixed by triggering "reload" after the patch is running for circa 80 seconds (not before). But i guess the timing is very machine relative