TouchOSC switchable mapping to M4L Device

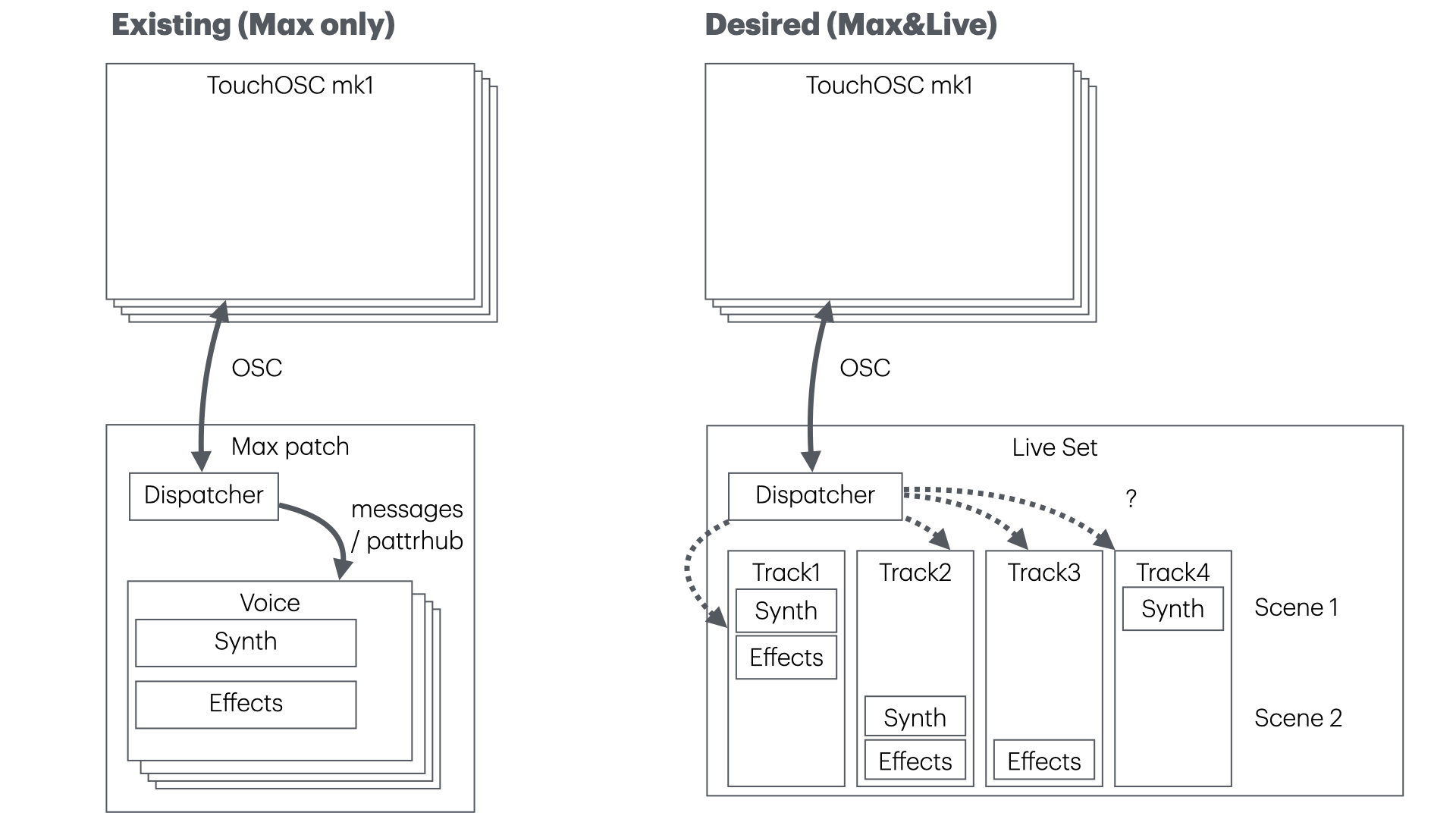

I'm looking for tips and experiences on how best to control specific M4L devices from TouchOSC. This is based on a setup that works in Max and that I want to port to Live. I'm not yet very familiar with the LOM and so on, so general pointers are welcome, too.

My starting point (left part of the diagram above):

- a TouchOSC template (with 4 similar pages) for my specific synth + effects patch
- an existing message-based dispatcher in Max that routes the controls to the parameters of the correct instances of the synth/effects patch

FWIW, I have already ported this to use pattrhub to control Live UI objects by name, with the names stored in a dict.
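For readers who want a concrete picture of this kind of dispatcher, here is a minimal sketch written in Python for illustration only; it is not the actual Max patch. It assumes TouchOSC addresses of the form `/<page>/<control>` with values in the 0..1 range, and linear scaling to each parameter's range. All track, device, control, and parameter names and ranges are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    track: str      # track holding the device instance (hypothetical name)
    device: str     # device instance on that track (hypothetical name)
    parameter: str  # parameter name inside the device (hypothetical name)


# Hypothetical tables: TouchOSC page -> (track, device instance)
PAGE_MAP = {1: ("Track 1", "Synth"), 2: ("Track 2", "Synth")}
# Hypothetical tables: control name -> (parameter name, min, max)
CONTROL_MAP = {"cutoff": ("Cutoff", 20.0, 20000.0), "mix": ("Dry/Wet", 0.0, 100.0)}


def dispatch(address, value, page_map=PAGE_MAP, control_map=CONTROL_MAP):
    """Route '/<page>/<control>' with a 0..1 value to (Target, scaled value).

    Returns None for addresses that do not match the assumed scheme.
    """
    parts = address.strip("/").split("/")
    if len(parts) != 2 or not parts[0].isdigit():
        return None
    page, control = int(parts[0]), parts[1]
    if page not in page_map or control not in control_map:
        return None
    track, device = page_map[page]
    param, lo, hi = control_map[control]
    # Linear scaling from the control's 0..1 range to the parameter's range.
    return Target(track, device, param), lo + value * (hi - lo)
```

For example, `dispatch("/2/mix", 0.5)` yields the `Dry/Wet` parameter of the synth on Track 2 with the value 50.0, while an address on an unmapped page yields `None`.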

What I'd like to achieve (it might look like the right part of the diagram, but maybe there are better ways):

- keep the same TouchOSC template
- port the synth, effects, and dispatcher (if it is still needed) to M4L devices; I know all the parameter names and ranges
- be able to set which page controls which device on which of several tracks
- store that mapping in a Live set and switch it with a Live scene change
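One way to think about the switchable mapping is one page-to-device table per scene, with a scene change swapping the active table. The Python sketch below assumes exactly that; `SceneRouter`, `on_scene_change`, and the scene indices, track, and device names are hypothetical, and how Live actually reports the scene change is left open.

```python
class SceneRouter:
    """Holds one page -> (track, device) table per scene (hypothetical model)."""

    def __init__(self, scene_maps, default_scene=0):
        # scene_maps: {scene index: {page: (track, device)}}
        self.scene_maps = scene_maps
        self.active = scene_maps[default_scene]

    def on_scene_change(self, scene_index):
        # Switch to the table stored for this scene; keep the current one
        # if no mapping was stored for it.
        self.active = self.scene_maps.get(scene_index, self.active)

    def target_for(self, page):
        # Device instance currently controlled by this TouchOSC page, or None.
        return self.active.get(page)


# Hypothetical mappings for two scenes.
router = SceneRouter({
    0: {1: ("Track 1", "Synth"), 2: ("Track 2", "Synth")},
    1: {1: ("Track 3", "Synth"), 2: ("Track 1", "Delay")},
})
```

After `router.on_scene_change(1)`, page 1 addresses the synth on Track 3; a scene without a stored table leaves the previous mapping in place, which is one possible design choice among several.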

The questions I have:

- How should I best control the parameters in the devices: with live.remote~, or directly via the LOM in JavaScript?
- How should I organise the dispatcher? A central one? One per track? One in each device?
- How can I switch the mapping to a different device instance on a different track: by preset, message, or scene?
- How can I achieve the reverse mapping, i.e. when a parameter is changed in Live, the TouchOSC control should update, too?
- The M4L devices could also run in Max; does this make a difference for the mapping implementation?
- Does pattr work in M4L devices? (pattrhub can be handy at times.)
- Any caveats? (Like the dreaded limitation that only one process can udpreceive on a given port.)
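For the reverse direction, one option is to invert the forward tables, so that a parameter change reported by Live can be looked up and sent back as a TouchOSC address with a normalized 0..1 value. This is a minimal Python sketch under hypothetical naming (`/<page>/<control>` addresses, linear scaling, placeholder track/device/parameter names); it keeps a list per parameter because several pages could point at the same device instance.

```python
# Hypothetical forward tables (same shape as a page/control dispatcher would use).
PAGE_MAP = {1: ("Track 1", "Synth"), 2: ("Track 2", "Synth")}
CONTROL_MAP = {"cutoff": ("Cutoff", 20.0, 20000.0), "mix": ("Dry/Wet", 0.0, 100.0)}


def invert(page_map, control_map):
    """Build (track, device, parameter) -> [(address, min, max), ...]."""
    reverse = {}
    for page, (track, device) in page_map.items():
        for control, (param, lo, hi) in control_map.items():
            key = (track, device, param)
            reverse.setdefault(key, []).append((f"/{page}/{control}", lo, hi))
    return reverse


def feedback(reverse, track, device, param, value):
    """Turn a parameter change in Live into [(address, 0..1 value), ...].

    Returns an empty list if the parameter is not mapped to any page.
    Assumes max > min for every parameter range.
    """
    out = []
    for address, lo, hi in reverse.get((track, device, param), []):
        norm = (value - lo) / (hi - lo)
        out.append((address, min(1.0, max(0.0, norm))))  # clamp to 0..1
    return out


reverse = invert(PAGE_MAP, CONTROL_MAP)
```

For example, `feedback(reverse, "Track 2", "Synth", "Dry/Wet", 50.0)` gives `[("/2/mix", 0.5)]`. When the active mapping changes (for instance on a scene change), the reverse table would have to be rebuilt from the new forward tables.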

Thanks for any hints and pointers!