Equirectangular to Rectilinear (Gnomonic) 360° Video mapping with Gen/Jitter

Hey,

For three days now I've been trying to adapt the equation on this site to convert an equirectangular 360° video into a rectilinear (normal FOV) view:

http://blog.nitishmutha.com/equirectangular/360degree/2017/06/12/How-to-project-Equirectangular-image-to-rectilinear-view.html

I've been rebuilding the math from this equation (I think the “inverse equation” is the right one) in a gen patch within a jit.gl.pix object, but I can't get it to work. The resulting image never really looks like a normal FOV. Today I tried to interpret the Python code that is linked on that site and is based on that equation.

Here is the direct link: https://github.com/NitishMutha/equirectangular-toolbox/blob/master/nfov.py

But again with no success.

I might have the wrong assumption about what “lambda0” and “phi1” are supposed to be. I thought both should be 0 because they point to the “S point”. I might be wrong, but entering other values didn't help.

I'm quite new to Max, and to Jitter and Gen in particular. Usually searching this forum and adapting some patches works when I get stuck, but this time I didn't really find an existing solution.

Is anyone able to point me in the right direction, or does anyone know what I did wrong?

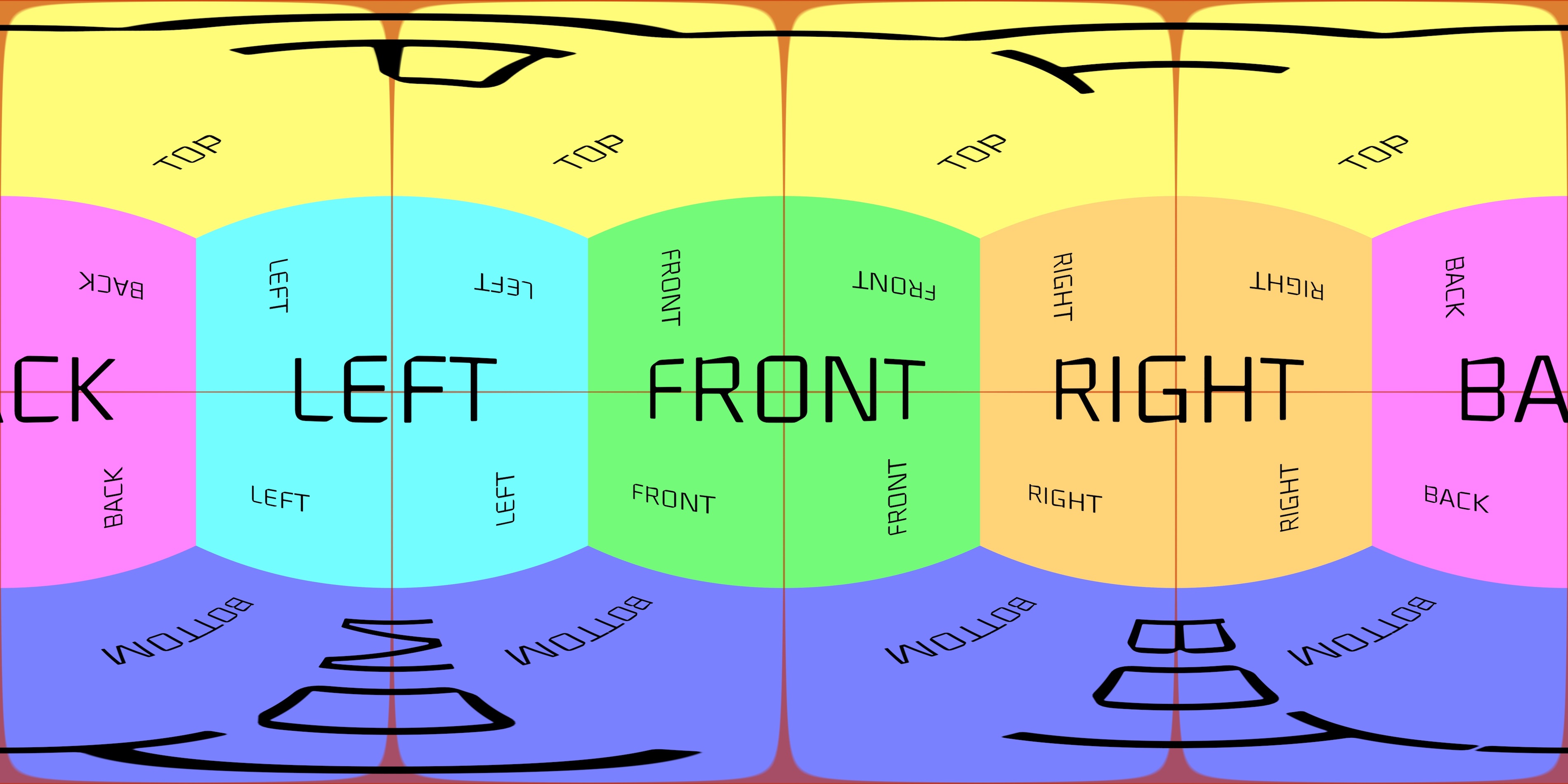
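On the question of what “lambda0” and “phi1” mean: in the standard inverse gnomonic equations they are the longitude and latitude (in radians) of the point on the sphere where the flat view touches it, i.e. the view centre. A minimal Python sketch under that reading follows. The function names and the [0, 1] normalisation are assumptions made for illustration, not code taken from the linked repository.

```python
import math

def inverse_gnomonic(x, y, lambda0=0.0, phi1=0.0):
    """Map a tangent-plane point (x, y) back to (lon, lat) in radians.

    lambda0, phi1: longitude and latitude of the view centre.
    x, y are in units of the sphere radius (x right, y up).
    """
    rho = math.hypot(x, y)
    if rho == 0.0:
        # The plane's origin is the view centre itself.
        return lambda0, phi1
    c = math.atan(rho)  # angular distance from the view centre
    sin_c, cos_c = math.sin(c), math.cos(c)
    s = cos_c * math.sin(phi1) + y * sin_c * math.cos(phi1) / rho
    lat = math.asin(max(-1.0, min(1.0, s)))  # clamp against rounding error
    lon = lambda0 + math.atan2(
        x * sin_c,
        rho * math.cos(phi1) * cos_c - y * math.sin(phi1) * sin_c,
    )
    return lon, lat

def lonlat_to_uv(lon, lat):
    """Normalise lon/lat to [0, 1] texture coordinates (assumed layout:
    u = 0.5 is longitude 0, v = 0.5 is the equator)."""
    u = (lon / math.pi + 1.0) / 2.0
    v = (lat / (math.pi / 2.0) + 1.0) / 2.0
    return u % 1.0, v  # wrap u so views crossing the seam stay in range
```

With `lambda0 = 0` and `phi1 = 0` the view is centred on the middle of the equirectangular image; changing `lambda0` pans the view and changing `phi1` tilts it up or down.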

as it turns out, Max 8.3 ships with an equirect to cubemap converter shader. I forgot to include an example patch for this, but here is one that uses it to draw a 360 video to a skybox.

there appears to be a bug that prevents scenes with skyboxes from drawing if there are no other gl objects, so there is a dummy object in there drawing with scale 0.

hope this helps.

Hi Rob, thanks for your reply!

I know there are other ways of mapping an equirectangular image to get a normal FOV (the easiest is probably to just render it as a texture on a sphere), but I'm trying to avoid a 3D space at this point. My project uses multiple 360° video streams, and putting all of them in a 3D space is a huge performance killer. That's actually what I have at the moment, but it runs at an unusable frame rate. I'm looking for a way to do this conversion purely with math equations, to get better performance.

The equation on the page I linked to should work, but I seem to be making a mistake somewhere along the way.

Any ideas where I could have made a mistake in my attempt?

Hey Jathi,

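I usually use these two functions to pass from spherical to equirectangular coordinates and back:

    // Constants assumed to be defined elsewhere in the original shader
    #define PI 3.14159265358979
    #define TWOPI 6.28318530717959

    // Direction (unit vector) -> equirectangular coordinates in [0, 1]
    vec2 dir2uv(vec3 v)
    {
        vec2 uv = vec2(atan(v.z, v.x), asin(v.y));
        uv *= vec2(-0.1591, 0.3183); // -1/(2*PI), 1/PI
        uv += 0.5;
        uv.y = 1. - uv.y;
        return uv;
    }

    // Equirectangular coordinates in [0, 1] -> direction (unit vector)
    vec3 uv2dir(vec2 uv)
    {
        float phi = (1. - uv.x - 0.5) * TWOPI;
        // Note: as written, uv2dir(dir2uv(v)) gives (v.x, -v.y, v.z);
        // use uv.y * PI here if an exact inverse is needed.
        float theta = (1. - uv.y) * PI;
        float sintheta = sin(theta);
        float x = cos(phi) * sintheta;
        float y = cos(theta);
        float z = sin(phi) * sintheta;
        return vec3(x, y, z);
    }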

This is GLSL code, but it's not much different from the jit.gl.pix codebox language.

"uv" contains values in the range [0, 1], while the components of "dir" are in [-1, 1].

The first function is exactly the one used in the shader that Rob posted

Hope this helps
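For checking values outside a shader, here is a minimal Python transcription of the two functions above. One assumption differs from the GLSL: `uv2dir` here uses `theta = v * pi`, so the pair round-trips exactly, whereas the GLSL version uses `(1. - uv.y) * PI`, which mirrors the y component on a round trip. Which variant is right depends on whether the texture's vertical axis is flipped in a given setup.

```python
import math

def dir2uv(d):
    """Unit direction (x, y, z) -> equirectangular (u, v) in [0, 1]."""
    x, y, z = d
    u = 0.5 - math.atan2(z, x) / (2.0 * math.pi)
    y = max(-1.0, min(1.0, y))           # guard asin against rounding error
    v = 1.0 - (math.asin(y) / math.pi + 0.5)
    return u, v

def uv2dir(uv):
    """Equirectangular (u, v) in [0, 1] -> unit direction (x, y, z).

    Assumption: theta = v * pi (not (1 - v) * pi as in the GLSL above),
    chosen so that uv2dir(dir2uv(d)) returns d.
    """
    u, v = uv
    phi = (0.5 - u) * 2.0 * math.pi      # longitude
    theta = v * math.pi                  # polar angle measured from +y
    sin_theta = math.sin(theta)
    return (math.cos(phi) * sin_theta,
            math.cos(theta),
            math.sin(phi) * sin_theta)
```

In this convention the direction (1, 0, 0) lands at the centre of the image, (0.5, 0.5), and +y maps to v = 0.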

aha, reading more closely I understand better what this technique is doing. I took a crack at porting the Python code in your link to Gen and it seems to work well. The leftmost texture input to jit.gl.pix is what sets the output dimensions. I got the best filtering results by using @mipmap linear on the movie input (bilinear and trilinear cause issues at the seam, not sure if there are ways to work around that).

this seems generally useful so I'll look at including something like this in a future update of Jitter Tools, but please let me know if you make any improvements.

wow, thank you both so much!

Matteo, I saw your reply in my e-mails and thought: awesome, I definitely have to try your code later. Then I saw that Rob had already made a working patch! Thank you so much, it works really well! Honestly, I hardly think I can improve your patch on my own. I have maybe 3-4 weeks of programming experience with Max at this point (and really not much more outside Max), so I'm really a beginner here.

The one thing I can think of that could be added to your patch is roll control. Then we would have a complete 360° viewer, similar to mapping the image to a 3D sphere and using a virtual camera to look around.

I have no idea how to do this efficiently, because I don't fully understand the code yet!

However, I was using this patch (https://cycling74.com/forums/manipulating-vr-source-video-without-changing-the-dimensions/replies/1#reply-58ed21fbb7244922ce26911c) from Graham Wakefield to rotate the equirectangular image before the conversion. It might be possible to merge them into one gen patch somehow. I will try that, and if it works I'll definitely post it here!

Hey Rob,

I managed to add rotation control to your Gen patch!

I think this is now quite useful. One could build a full 360° viewer, similar to the mobile YouTube app, without using a 3D environment.

Here is the updated patch:

Maybe this is useful to others as well.

Thanks again for adapting that equation!

Hi, sorry for hijacking this thread, but I was just looking for something closely related:

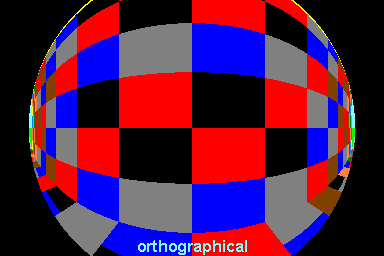

Would anyone have pointers on how to do the inverse projection, i.e. warp an image so that it can be projected via a fisheye lens onto a planetarium dome?

I think the image should have this shape:

Cheers!

Hey Diemo,

this wouldn’t really be the inverse projection, otherwise you could just reverse the equation. Your picture says “orthographical”, so I assume you need the equation for that type of map projection.

This seems to be it: https://en.wikipedia.org/wiki/Orthographic_map_projection

At first glance it doesn’t seem too different from the gnomonic projection in the patch: http://blog.nitishmutha.com/equirectangular/360degree/2017/06/12/How-to-project-Equirectangular-image-to-rectilinear-view.html

You could try to compare the two equations and change the patch accordingly.

I’m not sure, but it might work.
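To make the comparison concrete: in the standard inverse formulas for the two projections, the only difference is how the angular distance `c` from the view centre is obtained from the radius `rho` on the plane (`atan(rho)` for gnomonic, `asin(rho)` for orthographic). The latitude and longitude expressions are shared. A minimal Python sketch, with function and parameter names made up for illustration rather than taken from the patch:

```python
import math

def inverse_azimuthal(x, y, lambda0=0.0, phi1=0.0, projection="gnomonic"):
    """Map a plane point (x, y) to (lon, lat) in radians.

    Returns None for orthographic points outside the unit disc, which
    do not correspond to any point on the visible hemisphere.
    """
    rho = math.hypot(x, y)
    if rho == 0.0:
        return lambda0, phi1
    if projection == "gnomonic":
        c = math.atan(rho)
    elif projection == "orthographic":
        if rho > 1.0:
            return None
        c = math.asin(rho)
    else:
        raise ValueError("unknown projection: " + projection)
    sin_c, cos_c = math.sin(c), math.cos(c)
    s = cos_c * math.sin(phi1) + y * sin_c * math.cos(phi1) / rho
    lat = math.asin(max(-1.0, min(1.0, s)))  # clamp against rounding error
    lon = lambda0 + math.atan2(
        x * sin_c,
        rho * math.cos(phi1) * cos_c - y * math.sin(phi1) * sin_c,
    )
    return lon, lat
```

In the orthographic case the rim of the unit disc (`rho = 1`) corresponds to points 90° away from the centre, so a full hemisphere fits inside the circle, while the gnomonic case only reaches 45° at the same radius.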

If performance is not a big issue, you could also probably do what I tried to avoid and map it to a 3D sphere and record the result with a virtual camera.

Hey,

after I posted the updated patch a few days ago, I noticed that one thing still doesn't work as I need it to.

I’m trying to manipulate the equirectangular image with the rotation data from a VR headset. Basically, I’m trying to follow the view of the virtual camera (controlled by the VR headset) on a 2D texture, so that I can manipulate the texture in a certain way before mapping it into the 3D space that the VR headset actually sees. (That’s why I’m trying to avoid doing all of this in 3D space: the frame rate becomes quite unusable otherwise.)

I’ve connected the x and y axes to the center control and the z axis to the rotation control. At first glance it worked quite well, and it “tracks” the virtual camera in most situations. But after some testing I noticed that there is still an issue with the z axis (rotation). When looking up or down at the poles, manipulating rotation and longitude do the same thing, and manipulating the “z axis” to look sideways doesn't work. In fact, looking sideways doesn’t work properly anywhere except on the “equator”. This makes sense, because the way I implemented rotation in the gen patch just rotates the image; it cannot change the latitude. I’ve tried to find a workaround, but only made it worse. The answer might be in spherical trigonometry, but I can’t wrap my head around that...

As I wrote before, I’m quite new to programming and this is way out of my league! This forum has been immensely helpful so far, so maybe one of you is able to help again?

Here is an example patch to show the issue:

And two equirectangular images for testing:
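One possible way around the roll problem described above, sketched in Python rather than Gen, is to stop treating roll as a 2D image rotation. Instead, turn each output pixel into a 3D view ray, rotate that ray by the headset orientation (roll, then pitch, then yaw), and only then convert it to longitude and latitude. The axis conventions, rotation order, sign choices and function names below are all assumptions for illustration; a headset may report its orientation differently (for example as a quaternion), in which case the rotation step would need to change.

```python
import math

def roll_z(v, a):
    """Rotate about the viewing axis (+z)."""
    x, y, z = v
    c, s = math.cos(a), math.sin(a)
    return (c * x - s * y, s * x + c * y, z)

def pitch_x(v, a):
    """Rotate about +x; positive angles tilt the view up (assumed sign)."""
    x, y, z = v
    c, s = math.cos(a), math.sin(a)
    return (x, c * y + s * z, -s * y + c * z)

def yaw_y(v, a):
    """Rotate about +y; positive angles turn the view right (assumed sign)."""
    x, y, z = v
    c, s = math.cos(a), math.sin(a)
    return (c * x + s * z, y, -s * x + c * z)

def view_to_equirect_uv(u, v, yaw=0.0, pitch=0.0, roll=0.0,
                        hfov=math.pi / 2, aspect=1.0):
    """Output pixel (u, v in [0, 1]) -> equirectangular (u, v in [0, 1]).

    Camera model: looks down +z, x to the right, y up; v = 1 is the top.
    """
    half_w = math.tan(hfov / 2.0)
    half_h = half_w / aspect
    ray = ((2.0 * u - 1.0) * half_w, (2.0 * v - 1.0) * half_h, 1.0)
    ray = yaw_y(pitch_x(roll_z(ray, roll), pitch), yaw)
    x, y, z = ray
    lon = math.atan2(x, z)
    lat = math.atan2(y, math.hypot(x, z))  # no normalisation needed
    return (lon / (2.0 * math.pi) + 0.5) % 1.0, lat / math.pi + 0.5
```

Because the rotation happens on the ray and not on the image, roll changes the latitude of off-centre pixels even when the view is on the equator, and yaw and roll remain distinct rotations when looking towards a pole.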