Exporting / Storing & Reading back automations and data flow from Live

Hi dear C74 community, I'm working on a way to do offline rendering with Max as well.

(For reference, the thread about the Jitter part is here.)

As my visuals react to external data (sound analysis, MIDI triggers, MIDI CC curves), I have to find the best way to record (or export) all of it and to make my patch able to read it back while rendering offline.

Live handles the sound part here.

For automation and MIDI triggering, I have a few options in mind:

- exporting with a Max for Live device: you select the clip (or the track) whose data you want to store and it exports it. Actually, I don't see any benefit in this compared to exporting MIDI files (as far as I can imagine right now)

- exporting clips as MIDI files

- recording the data flow from Live into Max (maybe using the MuBu library)

For sound analysis, I just saw that the MuBu library can do it (and store the result) either in real time or offline. In my setup, I often use sound analysis on only a few tracks, so I think I can easily run it and store it.

The idea is: it has to be as accurate as possible. For the Jitter rendering, if I go to 60 fps, or even 120 fps when rendering in non-real time, I'd need at most 120 values read back per second... that is a low sampling rate. BUT if, for instance, a MIDI trigger occurs between two frame times instead of exactly on one, it wouldn't be taken into account. That would probably require some quantization, which could be messy to design.
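To make the quantization idea concrete, here is a minimal Python sketch (not a Max patch, and not tied to MuBu or to MIDI file parsing). It assumes the trigger times are already available in seconds; the function name `quantize_to_frames` and the example times are hypothetical. Each trigger is pushed to the first frame at or after it, so nothing falls between two frames.

```python
import math

def quantize_to_frames(event_times_s, fps):
    """Group event times (in seconds) by the first frame at or after each event."""
    frames = {}
    for t in event_times_s:
        # Small epsilon so an event sitting exactly on a frame stays on that frame
        # despite floating-point rounding.
        frame = math.ceil(t * fps - 1e-9)
        frames.setdefault(frame, []).append(t)
    return frames

# Hypothetical trigger times: the second one falls between frames 30 and 31 at 60 fps.
triggers = [0.0, 0.5083, 1.0]
by_frame = quantize_to_frames(triggers, fps=60)

for frame in sorted(by_frame):
    print(frame, by_frame[frame])
```

Rounding up rather than to the nearest frame is one possible choice: it means an event is never rendered before it actually happened, at the cost of up to one frame of delay.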

If some of you have experience with this, I'd be happy to read any feedback and to see any patches.

If I end up with something decent, I'll share it here.

Thanks and take care, Julien

So, I'm currently building a proof-of-concept patch to check the feasibility of the whole idea.

I made some progress on what to record.

Actually, I think I wouldn't record the raw data coming from Live (the causes) but the visual system's parameters (the consequences) instead.

That would solve the discrete vs. continuous problem.

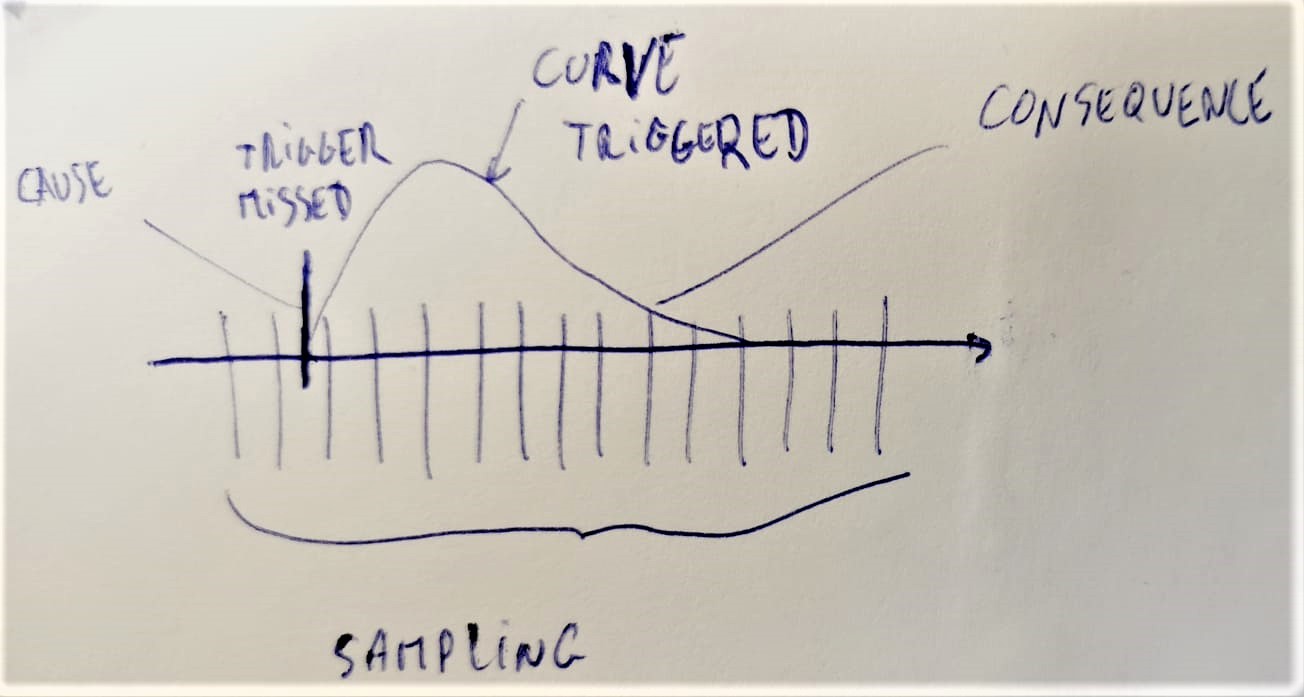

For instance, and this is what I was writing about in my previous post, I no longer want to store triggers, only their consequences. If my sampling rate is 60 Hz (for 60 fps in the end), I could miss a trigger with the first approach, but I wouldn't miss its consequence (except maybe the very start of it).
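A small Python sketch of that difference, under stated assumptions: the trigger time, the decay length and the linear `envelope` function are all hypothetical stand-ins for whatever the trigger drives in the real patch. Sampling the trigger itself at frame times misses it, while sampling the parameter it drives still captures the curve, minus its very first instant.

```python
FPS = 60
TRIGGER_TIME = 0.5083  # hypothetical trigger time, between frames 30 and 31
DECAY_S = 0.5          # hypothetical length of the envelope it starts

def envelope(t):
    """Parameter driven by the trigger: linear decay from 1 to 0 over DECAY_S."""
    dt = t - TRIGGER_TIME
    if dt < 0 or dt > DECAY_S:
        return 0.0
    return 1.0 - dt / DECAY_S

frame_times = [n / FPS for n in range(2 * FPS)]

# Recording the cause: the trigger only counts if it lands exactly on a frame time.
trigger_seen = any(t == TRIGGER_TIME for t in frame_times)

# Recording the consequence: one parameter value per frame.
recorded = [envelope(t) for t in frame_times]
first_active = next(n for n, v in enumerate(recorded) if v > 0)

print(trigger_seen, first_active, round(recorded[first_active], 3))
```

The first recorded value is slightly below the envelope's peak, which is the "very start" that gets lost at this sampling rate.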

Excuse my lo-fi schematic.

I just need to set this up now.

MuBu seems good for this

About the LFO thing you mentioned: if I record/store things as close as possible to the parameters (the consequences), I won't have to replace any LFO. I have the LFOs and the time-based stuff (line objects, line~ too, etc.), and they alter parameters. These parameters (in the scheduler domain of course, which means, as you mentioned, snapshotting them where control signals are involved) will be the ones recorded in MuBu.

Then, in the end, the idea would be to step through ALL the MuBu tracks, with all their stored curves, 60 steps per second of footage, and to render each frame at 8K for instance (so excited imagining this).
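The frame-by-frame readback could look roughly like this in Python. This is only a sketch of the logic, not MuBu's API or a Jitter patch: the `tracks` dictionary, the parameter names and the `(time, value)` curve format are assumptions, and each curve is assumed to have strictly increasing times. Every frame gets one interpolated value per recorded parameter, however long the actual render of that frame takes.

```python
from bisect import bisect_right

def value_at(curve, t):
    """Linearly interpolate a recorded curve [(time_s, value), ...] at time t."""
    times = [point[0] for point in curve]
    i = bisect_right(times, t)
    if i == 0:
        return curve[0][1]   # before the first point: hold the first value
    if i == len(curve):
        return curve[-1][1]  # at or after the last point: hold the last value
    (t0, v0), (t1, v1) = curve[i - 1], curve[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def frames(tracks, fps, duration_s):
    """Yield (frame_index, {parameter: value}) for every frame to render."""
    for n in range(int(round(duration_s * fps))):
        t = n / fps
        yield n, {name: value_at(curve, t) for name, curve in tracks.items()}

# Hypothetical recorded parameter curves.
tracks = {
    "brightness": [(0.0, 0.0), (1.0, 1.0)],
    "zoom": [(0.0, 2.0), (0.5, 4.0)],
}

rendered = list(frames(tracks, fps=60, duration_s=1.0))
print(len(rendered), rendered[30])
```

In the real setup, the body of the loop would set the patch parameters and trigger the render of one frame before moving on to the next step.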

Considering Rob's example there, I don't even know if I will use MuBu for recording and playing back my parameters. For offline (and real-time) sound analysis, MuBu could be nice, even if I have to store the result in the same format as in Rob's patch (jit.matrixset etc.).

But as far as I have tested, the system as Rob designed it works out of the box.

Could record minutes of things here...

My parameters come from Live (MIDI, OSC, etc.).

I now need to check it with GPU-heavy visuals. But it would obviously work.

I just have to figure out how I will manage all the visual contexts (I have one visual context per song). It means duplicating the setup for offline/online use and putting my (complex) patches directly inside the patcher named p PATCH-AND-PARAMETERS in the example. Then all parameters have to be connected, recorded, and played back.

I will try to post my results if they are interesting.