Jitter offline rendering: any pointers?

Hi dear C74 community,

I'm working on a way to do offline rendering with Max as well.

The underlying idea is to have a visuals patch with two modes:

- studio mode: a preview that is light on CPU/GPU and listens to the OSC/MIDI flow coming from outside; used for composing and tweaking

- offline recording mode: high quality, able to render frame by frame

As my visuals react to some external data (sound analysis, MIDI triggers, MIDI CC curves), I have to find a way to record (or export) all of it and make my patch able to read it back while rendering offline.

Even though I'm still working on that part and still have at least two or three options for designing it, this thread is more about Jitter offline rendering itself.

Could someone point me in the right direction with this?

I'm opening another thread for the part about storing/reading back data flows.

(For reference, this is the thread for the Jitter part.)

Thanks and take care,

Julien

I provide a proof-of-concept patch for non-realtime recording in the best practices 2 article; scroll down to the final section.

Oh no... I'm so sorry I missed it.

I'll check it out and test it.

It would be combined with MuBu for reading things back. I'm just worried about the line object and interpolated values, which are not stored in an array.

I'm also worried about the sampling rate of my parameters, for example missing some fast triggers.
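To make the concern about fast triggers concrete, here is a minimal Python sketch (not Max code, and not how MuBu stores data). It assumes the triggers were recorded as timestamped events rather than sampled at a fixed rate; the function name `bin_events_by_frame` and the event format are hypothetical. Grouping events by the frame they fall into means that two triggers only a few milliseconds apart both survive, even if they land in the same frame.

```python
from collections import defaultdict

def bin_events_by_frame(events, fps):
    """Group (time_ms, payload) events under the index of the frame they fall into."""
    frames = defaultdict(list)
    for time_ms, payload in events:
        # Frame index = floor(time in seconds * frames per second).
        frame_index = int(time_ms * fps // 1000)
        frames[frame_index].append(payload)
    return dict(frames)

# Hypothetical recording: two triggers 5 ms apart, then a later one.
recorded = [(100.0, "kick"), (105.0, "snare"), (500.0, "kick")]
by_frame = bin_events_by_frame(recorded, fps=30)
print(by_frame)
```

With this layout the offline renderer asks "which events belong to frame N?" instead of polling a parameter at a fixed interval, so nothing is dropped between polls.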

the most basic approach is to avoid "live" scheduler timing and audio rate.

so in a first step, things like "audio analysis" would have to be rendered to control rate. (think of it like implementing a "freeze" function for audio signals)

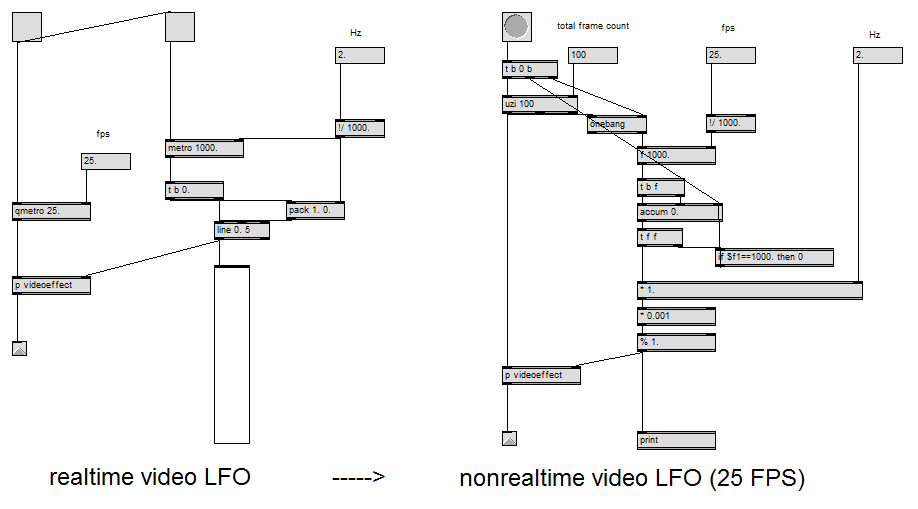
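As a rough illustration of "rendering audio analysis to control rate", the Python sketch below reduces an audio buffer to one RMS value per video frame. It is not Max code; the function name `rms_per_frame`, the choice of RMS as the analysis, and the 48 kHz / 25 fps figures are assumptions made for the example.

```python
import math

def rms_per_frame(samples, sample_rate, fps):
    """Return one RMS value per video frame for a list of audio samples."""
    samples_per_frame = sample_rate / fps
    n_frames = int(len(samples) / samples_per_frame)
    values = []
    for i in range(n_frames):
        # Slice out the audio that plays during video frame i.
        start = int(round(i * samples_per_frame))
        end = int(round((i + 1) * samples_per_frame))
        chunk = samples[start:end]
        values.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
    return values

# Assumed test signal: one second of a full-scale 440 Hz sine at 48 kHz.
sr = 48000
sine = [math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]
envelope = rms_per_frame(sine, sr, fps=25)
print(len(envelope), envelope[0])
```

The resulting list can be stored once and read back by frame index, so the offline render never depends on the audio engine running in real time.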

in a next step everything at data rate has to be timestamped and/or quantized to frame rate, be it in milliseconds or in frame numbers. (for example, if you normally use a free-running LFO, this LFO would need an additional nonrealtime mode where it calculates its output values based on frames.) (if you think this is too much work, you could also just record all the modulation stuff live once with some kind of data player, based on seq or whatever)
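The frame-based LFO idea can be sketched in a few lines of Python (again, not Max code; `lfo_value` and its parameters are hypothetical names). Instead of free-running against the clock, the LFO is a pure function of the frame number, so frame N always produces the same value no matter how long rendering takes.

```python
import math

def lfo_value(frame_index, fps, rate_hz, phase=0.0):
    """Sine LFO evaluated at the logical time of a given frame."""
    t = frame_index / fps  # logical time in seconds, derived from the frame number
    return math.sin(2 * math.pi * (rate_hz * t + phase))

# Two seconds of a 0.5 Hz LFO at 30 fps (values assumed for the example).
values = [lfo_value(f, fps=30, rate_hz=0.5) for f in range(61)]
print(values[15])
```

In realtime mode the same function could be fed a frame index derived from elapsed time, which keeps the two modes consistent with each other.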

now you only have to replace qmetro with uzi* - and your whole patch runs in a "video nonrealtime mode".

that is the moment where you might want to switch from 400*800 to 8k before you hit record. :)

*) no, you don't have to; you can also keep qmetro because most of the time it will be slower than realtime anyway.
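The point about qmetro versus uzi comes down to where time comes from. A minimal Python sketch of a nonrealtime loop, assuming a hypothetical `render_frame` callback that stands in for the actual drawing and recording, might look like this:

```python
def render_offline(n_frames, fps, render_frame):
    """Call render_frame(frame_index, time_ms) once per frame, as fast as it can run."""
    outputs = []
    for frame_index in range(n_frames):
        # Logical time comes from the frame counter, never from the wall clock.
        time_ms = frame_index * 1000.0 / fps
        outputs.append(render_frame(frame_index, time_ms))
    return outputs

def fake_render(frame_index, time_ms):
    # Placeholder for the real work (drawing and writing the frame to disk).
    return (frame_index, round(time_ms, 3))

frames = render_offline(4, fps=25, render_frame=fake_render)
print(frames)
```

Whether each iteration takes 1 ms or 10 seconds, the logical timestamps handed to the patch stay the same, which is why the driving object matters less than making everything downstream depend on the frame count.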

Actually, that's not quite the approach I'd take regarding uzi or qmetro.

I'm currently developing a proof-of-concept patch to check the feasibility of the whole idea.

To keep everything in one place, I'll switch to my other thread to explain a bit more:

https://cycling74.com/forums/exporting-storing-reading-back-automations-and-data-flow-from-live