Offline rendering, frame-by-frame and high-quality video production with Max?

Hi there,

I hope you are all doing fine in such a difficult context.

Here is a special thread I needed to open, and I hope it will spark more discussion and ideas about the topic.

I know there are already some threads about this; some are very old, and some cover only part of what I have in mind, so I hope you won't mind this new one.

I have been programming with Max for around 20 years.

I have used it for almost everything: sound production, sequence generation, spatialization, binaural rendering from ambisonics, concatenative and granular synthesis, linking sound with visuals, video players using time fragmentation, 3D rendering driven by sound features analyzed in real time, making Ableton Live generative and non-linear, data sonification, and everything we "all" do with it.

More and more, I need to render video at high resolution and high frame rates.

Until 2018, it was fine to use a laptop for this: capturing in real time with Syphon and Syphon Recorder was good enough, as long as I had optimized my patch. From my project FRGMENTS (using pre-recorded HAP Q video, processed, distorted and melted in real time) to STRUCTURE (using meshes combined with DepthKit material) and so on, it worked fine. I'd say it was enough.

More and more, I want to pre-render.

But like many people (a bunch of my students need this too, as do artists I collaborate with), I need to pre-render according to sound features, logic or other bits of _things altering the rendering_.

A short example to illustrate my point:

I have a patch that renders a basic 3D mesh.

I have a routine that captures audio and MIDI and alters the shape.

I want to capture the rendering, frame by frame.

Then, for an installation or a live performance (supposing I'm not altering the sequence of the whole song), I play the video with Max and alter it in real time: glitching it, running shaders on it on the GPU, etc.

It is cheaper on GPU/CPU during the live performance, and I can have the quality I want (in this case, I use the HAP codec and it works well).

Would you have guidelines for this?

Some strategies would be:

render all the audio first and analyze it offline, pre-storing curves for loudness, noisiness and every sound descriptor I want to use to alter the visuals

convert MIDI triggers into a data structure in Max, to use it later at offline recording time

bind all my visual parameters first, and pre-record their curves directly

The idea would be to record data, and to have a system able to render each frame by reading the pre-recorded data one frame at a time.
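To make the first strategy concrete, here is a minimal sketch in Python (not Max) of pre-storing a loudness curve with one value per video frame. It assumes the audio is already available as a plain list of float samples; the function name `rms_per_frame` and the choice of RMS as the loudness measure are mine, purely for illustration.

```python
import math

def rms_per_frame(samples, sample_rate, fps):
    """Return one RMS loudness value per video frame.

    Frame i covers the samples from i * sample_rate // fps up to
    (but not including) (i + 1) * sample_rate // fps.
    sample_rate and fps are assumed to be positive integers.
    """
    # Integer ceiling of len(samples) * fps / sample_rate.
    n_frames = -(-len(samples) * fps // sample_rate)
    curve = []
    for i in range(n_frames):
        start = i * sample_rate // fps
        end = min((i + 1) * sample_rate // fps, len(samples))
        window = samples[start:end]
        curve.append(math.sqrt(sum(s * s for s in window) / len(window)))
    return curve

# 8 samples at 8 Hz rendered at 2 fps: two frames of 4 samples each.
curve = rms_per_frame([1.0] * 4 + [0.0] * 4, sample_rate=8, fps=2)
```

At render time, frame number `i` simply reads `curve[i]`, so the result no longer depends on how long each frame takes to draw.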

I have seen some patches for this, but none of them is really usable as is.

So I'm trying to figure out how to build mine and to share it with the world.

TouchDesigner has frame-by-frame options, as far as I know, as do Houdini and other rendering frameworks.

Basically, the idea would be: using timelines (I know this is the name in TD)

A timeline would describe the evolution of all visual parameters. These could move by themselves (if there is inner logic in Max for it, like an oscillation that does not depend on external parameters) or be driven by parameters coming from the sound analysis itself.

Ultimately, the idea would be to have a kind of framework, perhaps libraries or packages, to help people do this very easily. I mean:

You have a song (sound rendering, MIDI sequences).

You have a patch generating visuals, and you have/declare 6 parameters, for instance. You compose, play with it.

Then you decide to let the first 2 parameters evolve by themselves, and to map each of the 4 others to the loudness of the song, the noisiness, or this or that sound descriptor.

Then you load all the curves, you press record, and it does the job, even rendering at a very low framerate, but at high resolution and exporting a high-framerate video.

Of course, I'd like to explore all possible ideas even going outside of Max.

But I'd like to keep using Max, as I know how to build weird logic with it for playing video, altering things and more.

I even thought about (and started) creating a loudness exporter (built upon MuBu) that would write a file I could import into Final Cut or other software to control a parameter of the video. That only applies when I use a pre-recorded video, but it would work.

I'm interested in all ideas, suggestions, criticism and more.

Best regards,

Julien B.

Julien, thanks for opening this thread.

When it comes to the data recording, it might work to use the recently updated [mtr] object, maybe adding on the input(s) a kind of [speedlim] at the framerate you target (no need to record more data than what's going to be useful to render).

To read the data in [mtr], it might be a little tricky: AFAIK, you can't tell [mtr] "output all event from absolute-time 236 to absolute time 250". One option would be to play back in short incremental bursts (play/stop for each frame, deciding when to stop by looking at the play point in the info dictionary). I don't like this idea very much. Another option is to build a little machine using the 'next' message and the information it gives. The output given on 'next' includes the absolute time of the event, so for each video frame you could step through all the corresponding events.
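The "little machine" could look roughly like this, sketched in Python rather than as a patch. It does not reproduce the actual [mtr] output format: it only assumes that each recorded event can be reduced to a hypothetical `(time_ms, value)` pair, and the class name `FrameStepper` is made up for the example.

```python
class FrameStepper:
    """Step through timestamped events one video frame at a time."""

    def __init__(self, events, fps):
        # events: iterable of (time_ms, value) pairs, an assumed format.
        self.events = sorted(events, key=lambda e: e[0])
        self.fps = fps
        self.cursor = 0  # index of the next event not yet delivered
        self.frame = 0   # index of the frame about to be rendered

    def step(self):
        """Return the values of all events in the current frame, then advance."""
        due = []
        # An event at time t belongs to this frame while
        # t < (frame + 1) * 1000 / fps, written here without division.
        while (self.cursor < len(self.events)
               and self.events[self.cursor][0] * self.fps < (self.frame + 1) * 1000):
            due.append(self.events[self.cursor][1])
            self.cursor += 1
        self.frame += 1
        return due

stepper = FrameStepper([(0, "a"), (10, "b"), (45, "c"), (130, "d")], fps=25)
first_frame = stepper.step()  # events between 0 and 40 ms
```

Calling `step()` once per rendered frame plays the role of sending 'next' repeatedly until the reported absolute time passes the end of the frame.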

I think it would be very nice indeed to have some kind of package that easily allows this non-realtime rendering and control of a timeline. I think the jit.mo objects (.time, .sin, .perlin, .line etc...) are already quite useful since they are linked to the world and you can control their speed, but maybe a set of objects that are always synced to the rendering frames (and not the real time interval) could be included, like what [bline] does as an alternative to [line] and [line~]. At the moment I usually make some kind of [coll] construction where I use a framecount (renderbang > counter) to store controller data (or any data) at an index; then, when capturing in non-realtime, I just use that exact framecount to retrieve the data from the coll and distribute it to the parameters. This could also be a way to extract audio amplitude with a [snapshot~], or other descriptors. I have not used TouchDesigner, so I'm not sure how they go about implementing this timeline, and especially how the environment switches between (or combines) a fixed timeline and a non-linear one.
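For readers who think better in text than in patch cords, the framecount-indexed storage described above could be sketched like this in Python. It is only an analogy to the [coll] construction, not a description of the actual patch; `FrameStore` and its methods are hypothetical names, and holding the last known value for frames without new data is my assumption.

```python
class FrameStore:
    """Per-frame parameter storage, loosely analogous to a [coll] indexed by frame count."""

    def __init__(self):
        self.frames = {}  # frame index -> {parameter name: value}

    def record(self, frame, name, value):
        """Store a value for a parameter at the given frame index."""
        self.frames.setdefault(frame, {})[name] = value

    def playback(self, n_frames):
        """Yield (frame, state) pairs, holding the last known value of each parameter."""
        state = {}
        for frame in range(n_frames):
            state.update(self.frames.get(frame, {}))
            yield frame, dict(state)

store = FrameStore()
store.record(0, "zoom", 1.0)
store.record(1, "hue", 0.5)
store.record(2, "zoom", 2.0)
states = list(store.playback(3))
```

During the non-realtime capture, each rendered frame would pull its state from `playback` instead of from live controllers.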

If Ableton and Cycling '74 expanded the LOM to give access to automation curves, I think that would be the most interesting strategy to pursue. The biggest missing feature, in my opinion, is this one: https://cycling74.com/forums/set-keyframes-from-m4l-device .

So, if working with Ableton Live and Max is an option for you, I would recommend using Max for Live and Max communicating via [udpsend] and [udpreceive] for parameter recording, playback and MIDI. Rob Ramirez shared some building blocks for sync here https://cycling74.com/forums/key-frame-synchautomation-in-jitter/replies/1#reply-5faed96327699f6309877ba9.

But as Ableton Live already consumes too much of my GPU resources, and running Live and Max on two different computers for offline rendering was not an option for me, I looked for a solution inside Max. I found that the parameter recorder from Best Practices in Jitter, Part 2 - Recording contains some interesting functionality, but it only works for float values. When [mtr] was refurbished I had high hopes, but it turned out to be tailored to a totally different use case, so it misses a lot of features that are required in big patches, like compatibility with [pattrstorage @subscribemode 1]; the rest is summarised here

By the way the recommendation from JEAN-FRANCOIS CHARLES

you can't tell [mtr] "output all event from absolute-time 236 to absolute time 250" ... increment short bursts of playback

is implemented as a sketch here

https://cycling74.com/forums/-sharing-looping-gestures-with-mtr/replies/1#reply-5fa1d534cf19d0282883484b and I can say it works.

My actual problem is that I sometimes get stutter in my recordings with [mtr], although the patch is optimised. With camera movements especially, this issue is a real show stopper, and it was one reason why I started with TouchDesigner.

But I am still trying to find a solution inside Max. My latest approach is this https://cycling74.com/forums/-feature-request-mtr-binding-to-mc-function-for-display-and-editing-of-event-data/replies/1#reply-5fbedf57849b076a4f3d3e5b in connection with some data filtering of the recorded curves. I use [mc.function] for playback, because I prefer to have interpolated output, which is currently not an option for [mtr].
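To illustrate what "interpolated output" buys you over replaying discrete events, here is a minimal Python sketch of linear interpolation between recorded breakpoints. It is not how [mc.function] is implemented; the `(x, y)` breakpoint list and the function name `interpolate` are assumptions made for the example.

```python
from bisect import bisect_right

def interpolate(breakpoints, x):
    """Linearly interpolate a list of (x, y) breakpoints, sorted by x, at position x."""
    xs = [p[0] for p in breakpoints]
    # Outside the recorded range, hold the first or last value.
    if x <= xs[0]:
        return breakpoints[0][1]
    if x >= xs[-1]:
        return breakpoints[-1][1]
    i = bisect_right(xs, x)  # xs[i - 1] <= x < xs[i]
    (x0, y0), (x1, y1) = breakpoints[i - 1], breakpoints[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

# A camera parameter recorded at three points in time (milliseconds).
curve = [(0, 0.0), (10, 1.0), (20, 0.0)]
value = interpolate(curve, 5)
```

Because any frame time can be queried, the same recorded curve can be rendered at 25, 60 or 120 fps without stepping artefacts between recorded events.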

Conclusion for my work is that Max currently doesn't offer sufficient features on the level of objects to build a viable timeline editor. Furthermore recording messages via patch cords to [mtr] appears to be a bad architecture choice for timing critical processes. All of this might work if you have sufficient "distraction" in your visual content, but if you need smooth camera movements for calm scenes and tight audio to visual correlation you might have a hard time with [mtr] - not sure if this gets better if the @bindto feature is bug free.

Thanks for the links to those other topics, Martin! Your patches so far seem very promising, but I do also see the obstacles you mention in your last post. I haven't had time to look at the new [mtr] functionality and how it can work together with my Jitter patches, so I am keen on giving that a go over the Christmas break! I would prefer native Max support over support via the LOM though.

like i always say, in best case you build everything from the beginning on in a manner which allows offline rendering with one click, you know like a "cubase" or "after effects" program does it.

to do so you would build everything "time" to run at integer frame values.

i know that it can be a bit against the usual way of working creatively with max and jitter, and it can take quite some work to adapt even simple processes like a metro-to-drunk object to that kind of process, but if rendering happens regularly for you it might be worth it.

the bigger problem is when you need to use other modulators running at audio rate in addition. then i am clueless.

btw., aren´t there a lot of jitter externs which also needed to be adapted when the matrix dimensions change? that is quite a similar, if not more complicated issue.

First of all, thanks a lot for the numerous comments and ideas, and so quickly. I hope we can build something that extends the use of Max further.

Of course, I know we are at the border here between generative / on-the-fly work and timelines (written things). I think, considering how CPUs and GPUs evolve, everything pushes us to buy new, bigger machines, and even for small operations we need MORE. Philosophically speaking, this really influences and alters my way of creating, in that I want to free myself from this pressure.

I know we can use Blender, C4D, Houdini/TD etc. and actually, yes: we could import (one way or another) our automation curves (our timelines) into them. We could render videos, play them live and possibly alter them live with fragment shader operations only (brcosa, feedback delays on the GPU, etc.), which wouldn't cost a lot because the whole rendering part would have been done beforehand.

So that could make this post already obsolete or beside the point.

But we also all develop many things in Max, our small routines for injecting behaviors and adaptive things, including sound analysis and more, and we all want to keep developing with it.

Extending it to these new territories, in my opinion, would be a game changing thing. I'm actually dreaming of C74 working with us for that.

This was my first other way of thinking.

Then, in another post, answer/discussion :-)

We just had some related discussion lately here

https://cycling74.com/forums/key-frame-synchautomation-in-jitter

I just automated a whole project with Ableton, with about 200 automation curves. You need a steady fps, so you fix it at a minimum value that you know the patch can always sustain. But it's working well. I actually love that setup.

In that thread, Rob also came up with an amxd utility device based on jit.time to control data flow in the world. It works great, but with the same constraint: the fps has to be constant. At least in that case you don't need to report the frame number from Ableton to the world; it follows the flow.

Either in TD or in Max, the problem is syncing two media that each have their own clock. In TD you basically import your wave file and put keyframes on this reference. The time reference problem remains pretty much the same if you have fps drops, as the sound will keep on playing, but at least TD offers an already packaged keyframe solution, which would also be super helpful in Max. I remember some cool threads about that in the FB forum.

How to proceed with this topic?

...we all want to keep developing with ... [Max]. Extending it to these new territories, in my opinion, would be a game changing thing. I'm actually dreaming of C74 working with us for that.

I totally agree, Julien.

David Zicarelli responded to my posts regarding a keyframe editor based on [mtr] and [mc.function]

Our basic sentiment is that what you're trying to do is worthwhile, but supporting it in a comprehensive way represents a major effort. We're in the very early stages of evaluating how we want to approach such a project. So at the very least we appreciate your trying to push the envelope here; it is having an influence on our long-term thinking.

If I were in the position of Cycling's development team, which has to keep Max running in a future full of architecture changes (OpenGL -> Vulkan, Metal; Windows, Mac Intel, Apple Silicon), I would ask how many users would benefit from new features such as the ones this thread is about, and what the essence of these feature requests is.

Coming from a systems engineering background, I would guess it would take at least 300 man hours of discussion and prototyping to sufficiently describe all front end features (attributes, messages of objects, UI features, relationships to the rest of Max, e.g. the pattrstorage system, transport, Jitter, MSP, M4L).

Regarding the back end, I have no idea what it would involve.

Another thing is that Max just appears to fall behind TouchDesigner and similar programs like Notch if you are mainly interested in visual content creation and the audio to visual correlation is only based on, let's say, transient matching of both domains, which is easily achieved with envelope followers. If furthermore we are talking about offline rendering I also would prefer Houdini over TouchDesigner or Max in many cases, just because of the bigger variety of features.

With that in mind I suppose there is a certain development risk for Cycling '74.

So what would be the minimum viable solution or minimum set of features that are required for the offline rendering?

I find this super interesting, but a spontaneous question comes to my mind:

Why do this with Max?

It's clearly the wrong tool for offline rendering; it lacks so many features in that direction that trying to use it for that goal feels like swimming upstream.

The most sensible approach could be to produce your renderings in software that is made for that, and then modify them with shaders and so on in Max in real time.

Why use a hammer to drive a screw?

(I'm sorry, I had some personal issues, now resolved, which slowed me down yesterday. Coming back fast.)

@federico, I won't debate. We know other tools, we know Max, and the path that led me here is not "just a one-minute thought". So answering a question with "there is no question" is not really useful, imho.

I'll answer in more detail a bit later, asap.

@yaniki, thanks for this. But the purpose here is more about finding global approaches, not "just" rendering a video frame by frame, which is pretty straightforward indeed. I mean: rendering a whole OpenGL scene frame by frame, for instance.

@Julien

do you know the saying: "if you haven't got anything interesting to say then don't say anything"?

I'm so bad at it 😅

It probably comes from the personal frustration I've been having with Max recently.

Using other software made explicitly for visuals is so much faster and easier that I'm having a kind of midlife crisis in my Max marriage.

That said, I'm really curious to see if there are viable strategies for the purpose. I'll go back into the shadows and keep reading.

@Federico, I wrote what I wrote, no more, no less.

Thank you Julien for bringing this up in such detail.

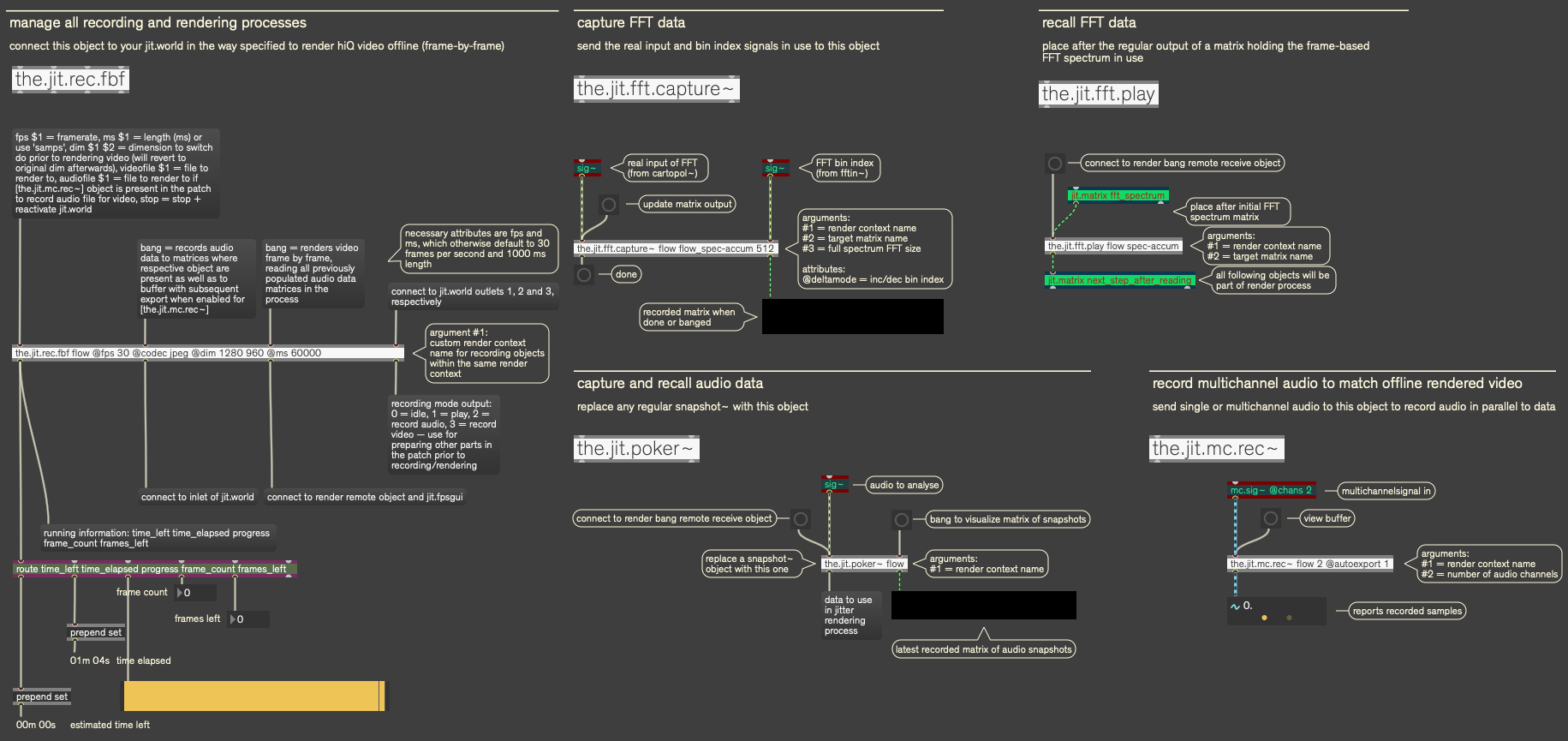

This post has kept me occupied over the past few days, and since I was (and somewhat still am) in need of a solid solution within a short time frame, I did in fact come up with a set of abstractions that made a tight, high-quality, offline, frame-by-frame rendering process amazingly intuitive and effective. There are numerous drawbacks to any such setup, e.g. applying it on the fly to other patches that were initially set up without offline rendering on the radar. Of course, the parts of a patch to work on here are those where audio is translated to data or matrices and vice versa. Generic solutions as such can only be kept under continuous development until those translation hubs (like snapshot~, jit.poke~ etc.) perhaps provide support for internal storage that can be recalled offline without realtime DSP, based on the desired framerate for the final product. Sir Zicarelli has dropped a few lines about the C74 take on this topic (thank you for sharing). What I am using as of now is an imitation of such storage/recall logic, based on incrementing frames, similar to Timo's strategy.

A central abstraction manages the timed transfer from audio to linear matrix data (replacing the snapshot~ objects entirely for the recording process, given that they caused crashes throughout when writing to coll objects, for instance). Synchronizing a running FFT analysis required some extra nesting with layered poly~ objects to make up for timing inaccuracies between spectrum size and the desired framerate. The abstraction also manages the recording of the corresponding multichannel audio to accompany the video rendering 1:1, and uses the pre-recorded matrix data, instead of the previously used audio, to finally control the video rendering process.

This has worked pretty well here (talking of generative, audio-based 4K output) and almost all patches I applied it to have yielded the desired results. However, I found there to be many limitations (in general) for which I would love to find solutions myself. So far, these are mainly:

• timing inaccuracies caused by objects of the jit.mo family, which seem to behave differently when rendering offline, while manual banging with @automatic 0 is not supported by the objects I tested (jit.mo.func, jit.mo.join)

• objects like [clocker] will have to be replaced by [accum 0.] to yield tight and logical results

• [snapshot~] > [coll] causes crashes during rendering, I use it now for regular performance but the abstraction bypasses it during recording and playback for rendering

• a huge challenge to face is finding a generic strategy to replace complex [jit.poke~] logic where matrices are populated in more than one dimension for audio-reactivity or generative animation. I have not yet found a solution to this and while perhaps for one setup a way-to-go is thinkable it may not be applicable to any other environment or rendering chain. I'd be extremely happy to learn about someone's experiences or listen to some advice here.
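The [clocker] versus [accum 0.] point in the list above comes down to deriving time from the frame count instead of from the wall clock. A minimal Python sketch of that idea follows; `FrameClock` and `lfo` are hypothetical names, and computing the time from the integer frame index (rather than summing floats) is my own choice to avoid accumulated rounding error.

```python
import math

class FrameClock:
    """Time source that only advances when a frame is rendered."""

    def __init__(self, fps):
        self.fps = fps
        self.frame = 0

    def tick(self):
        """Return the time in ms of the current frame, then advance by one frame."""
        time_ms = self.frame * 1000.0 / self.fps
        self.frame += 1
        return time_ms

def lfo(time_ms, freq_hz):
    """A sine oscillator driven by frame time instead of real time."""
    return math.sin(2.0 * math.pi * freq_hz * time_ms / 1000.0)

clock = FrameClock(fps=25)
values = [lfo(clock.tick(), freq_hz=1.0) for _ in range(3)]
```

However long a frame takes to render, frame `n` always sees the time `n * 1000 / fps`, so autonomous modulations come out identical in realtime and offline passes.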

Given the short time so far, I haven't tested or stumbled upon other limitations yet. Maintaining objects to apply and record automation data like [table], [function] and the likes shan't be much of a limitation as one could specify the domain and recording loop centrally. Talking of [mtr] it seems tailor-made for step-by-step recalling of data (a simple 'next' message suffices) and when data is continuously stored in all of its inlets tight timing should be doable, in theory.

And yes, I agree Federico :), talking of the results in mind and when starting out from scratch, using Max/Jitter will hardly be the environment of choice (totally agree), however, this topic is valid inasmuch as it discusses the possible options to render complex/generative/audioreactive video built in Max which cannot be easily translated to other environments 1:1 — in such case I'd be equally interested to learn about such ways (presumably a topic of similar scope)!

For those still interested in this topic, I'd be curious about a list of objects which basically need a substitution or replacement to make them compatible with offline rendering … off the top of my head these are really only snapshot~ and jit.poke~, along with the timing objects from jit.mo, clocker … (at least when rendering audio and video separately).

what else? experiences?

This is what I'm going with since you brought this up. Had hoped there is a simpler solution to offline-rendering but the complexity of FFTs and envelope following for long sequences seems to demand an equally complex setup.

hey everyone, just popping in to say we appreciate the dialog here. As DZ said in the other thread "We're in the very early stages of evaluating how we want to approach such a project." I'll be keeping my eye on any convos that pop up related to the many different aspects of this problem space. Hopefully it is no surprise that a proper "max-centric" solution is a massive undertaking, and I think Fede's question of "why Max" is valid.

that being said, I do believe custom solutions are possible with the currently existing tools, and I'll be happy to help out with any community driven efforts. My recommendation would be to study the parameter-record/offline-render prototype in the recording best practices article. I would also encourage some exploration of existing node-js tools that might be adapted into a max solution.

@Oni, very interesting abstractions. Are you planning on sharing those on github or some other platform? I would surely like to have a look and maybe give them a try.

@Federico. I think you raise a valid point: why Max? But I also think that just because it is not easily possible with Max at the moment doesn't mean it shouldn't be part of the environment in the future, and it also doesn't mean we should switch to different platforms just for rendering. It is clear that the Max programming paradigm is very much about real-time, generative, non-linear processes, and this is what I love about Max, and when I'm running an installation I don't really mind running it in 720p if my graphics card can't handle more. But when I want to publish a video of the installation or a "how-it-would-be-live" performance, it would be great to have the non-realtime rendering opportunity. Also because not every user has an expensive graphics card at their disposal, and non-realtime rendering would basically allow everyone to capture their works at high quality; it just takes more time.

Brings me back to the days when there used to be the timeline and related objects in Max. I always thought it was a great concept but it never quite worked as well as intended. I suspect the integration of a timeline back into Max in a way that would be robust would be a pretty massive endeavor.

I can't disagree with Testase.

whether it is rendered offline or not, the question for me is only about the means of writing timelined "real-time generative" content in an environment dedicated to real-time generative work.

however much i like purely generative things that play on their own, i also personally find a lot of interest in content that is written, meaning something evolves within a 'score', like a storyboard. the two are not antagonistic.

I found some kind of happiness with the ableton bridge for now, but gee, it's a super tedious effort, because you spend a lot of unproductive time exposing parameters and managing osc messages. Then you get access to the automation level, which other software also offers, of course.

Max would gain immensely from such an add-on.

@Timo that's actually a very nice use-case which I didn't think about!

Although it's not the primary focus of Max I surely agree that would be great to have the option

"So what would be the minimum viable solution or minimum set of features that are required for the offline rendering?"

probably a major change in the runtime and most of the externals, i am afraid, with unforeseeable impacts on other potential situations for users.

currently in max it is not possible to run "any" patch in nonrealtime audio and have datarate - including overdrive - do the same.

all you can do is to build your patch in a way that everything data is triggered by audio - or by video frames for that matter - and not use metro or pipe and not read files from disk or give visual feedback in a meter.

one simple solution would be to have the whole runtime use another time than the normal system time, so then you wouldnt run things in "nonrealtime" but a "slower realtime".

so you would have an option, let's say, similar to up- and downsampling in poly~, where you tell max to run at 1/16 of the normal speed. then you check whether that is sufficient to make the video bigger, and if still not, you can choose 1/64 systemtime.

this global time would automatically affect everything, including the samplingrate (audio output would have to be muted then or you had to deselect the IO driver)
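As a thought experiment only (Max has no such switch today, as the post itself says), the "slower realtime" idea amounts to every timing object reading a scaled clock instead of the system clock. A tiny Python sketch, where `ScaledClock` and its `rate` parameter are hypothetical:

```python
import time

class ScaledClock:
    """Clock that runs at a fixed fraction of the underlying time source."""

    def __init__(self, rate, source=time.monotonic):
        self.rate = rate      # e.g. 1 / 16 means 16x slower than real time
        self.source = source  # function returning seconds; injectable for testing
        self.start = source()

    def now(self):
        """Seconds of scaled ("patch") time elapsed since the clock was created."""
        return (self.source() - self.start) * self.rate

clock = ScaledClock(rate=1 / 16)
elapsed = clock.now()  # close to 0.0 right after creation
```

With `rate=1/16`, sixteen seconds of wall-clock time correspond to one second of patch time, which gives the renderer sixteen times longer to produce each frame.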

I don't know if this has any applicability here but I have often wished for better interoperability between the setclock object, the transport, and some of the related objects (e.g. when).

one simple solution would be to have the whole runtime use another time than the normal system time, so then you wouldnt run things in "nonrealtime" but a "slower realtime".

@Roman

I very much like the idea of a decelerated operation mode of Max! This would likewise have to include a proportional recalculation of everything frequency-based though, whether by default, or manually.

Are you planning on sharing those on github or some other platform?

@Timo

Yes, surely I can share them! I'll give them a thorough test over the upcoming week and see how I can compile a useful bundle. They are indeed as recent as this post…

I'll drop you a line once I reckon they can be operated intuitively.

Hi there,

sorry for my low answering speed, but we got some issues here. All (almost) ok now.

The idea of slowing down fps is a very nice idea/way, as far as I can imagine it.

One very important thing here is a continuous workflow. In all these years of patching, I have optimized my patches progressively, while patching small bits; I then optimize at the end too, but not much, since the whole thing is already globally optimized. Maybe this is completely foolish, but I need to keep patching like this.

And of course, if I prepare my patches specifically for real time and at some point want to capture them at a higher fps (which is currently not possible on my hardware), I'd need to switch to the offline mode, and depending on how I patched things, that might not be entirely possible.

Of course, the custom offline feature I could build has a cost. I don't mean patching time; I mean that adding a new feature drives and constrains several other things. A normal situation.

I just mean that it leaves me confused here.

I can't ignore the "cost" of this: development time, further constraints.

I wouldn't want to give up, but maybe my way of producing would require a bigger machine in any case. If I could afford it, of course that would be the best way.

As long as the fps is acceptable, using the rendering in real time, without capturing anything beforehand, would keep the workflow and its dynamic nature intact.

I saw some Clevo machines with an i9, massive RAM and an RTX 2080 Super. Expensive, but they seem very, very solid. I don't want to turn this thread into "it's not easy to render offline, so let's keep it real time with an IBM mainframe", but these are just my current thoughts about how to produce/express what I want in the best possible way, all things considered.

"I very much like the idea of a decelerated operation mode of Max! This would likewise have to include a proportional recalculation of everything frequency-based though, whether by default, or manually."

why? :)

aren´t all these things based on the same 3-4 things in max and the same 1-2 things in the operating system and/or hardware?

@julien

imho getting faster hardware is not at all a solution for the original issue.

as soon as you have a new GPU which allows 50% higher resolution in realtime for that same patch, youtube will allow 50% higher resolutions, too and you will hit the border again.

wouldnt it be cool to have literally no limit when processing audio or video offline?

@Roman

Unless I am overlooking something, an example of where downsampling would go wrong is a simple FFT analysis, i.e., »half the speed« would require all objects of the fft~ family to scale their analysis accordingly. While envelope following is infinitely scalable in time by default, working in the frequency domain may not natively yield the expected results when scaled, and hence would require the respective objects to operate at a lower interval while understanding the frequency data as higher up the spectrum… Just my thinking…
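A small numeric illustration of the concern, in Python. It only uses the standard relation between an FFT bin and its centre frequency (bin index times sample rate divided by FFT size); whether a slowed-down runtime would actually change the rate the fft~ objects "see" is exactly the open question here, so the halved rate below is an assumption for the sake of the example.

```python
def bin_frequency(k, fft_size, sample_rate):
    """Centre frequency in Hz of bin k for an FFT of fft_size points."""
    return k * sample_rate / fft_size

# Same bin, same FFT size, nominal rate versus a hypothetical halved rate.
nominal = bin_frequency(10, fft_size=1024, sample_rate=44100)
halved = bin_frequency(10, fft_size=1024, sample_rate=22050)
```

If the analysis kept labelling bins with the nominal rate while samples arrived at half that rate in wall-clock terms, bin 10 would be read as about 430.7 Hz although it covers about 215.3 Hz of wall-clock frequency. If the slowdown applied consistently to everything, including the audio being analysed, the labels would still be correct in patch time.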

The real original issue, which I probably didn't explain well enough, is more that I can't even reach the minimal resolution/fps I need.

Raising these ideas leads me (us) to your idea of "no limit", which is very good.

I have to confess that I started to dig into TD and yes, it would push me towards NVIDIA, which means a new machine, which means a bigger one. A bigger one that I could obviously use with Max too.

TD offers timeline support, and it is quite easy to switch the system to non-realtime and export the result as a movie. This is something I'D LOVE to help design here for Max, but time is flying.

If someone sets up a GitHub repository and we can do it all together, I will do my best to help.

@Julien

Since your post appeared (and I found it due to my very own urgency in this field) I rose to fever in designing an adaptable, intuitive and generic set of tools to convert any realtime rendering environment into one that supports its offline rendering based on realtime events (complex audio for now) by storing the latter in a format it can later read frame-by-frame. The idea is of course that the transition needn't be one of fundamental rebuilding of a patch, let alone overcoming black mountains of obstacles.

There are and will be thousandfold caveats to such a system, and the nth user will find it to be incompatible with their needs. However, it IS a start, and I am currently casting it into a comprehensive set, yes, to share it here, mostly because so far it answers my every question and renders the unstable content @4K @120fps if need be. I'd love to share it once it's tidy and working for sure, and am working on it.

This would be amazing. And it is amazing to see how community and idea sharing trigger things, other ways of coding, etc.

MuBu (and more generally, buffer~) can also help a lot to store/retrieve signals.

I don't remember if I mentioned this before, and you probably all knew about this.

The idea of a timeline (which can be tied to each parameter we need to control) could also explore the pattr route, but coll would probably be better. Interfacing coll with a graphical tool, or importing Ableton automation (from Max for Live, maybe), could also be good.

As I wrote, I had many personal issues these days, and this is why I slowed down my studies and answers here.

I'll do my best to add and debate more in this thread.

this thread: a brilliant meeting of brilliant minds 🙌

i have nothing to offer that hasn't been already, just wanted to add my praise... and also pledge my sword(⚔️ ...and my axe 🪓...and perhaps some magic, too 🪄) to fight alongside you all in the great wars to come against our common foe: the lack of timelines and hi-quality/accurate/efficient offline rendering solutions! 🦾

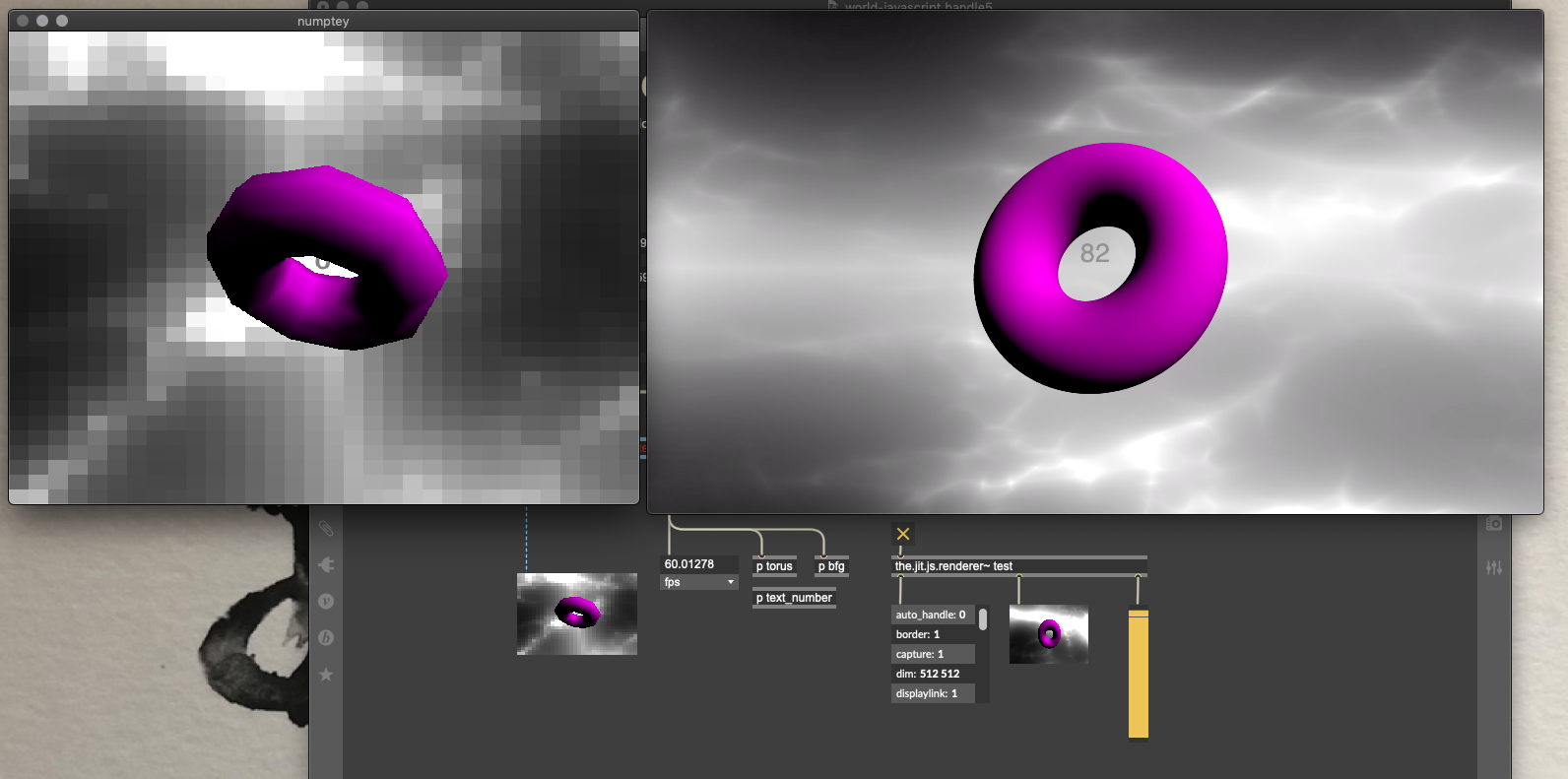

This thread also made me think it would be nice to share how I've gone about this non-realtime capturing, and it inspired me to try and make this feature more accessible. So I've started gathering the practices that I use into a few abstractions. It's far from complete and surely lacks many features, but maybe someone would like to have a look already and maybe even contribute some of their own patches or ideas.

At the moment there are 3 modes: realtime, capture and render. In realtime everything is as usual. In capture mode the patch runs in realtime and records the sound output to disk, while also capturing the data from all your signals (for which you connect a specific object between the signals) on a per-frame basis to a dictionary. After you have run the capture you can start the render, which will then render all the frames with the recorded data from the dictionary. In the end you have the video and sound files separately, which you can then combine.
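For readers who think better in text code than in patch cords, the capture/render split described above can be sketched roughly like this. It is only an illustration in Python, not what the abstractions do internally: `capture`, `render`, the lambda "signal" and the plain dict are all made-up stand-ins for the realtime patch, the jit.world output and the Max dictionary.

```python
from typing import Callable, Dict, List


def capture(signal_at: Callable[[float], float], fps: int, n_frames: int) -> Dict[int, float]:
    """Capture pass: sample a (realtime) signal once per video frame.

    Assumption: signal_at(t) stands in for whatever value the patch
    produces at time t (in seconds) while audio is running.
    """
    return {frame: signal_at(frame / fps) for frame in range(n_frames)}


def render(store: Dict[int, float], draw: Callable[[int, float], str]) -> List[str]:
    """Render pass: no clock involved, each frame may take as long as it needs."""
    return [draw(frame, store[frame]) for frame in sorted(store)]


# Hypothetical signal: an amplitude that ramps from 0 to 1 over one second.
captured = capture(lambda t: min(t, 1.0), fps=4, n_frames=5)
frames = render(captured, lambda i, v: f"frame {i:04d}: scale={v:.2f}")
```

The point of the design is that the render pass only ever looks up stored values by frame number, so it does not matter how long a single frame takes to draw.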

I was looking for some nice/funny names for the objects and then I thought, why not name it the jit.underworld, jit.hades~ (which captures the signals for the underworld) and jit.acheron~ (records the audio signal to disk). So here we go:

@Timo

Fantastic, we're pretty much on the exact same page here! Will give it a shot for sure…

The idea of 3 distinct operating modes is identical in the setup here, and the recording and rendering approach is identical too. In my view, the only fruitful way to go about this is by separating the realtime and offline process entirely, since there is still a bag full of objects which behave unpredictably at the conduit between signal, data and matrix domains. Meanwhile, I built a replacement abstraction for jit.poke~ to include it in the recording/rendering process. Have you had any success supporting the objects from the jit.mo package? They seem to run autonomously, in line with timing objects but dependent on an enabled jit.world, and cannot be controlled/incremented on a per-frame basis, it seems. Hence, I've got as far as recording their continuous matrix output into temp-matrices and re-reading them losslessly, but it seems non-signal timing objects require yet another mode to record just offline data, for otherwise the values are glitched when performing audio in parallel.

I'd opt for jit.theseus here for realtime data recording…

Any luck?

@Oni, cool. I missed your suggestions for jit.theseus, but surely we can use it for another object! I now have included jit.persephone (jit.hades~ spouse) as the int/float/list capturing object.

I was looking at the jit.mo objects, I think the only way to get those to work non-realtime at the moment is to just capture their matrices or float outputs. I don't see a way to manually set the time location for example. There is a (phase), but it only allows to reset phase to 0 when loop is off, but not to drive the object with an actual phase value. Maybe Rob can shed some light on what the possibilities are, or even if it would be possible to make an update that allows to manually set the time marker/phase of the jit.mo objects via a (time $1) attribute for example. If that would be possible then they can easily be hooked to the framecount by dividing the framecount by the fps.
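To make the "divide the framecount by the fps" idea concrete, here is a minimal sketch in Python. Note that the `(time $1)` attribute is only a suggestion in this thread and does not exist as far as anyone here knows; the function names below are invented for illustration, and the sine is just a stand-in for a jit.mo.func-style function.

```python
import math


def frame_to_time(frame: int, fps: float) -> float:
    """Time in seconds that a given frame number corresponds to."""
    return frame / fps


def phase_at(frame: int, fps: float, freq_hz: float) -> float:
    """Normalised phase (0..1) of an oscillator driven by frame count, not by a clock."""
    return (frame_to_time(frame, fps) * freq_hz) % 1.0


def sine_at(frame: int, fps: float, freq_hz: float) -> float:
    """Value a sine-type function would have at that frame."""
    return math.sin(2.0 * math.pi * phase_at(frame, fps, freq_hz))
```

With something like this, frame 15 at 60 fps always lands on the same phase of a 1 Hz function, no matter how long the renderer needed for the 14 frames before it.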

Have to dive a bit deeper into capturing the matrices and a way to easily store them. How did you go about this? I guess jit.matrixset is key here, or maybe some [js], but I would prefer not to use [js] in the capture chain, since it defers to main thread, while I think we should have the capture tightly scheduled with a metro.

@Timo

Indeed, the jit.mo family is and remains a beaut but does not seem to be receptive to non-realtime/manual progression. Any such feature (like your suggested 'time' attribute, or 'offset' similar to a bfg) would totally solve the problem of having to run jit.world at realtime to record matrices/data/audio — currently it needs to be enabled for jit.mo.join to operate and therefore cannot produce flawless progressions in complex environments. Given the jit.mo.func relies on fixed functions, such attribute could be supported mereckons.

As it stands, one has to enable jit.world and devise a strategy to turn off all realtime activity »redundant in the process of capturing« for maximum accuracy. Big thing.

Talking of collecting the matrix data, I have tried different techniques and finally ended up using a method that requires a fixed length of the final video to be set prior to any recording/capturing/rendering, as one would in a sequencer or when poking audio to a buffer~. Initially I appended incoming matrices using jit.concat but it proved dodgy and produced gaps (however, that was when using signals to populate matrices, staying in jit-domain this could perhaps even work with the same caveats experienced when working with jit.mo anyway). At the moment, and with jit.mo objects only running along with jit.world, I time a metro to the desired framerate in samples to fill a jit.matrix with a fixed size (dim of the jit.mo.join x recording length in frames). A counter increments the target row (in jit.gen) which is to be populated by the incoming jit.mo-matrix and the entire result can be combed through during offline rendering by incrementing the @offset of a jit.submatrix easily.
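As a rough sketch of the fixed-length approach described above (in Python rather than Jitter, so purely illustrative): the store is allocated up front as "row width x number of frames", a counter picks the row to write during capture, and the offline pass reads rows back by offset. `FixedFrameStore` and `samples_per_frame` are hypothetical names, and plain lists stand in for jit.matrix / jit.submatrix.

```python
from typing import List, Sequence


def samples_per_frame(sample_rate: float, fps: float) -> float:
    """Metro interval in samples for a given frame rate."""
    return sample_rate / fps


class FixedFrameStore:
    """Preallocated store: one row per video frame, length fixed before capture."""

    def __init__(self, width: int, n_frames: int) -> None:
        self.width = width
        self.rows: List[List[float]] = [[0.0] * width for _ in range(n_frames)]
        self.write_row = 0  # the counter that selects the target row

    def capture(self, values: Sequence[float]) -> bool:
        """Write one incoming row; returns False once the fixed length is used up."""
        if len(values) != self.width:
            raise ValueError("row width must match the preallocated store")
        if self.write_row >= len(self.rows):
            return False
        self.rows[self.write_row] = list(values)
        self.write_row += 1
        return True

    def read(self, offset: int) -> List[float]:
        """Offline pass: fetch the row for a given frame, like stepping an offset."""
        return self.rows[offset]


store = FixedFrameStore(width=3, n_frames=2)
accepted = [store.capture([i, i + 0.5, i + 1.0]) for i in range(3)]
```

The trade-off is the one mentioned in the post: nothing can go missing or shift position, but the length has to be decided before recording starts.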

jit.matrixset was on the radar as well, however, given that jit.mo.func objects produce one-dimensional matrices it would a) not be necessary to store them separately, b) jit.matrixset would require scripting to adapt to different sizes and c) could perhaps grow gigantically in terms of inlets and matrix-allocations.

My last obstacle in the system (apart from the jit.mo-incompatibility with manual purposes) is devising a method to collect all running data relating to timing in such a way that it can be called upon on a per-frame-basis. This should result in an object to be placed behind every conduit of timing>matrix/texture-relevant data and of course draws many objects into its circle, like the aforementioned timer, when, clocker, transport, slide, you name it. Shouldn't be »undoable« though and I am quite confident at the minute………

I like the nomenclature of your objects by the way ;), very very similar to my own take on it, which meanwhile focuses on sensory/memory/motor regions of the body, like jit.cochlea~, jit.amygdala~, jit.cerebellum (the timing/coordination object!), jit.retina or jit.thalamus …

Note: While capturing audio, I write it all sequentially to matrices. Unfortunately, the 'exportimage' command does not work with RGB-matrices (useful for preset handling)… can anyone reproduce? Discussion from 2015 and the problem persists here: https://cycling74.com/forums/jit-matrix-exportimage-write-to-jpeg-not-working

Interesting discussion, and let me add to the mentions of MuBu (which is meant to facilitate online, offline, and relaxed-realtime applications in a unified framework) that it is made to record and manipulate synchronous audio and data streams, where the data can be scalars, vectors, or matrices.

@Oni, yeah jit.concat was also something I was thinking about. I would like to not have the limitation of setting a fixed length beforehand. Will investigate further. Your naming also appeals to me, great stuff!

About the exportimage, the message works fine for me with a 3-plane matrix. But maybe I'm misunderstanding your usecase. Care to share a patch to reproduce? I'm on MacOS 10.12.

@Diemo, thanks. I myself have little to zero experience with using the MuBu package/objects, do you (or someone else) have any pointers to what objects might be interesting/relevant to look into? For example on the usage of matrices in this matter?

I started the post, and what I see here is amazing.

Community, C74, people discussing, sharing ideas, pushing things further.

At the very eve of xmas, this is so inspiring & positive to see it.

I'm sorry for my silence here since this first post.

I lost someone close to me and I'm still slow and pausing a bit.

Thanks to everyone, thanks Timo too.

I can't wait for testing it in real conditions.

@oni

why would fft differ from other signal objects?

we would not be changing the samplingrate and the scheduler settings, we would just make 1 second 10 seconds long. :)

do you remember those old games which, when you brought them from MacOS 6 to MacOS 7 PPC, were running too fast because they were somehow bound directly to the system clock? not sure if such a design is still possible with a modern OS but that's what i was thinking of.

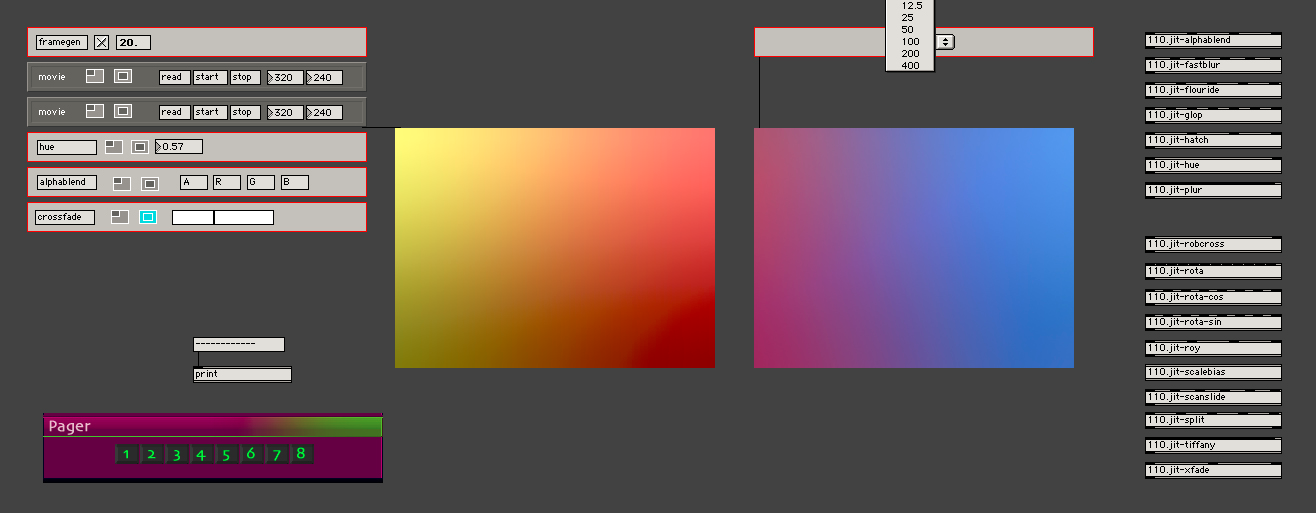

it is probably completely unnecessary to mention that 110.modular had video objects long before vizzie.

the size of the matrix can be controlled globally across filters, and of course including the diskrecorder module.

actually i even control the size of loose pwindows via the patchcord you see at the right side here.

for video all of this is really easy (unless you have already built tons of custom realtime effects which are not based on frame values).

and to bring data caused by realtime input to nonrealtime mode you can make a simple datarecorder/player, which, while realtime mode is on, overdub-records and when you turn nonrealtime mode on, it'll replay the stored data.

the huge problem begins when you need to include scheduler and audio in the NRT game. then i got lost and that's why the project once came to a halt.
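A simple datarecorder/player of that kind could look roughly like the following Python sketch. This is not the 110.modular implementation, just one possible reading of it: events are stored with their timestamp, later recordings are merged in (overdub), and in nonrealtime mode the player is asked "what was the latest value at this frame's time?". The class and method names are invented.

```python
from bisect import bisect_right
from typing import Any, List, Optional


class DataRecorder:
    """Stores timestamped events in realtime, replays them by time in NRT mode."""

    def __init__(self) -> None:
        self.times: List[float] = []
        self.values: List[Any] = []

    def record(self, t: float, value: Any) -> None:
        """Overdub: insert the event at its time, keeping earlier takes."""
        i = bisect_right(self.times, t)
        self.times.insert(i, t)
        self.values.insert(i, value)

    def value_at(self, t: float, default: Optional[Any] = None) -> Any:
        """Latest recorded value at or before time t (sample-and-hold)."""
        i = bisect_right(self.times, t) - 1
        return default if i < 0 else self.values[i]


rec = DataRecorder()
rec.record(0.0, 10)
rec.record(0.5, 20)
rec.record(0.25, 15)  # a second, overdubbed pass

fps = 4
replayed = [rec.value_at(frame / fps) for frame in range(4)]
```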

Belated happy new year!

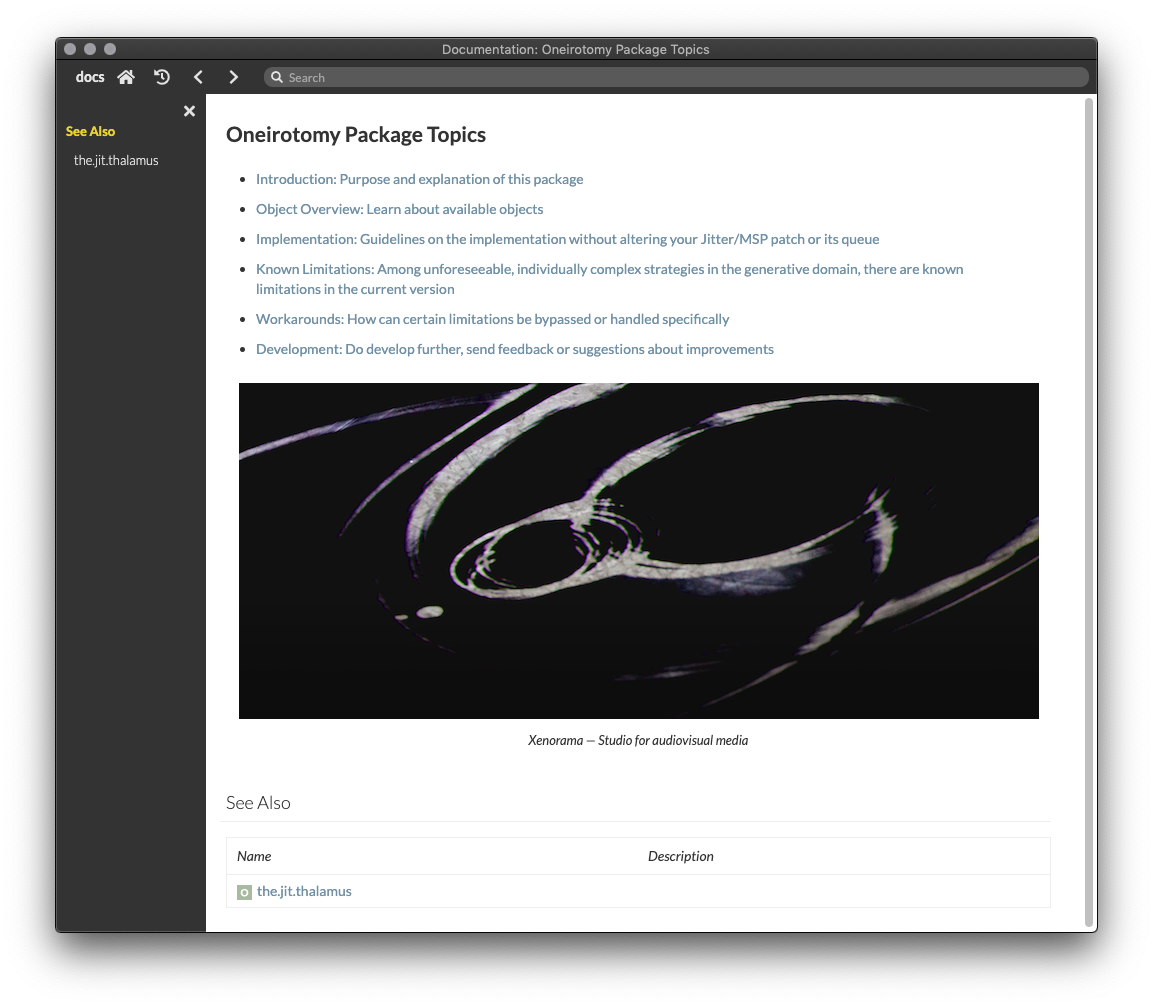

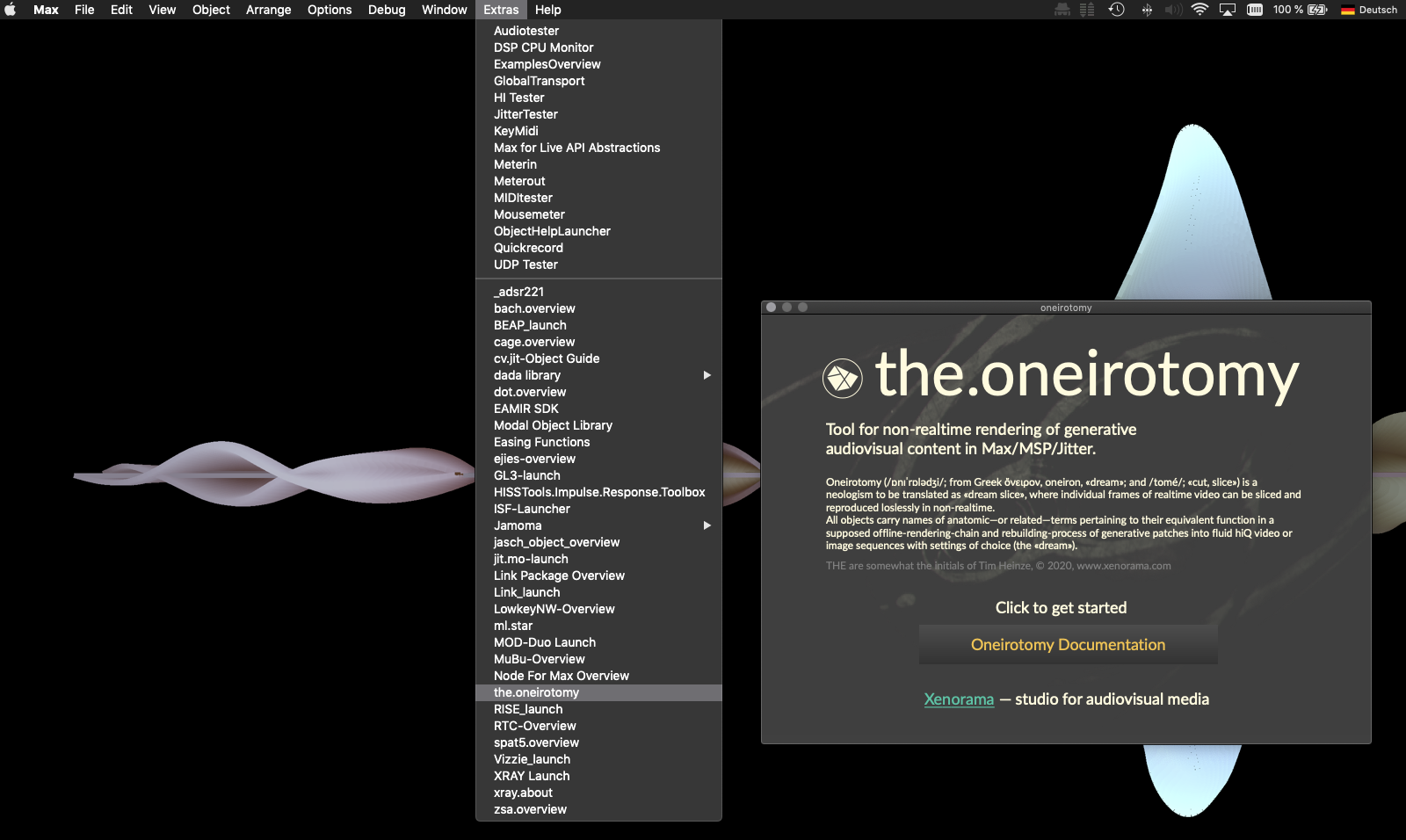

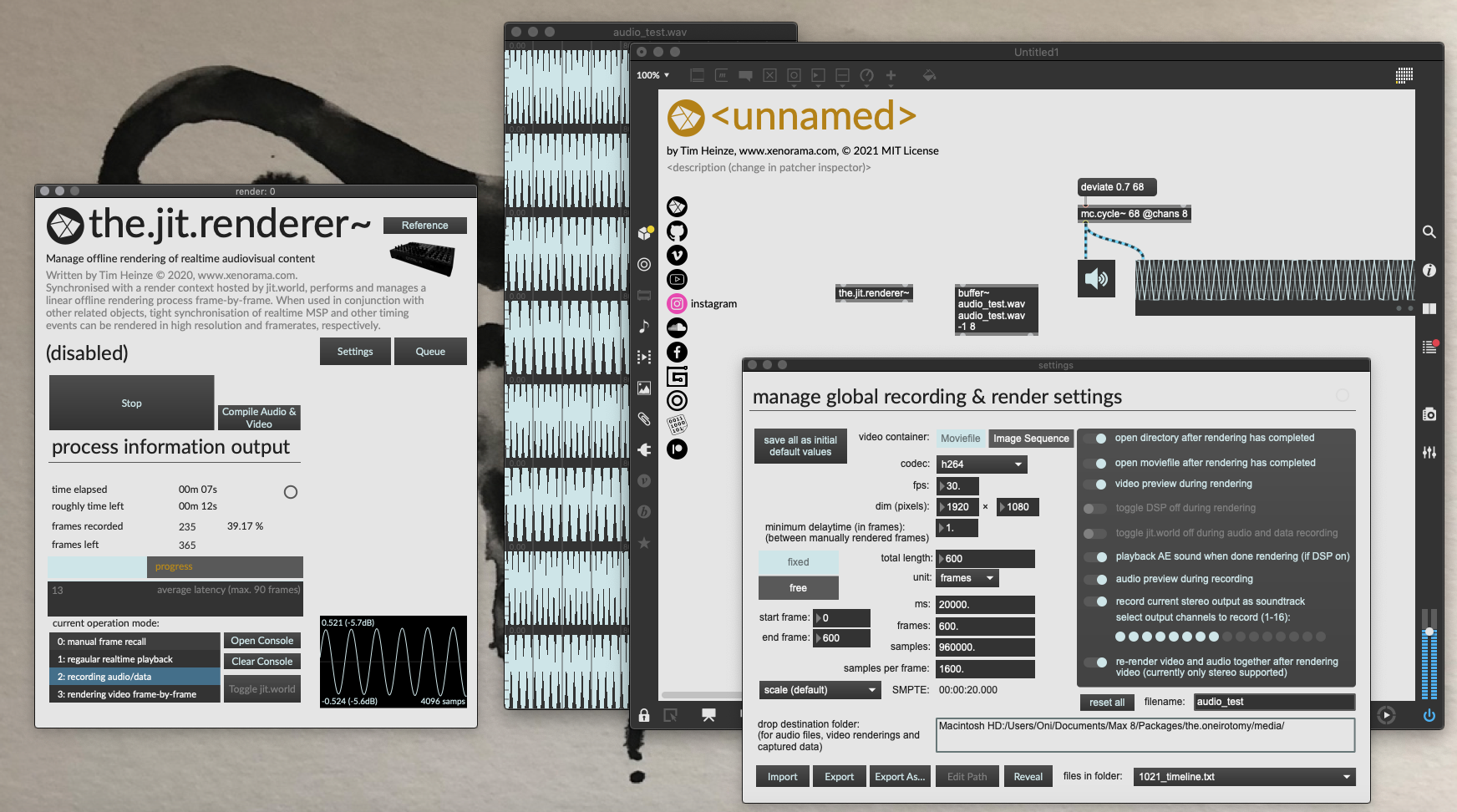

I would like to share this offline-rendering package with the community, it goes by the name «Oneirotomy».

Yesterday we completed a highly complex audiovisual project with an orchestral ensemble for which I needed to render my audio-based Jitter patches offline — having developed the package along the way since this post came up, it worked to 100% satisfaction and its implementation was not too challenging at all. The results were 100% accurate, hiQ image sequences later processed further in AE, and entirely based on audio and timing in Max.

A lot of time has been fueled into documenting this package quite meticulously, in form of helpfiles, refpages and guides with the odd smooth_shading applied. It includes one or two disclaimers about its limitations, but overall supports any translation from realtime to non-realtime.

I would wish for some improvement, especially in support of the jit.catch~ object, its implementation is slightly limited. Further assessment of the integration of jit.anim-family objects may also be necessary. Any suggestions, recommendations, feedback et al is highly welcome.

https://github.com/xenorama/oneirotomy/tree/dev

Thanks!

Thanks for sharing! Looking forward checking this out

Thx for this, about to have a look.

Would a 'jit.movie' integration be difficult?

B

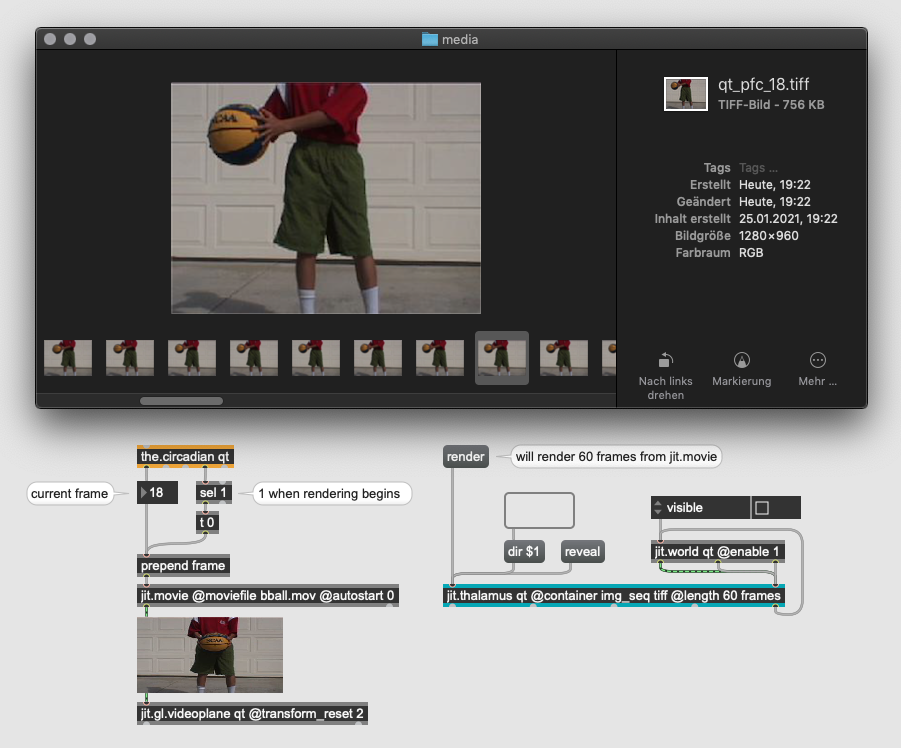

'jit.movie' in the sense of manually incremented frames during the render process is no issue at all, since objects like the.circadian output every frame numerically while rendering. These can be used to query frames from jit.movie (frame, frame_true methods) and process them further within the queue:
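In text form, the lookup behind this is just a conversion from render frame number to movie frame number. The Python below is a hedged sketch of that arithmetic only; `movie_frame_for` is a made-up helper, not part of the package, and it assumes integer frame rates for both the render and the source movie.

```python
def movie_frame_for(render_frame: int, render_fps: int, movie_fps: int,
                    movie_frames: int, loop: bool = True) -> int:
    """Which frame of the source movie belongs to a given rendered frame.

    Integer arithmetic avoids rounding surprises when the two rates differ.
    """
    index = (render_frame * movie_fps) // render_fps
    if loop:
        return index % movie_frames       # wrap around like a looping movie
    return min(index, movie_frames - 1)   # otherwise hold the last frame


# Rendering at 60 fps from a 30 fps, 100-frame movie:
first_two_seconds = [movie_frame_for(f, 60, 30, 100) for f in (0, 1, 2, 3, 120)]
```

The resulting number is what would be sent as the argument of the frame message for each rendered frame.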

wow, can't wait to spend some time with this! thanks for sharing!

omg. this thread became CRAZY !

I can't wait for testing it too

Hey that's crazy work ! However, when i first installed from the github zip into the packages folder, it didn't recognize any of the abstractions, because they are in an "abstractions" subfolder, and not a "patchers" subfolder... After renaming the folder from "abstractions" to "patchers", everything is recognized and works fine. is that just me ?

Super feedback Vichug, thanks much!

I've renamed the folder in the repo to 'patchers' now, since it won't make a difference here locally. However, I still am slightly surprised as (to my knowledge) the abstractions need just to be found, regardless of where in the searchpath; obviously works here. ++ Since I haven't shared an entire 'package' style library before, a few odd dependency-related issues may occur so do keep feeding them back when encountered!

Cheers.

cool ! (yes files in a package folder won't be all included in the search path automatically, for that you need to be precise in the naming conventions of subfolders inside a package ; that's actually cool because you can put source files and such which won't interfere with anything in max ; or keep old version of objects close, etc. It's just a little odd that the "abstractions" folder isn't one of the names that are added automatically to search path)

Hi Oni,

I have somewhat the same issue as VICHUG, except that renaming the abstractions folder to patchers doesn't provide the entry _the.oneirotomy_ in the extras menu.

Excited and about to try this! Thanks for sharing!

Sébastien I had the same issue, just delete the package-info.json

2k, that did it!

Thank you Oni for sharing this formidable package!

I suppose the entry in the 'extras' menu will not appear until Max has rebuilt its database in form of a restart. As far as the dependencies go, I am myself not too sure as to why they are loaded properly here and not on a different computer…

While triple-checking some improvements I found that the helpfiles are perhaps too clogged with information and the abstractions themselves, resulting in perhaps too little overview after all and some unwanted loading time for the helpfiles. If necessary, the details to the individual objects might be better kept in separate tutorial-pages which in return could be more comprehensive, too. I'll look into that if people confirm.

Furthermore, I am currently going through the jitter-examples/audio and adding the rendering engine to them, one by one — this could help understanding how the setup can be applied to existing patches.

Of course, since some of them are based on jit.catch~, this is becoming a little tricky.

jit.catch~ requires continuous matrix concatenation which is prone to dropouts when jit.world runs along audio, for example, while precise frame-based output of the captured matrix is what we never experience in realtime. Mode 0 yields different size matrices depending on the regularity of incoming bangs and I have not found a fruitful, stable solution to recording matrices with different dimensions yet (jit.gen adapts, smaller matrices truncate the entire matrix of jit.concat and too much patching may cause dropouts while the 'dim' attribute cannot be applied without switching to the data domain). Hence, the only way to ensure it all gets captured is by pre-defining a running capture-dim of 4096 (which jit.catch~ never seems to exceed despite different framesizes). This is slowly getting somewhere but some experienced input is of course welcome.

Thank you for the awesome library! I just made my first non-realtime rendering in max:
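The "fixed capture-dim" workaround boils down to padding every variable-length block to one size and remembering how much of it was real data. A small Python sketch of that idea follows; the 4096 comes from the observation above, everything else (names, zero padding, storing the length next to the block) is an assumption for illustration and not how the package stores things.

```python
from typing import List, Sequence, Tuple

CAPTURE_DIM = 4096  # size the post reports jit.catch~ never seems to exceed


def pad_block(samples: Sequence[float], dim: int = CAPTURE_DIM) -> Tuple[int, List[float]]:
    """Pad a variable-length block to a fixed dim, keeping the true length."""
    n = len(samples)
    if n > dim:
        raise ValueError("block is larger than the capture dim")
    return n, list(samples) + [0.0] * (dim - n)


def unpad_block(n: int, padded: Sequence[float]) -> List[float]:
    """Recover the original block during offline rendering."""
    return list(padded[:n])


length, padded = pad_block([0.1, -0.2, 0.3])
restored = unpad_block(length, padded)
```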

https://www.youtube.com/watch?v=Mi4GtbKhv68

I haven't looked into the other objects besides thalamus. It seems that the rendered work plays faster than it plays in real-time. May I know which object to look at to tweak the timeline? (I haven't synced it with sound, so it's all just about the visual rendering here!)

cheers.

@Wei

please download the latest package from git:

https://github.com/xenorama/oneirotomy/tree/dev

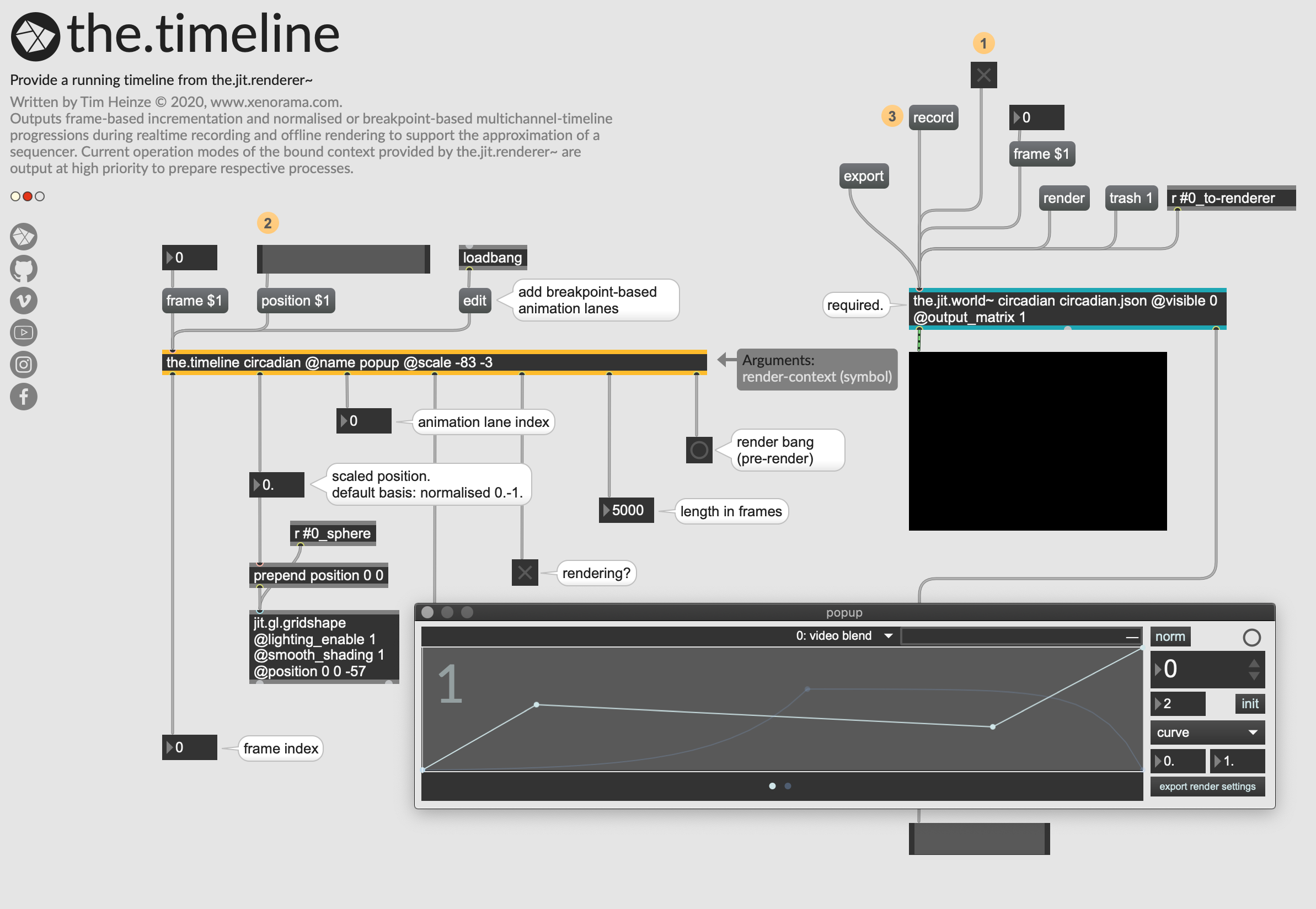

>> the nomenclature got renewed, hence the thalamus is legacy and as of now is called [the.jit.renderer~], along with other objects which now go by the names of their native Max companions to avoid disorientation.

As for timeline automations, use [the.timeline]. I've only recently used it multifold in rendering complex 4K audio-reactive transform feedback + geometry shading.

PS: About your job inquiry I'll get back to you separately.

Best, Tim

Hello,

thank you for this great package. I tried using it for some audio-reactive visualisations, but ran into problems with jit.anim.drive. For some reasons "turn" commands don't get rendered. Rotateto works, but turn just gets ignored. I tried using the.snapshot~ (with @automatic 1) to capture the data that determines which messages get sent to jit.anim.drive and also the.mtr to capture the messages sent to jit.anim.drive, but neither worked. Is this a known issue or is there a better way to do this?

@MISEMAO

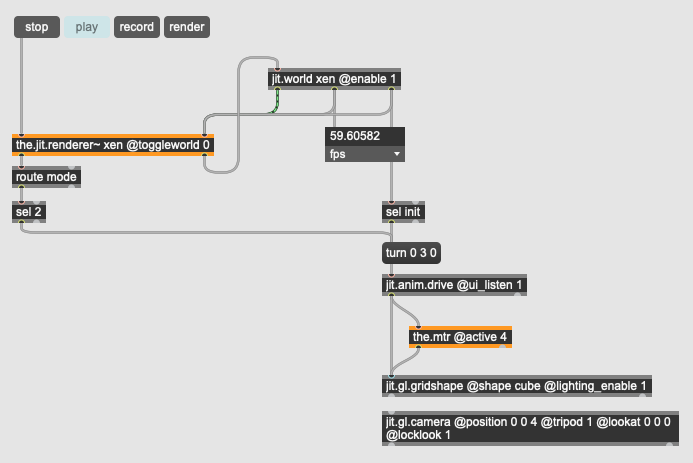

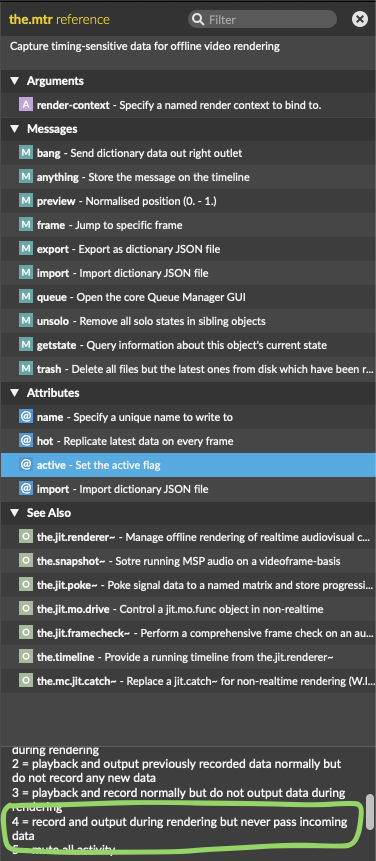

Yes, jit.anim.drive is whimsical, since it requires a rendering (running) gl context to operate and will refuse to output transformation commands when not attached to a gl-object. You can add the.mtr @active 4 (capturing but not outputting data during playback or recording) and set the.jit.renderer~ to @toggleword 0 to ensure jit.world runs during recording. This way, the.mtr will collect all commands to the gl object to output these commands per frame during rendering. Just tested it again and it works fine. I additionally send the 'turn' command again to jit.anim.drive when recording begins for otherwise the transformation won't show in the rendering window despite getting recorded by the.mtr.

The /dev/ repo is the recent version but the documentation still focuses on legacy-nomenclature objects, where [the.cerebellum] is what the.mtr is now. If you go under Extras>oneirotomy and open the documentation you'll find a page focusing on Workarounds where this setup is described in detail, albeit based on previous objects (please extrapolate, I have little to no time to update the old documentation/helpfiles and not many people seem to use the package yet……). Do hit me with desired improvements or questions though, glad you can make use of it.

Patch example:

I ended up not using the mtr at all after noticing that I could replace a few peakamp~ further up the chain with the.snapshot~ @automatic 1 @mode peakamp. This also brought down the render time considerably (with multiple the.snapshots: around 15min, with 1 mtr and 1 snapshot: around 3 hours).

One remaining problem is that the speed and duration values of the jit.anim.drive seem to be dependent on the framerate, meaning I get vastly different speeds and misaligned animations with 60fps (rendered) compared to the 24fps (realtime).

Another problem was, that on the last render (with snapshots only), the _soundtrack version was broken (no sound and still images instead of the animation). The video-only version worked fine, so it was not that tragic, since I could add the soundfile after the fact with final cut.

And a last issue: If the resolution set in the the.jit.renderer~ does not match the jit.world resolution, the rendered movie will be warped. I am not sure if this is a bug or just a non-feature (since the the.jit.renderer~ can send messages to jit.world, I would have expected it to set the resolution of jit.world).

I think I will have to dig through the old examples of oneirotomy to see if I have everything set up correctly. Is there a table of some sort to match the old object names to the new ones? Then I could change them in the example files and submit them to the git repo.

Thank you for your work on this, I really like it and it is incredibly useful.

Damn, just wrote a detailed response and an inattentive stroke of the mouse navigated to the previous page, nullifying my purpose… hopefully it was good for something or other..

Hence in more brevity:

1) thanks for the feedback regarding rendering times! Will check at some point how this can be improved. Appreciated.

2) jit.anim.family is not natively compatible with offline rendering, the latter operating at very low framerates. This is because these objects serve an intuitive purpose to an assumed observer's interpretation of speed as opposed to frame-based transformation (compare: camera eye space). The following patch illustrates this comprehensively:

The only way around this is the aforementioned the.mtr @active 4 as described above since it replicates transformations per frame instead. If I remember correctly, the jit.anim.drive will actually need to be disabled during rendering to not output transformation commands (use the 'automatic' flag).

Similar phenomena will be encountered with the jit.mo.family which operates only while jit.world is rendering — look at the.jit.mo.drive reference/helpfile for easy solutions to this problem.

3) As for (sad) soundtrack experience, are you recording the actual sound output to your soundcard from Max using the renderer GUI? Or a designated (legacy!) object? I strongly opt for the former.

4) Yes, the.jit.renderer should apply the 'dim' attribute according to the settings in the GUI, to jit.world during rendering and revert to previous settings afterwards. There might be some caveats when jit.world operates in unexpected modes (perhaps @output_texture could be an issue, I cannot be sure). I personally always double-update all settings before hitting 'RENDER', including the dimensions, just to be sure ;) — I know, it's not super elegant, but sure enough subject to improvements in the future. Hope this helps, keep us updated. Perhaps share a glimpse of the.jit.renderer~/jit.world connection and the settings?

5) No, there isn't a table for reference. Really what needs to be done is updating the documentation (on my part). I am inclined to implement a js solution which could eventually disconnect the.jit.renderer~ entirely from jit.world since all information can be grabbed and applied remotely. But.. uhm.. yeah.. I'll need some solid feature requests and feedback for that as guideline.
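Regarding point 2), the difference between "speed as an observer sees it" and frame-based transformation can be shown with a few lines of Python. This says nothing about how jit.anim.drive works internally; it only illustrates, with invented function names, why an amount applied per frame gives different results at 24 and 60 fps, while an amount per second scaled by a fixed 1/fps step does not.

```python
def rotate_per_frame(deg_per_frame: float, n_frames: int) -> float:
    """Frame-based: the same amount is added on every rendered frame."""
    return deg_per_frame * n_frames


def rotate_per_second(deg_per_second: float, fps: int, n_frames: int) -> float:
    """Time-based: each frame advances by a fixed time step of 1/fps seconds."""
    dt = 1.0 / fps
    angle = 0.0
    for _ in range(n_frames):
        angle += deg_per_second * dt
    return angle


seconds = 2
# Same clip length, two frame rates:
per_frame_24 = rotate_per_frame(3.0, 24 * seconds)          # 144 degrees
per_frame_60 = rotate_per_frame(3.0, 60 * seconds)          # 360 degrees
per_second_24 = rotate_per_second(90.0, 24, 24 * seconds)   # about 180 degrees
per_second_60 = rotate_per_second(90.0, 60, 60 * seconds)   # about 180 degrees
```

Recording the resulting transformation per frame, as the.mtr @active 4 is described to do above, sidesteps the question entirely because the values are simply replayed.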

Thanks thus far!

hi Oni, I noticed the missing of the the.mc.pac~ object in the latest version (2021 nov), and it seems I couldn't find my audio generated, is it a bug? thanks!

there is no need for the.mc.pac~ any more. Audio can be recorded using the @adrecord flag for the.jit.renderer~. That being said, the attribute routing is bogus, so one has to activate it manually in the gui (see picture). This way up to 16 channels of audio output from the mc.dac~ can be recorded as soundtrack.

hi Oni, thanks! the audio and video renderings are working perfectly with the dev version, thank you so much!

Just for the new "compile audio & video" button, the compile process doesn't stop automatically at the last frame. I tried to do the compile in the help file too and it's the same, I have to manually click the stop button to end the process and the video and audio are synched. Maybe I'm missing something in the settings?

yes I too noticed that some time ago… (compile)

In fact, I just got round to redesigning the core engine to be run using JS, this way the.jit.renderer~ can (will!) be dropped into the patch and manages jit.world remotely without any further ado or patchcords. I've added an object which automatically adjusts gl-params remotely for named objects according to recording/rendering modes to support different aesthetics at higher resolutions, and likewise supports low budget rendering during patching and recording for better performance in realtime. This is all looking a lot more promising, however, it will take time until it's all implemented and upgraded. Will also transport a few functions to gen codebox for more efficiency and tightness. Another snazzy feature is the ability to render (capture) any jit_gl_texture in the environment instead of jit.world; this also helps recording post-processed scenes using @output_texture flag.

I'll have to seek some support on jit.gl.asyncread though, since it's at the heart of remote rendering but also refuses to process textures if jit.world is set to @visible 0. The latter would be very handy since jit.world's window has to be visible and scaled up to the desired dimensions to be recorded this way (otherwise). This is the current solution and at least does the job reliably.

More to follow.

That sounds extremely promising. Let me know if you need someone to alpha/beta test anything.

For questions regarding the inner workings of jitter and jit.gl.asyncread you could ask frederico-amazingmaxstuff. I also commented about your project under one of his videos and he seemed very interested.

Thank you Rob, jit.proxy solved it.

new refurbished Version 2.0 of the entire package is online under the 'automatic' tree of the repo:

https://github.com/xenorama/oneirotomy/tree/automatic

feel free to grab the package, check it out and give feedback — I am meanwhile improving some cosmetics and compatibility on designated objects and highly appreciate some guidance in different usecases than my own.

Version changes are endless, most noticeably the feature of remote control of jit.world, as well as an 'automatic upgrade' of patches with highlighting of relevant objects to consider for non-realtime purposes (the.oneirotomy.setup is of help here).

The entire idea is somewhat not easily reconciled with Max' native powers and requires a great deal of reconsiderations. I'm happy to help out should questions arise.

Tim

Merged to main branch finally.

users are welcome to test:

https://github.com/xenorama/oneirotomy

hey. i'm super late to the party on this one

i'm currently in the process of building a live visual for my music but i don't have a particularly powerful GPU [2014 macbook pro] so i've been on the hunt for offline rendering solutions

oneirotomy seems like exactly the kind of thing i've been looking for but i'm struggling with exactly how to use it. i have learning difficulties so a lot of stuff takes a bit more of a thorough explanation than it might possibly need

i've got a patch where i'm loading in the pre-recorded audio into a 2 channel buffer~ and playing it through a 2 channel groove~ object, with this going into a pair of jit.catch objects [so i have left and right channels] and then controlling an XY scope that i built with jit.matrix objects and into a gen patch, /and also/ going into a sub patcher that turns the matrix outputs into a series of white flashing bars that react to the volume and frequency of the music

i've looked through all the documentation and examples but i can't work out how to get the oneirotomy objects to do the thing [i hope this makes sense]

i can share my patch if it might help?

but to put it simply, i might need someone to point me in the right direction or tell me where my patch is going wrong

thanks

Taking advantage of this thread bump to share a small package I made, allowing for frame-perfect, off-line video rendering. It was originally made to create seamlessly looping GIFs, so there is no built-in solution to work with audio (although this could be done), and it is far, far away from the level of completion and completeness of oneirotomy, but maybe some of you may find it more straightforward to use as it only consists of 3 abstractions.

It is called again. and distributed as a give-what-you-want-ware.

Hi Kezia,

no one is late to this party, since it will go on for a long, long while it seems…

And indeed, Oneirotomy seems to be what you need. At least it has been developed for exactly such purposes, including the system limitations for performances. So I am pretty positive that we will find the solution for you.

Since you »[ha]ve looked through all the documentation and examples but […] can't work out how to get the oneirotomy objects to do the thing« I assume you mean the detailed Vignettes/Docs which are available in the Documentation under 'Extras/the.oneirotomy' or in the help file sidebars?

The challenges with [jit.catch] are described in detail there, including its limitations, check the Chapter Known Limitations (again) to fully grasp what the issues are in Max when operating non-realtime.

In general, every realtime stream needs to be recorded, somehow, to later be available for offline recall. In the case of [jit.catch] this means we have to record a jit_matrix stream since the signal domain is directly translated to the Jitter-domain without intermediate data to store in textfiles or in memory. The object the.mc.jit.catch does this for us, do check out the helpfile and related topics to understand where supposable issues in the result might hail from. For other data which is unavailable offline, we have to aptly decide where to place which kind of data capture to yield the results we desire. ping pong.

If you don't use presets like the Vizzie modules (most of which contain objects which only work in realtime, unfortunately), all categorised realtime-objects can be 'upgraded' to oneirotomy-compatibility using the.oneirotomy.setup. Even with Vizzie, this can be performed using a single command (see the helpfile, and check the dictionary output from the.oneirotomy.setup to see which objects are being upgraded).

I get it, it's complicated, and potentially intimidating… but yo, Max is natively not compatible with these usecases and some Jitter/GL-objects are simply dysfunctional in offline-mode (jit.anim-family, jit.mo-package, etc.) and thus need dedicated handling which is 100% unintuitive and subject to brain massaging.

Either way, I am happy to help where I can. If you care, do share your setup and I'll get an idea of what it is you need to do and/or whether the setup contains yet another set of challenges hitherto unexpected by my humble self. Do accompany the sharing with any questions regarding the documentation that I can potentially clarify.

Note, since my system is not fully up-to-date and thus not 100% compatible with Max atm, I do get errors thrown at me myself and am currently not able to verify whether my system lies at the core of the problem. But if I get it to work, it should work for you too.

I have only just re-visited the package and improved a few minor details, have updated the git as well.

Some objects do cause problems, like the.jit.movie.ctrl, for example.

Do get in touch!

best, Timbeaux

hello!

thanks for getting back to me!

i have looked through the vignettes and read the how to and all of that

it's highly possible that i'm missing something glaringly obvious

here's my patch

it's a fairly simple thing all in all

it's adapted from "Let's Create a 3D Oscilloscope with Jitter in Max/MSP" by Amazing Max Stuff on youtube but i've made it so that i can get a dedicated left and right signal which is required for the desired effect on the barcodes

thanks!

okay, the patch in itself is pretty straight forward for use with the package.

I've upgraded to multichannel and inserted the necessary object(s). I gave the catch-object a @name attribute for otherwise no data is written (bug); I am personally running into trouble, perhaps though because of my system requirements which currently aren't being met. At least only every second block is being rendered and the images freeze every 30 odd frames or so for just as long. Perhaps give it a go with a shorter length (like 20 seconds as test) to see if this also happens to you. If not, great, if so, you have a valid point (good job you got in touch) and I'll have to optimize the catch-object.

Fondings,

Timbeaux

hey

Thanks

i've had a play around with rendering 15 second clips, if i render it as a movie file, it has a very unstable framerate and if i export it as an image sequence, i also get image freezes in the render

i tried it a few times and got the same issues in the same places

it may well be my laptop having difficulties

but thank you for the help

it's gone a long way to helping me understand how this stuff works and how to set up a patch

Hi Kezia,

actually, jit.catch has been a latent problem and I think I also mentioned that in the docs. However, thanks to your tenacity I have now found quite a significant bug and seem to have solved it. I've rendered sound + visuals as .mov and the timing is bang on with the events. So, I've uploaded a new version of the.mc.jit.catch~ in the git repo and you're welcome to use it, even if frustration may have darkened your day already.

What's noteworthy is that changing the rendering dimensions arguably changes everything in the GL domain, especially relating to sizes and ratios. This is why the patch contains the.obj.init to scale the mesh according to the rendering dimensions, and the barcodes are set to resize to the rendering window (@transform_reset 2) by default.

I've tested, and it looks sweet.

Enjoy, if possible.

Timbeaux

thanks for all the help!

and i'm glad i could help find a bug

your frustrations haven't caused any bad feelings!

i downloaded the new version from the git repository, i opened that patch you sent, but all the messages appear as objects that max can't understand, and the renderer doesn't seem to detect the jit.world

i copied the patch into a blank patcher and it still drops frames and now doesn't begin recording or rendering at the beginning of the audio. or it just renders a blank screen

i'm wondering if a part of the problem is that my version of max is maybe too old for it to work properly? [i'm on 8.3.3]

i do apologise for all the problems

If things go bogus, select-delete-and-undo the objects in question which link to the context. This should deffo work. And well, yes, Max 8.3.3 could surely cause problems, especially because I had to upgrade some parts of the renderer to be compatible with Max 8.6 and its string/array namespace. Therefore you'll want to consider a newer version of Max. My system is actually too old for the latest version itself, but no way can I downgrade anymore…

it's all good

i'll try delete and undo and i'll look into updating as soon as i can afford and see what's what

but thank you for all your help and work!