Husserl tutorial series (9). JavaScript for the UI, and JSUI

Husserl3 contains more than 1500 lines of JavaScript: about 800 for the UI and about 700 for pattrstorage management. Much of it is data, however, and the actual functions are quite simple. This is the second tutorial on JavaScript; it builds on the last one with practical examples and prepares for the next. All the tutorials in this series:

Designing a good LFO in gen~ Codebox: https://cycling74.com/forums/gen~-codebox-tutorial-oscillators-part-one

Resampling: when Average is Better: https://cycling74.com/forums/gen~-codebox-tutorial-oscillators-part-2

Wavetables and Wavesets: https://cycling74.com/forums/gen~-codebox-tutorial-oscillators-part-3

Anti-Aliasing Oscillators: https://cycling74.com/forums/husserl-tutorial-series-part-4-anti-aliasing-oscillators

Implementing Multiphony in Max: https://cycling74.com/forums/implementing-multiphony-in-max

Envelope Followers, Limiters, and Compressors: https://cycling74.com/forums/husserl-tutorial-series-part-6-envelope-followers-limiting-and-compression

Repeating ADSR Envelope in gen~: https://cycling74.com/forums/husserl-tutorials-part-7-repeating-adsr-envelope-in-gen~

JavaScript: the Oddest Programming Language: https://cycling74.com/forums/husserl-tutorial-series-javascript-part-one

JavaScript for the UI, and JSUI: https://cycling74.com/forums/husserl-tutorial-9-javascript-for-the-ui-and-jsui

Programming pattrstorage with JavaScript: https://cycling74.com/forums/husserl-tutorial-series-programming-pattrstorage-with-javascript

Applying gen to MIDI and real-world cases: https://cycling74.com/forums/husserl-tutorial-series-11-applying-gen-to-midi-and-real-world-cases

Custom Voice Allocation: https://cycling74.com/forums/husserl-tutorial-series-12-custom-voice-allocation

JavaScript, UI, and JSUI

If you read the prior tutorial, you already know that JavaScript in Max is particularly suited to user-interface management, because it runs on a lower-priority thread than the audio or video. User interface functions can use a great deal of CPU. For example, Husserl needs over half of one core of a 4GHz i7-6700K to maintain its several dozen panel indicators. Also, JavaScript is needed in Max to zoom the window properly for presentation mode and standalone applications.

Displaying and Hiding Parts of the Patch

There are a number of ways to do this, but I found it easiest to do in JavaScript. The functions below were added to the script to switch display modes for the dynamics and LFO dial/bpm frequency controls in Husserl3.

These functions simply toggle the 'hide on lock' attribute (hidden) of the display objects when they are to be shown or hidden in the presentation. Putting this in JavaScript had the added benefit that MIDI input can call the same functions when MIDI changes their CC values:

function lfo1Sel(x){

if (sinit == 0) iDinit();

if (x == 0){ // show dial, hide bpm

scriptIds[80].message( "hidden", 0);//l1f

scriptIds[129].message("hidden", 1);//l1beats

scriptIds[130].message("hidden", 1);//l1bars

} else { // hide dial, show bpm

scriptIds[80].message( "hidden", 1);//l1f

scriptIds[129].message("hidden", 0);//l1beats

scriptIds[130].message("hidden", 0);//l1bars

}

}

function lfo2Sel(x){

if (sinit == 0) iDinit();

if (x == 0){ // show dial, hide bpm

scriptIds[81].message( "hidden", 0);//l2f

scriptIds[132].message("hidden", 1);//l2beats

scriptIds[133].message("hidden", 1);//l2bars

} else { // hide dial, show bpm

scriptIds[81].message( "hidden", 1);//l2f

scriptIds[132].message("hidden", 0);//l2beats

scriptIds[133].message("hidden", 0);//l2bars

}

}

So the panel changes its display depending on the argument passed to lfo1Sel() or lfo2Sel(). When the displayed channel changes, or MIDI changes values, the JavaScript simply calls the same functions.
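The two functions differ only in the indices they touch, so the pattern could be sketched as one table-driven helper. This is a minimal sketch rather than the Husserl3 source: `LFO_DISPLAY` and `lfoVisibility()` are hypothetical names, the indices are copied from the listings above, and the function only computes which objects to hide.

```javascript
// Hypothetical table: which scriptIds indices belong to each LFO.
var LFO_DISPLAY = {
    1: { dial: 80, bpm: [129, 130] },
    2: { dial: 81, bpm: [132, 133] }
};

// Returns [index, hidden] pairs for one LFO.
// Mode 0 shows the dial and hides the bpm controls; any other mode does the reverse.
function lfoVisibility(lfo, mode) {
    var entry = LFO_DISPLAY[lfo];
    var showDial = (mode == 0);
    var out = [[entry.dial, showDial ? 0 : 1]];
    for (var i = 0; i < entry.bpm.length; i++) {
        out.push([entry.bpm[i], showDial ? 1 : 0]);
    }
    return out;
}
```

Inside Max, each returned pair would be applied with scriptIds[index].message("hidden", hidden), exactly as the original functions do.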

Resizing the Window

I've tried a number of ways to resize patches over the years, and this actually requires JavaScript, because not all the functionality is otherwise available in Max. The problem is that the window zooms in and out from the center of the display, so after changing the zoom, one has to reposition the presentation so its top-left corner is at the top left of the resized window. Also, one wishes to shrink or enlarge the presentation window for the user.

For this Husserl3 sends a 'scroll' message to JavaScript, which now performs all the resizing and rescrolling (previously the window was resized with commands to a thispatcher object, but as the scrolling needed to be done in JavaScript anyway, it's all now in JavaScript).

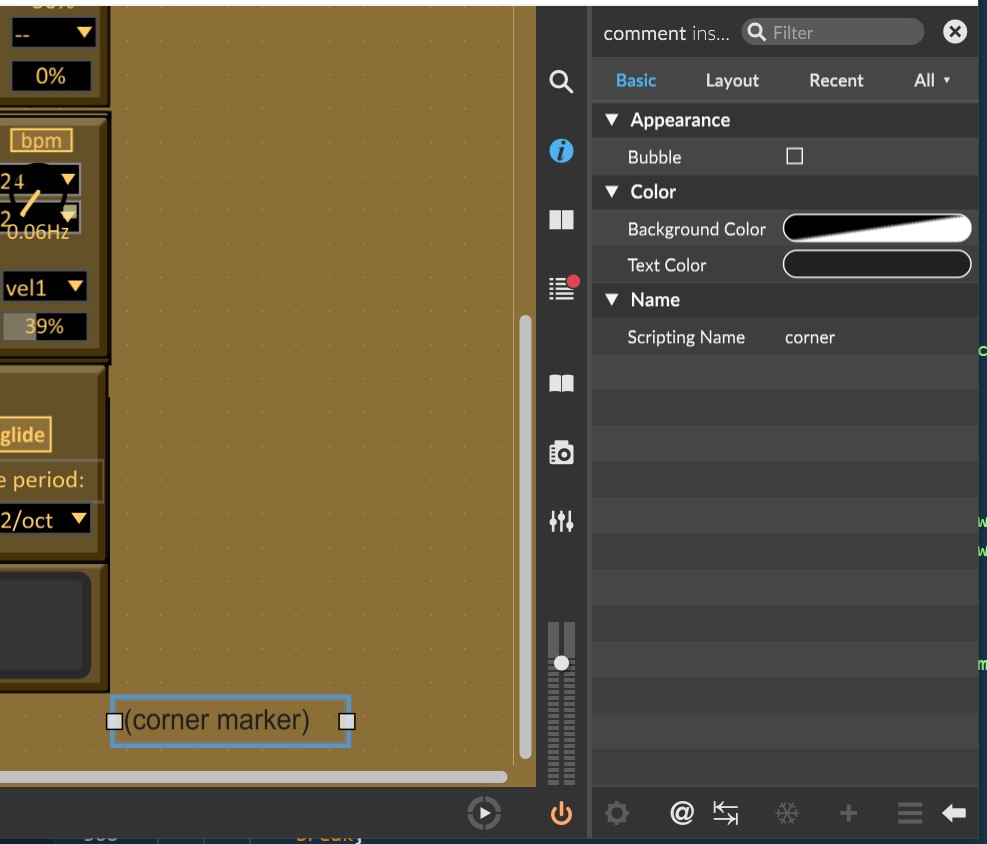

To ascertain the size of the window, I found it overwhelmingly best to make an invisible comment in the presentation, whose top-left corner sets the width and height of the presentation window.

This is vastly better than remeasuring the size of the window every time it changes size, because one only needs to move the comment. I named the comment 'corner,' and as this function is only called rarely, it just fetches the object reference to the 'corner' comment when it is called rather than caching it in an array:

function scroll(sized) {

var loc = new Array(4);

var locb = new Array(4);

var p = this.patcher;

p.message('zoomfactor', sized);

p.wind.scrollto(0,0);

loc = p.wind.location; //left, top, right, bottom

locb = p.getnamed('corner').getattr("presentation_rect");

p.wind.location = [ loc[0], loc[1],

loc[0] +locb[0] *sized, loc[1] +locb[1] *sized ];

}

Hover Tips in JavaScript

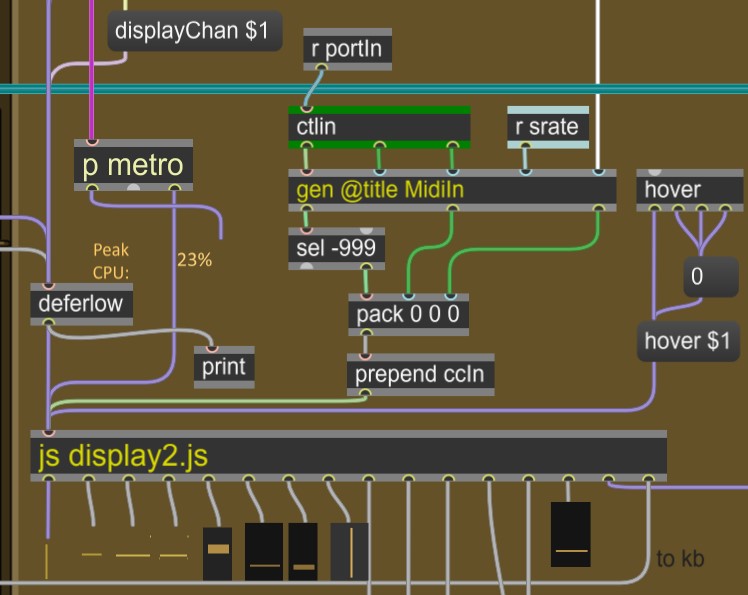

Max has two built-in functions for interactive help, both available as fields in each object's inspector window. However, both have issues unless one is designing an M4L device. The 'hints' window cannot be displayed in a standalone application, and is purely under the control of Ableton Live. The 'info' displayed when hovering over a device is not very controllable: its font and background color cannot be set, and the info overlays other controls when one is trying to use them. Fortunately, it's actually much easier to provide context-sensitive help via JavaScript and the hover object, at the right of the picture. The first outlet of the hover object passes straight into the JavaScript as an argument to hover(), and the other three outlets reset the hover text when the user is no longer hovering over a UI element, by setting the hover() argument to zero instead.

This sends a hover message to the js object, which contains descriptions for all the objects in strings. As described in the last tutorial, the names of all the UI objects are already in this JavaScript object, in order to create object references to them in an array of scripting IDs. So the additional JavaScript to create the hover text is very simple:

var scriptNames =[ "chan", "whl","e1a","e1d","e1s","e2a","e2d",

// (etc)...

"f1plot","o1plot","o2plot","l1plot","l2plot","msliders","msel"];

function iDinit(){

var p = this.patcher;

for(var i = 0; i < scriptNames.length; i++){

scriptIds[i] =p.getnamed(scriptNames[i]);

}

}

var helptext = [

"CHANNEL. Selects and displays the current...",

// (etc, until entry 198)...

"MONITOR MODE MENU. When VOICE mode is selected...."

];

function hover(x){

if (sinit == 0) iDinit();

var s = scriptNames.indexOf(x);

if (s == -1 || x == 0 || typeof x === 'undefined')

scriptIds[153].message("set", "HOVER TIPS.");

else

scriptIds[153].message("set", helptext[s]);

}

First the hover function checks if the script IDs have been initialized, and if not calls iDinit(). The object reference to the text window where the help tips are displayed is at index 153 in the ID list, so again as described in the last tutorial, this script uses that index directly to write to the text box for the tips. (I should note that the help text adds only one name to the global namespace, even though it contains 200 long text lines, because it's all in one array.) To find the text to display upon hovering over a UI element, the script calls JavaScript's Array.indexOf() method on the scriptNames array to locate the control index. The text from that index of the helptext[] array is then set in the textedit window. It's much easier to create the help all in one document than in separate inspector windows, although in the future, the script could copy the text into the info attribute of the display objects for an M4L hint window, if Ableton ever supports multicore instruments.
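The lookup itself can be tried outside Max. The following is a minimal sketch with shortened, hypothetical stand-ins for the real arrays (`names`, `tips`, and `tipFor()` are not from the Husserl3 source), showing how an unknown name, a zero, or a missing argument all fall back to the default text.

```javascript
// Hypothetical, shortened stand-ins for the real scriptNames and helptext arrays.
var names = ["chan", "whl", "e1a"];
var tips = ["tip for chan", "tip for whl", "tip for e1a"];
var DEFAULT_TIP = "HOVER TIPS.";

// Returns the tip for a scripting name, or the default text when the name
// is not in the list or has no matching tip entry.
function tipFor(name) {
    var s = names.indexOf(name);
    if (s === -1 || typeof tips[s] === "undefined") return DEFAULT_TIP;
    return tips[s];
}
```

Because indexOf() compares strictly, the number 0 sent by the hover object's other outlets never matches a name, so no separate zero test is needed in this version.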

Putting matrixctrl values in a buffer

The encoding of matrixctrl values is a little bizarre. Thankfully the values issued when the object is banged are all stored in pattrstorage, so with outputmode set to 1, one can routepass them into JavaScript and put them in a buffer. If all the buttons are off, pattrstorage reports "0 0 0"; if the first three buttons in the first column are on, it reports "0 0 1 0 1 1 0 2 1". There's a triplet (column, row, value) for each button that's on, and off buttons are skipped. A loop in JavaScript can easily turn the latter example into the array "[1, 1, 1]" for putting in a buffer via indirect indexing, shown in the example below.

There are two matrixctrl objects in Husserl3, one for muting voices 1~8, and one for muting voices 9~16. The following JavaScript function combines them both in one buffer, which is read by the gen 'audio3channels' object to determine which channels to mute:

function matrix1(){

var m = arrayfromargs(arguments);

var a = [0,0,0,0, 0,0,0,0];

if(m[2] !=0 ) // if not all off

for (i = 1; i < m.length; i +=3) a [m[i] ] =1;

layer.poke(2, 0, a);

}

function matrix2(){

var m = arrayfromargs(arguments);

var a = [0,0,0,0, 0,0,0,0];

if(m[2] !=0 ) // if not all off

for (i = 1; i < m.length; i +=3) a [m[i] ] =1;

layer.poke(2, 8, a);

}

The functions are in the script for the multi pattrstorage object.
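The triplet decoding can be checked on its own, without a buffer. This is a minimal sketch under the assumption that each triplet is (column, row, value) and that the row number is the voice index, as in the functions above; `decodeRows()` is a hypothetical name.

```javascript
// Converts a matrixctrl state list (column, row, value triplets) into a
// fixed-length array of 0/1 flags indexed by row.
function decodeRows(list, rowCount) {
    var flags = [];
    for (var r = 0; r < rowCount; r++) flags.push(0);
    for (var i = 0; i + 2 < list.length; i += 3) {
        var row = list[i + 1];
        var value = list[i + 2];
        // Ignore "off" triplets and any row outside the expected range.
        if (value != 0 && row >= 0 && row < rowCount) flags[row] = 1;
    }
    return flags;
}
```

Checking the value of every triplet makes the separate "all off" test unnecessary, since the list "0 0 0" simply sets no flags.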

JSUI

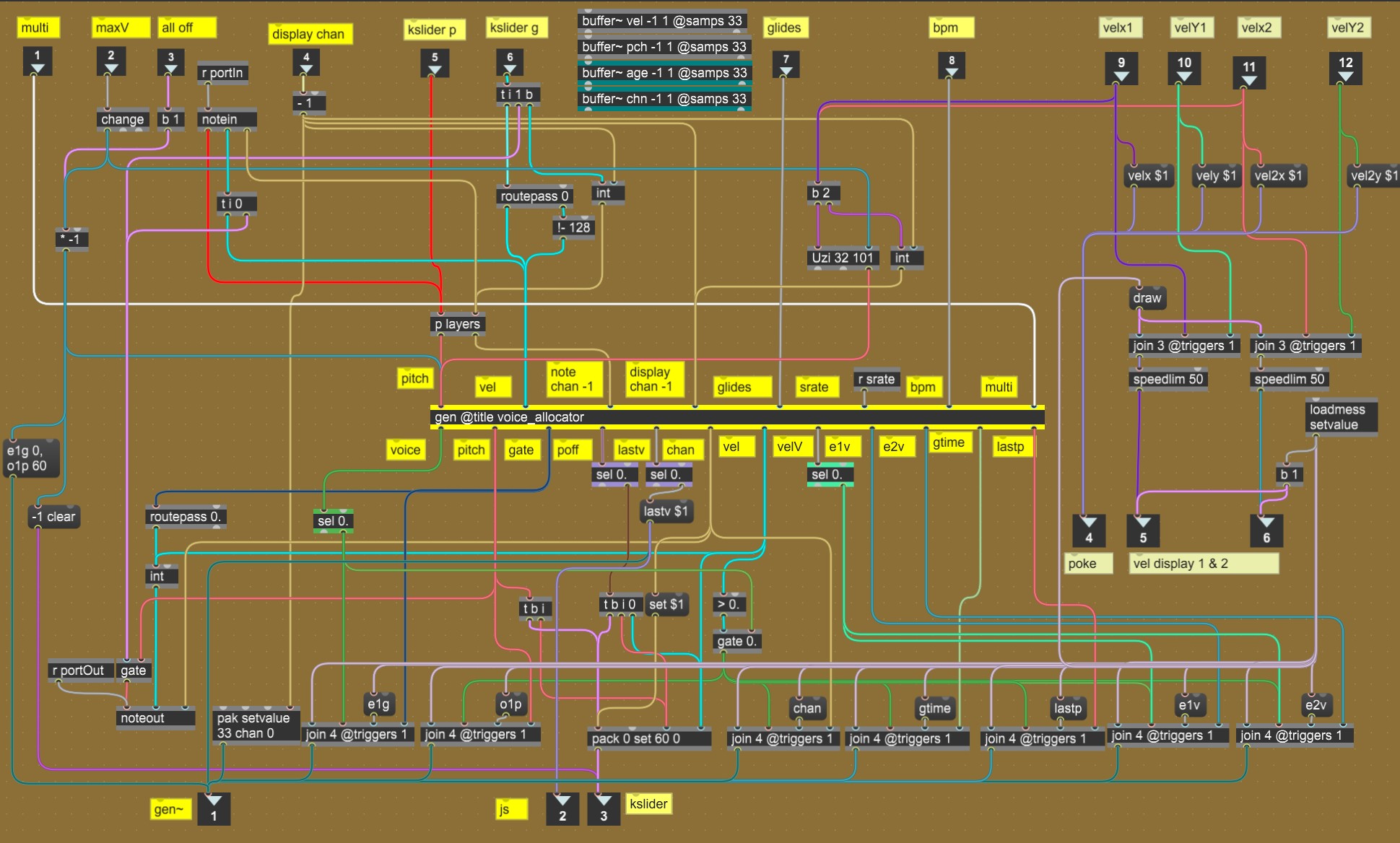

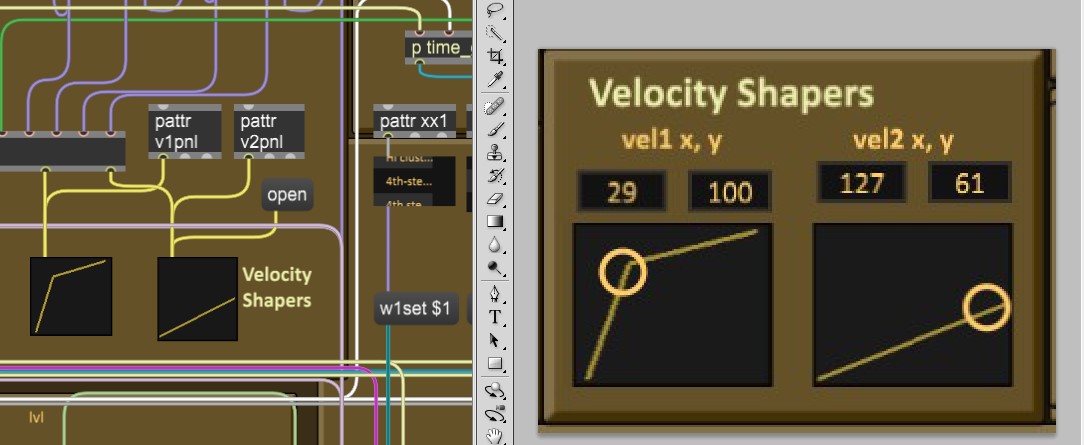

Husserl2 used the function object for displaying velocities, but it was extremely inefficient. Husserl changes all the playing voice volumes when the velocity shaper breakpoint is changed, so it required two function objects, one for the display and control, and one to process all the playing voices upon a change. Husserl3 instead performs velocity shaping inside the gen object for voice allocation, in a simple two lines of code:

calcBreakpoint(vel, x, y){

if (vel <= x) return vel * (y /x) *.007874;

else return (y + (vel -x) *(127-y) /(127-x)) *.007874;

}

When the value changes, an uzi iterates through all active voices in the voice allocator and sends their new velocities to the appropriate voices in gen~. The uzi input seemed best, because gen cannot create more than one value per input received, so otherwise the gen object would have needed an extra 32 outputs. The uzi is in the voice allocator subpatch, in the upper middle right area. The draw() message to jsui is generated whenever the pictctrl object over it changes, from the control logic on this subpatch's right side.

With Max function objects, I also was not happy with the tiny point necessary to click upon, and preferred the larger circle available in the pictctrl object. So a pictctrl object overlays a jsui object which draws the velocity shaping function with two lines. After initialization by a loadbang, the script is very simple:
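For experimenting with the breakpoint shape outside gen, the curve can be sketched in JavaScript. This is an assumed reading of the shape, not a port of the gen code: two straight segments through (0, 0), (x, y), and (127, 127), scaled to the range 0 to 1 by dividing by 127 (the gen code multiplies by .007874 instead). `shapeVelocity()` is a hypothetical name.

```javascript
// Two-segment velocity curve through (0, 0), (x, y) and (127, 127),
// scaled to 0..1. The guards avoid dividing by zero when the breakpoint
// sits on the left edge (x = 0) or the right edge (x = 127).
function shapeVelocity(vel, x, y) {
    var out;
    if (x > 0 && (vel <= x || x >= 127)) {
        out = vel * (y / x);                          // first segment
    } else {
        out = y + (vel - x) * (127 - y) / (127 - x);  // second segment
    }
    return out / 127;
}
```

With the breakpoint at (64, 100), a velocity of 64 maps to 100/127 and a velocity of 127 maps to 1.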

inlets = 1;

outlets = 1;

function bang(){

sketch.default2d();

sketch.gldisable('blend');//turns off alpha processing

clear();

refresh();

}

function clear(){

with (sketch) {

// set the clear color

glclearcolor(0.1, 0.1, 0.1, 1.000);

glclear(); // erase the background

refresh();

glend();

}

}

function draw(x, y){

with (sketch) {

// set how the polygons are rendered

clear();

glcolor(0.7, 0.6, 0.2, 1.000);

gllinewidth(1.5);

if(x !=0){

// move the drawing point

moveto(-.915, -.915, 0.);

/// draw the first line segment

lineto(fit(x), fit(y), 0.);

} else{

// move the drawing point

moveto(-.915, fit(y), 0.);

}

if (x <127)

// draw the second line segment

lineto(.915, .915, 0.);

refresh();

glend();

}

}

function fit(x){

return (x >64) ? x *.015 -.99 : x *.015 -.9;

}

I had to adjust the margins to display the line properly on boundary conditions, but otherwise it was exactly as anticipated. One quirk I found with the jsui object (and also with the chooser object) is that I could not send it messages directly from JavaScript using this.patcher.getnamed("scriptingName").message(); so I connected some pattr objects directly to their inputs and send messages to the pattr objects instead.

However, the JSUI object is very expensive on CPU, using 2% for almost half a second on every draw() operation. So for the waveform graphs, which can update dynamically in this design, I stuck with the plot~ object; but the velocity shapers only redraw on channel load and when the breakpoint is changed by program recall or by panel editing, and when the JSUI object is not drawing, it uses no CPU. So it was absolutely the best choice for the velocity shapers.

Displaying values from gen~

To display the current waveforms, envelope levels, LFO levels, etc., gen~ pokes the values from the most recently played voice for the currently displayed channel into a shared buffer~ on the falling edge of a 30Hz ramp. A metro sends bangs into the JavaScript at the same frequency for it to pick up and display the values via a bang() function. The nice thing about playing with buffers in JavaScript is that it supports reading an array of buffer values all at once. The 'dat' buffer shares the data with gen~, from which the bang() function fetches 18 values:

var dat = new Buffer("dat");

function bang(){

var a = new Array();

a = dat.peek(1, 1, 18);

outlet(0, a[0] ); // current x

outlet(1, a[1] ); // current y

outlet(2, a[2] ); // current l1 level

outlet(3, a[3] ); // current l2 level

outlet(4, a[4] ); // current l3 level

outlet(5, a[5] ); // current e2 level

outlet(6, a[6] ); // current e3 level

outlet(7, a[7] ); // current sah

//...

o1w = a[10]; //(see below)

o2w = a[11];

//....

JavaScript reads all 18 of the singleton values simultaneously and sends each one to the appropriate display object via outlets or via thispatcher/getnamed().message() calls.

Additionally, the poly~ object for the 16 channels sums the voice outputs for the 32 poly~ voice instances and sends the result to JavaScript for the current displayed channel. The same bang() function reads the values for all 16 channels from one array simultaneously again, and sends their values to a multislider:

function atodb(x){

return 20 * Math.log(x) * Math.LOG10E;

}

//... in bang() function:

var meter = dat.peek(1, 32, 16);

// put into live.gain~ for channel level display

outlet(15, meter[channel -1]);

// build array for multislider

for (i = 0; i <16; i++){

meter[i] = atodb(meter[i])+60;

}

// send to multislider

outlet(12, meter);

The multislider is not an audio object, and is only updated at 30Hz, so the multichannel volume display does not consume much CPU. Eventually I'll update the atodb() function to precalculate the atodb values and store them in a lookup array, to offload some CPU from the rather overloaded drawing functions.

Using the plot~ Object for waveforms
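One way such a lookup array might look is sketched below. It is only a sketch under stated assumptions: amplitudes are taken to lie between 0 and 1, the table resolution `TABLE_SIZE` is an arbitrary choice, and results are clamped to a -60 dB floor to match the +60 offset used for the multislider. `atodbFast()` and `dbTable` are hypothetical names.

```javascript
var TABLE_SIZE = 1024;   // hypothetical resolution
var FLOOR_DB = -60;      // matches the +60 offset in the meter code

// Precompute dB values for evenly spaced amplitudes between 0 and 1.
var dbTable = [];
for (var n = 0; n < TABLE_SIZE; n++) {
    var amp = n / (TABLE_SIZE - 1);
    var db = (amp > 0) ? 20 * Math.log(amp) * Math.LOG10E : FLOOR_DB;
    dbTable.push(Math.max(db, FLOOR_DB));
}

// Looks up the nearest precomputed value instead of calling Math.log().
function atodbFast(x) {
    var clipped = Math.min(Math.max(x, 0), 1);
    return dbTable[Math.round(clipped * (TABLE_SIZE - 1))];
}
```

Because the table is spaced linearly in amplitude, it is coarse at very low levels; for a 30Hz meter display that is probably acceptable, but a larger table or a logarithmic index would be needed for finer resolution near the floor.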

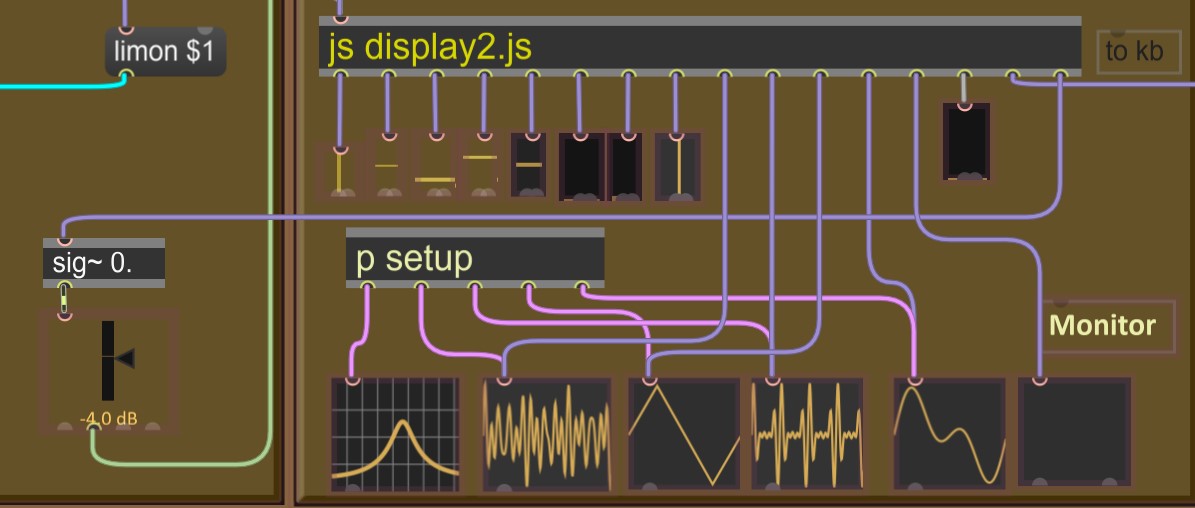

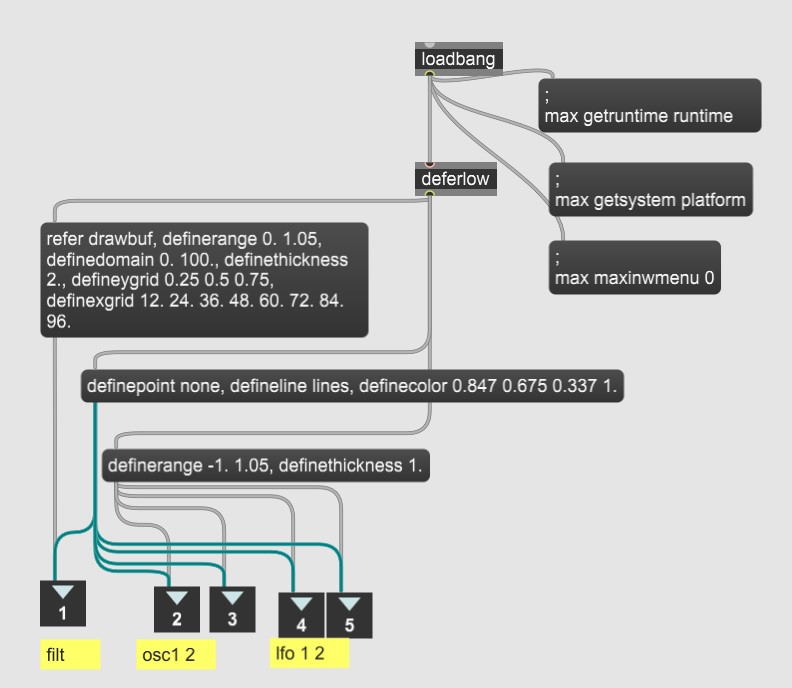

It takes a little message preparation to set up the plot~ object. If I were doing this afresh, I would have sent the messages from JavaScript, but I had already created a subpatch to prepare the plot~ objects.

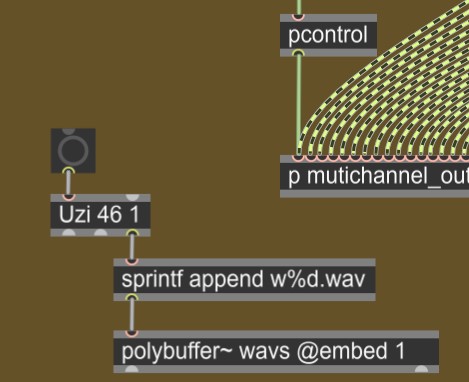

While filling the plot~ objects with oscillator and LFO waveforms, I ran into an old bug: plot~ objects can only display the first channel of a buffer~. So I split all the waves in my wavesets into 47 separate 128K single-channel files and load them into a polybuffer~, via the lower-left network below:

By setting the embed attribute on the polybuffer~, the waveform list is stored with the patch, so I only needed to run the uzi once. Then I access the waveforms from JavaScript depending on the waveset chosen in the chooser lists named o1set and o2set, which send their current values to the JavaScript as w1set and w2set messages:

//initialize in global namespace:

var o1s = 0, o1sx = 0, o1wx = 0, o2s = 0, o2sx = 0, o2wx = 0;

function w1set(x){

o1s = x +1;

o1sx = 1;

}

function w2set(x){

o2s = x +1;

o2sx = 1;

}

The bang() function described above then sends a message to the plot~ objects:

if(o1sx!=0 || o1wx!=o1w){

outlet(8, "refer",

"wavs." + o1s,

Math.max(o1w, 1.),

0,

wavelength

);

o1sx = 0;

o1wx = o1w;

}

if(o2sx!=0 || o2wx!=o2w){

outlet(9, "refer",

"wavs." + o2s,

Math.max(o2w, 1.),

0,

wavelength

);

o2sx = 0;

o2wx = o2w;

}

The 'o1w' and 'o2w' values are from the shared gen~ buffer described above for the indicators, so the waveform selection updates dynamically with any modulation. The values are checked against the prior values before a message is sent to the plot~ object, and the change flags in the global namespace are then zeroed. Because the plot~ objects do not receive messages setting them to display a different part of the buffer unless the oscillator waveform is being modulated, their CPU load is usually low; and CPU usage is still lower than for JSUI or scope~ objects even if the waveform is actively being modulated. The most significant impact on performance is the number of pixels in the display. Doubling the window size from 75% to 150% increases Husserl's CPU load on my 4GHz i7-6700K by 4%, which is 24% of one core... perhaps the reason why Ableton likes to make things so small...
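The "send only on change" logic is the same for both oscillators, so it could be factored into one helper. The following is a minimal sketch, not the Husserl3 code: `makePlotUpdater()` is a hypothetical name, and the buffer prefix and length are passed in as parameters rather than read from globals.

```javascript
// Hypothetical helper: remembers the last waveset and wave sent to one plot~
// and returns a "refer" message only when either has changed.
function makePlotUpdater(prefix, length) {
    var lastSet = null;
    var lastWave = null;
    return function (set, wave) {
        if (set === lastSet && wave === lastWave) return null; // nothing to redraw
        lastSet = set;
        lastWave = wave;
        return ["refer", prefix + set, Math.max(wave, 1), 0, length];
    };
}
```

In Max, the bang() function would call the updater for each oscillator and pass any non-null result to outlet(). Keeping the previous values inside the closure removes the need for separate flag and cache variables in the global namespace.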

Drawing the Filter Curve

Drawing the filter curve was probably the most complex task to perform in all of Husserl3's design. When I started on this design in 2010, I predrew an array of filter curves for different LP/BP/HP mixes and different resonance settings. I drew 100 points for each curve using a function to calculate the output level of a filter at different frequencies (still in my SynthCore library) for 101 filter-type mixes and 100 resonance points. I put the results in 100 channels, each containing 101 curves. That made quite a large file, so when I built the 5D filter, which adds another two 100-point dimensions, it seemed best to modify the original filter curves rather than draw all of them.

In JavaScript, a routine in bang() fetches the current five points after filter modulations from gen~ as described above, reads the 100 points of the appropriate filter curve from the data buffer, adjusts its slope for filter type and saturation, then writes the results to another 100-point buffer for a plot~ object to display.

var fc1 = a[12];

var fp1 = a[13];

var fq1 = a[14];

var ft1 = a[15] * -1;

var fs1 = a[16];

var x = Math.floor(fq1 * 100);

var y = (Math.floor(ft1 *50) + 50) *256

+ 124 - Math.floor(fc1);

var v = new Array();

v = svfgraph.peek(x, y +20, 100);

var z = (fs1 + 1) * .25

for (i=0; i<100; i++){

if(fp1 < 0){

fgraph[i] = (v[i] * (1 + fp1) + Math.abs(fp1)) * .75 ;

} else {

fgraph[i] = (v[i] * v[i] * fp1 + v[i] * (1 -fp1)) * .75;

}

fgraph[i] = Math.min(fgraph[i] + z, 1);

drawbuf.poke(1, i, fgraph[i] * .7);

}

Display Update for Channel Changes

When the current channel is changed, the JavaScript updates all the panel controls from a buffer. This could be a simple task, but as I had already moved as many of the control parameter scaling factors as possible out of gen into the main patch, I also had to reverse-scale everything from the shared buffer~ data for each channel back to the value ranges for the panel controls; and that involved bit-unpacking of some control values. So it turned into some very long JavaScript, with additional quirks to update panel controls with multiple modes or combined graphical/textual display. The patch uses the 'outputmode 1' of the pattrstorage objects to 'dirty' the preset if it has been changed, so it turns the outputmode off and on again, before and after the display update.

function displayChan(chan){

if (sinit == 0) iDinit();

if(channel == chan) return;

channel = chan;

scriptIds[151].message("outputmode", 0);

scriptIds[152].message("outputmode", 0);

chanParams = programs.peek(chan, Multi, 160);

// fetch and alter values from buffer for display

chanParams[1] *= 100; //whl

chanParams[2] = chanParams[135]; //e1a

chanParams[3] = chanParams[136]; //e1d

chanParams[4] = chanParams[4] *100; //e1s

//....

chanParams[118] *= 100; //l1w

chanParams[119] *= 100; //l2w

// chanParams 120~135 don't need scaling...

// set all the panel objects at once:

for (i = 1; i < 135; i++){

scriptIds[i].message("set", chanParams[i] );

}

// wiggle cases:

scriptIds[80].message(chanParams[144]); //l1f

scriptIds[81].message(chanParams[145]); //l2f

scriptIds[82].message(chanParams[146]); //l3f

scriptIds[151].message("outputmode", 1);

scriptIds[152].message("outputmode", 1);

}
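The off/on bracketing around the update could also be wrapped in a small helper, so that outputmode is restored even if the update code throws an error partway through. This is a minimal sketch; `withOutputModeOff()` is a hypothetical name, and it assumes only that each storage object has a message() method, as the objects returned by getnamed() do.

```javascript
// Hypothetical wrapper: switches outputmode off on each storage object,
// runs the update, and always switches outputmode back on afterwards.
function withOutputModeOff(storages, update) {
    var i;
    for (i = 0; i < storages.length; i++) {
        storages[i].message("outputmode", 0);
    }
    try {
        update();
    } finally {
        for (i = 0; i < storages.length; i++) {
            storages[i].message("outputmode", 1);
        }
    }
}
```

In displayChan(), the call would be withOutputModeOff([scriptIds[151], scriptIds[152]], function () { ... }) with the panel updates inside the function body.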

Updating channel display from MIDI

An additional wrinkle of making a multiphonic display is that MIDI does not always update the display: it could be changing other channels not currently displayed. As explained in tutorial 5, I use one set of panel controls and update them for the current channel, instead of making 16 panels with >200 display objects each, which also keeps the overall file size to <1.5MB instead of 30MB and makes loading the data much faster.

Tutorial 5 mentioned that 128-entry selector functions in gen scale the control parameters into the 128-value range of CC values. What I neglected to anticipate was that MIDI could ALSO update the display, requiring yet another long routine in JavaScript, because the scaling of parameters for gen~, for MIDI, and for the display are all different. Fortunately JavaScript supports switch statements, and the ranges don't need to be clipped because the display objects clip out-of-range values automatically. So this last twiddle was much easier, and being mostly copy/paste, only took a day to write. It was one hell of a lot easier than trying to do the same thing with Max objects. Eventually, before I add the 16 sequencer channels, I will update this function to use numeric indices, as that is more efficient than string parsing; but as I've met the performance goals for Husserl3, this is what it looks like for now, which is at least more human readable:

function ccIn(val, cc, chan){

if (sinit == 0) iDinit();

if (chan != channel) return;

var x, out;

switch(scriptNames[cc]){

case "f1c" : case "f1cm" : case "w1wam":

case "w1wbm": case "w1xp" : case "w1xpm":

case "w1d" : case "w2fm" : case "f2cm" :

case "w2wam": case "w2wbm": case "w2xp" :

case "w2xpm": case "w2d" : case "w1dm" :

case "w2dm" : case "w2fmm":

out = val -63;

break;

case "f1t" : case "f1pm": case "f1qm" :

case "f1sm" : case "f1tm": case "ringm":

case "ampm" : case "w2mixm": case "phasem":

case "e1mod": case "e2mod": case "e3mod":

out = val *2 -100;

break;

//...

case "w1sync": // decodes two bit fields

if(val>64){

out = 1;

scriptIds[130].message("set", val -64);

} else {

out = 0;

scriptIds[130].message("set", val);

}

break;

//...

case "l1snc":// decodes three bit fields

if(val>64){

out = 1;

x =val -64;

} else {

out = 0;

x =val;

}

if(x >32) {

scriptIds[129].message("set", x -32);

scriptIds[128].message("set", 1);

} else {

scriptIds[129].message("set", x);

scriptIds[128].message("set", 0);

}

break;

//...

}

scriptIds[cc].message("set", out);

// need to propagate changes for envelope bars:

switch(scriptNames[cc]){

case "e1a":case "e1d":case "e1s":case "e1r":

case "e2a":case "e2d":case "e2s":case "e2r":

case "e3a":case "e3d":case "e3s": case "e3r":

scriptIds[cc].message(out);

break;

default:

}

}

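The planned move to numeric indices might look something like the sketch below. It is an illustration under stated assumptions, not the future Husserl3 code: the rule names, the shortened `ccNames` list, and the index positions are hypothetical, while the two formulas and the three control names are taken from the switch statement above.

```javascript
// Hypothetical scaling rules, named after the two formulas shown above.
var SCALERS = {
    bipolar63: function (val) { return val - 63; },
    bipolar100: function (val) { return val * 2 - 100; }
};

// Hypothetical, shortened name list and rule assignment.
var ccNames = ["f1c", "f1t", "w1d"];
var ruleByName = { f1c: "bipolar63", w1d: "bipolar63", f1t: "bipolar100" };

// Build the per-index table once, so ccToDisplay() does no string comparison.
var scalerByIndex = [];
for (var i = 0; i < ccNames.length; i++) {
    scalerByIndex.push(SCALERS[ruleByName[ccNames[i]]] || null);
}

// Returns the display value for controller index cc, or undefined if no rule exists.
function ccToDisplay(cc, val) {
    var scale = scalerByIndex[cc];
    return scale ? scale(val) : undefined;
}
```

The names are still used once, at load time, so the table stays readable, but each incoming CC then costs only one array lookup and one function call.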

The most difficult part was storing the 'layers' buffer, because it caused terrible race conditions until I cached the object reference to a pattr object called [multins], then sent the pattr object messages before preset store and after preset recall. I have to say caching the object reference (performed by minit(), in the variable [multinsIsd]) was actually crucial to getting the design working, because I got long and otherwise inexplicable hangs after clicking the "recall" or "restore" buttons more than once within a few seconds. I didn't even think it was so important myself, lol, but thankfully I'd been taught what to do. See tutorial 8 for a detailed explanation.

And that concludes the UI portion of the JavaScript tutorial. Next week I hope to share on managing pattrstorage with JavaScript.