Jitter noob help

Jitter noob question:

I have a powerful computer and a discrete graphics card, and STILL, whenever I work with 1920×1080 videos, the UI objects get laggy and jittery, dialog boxes take 3 minutes to open, etc. In short, it gets annoying to work in Max.

Is this normal?

If it is, how do you get anything done in Jitter?

If it isn't, what could my problem be?

P.S.

I do use textures and not matrices, and Max does use the external card; I can see the GPU usage rising a little when playing video.

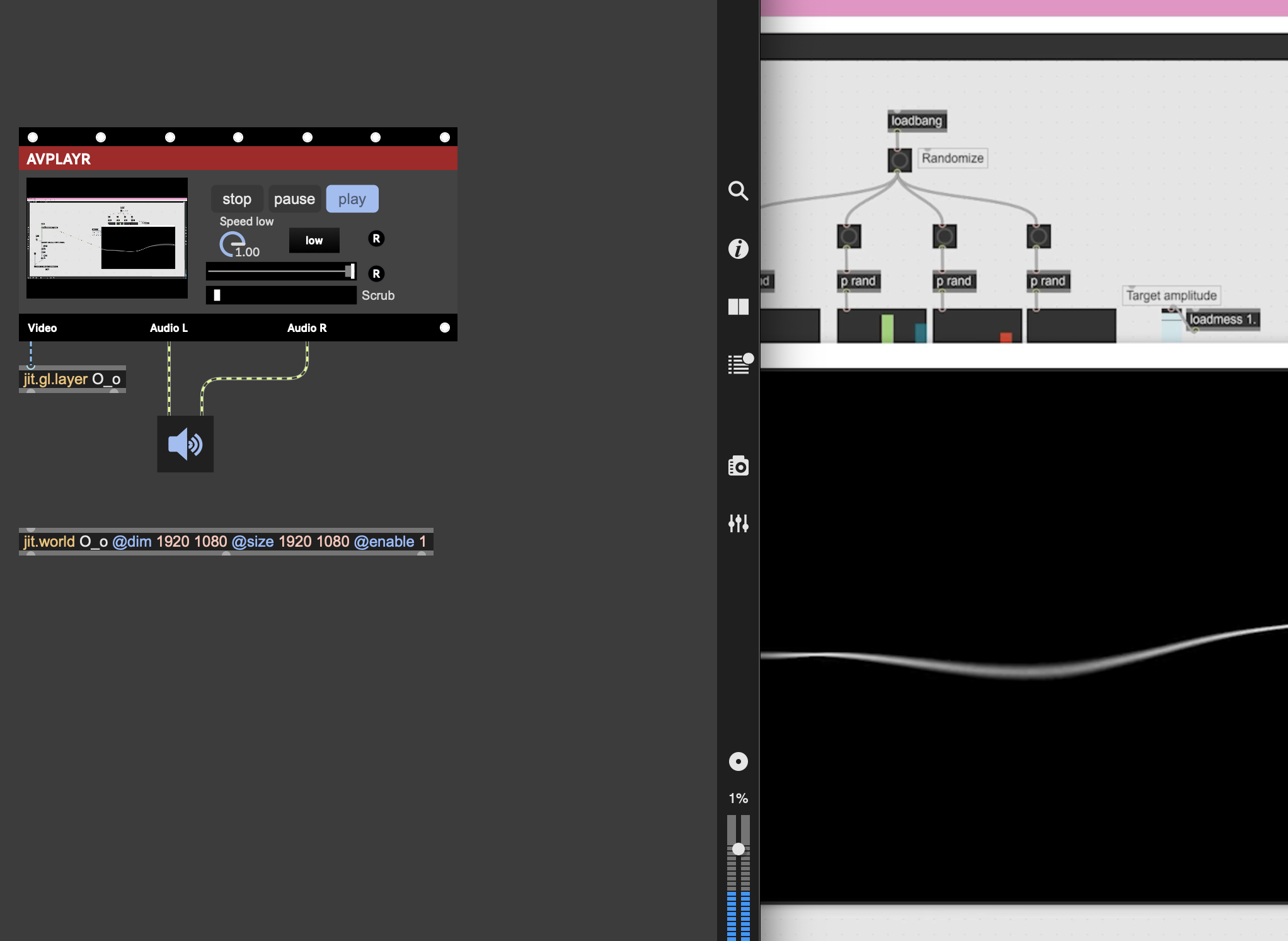

It's difficult to answer this question without the exact patch and without knowing the computer's exact specs... but when I try a basic setup with Vizzie's 'AVPLAYR' and a 1920x1080 video, it seems to work fine with just that much:

Perhaps you could take a look at Vizzie's 'AVPLAYR' for a quick guide, or see these articles:

...keep in mind the difference between your video dimensions and any geometry dimensions you might be using (for example, jit.gl.layer/jit.gl.videoplane do not require 1920x1080 for their 'dim' setting; those are the dimensions of the GL geometry, which describe the width and height of the grid of polygons used to construct the videoplane shape, so a lower 'dim' is fine there)...

Post back here if you still have trouble; others might have more advice given more detail (I'm always curious about this kind of thing, too).

Hope this can help get you a bit further 🍻

You should get better performance than that. Maybe your Max installation needs refreshing?

Thank you for the links; I hadn't come across those.

The thing you said about geometry, I did not know that. So you can do geometry in small dimensions and then resize it later when recording? Do you put the small dimensions on jit.world or on the objects themselves?

My setup is:

AMD Radeon RX 9070 XT, AMD Ryzen 7 9800X3D (8-core)

> so you can do geometry in small dimension and then you resize it later when recording?

No, sorry if I'm making this confusing. To explain further: if you want to see it displayed in jit.world, you can set jit.world to the highest-quality 'size' you want to view it in.

In my screenshot, I left both the @size (which is the size of the display window) and the @dim (which is the dimension of the output texture) at the preferred size of 1920x1080... and now that I look closer, I see that I didn't need to set the @dim attribute for 'jit.world'; it will automatically adapt to the display-window size:

But in my 1st screenshot (above this post), I'm using a 'jit.gl.layer' object, which is a geometry object, to display the video within 'jit.world'. I've left that 'jit.gl.layer' object at its default @dim setting of 20x20, because these are the 'geometry' dimensions, not the output texture's pixel-by-pixel dimensions...

'jit.gl.layer' and 'jit.gl.videoplane' are geometry objects which are simply a 'flat plane' (like a cube, but only in 2 dimensions: a flat rectangular or square plane). For all these types of geometry objects, which eventually get drawn by jit.world, the @dim attribute is not a pixel-by-pixel dimension but rather a dimension that somehow defines the 'polygon count' of the geometry. (Exactly how those objects' @dim attribute translates into a certain number of polygons is beyond my ability to explain, but I learned this recently from some questions that were answered on the Max Discord... apparently, this is a common misconception.)
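To put rough numbers on the 'polygon count' idea, here is a small Python sketch. It assumes (this is my assumption, not something confirmed in this thread) that each @dim value is the number of vertices along one axis of the plane's grid, and that each grid cell is drawn as two triangles; the way jit.gl.layer / jit.gl.videoplane actually build their mesh may differ in detail. The function name `grid_counts` is just for illustration.

```python
def grid_counts(dim_x: int, dim_y: int) -> dict:
    """Rough vertex/quad/triangle counts for a flat plane built as a grid.

    Assumptions (hypothetical, for illustration only):
      - dim_x and dim_y are the number of vertices along each axis
      - every grid cell (quad) is drawn as two triangles
    """
    if dim_x < 2 or dim_y < 2:
        raise ValueError("a grid needs at least 2 vertices per axis")
    quads = (dim_x - 1) * (dim_y - 1)  # cells between neighbouring vertices
    return {"vertices": dim_x * dim_y, "quads": quads, "triangles": 2 * quads}


# Compare the default-style 20x20 plane with a plane whose @dim was set to the video size
for dim in [(20, 20), (1920, 1080)]:
    print(dim, grid_counts(*dim))
```

Under these assumptions, a 20x20 plane is 722 triangles, while setting @dim to 1920x1080 asks the GPU to draw about 4.1 million triangles for the same flat rectangle, without making the video any sharper, since the texture is sampled per pixel either way.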

So the only objects whose dimensions you need to set to exactly match your high-quality movie are the ones that work with the input and output textures themselves (in most cases, that would likely be just 'jit.movie' at the input and 'jit.world' at the output).
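For contrast, texture dimensions (on objects like jit.movie and jit.world) really are pixels, so they scale the per-frame work directly. A rough Python sketch of one frame's size, assuming 4 planes (e.g. RGBA) at 1 byte per plane; that texture format is an assumption for illustration, not something stated in this thread, and `texture_bytes` is a hypothetical helper name.

```python
def texture_bytes(width: int, height: int, planes: int = 4, bytes_per_plane: int = 1) -> int:
    """Bytes in one frame of a width x height texture.

    Assumption: 4 planes (e.g. RGBA) at 1 byte (8 bits) per plane.
    """
    return width * height * planes * bytes_per_plane


full_hd = texture_bytes(1920, 1080)  # 8,294,400 bytes, roughly 7.9 MiB per frame
half_res = texture_bytes(960, 540)   # half of each dimension, a quarter of the pixels
print(f"1920x1080: {full_hd:,} bytes per frame")
print(f"960x540:   {half_res:,} bytes per frame")
print(f"ratio: {full_hd / half_res:.0f}x")
```

Halving both texture dimensions quarters the data per frame, which is why these are the dimensions worth matching to your movie, while the geometry @dim can stay small.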

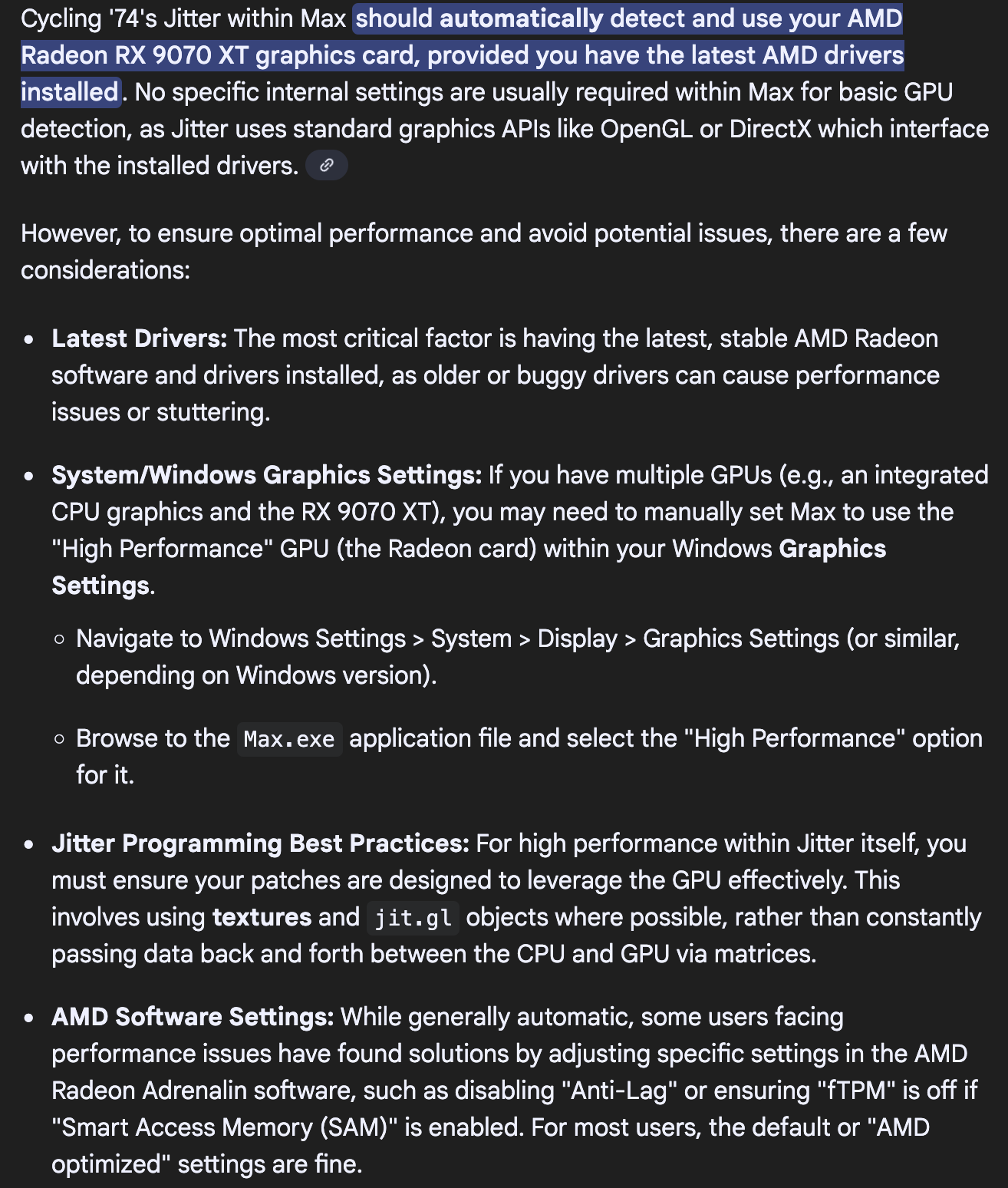

Also, while I'm not familiar with your particular setup, sometimes you may have an 'integrated graphics chip' which can show up as a GPU, and Max might have to be set manually to use the "AMD Radeon RX 9070 XT"... When I did an AI search for your chips, I found the following information:

- First, your main 8-core chip does have an integrated graphics chip👇

- Second, this information: see where it specifically says how to manually link 'System->Display->Graphics Settings' to 'Max.exe'; that might help👇

You might not need to bother with the above about your graphics chip; it sounds like Max will automatically find it, but...

Hope all of this information together can help you figure out where the bottleneck is.

Others might have better explanations about 'polygon count' vs. 'pixel-by-pixel' dimensions for different parts of the chain, or other advice as to what might be going on in your case; hoping they'll chime in here as well.

Max won't automatically detect and use a particular graphics card on Windows. It is up to the user to manually set which card they want Max to use, as described here:

I have read the article, and it is mostly for laptop users; I am on a Windows desktop computer. I have enabled the high-performance option in Windows, I have manually and explicitly assigned the discrete graphics card to Max, I have disabled all kinds of "enhancers" in the AMD graphics card settings, and it is still the same.

Here is a private video of the help file of the new jit.fx.vhs object. When I move the mouse, even without clicking, the position line of the playlist object starts lagging, and when I change the wiggle attribute, it lags. The video itself does not lag at all.

I bought this expensive video card just to fiddle around with Jitter, and I would really like to know why this is happening. Should I contact the people at Cycling '74, or at AMD?

You should definitely reach out to support; at the very least they can get this situation logged. But one of the steps they may suggest is a preferences reset or a clean install, so it might make sense to try those two steps first, as this is definitely not expected behavior.

This also sounds more like a UI issue than a Jitter graphics issue, but it's hard to say from the video.